Visual positioning method for large-size materials, and handling robot

A visual positioning technology for large-size materials, applied in instruments, image analysis, image enhancement and related fields. It addresses the high labor cost, inconvenience, and time-consuming, laborious nature of manual handling, with the effects of ensuring stress and eliminating potential safety hazards.


Examples

Embodiment 1

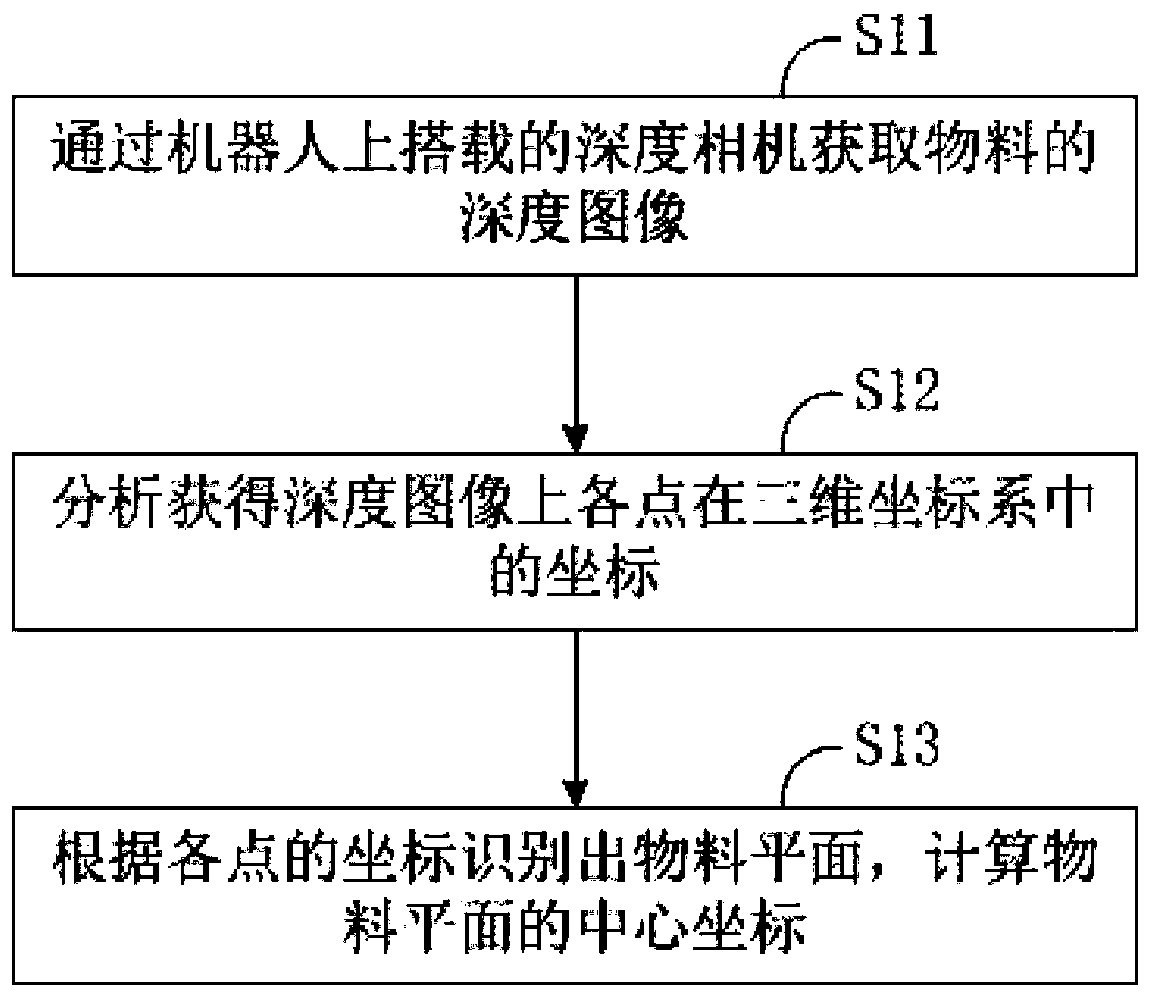

[0055] This embodiment provides a visual positioning method for large-size materials. The method is used to locate materials before they are handled, and is especially suitable for materials with rectangular cross-sections.

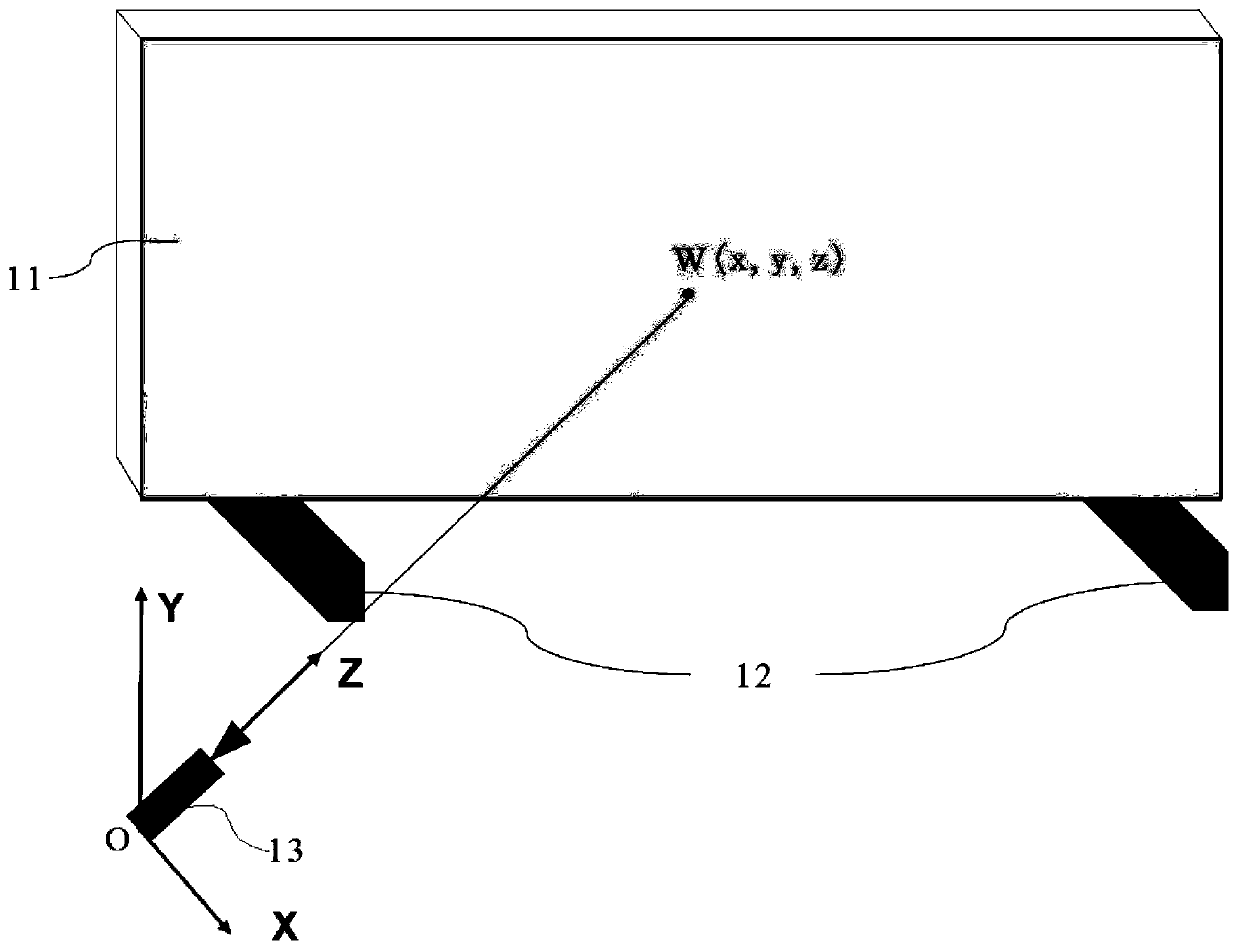

[0056] The visual positioning method is performed by a handling robot for large-size materials. The handling robot includes a manipulator and a moving component: the manipulator is used to grab the material, and the moving component is used to move the robot to the place where the material is located. The handling robot further includes a depth camera and a controller. The depth camera is used to acquire a depth image of the material to be transported, and the height of the camera relative to the ground is greater than the height of the wood. The controller is used to locate the material according to the depth image acquired by the depth camera, to control the manipulator to grab the material according to the positioning, and control the...

Embodiment 2

[0074] This embodiment builds on the above embodiments. When the handling robot transports the materials to the destination, it needs to place them according to the positions of the wooden squares on site. The placed materials and the wooden squares are therefore identified and positioned to ensure that the new materials are placed properly.

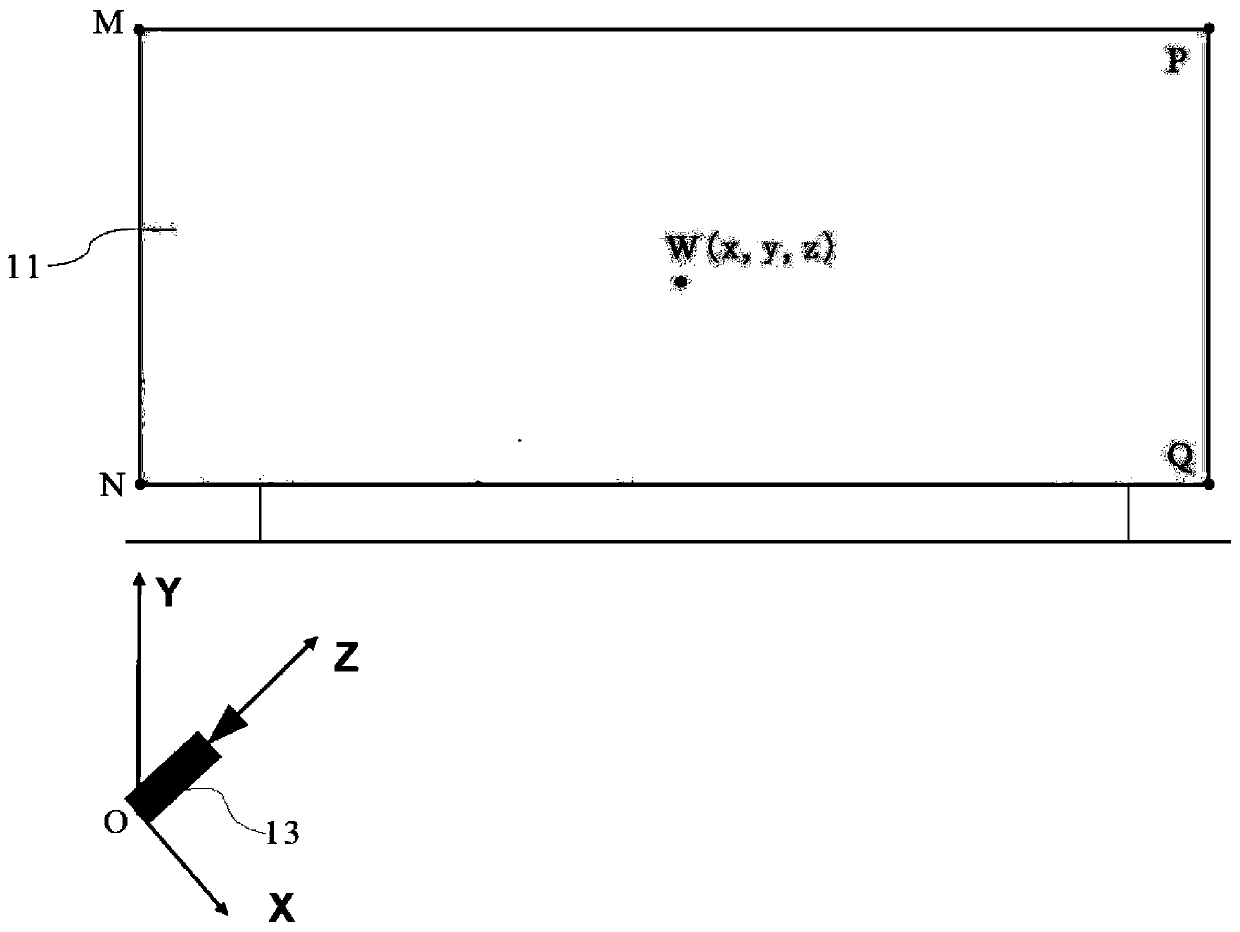

[0075] When placing materials, it is necessary to judge, with reference to the already placed materials or the wall surface, whether the wooden squares are placed correctly, and then to stack the newly moved materials according to the placement requirements. The materials that have already been placed are located using the same method as in the above embodiment. The wooden squares are identified and located as follows:

[0076] After the depth image is analyzed to obtain the coordinates of each of its points in the three-dimensional coordinate system, the material plane 11 or the wall surface and the contour lines of at least two wooden squares 12 are iden...
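
The excerpt does not say how the three-dimensional coordinates are derived from the depth image. One common approach, shown here only as a minimal sketch, is pinhole back-projection into the camera frame. The function name `depth_to_points` and the intrinsic parameters `fx`, `fy`, `cx`, `cy` are assumptions for illustration and do not come from the patent text.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]


def depth_to_points(
    depth: List[List[float]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> List[Point3D]:
    """Back-project a depth image into camera-frame 3D points.

    Assumes a pinhole camera model: depth[v][u] is the distance along the
    optical axis at pixel column u and row v, (fx, fy) are focal lengths in
    pixels, and (cx, cy) is the principal point. Pixels with non-positive
    depth are treated as invalid and skipped.
    """
    points: List[Point3D] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0.0:
                continue  # no valid depth reading at this pixel
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Tiny 2x2 depth image with one invalid pixel (hypothetical values).
example_depth = [
    [2.0, 2.0],
    [0.0, 1.0],
]
example_points = depth_to_points(example_depth, fx=2.0, fy=2.0, cx=0.5, cy=0.5)
```

The resulting points are expressed in the camera frame. Identifying a plane such as the material plane 11 or the contour lines of the wooden squares 12 would be a separate step operating on these points, and is not covered by this sketch.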
