A Telephone Speech Emotion Analysis and Recognition Method Based on LSTM and SAE

An emotion analysis and recognition method applied in speech analysis, character and pattern recognition, instruments, and related fields. It addresses problems such as factors that affect or disturb the emotion analysis results and vanishing gradients, and achieves accurate experimental results with high efficiency.

Embodiment Construction

[0052] The present invention will be further described in detail below in conjunction with the embodiments and the accompanying drawings, but the embodiments of the present invention are not limited thereto.

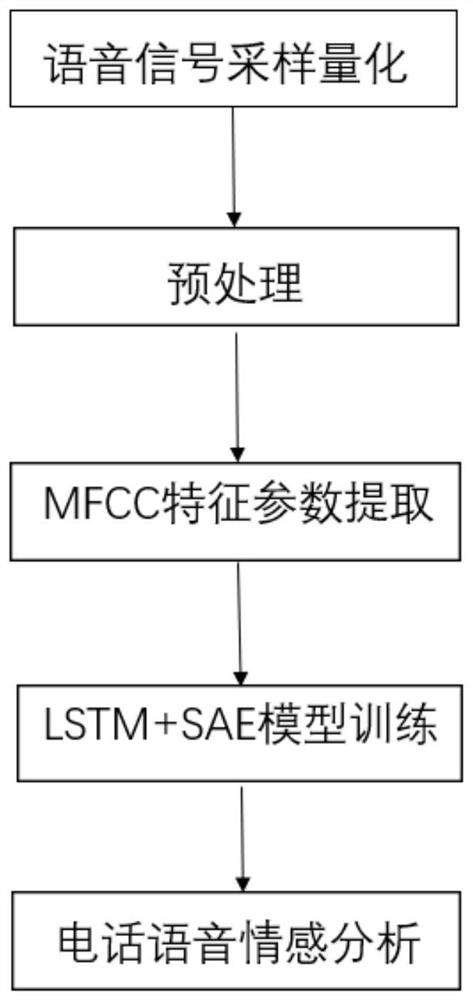

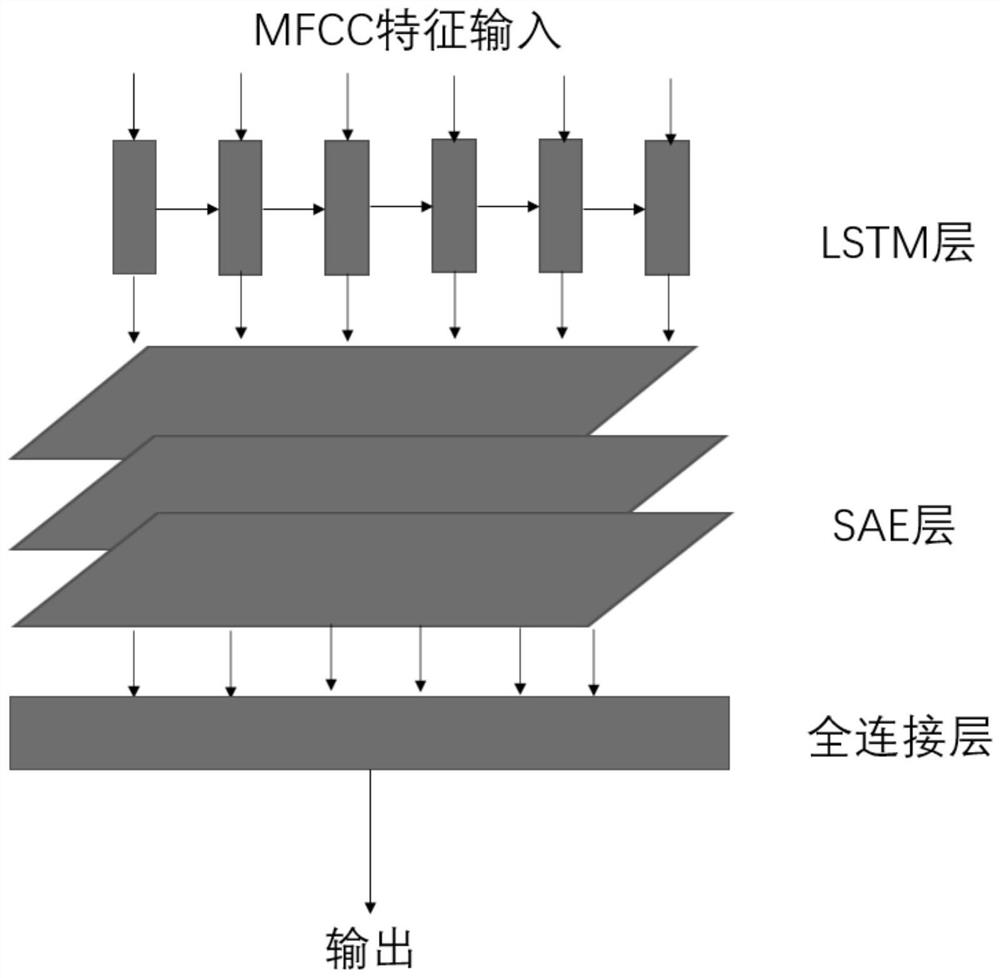

[0053] As shown in Figures 1 and 2, a telephone speech emotion analysis and recognition method based on LSTM and SAE comprises the following steps:

[0054] Step 1: sampling and quantization of the voice information.

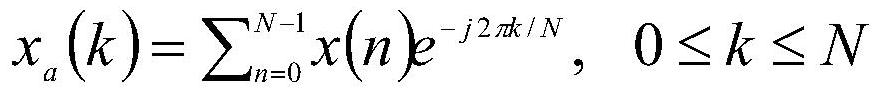

[0055] First, note that analyzing and processing a voice signal is essentially discretizing and digitally processing the original analog signal. The analog signal is therefore first converted into a digital voice signal by analog-to-digital conversion. Sampling is performed at a fixed frequency; that is, the value of the analog signal is measured at regular short intervals. To avoid distorting the sound, the sampling frequency is set to around 40 kHz, which satisfies the Nyquist sampling...
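The sampling and quantization described in Step 1 can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the patent's implementation: only the ~40 kHz sampling rate comes from the text, while the 1 kHz test tone, the 16-bit uniform quantizer, and the helper names `sample`, `quantize` and `satisfies_nyquist` are hypothetical. The Nyquist check uses the usual form f_s ≥ 2·f_max, so a 40 kHz rate can represent components up to 20 kHz.

```python
import math

def sample(analog, fs_hz, duration_s):
    """Sampling: measure the analog value every 1/fs_hz seconds."""
    n_samples = int(round(fs_hz * duration_s))
    return [analog(n / fs_hz) for n in range(n_samples)]

def quantize(samples, n_bits=16, full_scale=1.0):
    """Quantization (assumed uniform, 16-bit by default): map each sample in
    [-full_scale, full_scale] to a signed n_bits integer code."""
    max_code = 2 ** (n_bits - 1) - 1      # e.g. 32767 for 16 bits
    min_code = -(2 ** (n_bits - 1))       # e.g. -32768 for 16 bits
    codes = []
    for x in samples:
        code = round(x / full_scale * max_code)            # nearest integer level
        codes.append(max(min_code, min(max_code, code)))    # clip out-of-range values
    return codes

def satisfies_nyquist(fs_hz, f_max_hz):
    """Nyquist criterion: sampling rate at least twice the highest frequency present."""
    return fs_hz >= 2 * f_max_hz

if __name__ == "__main__":
    FS = 40_000        # sampling frequency taken from the text (~40 kHz)
    TONE_HZ = 1_000    # hypothetical test tone standing in for the analog voice signal
    tone = lambda t: 0.5 * math.sin(2 * math.pi * TONE_HZ * t)
    codes = quantize(sample(tone, FS, duration_s=0.01))
    print(len(codes), satisfies_nyquist(FS, 20_000))
```

Running the script prints `400 True`: 10 ms sampled at 40 kHz yields 400 samples, and 40 kHz is twice 20 kHz, the nominal upper limit of human hearing.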
