Multi-language model compression method and device based on knowledge distillation

A multilingual model compression technique, applied in the field of machine learning, addresses problems such as complex model structure and an excessive number of model parameters, and achieves fewer model parameters, a simplified structure, and reduced time consumption.


Embodiment Construction

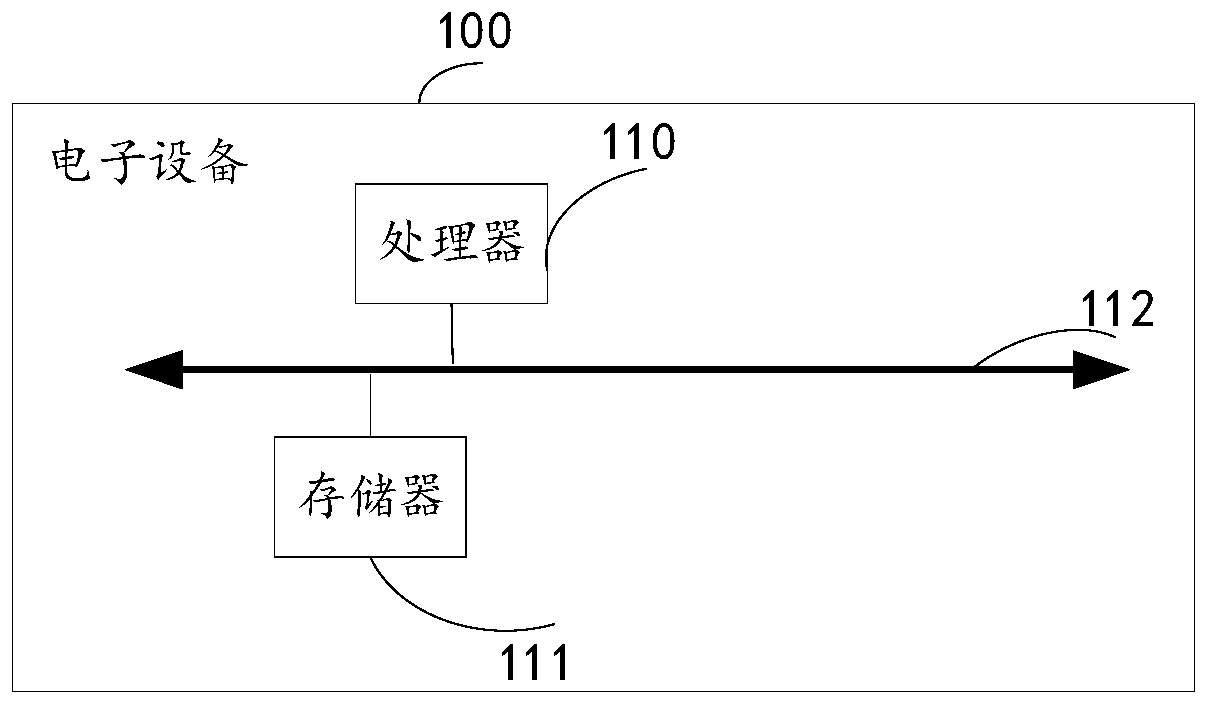

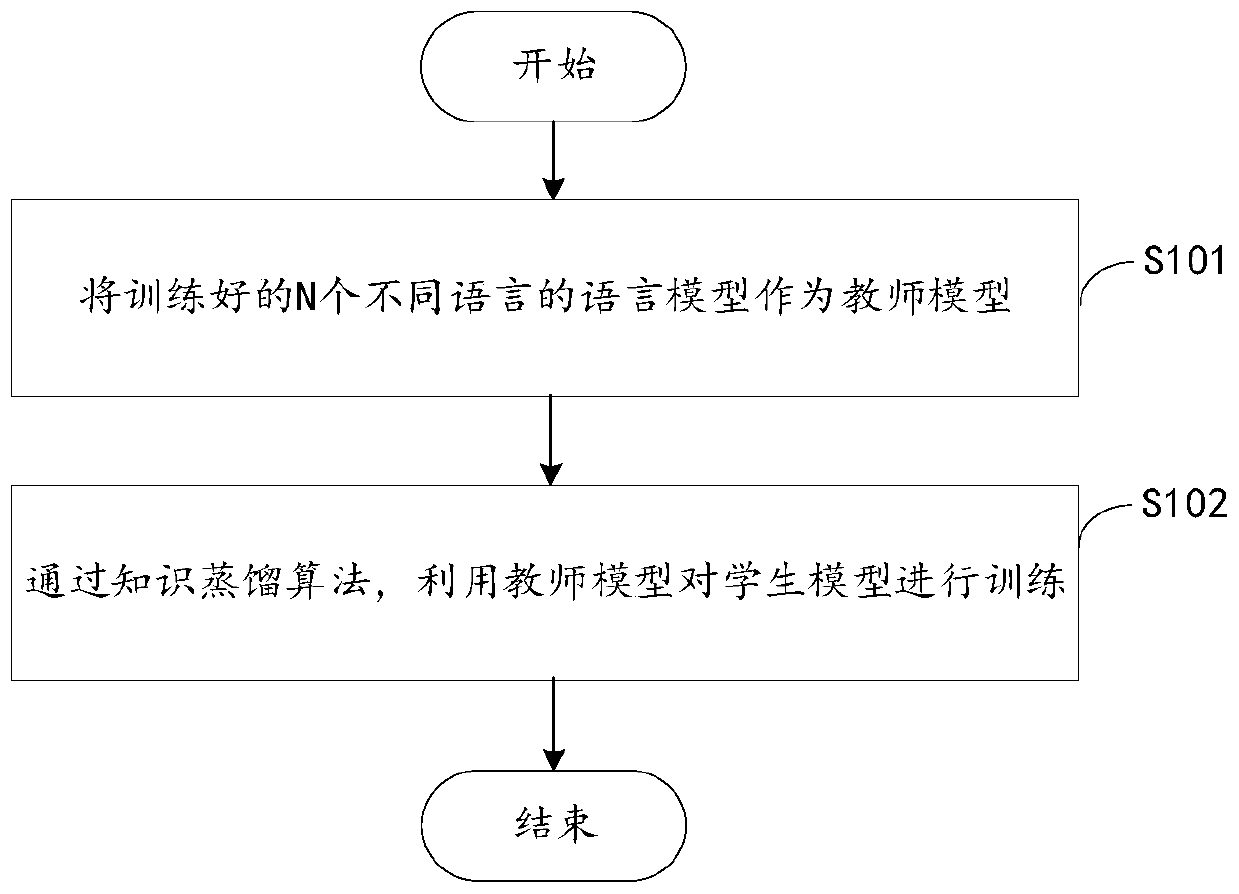

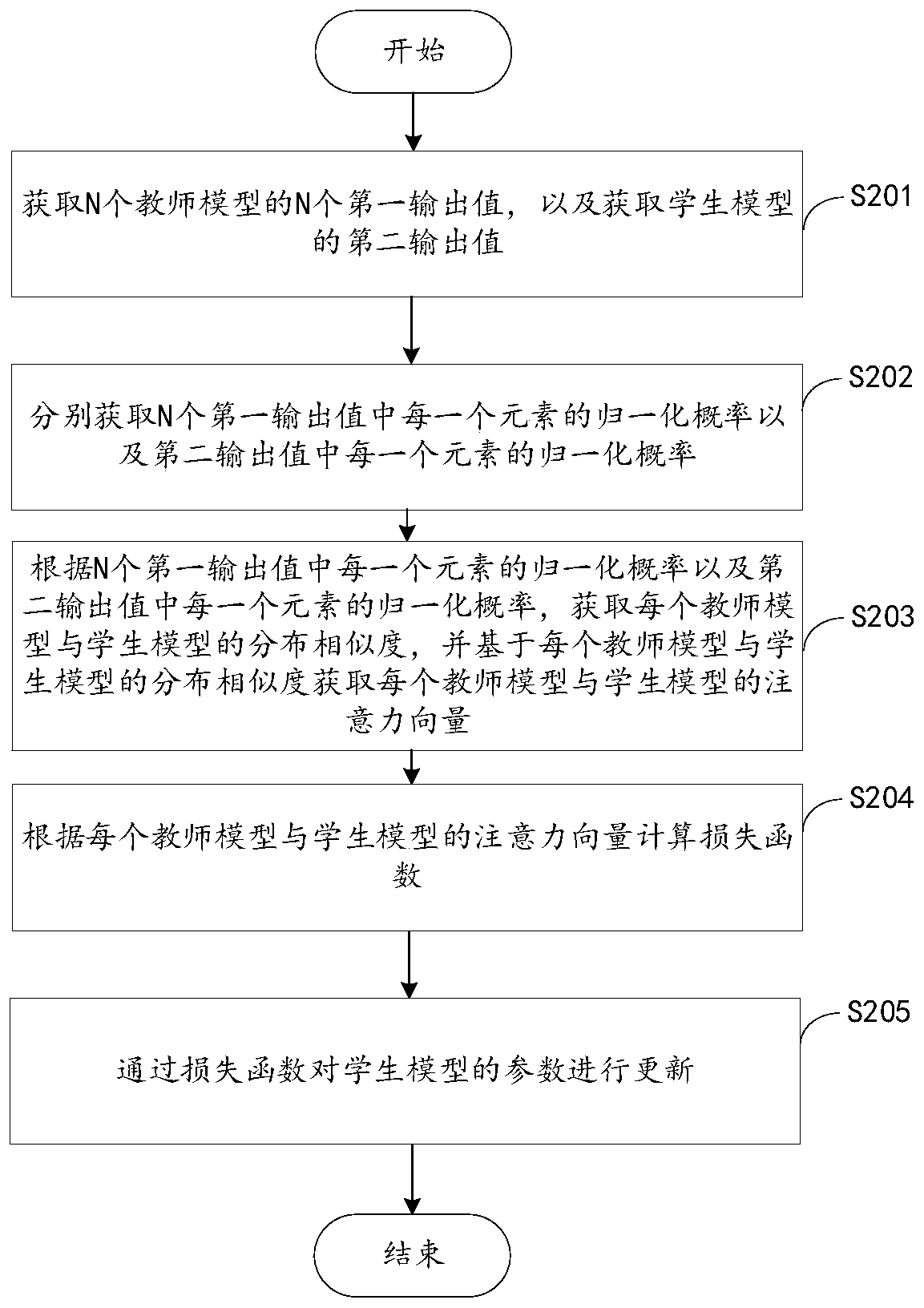

[0026] The technical solutions in the embodiments of the present application will be described below with reference to the drawings in the embodiments of the present application.

[0027] At present, there are two ways to train multilingual models. The first is to prepare a large corpus in multiple languages and build a large vocabulary from it, so that the model learns semantic representations of multiple languages in a single training process. The second starts from a model that has already been trained on a certain language and dynamically adds the vocabulary of a new language: the new vocabulary is mapped to the weight matrix of the hidden layer, the weight matrix of the original model is retained, a weight matrix corresponding to the new vocabulary is added and initialized, and the language model is then trained on a corpus of the new language. However, the above two methods will increase the model parameters of the model to be...
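The second approach in [0027], where a new language's vocabulary is added to an already trained model, can be illustrated with a minimal sketch. It is not the patent's implementation: the function names `extend_embeddings` and `count_parameters`, the toy vocabularies, the hidden size, and the Gaussian initialization with scale 0.02 are all assumptions made for illustration. The sketch only shows that the original weight rows are retained, new rows are added and initialized, and the parameter count grows as a result.

```python
import random


def extend_embeddings(old_weights, num_new_tokens, hidden_size, seed=0, scale=0.02):
    """Keep the original rows and append freshly initialized rows for new tokens.

    The Gaussian initialization is an assumption; the source does not say
    how the weight matrix for the new vocabulary is initialized.
    """
    rng = random.Random(seed)
    new_rows = [
        [rng.gauss(0.0, scale) for _ in range(hidden_size)]
        for _ in range(num_new_tokens)
    ]
    # Copy the original rows so the trained weights are retained unchanged.
    return [row[:] for row in old_weights] + new_rows


def count_parameters(weights):
    """Count the scalar entries in a weight matrix stored as a list of rows."""
    return sum(len(row) for row in weights)


# Hypothetical toy setup: a model trained on one language, then extended.
hidden_size = 4
old_vocab = ["hello", "world", "model"]
new_vocab = ["bonjour", "monde"]

old_weights = [[0.1 * (i + 1)] * hidden_size for i in range(len(old_vocab))]
extended = extend_embeddings(old_weights, len(new_vocab), hidden_size)

# New tokens receive ids after the original vocabulary.
token_to_id = {tok: i for i, tok in enumerate(old_vocab + new_vocab)}

print(count_parameters(old_weights))  # 12
print(count_parameters(extended))     # 20
```

In this toy case the weight matrix grows from 12 to 20 entries, one row of `hidden_size` values per added token, which is the kind of parameter growth the paragraph attributes to this approach.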
