Distributed SRAM failure analysis method and system

A distributed failure analysis technology, applied in special data processing applications, instruments, and electrical digital data processing, that addresses inconvenient analysis and long analysis times. Its stated effects are reduced reading time, improved rendering efficiency, and reduced transmission load.

Examples

Embodiment 1

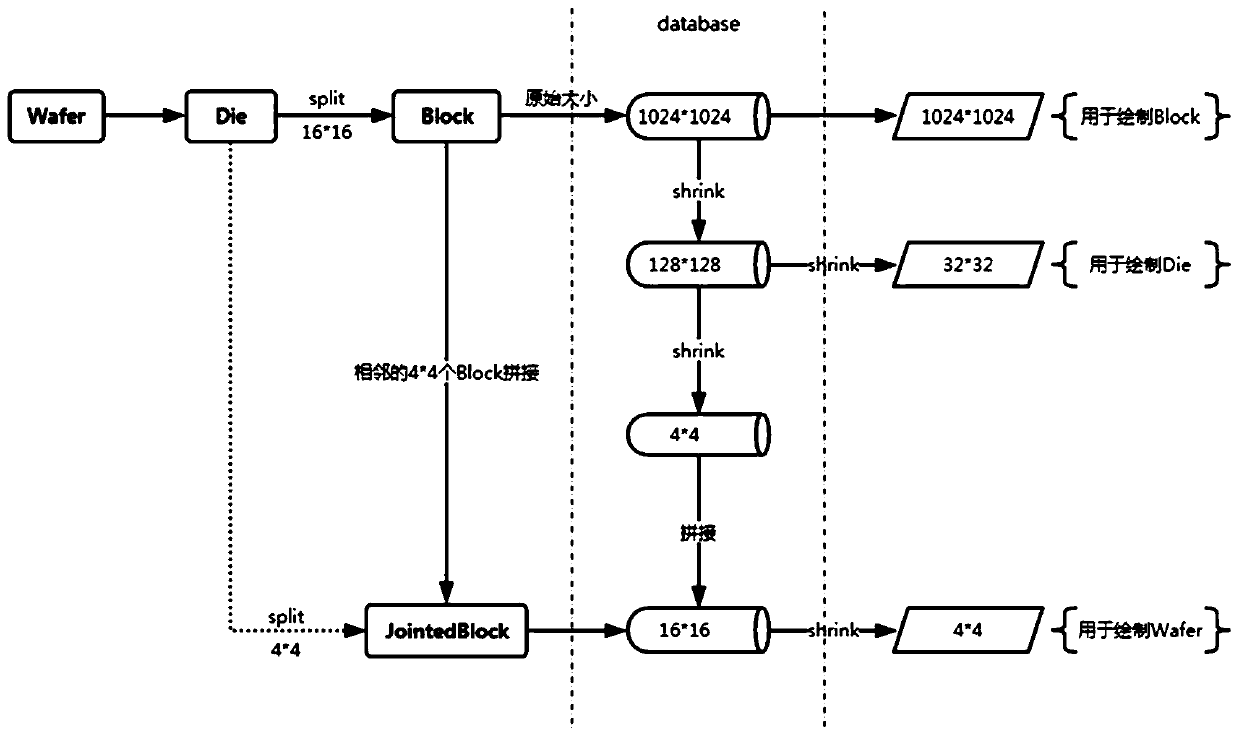

[0061] The data compression method for distributed SRAM failure analysis is described in detail below, using the SRAM data processing flow shown in Figure 1:

[0062] 1) Compressed storage:

[0063] The database stores data at three levels: the Block level, the Die level, and the Wafer level.

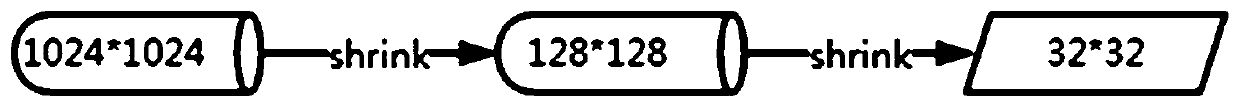

[0064] After decoding, each Block has a resolution of 1024×1024 at the Block level, 128×128 at the Die level, and 16×16 at the Wafer level.
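
The Block-to-Die resolution change amounts to a downsampling factor of 1024 / 128 = 8 per side. The sketch below assumes that Die-level data is an OR-pooled copy of the Block-level fail map, meaning a coarse cell is marked failed if any cell it covers failed. The source gives only the resolutions and does not state the sampling rule. The names `downsample_fails`, `to_grid`, and `LEVEL_RESOLUTION` are hypothetical. Fails are kept as a sparse set of `(row, col)` cells.

```python
# Hypothetical sketch: Die-level view of one Block, assuming the Die-level
# data is an OR-pooled downsample of the Block-level fail map (the source
# gives only the resolutions, not the sampling rule).

# Decoded resolution per level, as stated in [0064]. The Wafer-level 16x16
# is a stitched Jointed Block, so it is not produced by downsample_fails.
LEVEL_RESOLUTION = {"Block": 1024, "Die": 128, "Wafer": 16}


def downsample_fails(fails, src_size, dst_size):
    """Map failing (row, col) cells of a src_size grid onto a dst_size grid.

    A coarse cell is marked failed if any fine cell it covers failed.
    """
    if src_size % dst_size:
        raise ValueError("dst_size must divide src_size")
    factor = src_size // dst_size
    return {(r // factor, c // factor) for r, c in fails}


def to_grid(fails, size):
    """Render a sparse set of failing cells as a dense 0/1 grid for drawing."""
    grid = [[0] * size for _ in range(size)]
    for r, c in fails:
        grid[r][c] = 1
    return grid


block_fails = {(0, 0), (7, 7), (8, 8), (1023, 1023)}
die_view = downsample_fails(block_fails, LEVEL_RESOLUTION["Block"], LEVEL_RESOLUTION["Die"])
die_grid = to_grid(die_view, LEVEL_RESOLUTION["Die"])
```

Cells `(0, 0)` and `(7, 7)` fall in the same 8×8 region, so they merge into one Die-level cell. As a result, `die_view` holds three cells.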

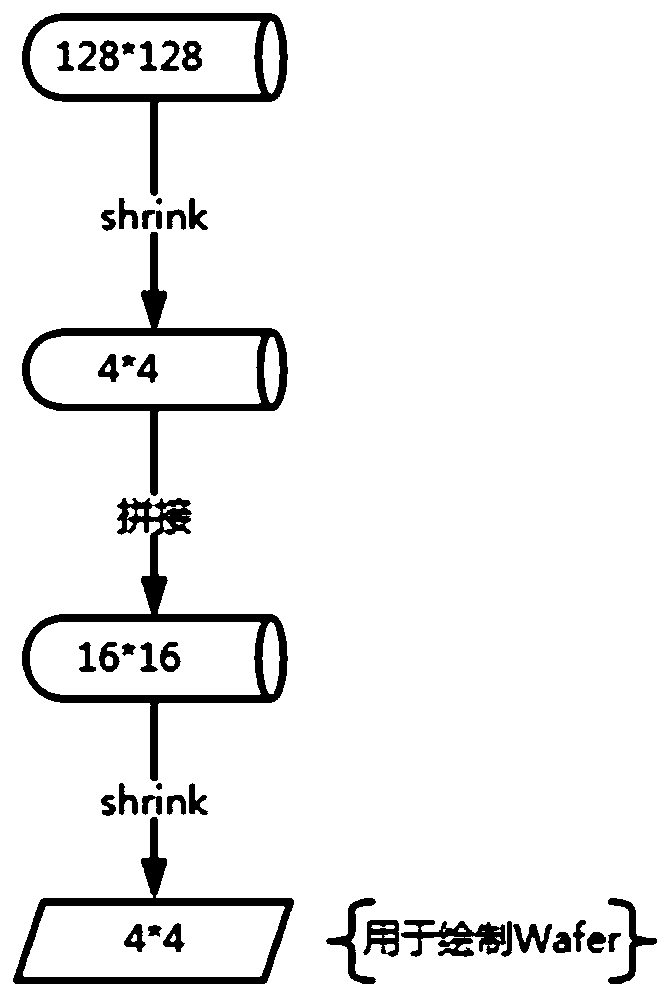

[0065] The Wafer-level data compression process in Figure 1 shows how sampled data is spliced during sampling. First, the analysis result data is sampled so that each Block is compressed to a resolution of 4×4. Then adjacent 4×4 Blocks are stitched together into a Jointed Block, so that each Jointed Block has a resolution of 16×16.
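
This paragraph describes two steps: sampling each Block down to 4×4, then stitching a 4×4 group of sampled Blocks into one 16×16 Jointed Block. The minimal sketch below implements both steps. It assumes OR-pooling for the sampling step, since the source does not name a sampling rule. It also assumes row-major placement of Blocks inside the Jointed Block. `sample_block` and `stitch` are hypothetical names.

```python
# Hypothetical sketch of the Wafer-level compression in [0065]:
# each 1024x1024 Block is sampled down to 4x4 (OR-pooling assumed),
# and a 4x4 group of sampled Blocks is stitched into one 16x16 Jointed Block.

BLOCK_SIZE = 1024                   # original Block resolution
SAMPLED_SIZE = 4                    # per-Block resolution after sampling
JOIN = 4                            # Blocks per side in one Jointed Block
JOINTED_SIZE = SAMPLED_SIZE * JOIN  # 16


def sample_block(fails):
    """Sample a Block's failing (row, col) cells down to a 4x4 grid."""
    factor = BLOCK_SIZE // SAMPLED_SIZE  # each sampled cell covers 256x256 cells
    grid = [[0] * SAMPLED_SIZE for _ in range(SAMPLED_SIZE)]
    for r, c in fails:
        grid[r // factor][c // factor] = 1
    return grid


def stitch(sampled):
    """Stitch a JOIN x JOIN array of 4x4 grids into one 16x16 Jointed Block.

    sampled[i][j] is the 4x4 grid of the Block in row i, column j of the group.
    """
    jointed = [[0] * JOINTED_SIZE for _ in range(JOINTED_SIZE)]
    for i in range(JOIN):
        for j in range(JOIN):
            for r in range(SAMPLED_SIZE):
                for c in range(SAMPLED_SIZE):
                    jointed[i * SAMPLED_SIZE + r][j * SAMPLED_SIZE + c] = sampled[i][j][r][c]
    return jointed


# One failing cell in the Block at group position (1, 2); all others are clean.
group = [[sample_block(set()) for _ in range(JOIN)] for _ in range(JOIN)]
group[1][2] = sample_block({(0, 300)})
jointed_block = stitch(group)
```

The failing cell `(0, 300)` samples to `(0, 1)` within its Block. It therefore lands at row 1×4 + 0 = 4 and column 2×4 + 1 = 9 of the Jointed Block.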

[0066] 2) Sampling and drawing:

[0067] When drawing a block, directly pr...

Embodiment 2

[0070] Example 2 SRAM test

[0071] By the definition of the original Block, each Die contains 16×16 Blocks and each Block is 1024×1024. At the Wafer level, a wafer with 84 Dies therefore holds 84×256×14 = 301056 Block records, where the factor of 14 accounts for the test voltage (Voltage) conditions.
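
The record counts can be reproduced directly from the figures in this paragraph. The calculation also shows that the Die-level count of 3584 quoted in [0072] is simply 256 Blocks × 14 voltages. The constant names are illustrative only.

```python
# Record counts for the Block table, using the figures in [0071].
BLOCKS_PER_SIDE = 16   # each Die holds 16 x 16 Blocks
DIES_PER_WAFER = 84    # example wafer
VOLTAGES = 14          # test voltage (Voltage) conditions

blocks_per_die = BLOCKS_PER_SIDE ** 2          # 256
die_records = blocks_per_die * VOLTAGES        # 3584, the Die-level figure in [0072]
wafer_records = DIES_PER_WAFER * die_records   # 301056

print(blocks_per_die, die_records, wafer_records)
```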

[0072] Before tasks can be divided among threads, all of the corresponding Fail Types must be retrieved. At the Die level, only 3584 records need to be traversed. At the Wafer level, however, the count reaches 301056, so retrieving this data takes significantly longer. In actual measurements, retrieving all Fail Types at the Wafer level takes 1500 ms, which is more than 70% of the total background processing time.
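
Collecting the distinct Fail Types is a linear pass over every record. Because 301056 / 3584 = 84, the Wafer-level pass does exactly 84 times the Die-level work, once for each Die. The sketch below illustrates that pass followed by a round-robin split of Fail Types across worker threads. The record layout (`BlockRecord`), the Fail Type labels, the round-robin policy, and the `analyse` placeholder are all hypothetical. The source does not describe how tasks are assigned.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import NamedTuple


class BlockRecord(NamedTuple):
    # Hypothetical record layout; the source does not define its schema.
    die: int
    block: int
    voltage: int
    fail_type: str


def collect_fail_types(records):
    """Traverse every record once and return the distinct Fail Types, sorted."""
    return sorted({rec.fail_type for rec in records})


def split_tasks(fail_types, n_threads):
    """Assign Fail Types to threads round-robin; one list per thread."""
    return [fail_types[i::n_threads] for i in range(n_threads)]


def analyse(fail_type):
    # Placeholder for the per-Fail-Type analysis work.
    return fail_type.lower()


records = [
    BlockRecord(0, 0, 0, "A"),
    BlockRecord(0, 1, 0, "B"),
    BlockRecord(1, 0, 3, "A"),
    BlockRecord(1, 5, 7, "C"),
]
fail_types = collect_fail_types(records)
tasks = split_tasks(fail_types, n_threads=2)
with ThreadPoolExecutor(max_workers=2) as pool:
    results = list(pool.map(lambda batch: [analyse(t) for t in batch], tasks))
```

The cost of `collect_fail_types` grows with the number of records, not with the number of distinct Fail Types. This is why cutting the record count at the Wafer level matters more than speeding up the per-thread work.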

[0073] At the Wafer level, 16 original Blocks (a 4×4 group; each originally 1024×1024 and compressed to 4×4 when stored) are spliced together into a new Jointed Block with a resolution of 16×16, the...
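
The sketch below shows where each Block's 4×4 sample ends up after splicing. It assumes that Blocks are addressed by `(row, col)` in the Die's 16×16 Block grid and grouped into aligned 4×4 tiles. It also estimates the resulting record count, assuming one record per Jointed Block per voltage. Because this paragraph is truncated, that one-record-per-Jointed-Block assumption is not confirmed by the source. `jointed_location` is a hypothetical helper.

```python
# Hypothetical sketch: locate where a Block's 4x4 sample lands after
# Wafer-level splicing, assuming aligned 4x4 tiles of Blocks per Jointed Block.

BLOCKS_PER_SIDE = 16   # Blocks per Die side (from [0071])
JOIN = 4               # Blocks per Jointed Block side
SAMPLED_SIZE = 4       # per-Block resolution after sampling
DIES_PER_WAFER = 84    # example wafer (from [0071])
VOLTAGES = 14          # test voltage conditions (from [0071])


def jointed_location(block_row, block_col):
    """Return ((jointed_row, jointed_col), (pixel_row, pixel_col)) for a Block."""
    jointed = (block_row // JOIN, block_col // JOIN)
    offset = ((block_row % JOIN) * SAMPLED_SIZE, (block_col % JOIN) * SAMPLED_SIZE)
    return jointed, offset


jointed_per_die = (BLOCKS_PER_SIDE // JOIN) ** 2   # 16 Jointed Blocks replace 256 Blocks
# Assumes one record per Jointed Block per voltage (not confirmed by the source).
wafer_jointed_records = DIES_PER_WAFER * jointed_per_die * VOLTAGES
```

Under that assumption, the Wafer-level record count falls by a factor of 16, from 301056 to 18816.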

Embodiment 3

[0099] Example 3: Storage space simulation

[0100] Storage space estimation: a Block requires at most 128 KB of storage (1024×1024 cells at one bit per cell), and a Die of 256 Blocks requires 32 MB. Compressing the binary-coded data further reduces the storage needed for each Die. After analysis is complete, a Die may have more than 10 failure types, so the uncompressed storage would be 10 × 32 MB. However, the analysis result data is relatively sparse and shows clear distribution patterns, so after compression it occupies less than this. In actual experiments, the compressed storage never exceeded 2 × 32 MB, regardless of how the analysis results were distributed. In simulation, the space occupied by the constructed test data increased significantly at a failure rate of 50%. The raw data and analysis data of a Die occupy about 33MB, and when the failure rate is less than 4...
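
The 128 KB per Block figure matches binary coding at one bit per cell: 1024 × 1024 / 8 = 131072 bytes. The sketch below packs a Block's fail map that way and then compresses it. The source does not name its compression codec, so `zlib` from the Python standard library stands in purely as an assumption. `pack_block` and `compressed_size` are hypothetical names.

```python
import zlib

BLOCK_SIZE = 1024     # cells per Block side
BLOCKS_PER_DIE = 256  # 16 x 16 Blocks per Die


def pack_block(fails):
    """Binary-code a 1024x1024 Block: one bit per cell, row-major, MSB first."""
    buf = bytearray(BLOCK_SIZE * BLOCK_SIZE // 8)  # 131072 bytes = 128 KB
    for r, c in fails:
        bit = r * BLOCK_SIZE + c
        buf[bit // 8] |= 0x80 >> (bit % 8)
    return bytes(buf)


def compressed_size(packed):
    """Size after compression; zlib stands in for the unnamed codec."""
    return len(zlib.compress(packed, 9))


sparse = {(r, r) for r in range(0, BLOCK_SIZE, 64)}  # 16 failing cells on the diagonal
packed = pack_block(sparse)
print(len(packed), BLOCKS_PER_DIE * len(packed) // 2**20)  # bytes per Block, MB per Die
print(compressed_size(packed), "bytes after compression")
```

A sparse map like this one compresses far below its 128 KB packed size. A dense random map at a 50% failure rate is close to maximum entropy, so any general-purpose codec would be expected to gain little on it. This is consistent with the growth the simulation reports at that failure rate.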

© 2025 PatSnap. All rights reserved.