A computing architecture

A computing architecture and computing array technology, applied in the field of computing architectures. It addresses frequent cache misses, low cache utilization, and the resulting limits on computing performance, with the aim of reducing performance bottlenecks, reducing cache misses, and improving flexibility.


Embodiment Construction

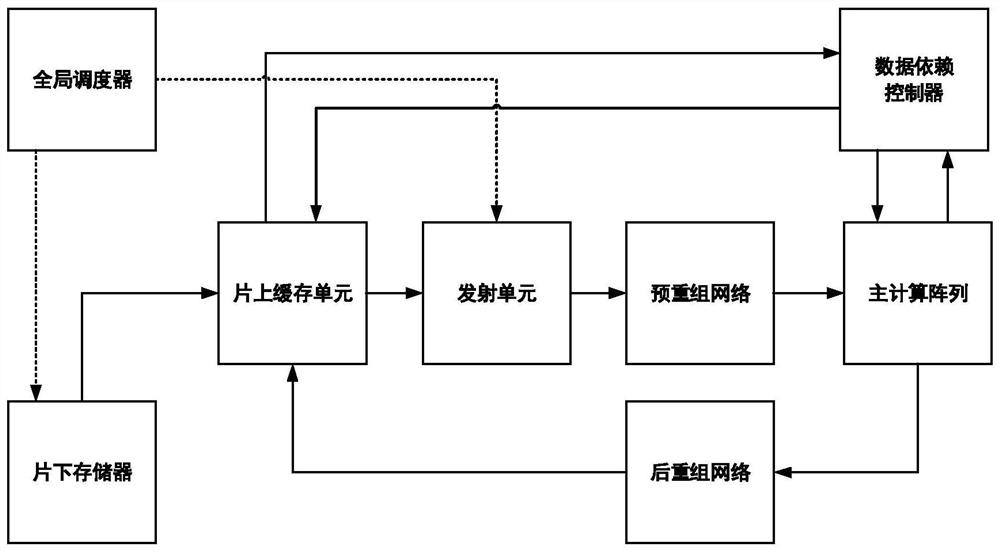

[0033] In one embodiment, as shown in Figure 1, a computing architecture is disclosed, comprising: an off-chip memory, an on-chip cache unit, a transmitting unit, a pre-reorganization network, a post-reorganization network, a main computing array, a data dependency controller, and a global scheduler; wherein:

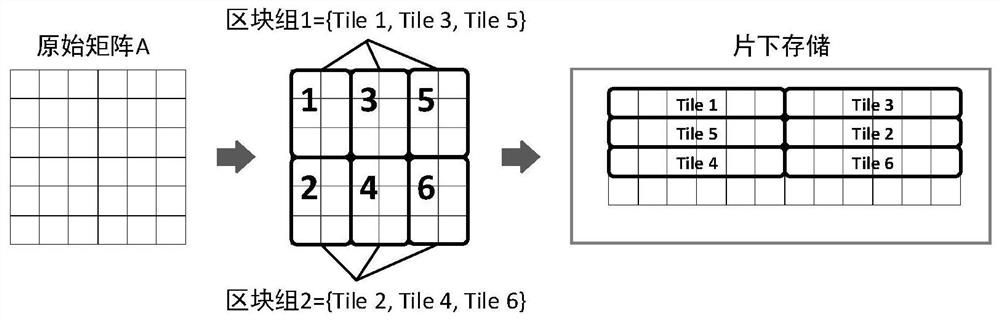

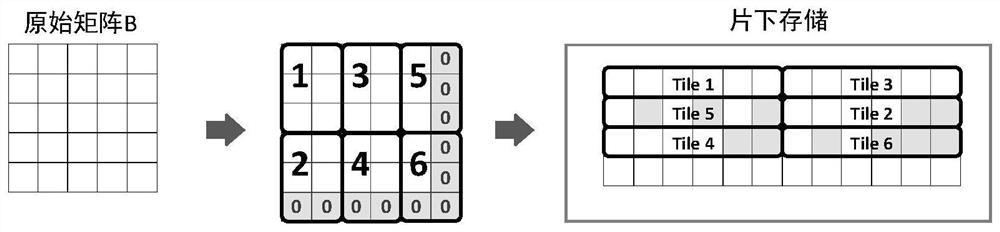

[0034] The off-chip memory is used to store all large-scale data in a block format, wherein the large-scale data is divided into multiple blocks of equal size;

[0035] The on-chip cache unit is used to store part of the data of the blocks to be computed and the dependent data required for the computation;

[0036] The transmitting unit is used to read the data of the corresponding block from the on-chip cache unit and send it to the pre-reorganization network in the order specified by the scheduling algorithm;

[0037] The main computing array is used to complete the computation on the data of the main block;

[0038] The pre-reorganization network is used to perform arbitrar...
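The roles of the off-chip memory, the on-chip cache unit, and the transmitting unit described in [0034] to [0036] can be illustrated with a small software model. This is only a sketch under stated assumptions, not the patented hardware design: the names `split_into_blocks`, `OnChipCache`, and `transmit` are hypothetical, the data is assumed to be a square matrix, and the least-recently-used eviction policy is an assumption, since the text does not say how the cache replaces blocks.

```python
from collections import OrderedDict


def split_into_blocks(matrix, block_size):
    """Partition a square matrix into equal-size blocks keyed by (block_row, block_col).

    Models the block-format storage in off-chip memory (hypothetical helper).
    """
    n = len(matrix)
    if any(len(row) != n for row in matrix) or n % block_size != 0:
        raise ValueError("matrix must be square and divisible by block_size")
    blocks = {}
    for bi in range(0, n, block_size):
        for bj in range(0, n, block_size):
            blocks[(bi // block_size, bj // block_size)] = [
                row[bj:bj + block_size] for row in matrix[bi:bi + block_size]
            ]
    return blocks


class OnChipCache:
    """Holds a limited number of blocks; LRU eviction is an assumption."""

    def __init__(self, off_chip, capacity):
        self.off_chip = off_chip      # dict of all blocks (off-chip memory)
        self.capacity = capacity      # maximum number of blocks held on chip
        self.lines = OrderedDict()    # cached blocks, oldest first
        self.misses = 0

    def read(self, key):
        if key in self.lines:
            self.lines.move_to_end(key)          # mark as most recently used
        else:
            self.misses += 1
            if len(self.lines) >= self.capacity:
                self.lines.popitem(last=False)   # evict least recently used
            self.lines[key] = self.off_chip[key]
        return self.lines[key]


def transmit(cache, schedule):
    """Yield (key, block) pairs in the order given by the schedule."""
    for key in schedule:
        yield key, cache.read(key)


# A 4x4 matrix split into four 2x2 blocks, then emitted in a scheduled order.
matrix = [[r * 4 + c for c in range(4)] for r in range(4)]
off_chip = split_into_blocks(matrix, 2)
cache = OnChipCache(off_chip, capacity=2)
schedule = [(0, 0), (0, 1), (0, 0), (1, 1)]
sent = list(transmit(cache, schedule))
```

In this model the emission order is fixed entirely by `schedule`, so a scheduler that revisits a block while it is still cached, as with block `(0, 0)` above, avoids a second fetch from off-chip memory.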
