A data storage method for speech deep neural network operation

A deep neural network and data storage technology, applied in the fields of biological neural network models, electrical digital data processing, input/output processes of data processing, etc. It addresses problems such as the inability to provide guarantees and timeliness that fails to meet requirements, achieving guaranteed effectiveness and timeliness, a reduction in quantity, and a reduction in effective duration.


Embodiment Construction

[0043] Specific embodiments of the present invention will be further described in detail below.

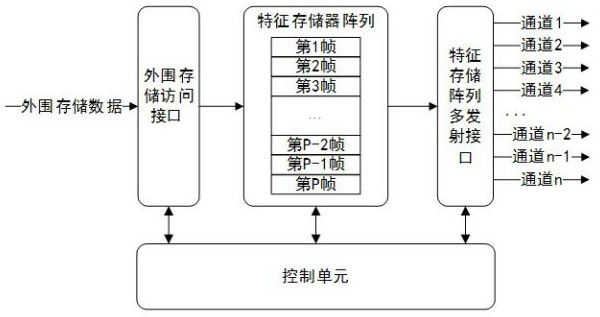

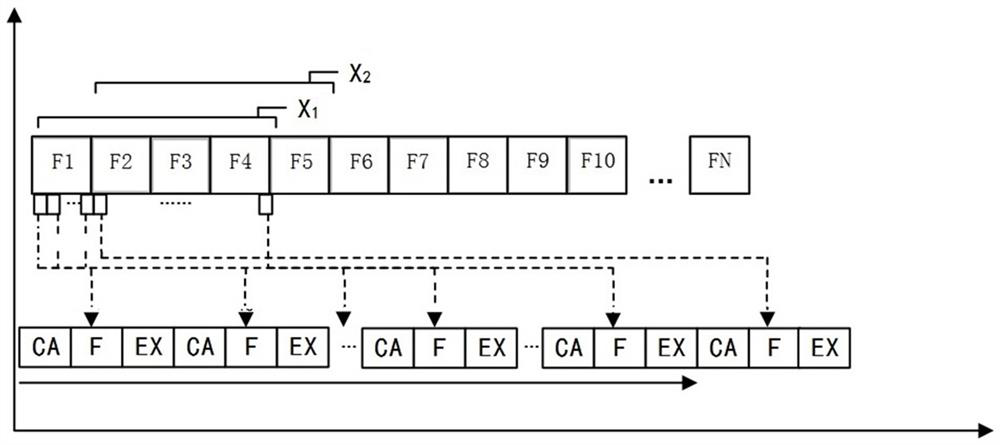

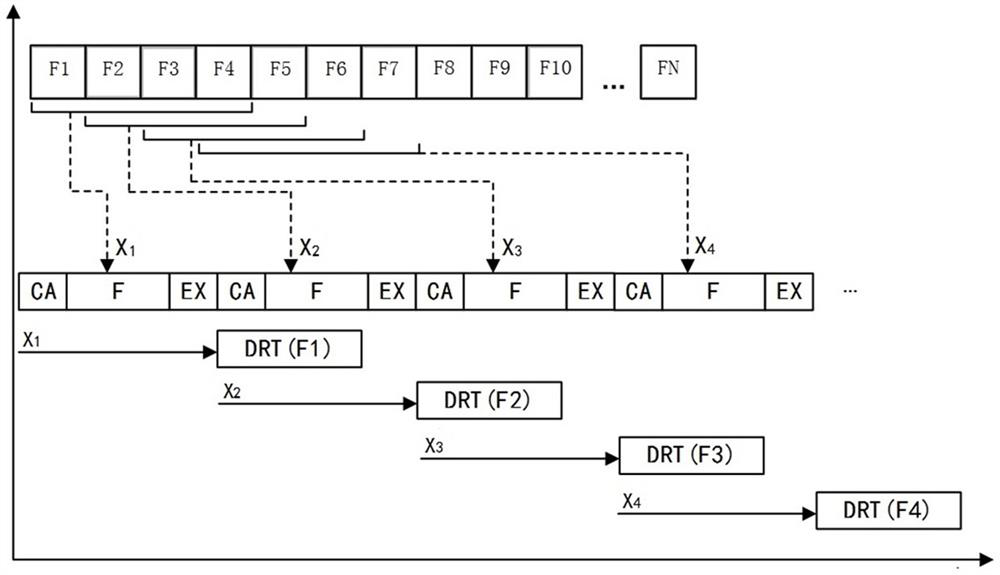

[0044] The storage management method of the present invention for a speech deep neural network computing chip comprises the following steps:

[0045] Step 1. The user determines the configuration parameters, specifically:

[0046] Determine the total number of frames, the number of skipped frames, the number of output channels, and the number of output frames per channel of the feature data to be computed; these are defined by the user according to the requirements of the current computation;
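As an illustration only, the four user-defined parameters of this step can be collected into a single configuration record. The class name `FeatureFrameConfig`, its field names, and the validation rules (skipped frames may be zero, the other counts must be positive) are hypothetical assumptions; the patent text does not specify how these parameters are represented or how they relate to one another.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FeatureFrameConfig:
    """Hypothetical container for the user-defined parameters of Step 1."""

    total_frames: int        # total number of feature frames to be computed
    skip_frames: int         # number of skipped frames
    output_channels: int     # number of output channels
    frames_per_channel: int  # number of output frames per channel

    def __post_init__(self) -> None:
        # Assumption: all counts are integers; skip_frames may be zero,
        # while the remaining counts must be strictly positive.
        for name in ("total_frames", "output_channels", "frames_per_channel"):
            value = getattr(self, name)
            if not isinstance(value, int) or value <= 0:
                raise ValueError(f"{name} must be a positive integer, got {value!r}")
        if not isinstance(self.skip_frames, int) or self.skip_frames < 0:
            raise ValueError(
                f"skip_frames must be a non-negative integer, got {self.skip_frames!r}"
            )
```

For example, `FeatureFrameConfig(total_frames=100, skip_frames=1, output_channels=2, frames_per_channel=50)` constructs a valid record, while a zero `total_frames` raises `ValueError`. Freezing the dataclass keeps the parameters fixed for the duration of one computation.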

[0047] Determine the number of data items per unit frame required for the deep neural network operation; this is defined by the user according to the requirements of the current computation, but it must satisfy the following condition: the unit memory data depth of the feature storage array in the feature storage device ≥ the number of data items per unit frame required for the deep neural network operation;
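The constraint above can be checked before any data is written to the feature storage array. The following is a minimal sketch that assumes both quantities are expressed as counts of data items; the function names `fits_unit_memory` and `require_fits_unit_memory` are hypothetical and are not taken from the patent.

```python
def fits_unit_memory(unit_memory_depth: int, data_per_unit_frame: int) -> bool:
    """Return True if one unit frame fits in one unit memory of the feature storage array.

    Implements the stated condition:
        unit memory data depth >= number of data items per unit frame.
    """
    # Assumption: both arguments are non-negative item counts.
    if unit_memory_depth < 0 or data_per_unit_frame < 0:
        raise ValueError("counts must be non-negative")
    return unit_memory_depth >= data_per_unit_frame


def require_fits_unit_memory(unit_memory_depth: int, data_per_unit_frame: int) -> None:
    """Hypothetical guard that rejects a user configuration violating the condition."""
    if not fits_unit_memory(unit_memory_depth, data_per_unit_frame):
        raise ValueError(
            f"data per unit frame ({data_per_unit_frame}) exceeds "
            f"unit memory data depth ({unit_memory_depth})"
        )
```

With a unit memory depth of 64, a frame of 40 or 64 data items is accepted and a frame of 65 items is rejected, since the condition allows equality.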

[...
