Method for improving parallel NumPy computing performance by using characteristics of non-uniform memory access architecture

A parallel computing technology, applied in fields such as computing, program control design, and multi-program devices, that addresses problems such as wasted memory bandwidth and achieves improved performance and more effective use of hardware resources.


Embodiment

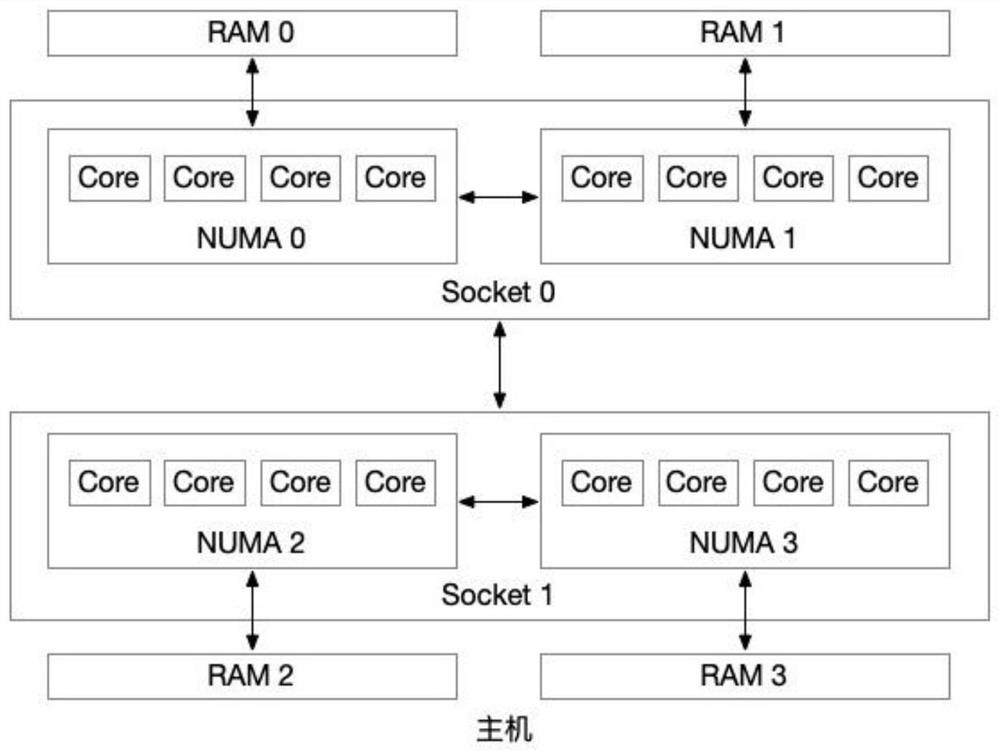

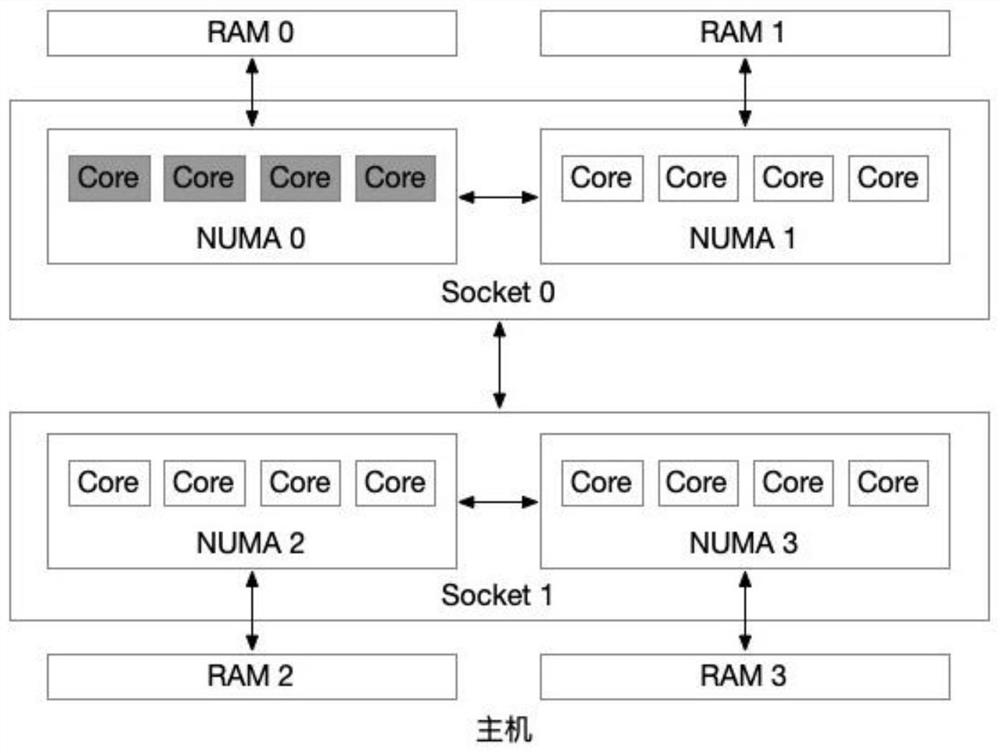

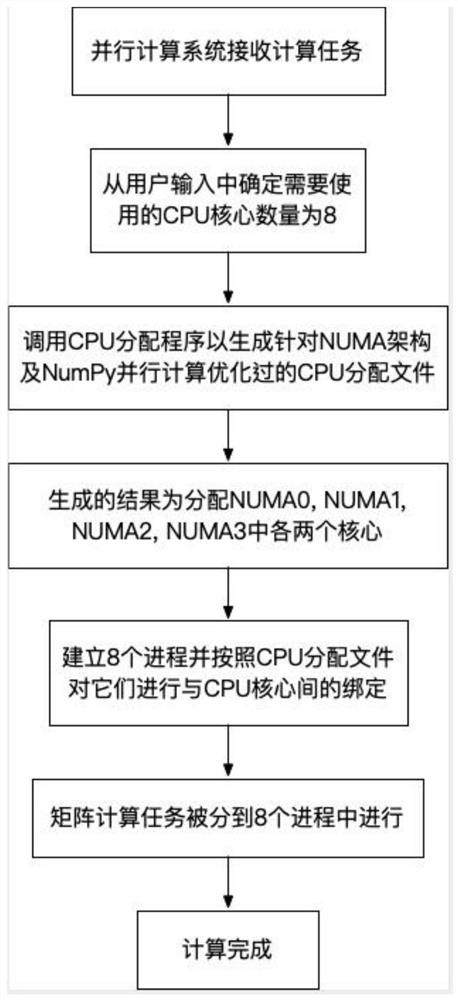

[0074] Figure 5 is a schematic diagram of the CPU allocation method optimized for NumPy parallel computing. The cores highlighted in yellow are those selected by this allocation method: four cores distributed across NUMA0, NUMA1, NUMA2 and NUMA3.
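To choose cores per NUMA node as in the figure, a program first needs the node-to-core mapping. The following is a minimal sketch of one way to obtain it, assuming a Linux system that exposes the topology under `/sys/devices/system/node`. The function names `parse_cpulist` and `read_numa_topology` are hypothetical helpers, not part of the described method, and only the simple `a-b,c` cpulist form is handled.

```python
import glob
import os

def parse_cpulist(text):
    """Expand a Linux cpulist string such as "0-3,8-11" into a list of ints.

    Assumption: only plain ids and "lo-hi" ranges appear in the string.
    """
    cpus = []
    for part in text.strip().split(","):
        if not part:
            continue  # tolerate empty input or trailing commas
        if "-" in part:
            lo, hi = part.split("-")
            cpus.extend(range(int(lo), int(hi) + 1))
        else:
            cpus.append(int(part))
    return cpus

def read_numa_topology(root="/sys/devices/system/node"):
    """Return {node_id: [cpu ids]} read from sysfs.

    Returns an empty dict when the directory does not exist
    (for example on non-Linux systems).
    """
    topology = {}
    for path in glob.glob(os.path.join(root, "node[0-9]*", "cpulist")):
        node_dir = os.path.basename(os.path.dirname(path))  # e.g. "node2"
        node_id = int(node_dir[len("node"):])
        with open(path) as f:
            topology[node_id] = parse_cpulist(f.read())
    return topology

if __name__ == "__main__":
    # Prints the mapping found on the current machine, if any.
    print(read_numa_topology())
```

On a machine laid out like the one in Figure 5, the returned dictionary would have four keys, one per NUMA node.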

[0075] The principle of this allocation method is to use as many NUMA nodes as possible. Compared with the traditional CPU allocation method, the available memory bandwidth is quadrupled. In addition, because CPUs in both sockets are used, the available L3 cache is twice that of the traditional CPU allocation method.
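The "use as many NUMA nodes as possible" principle can be sketched as a round-robin selection over nodes. This is an illustrative sketch only: the function names `spread_cores` and `pin_current_process`, the 16-core topology, and the choice of the lowest-numbered free core on each node are assumptions, not details taken from the method described here. Pinning uses `os.sched_setaffinity`, which is available on Linux only.

```python
import os

def spread_cores(node_to_cpus, n_cores):
    """Pick n_cores CPU ids, cycling over NUMA nodes so that as many
    nodes as possible are used (lowest-numbered free CPU on each node)."""
    # Per-node queues of candidate CPUs, visited in ascending node order.
    queues = {node: sorted(cpus) for node, cpus in sorted(node_to_cpus.items())}
    chosen = []
    while len(chosen) < n_cores:
        progressed = False
        for cpus in queues.values():
            if cpus and len(chosen) < n_cores:
                chosen.append(cpus.pop(0))
                progressed = True
        if not progressed:
            raise ValueError("not enough CPUs for the requested core count")
    return chosen

def pin_current_process(cpus):
    """Restrict the current process to the given CPUs (Linux only).

    Typically called before any worker threads are started, so that
    they inherit the affinity mask. Does nothing on other platforms.
    """
    if hasattr(os, "sched_setaffinity"):
        os.sched_setaffinity(0, set(cpus))

# Hypothetical topology: 4 NUMA nodes with 4 cores each.
topology = {
    0: [0, 1, 2, 3],
    1: [4, 5, 6, 7],
    2: [8, 9, 10, 11],
    3: [12, 13, 14, 15],
}
selected = spread_cores(topology, 4)
print(selected)  # [0, 4, 8, 12]
```

With four cores requested, each NUMA node contributes one core, which matches the spread shown in Figure 5. A traditional allocation would instead take all four cores from a single node. `pin_current_process(selected)` is left uncalled here because the CPU ids are hypothetical and may not exist on the machine running the sketch.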

[0076] Although this allocation method increases the overhead of data exchange between cores, it makes full use of the computer's memory and memory bandwidth. Application performance also benefits from the larger L3 cache capacity, and the benefit is substantial for programs with good locality.

[0077] The traditional CPU allocation method can make the data exchange delay betwe...
