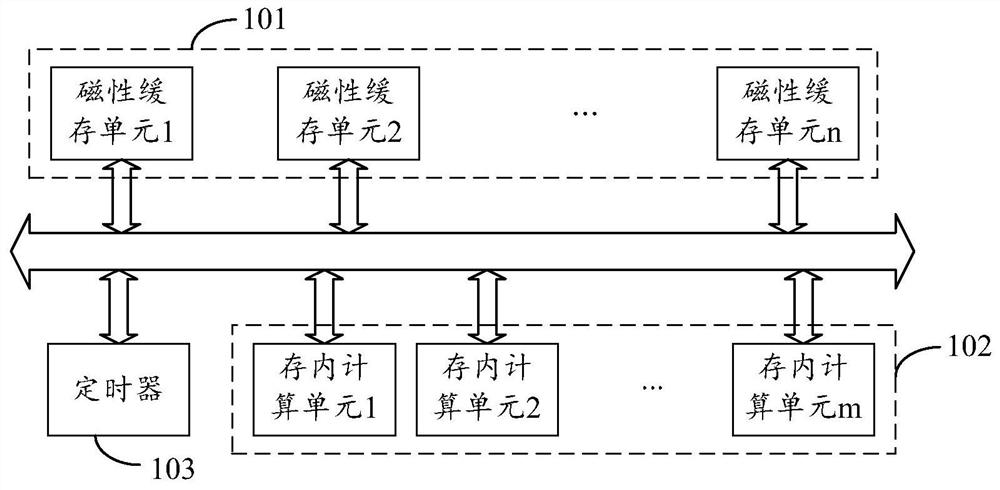

In-memory computing circuit chip based on magnetic cache and computing device

A computing circuit and magnetic technology, applied in the computer field, to achieve the effect of increasing data storage capacity, high density, and large capacity

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Problems solved by technology

Method used

Image

Examples

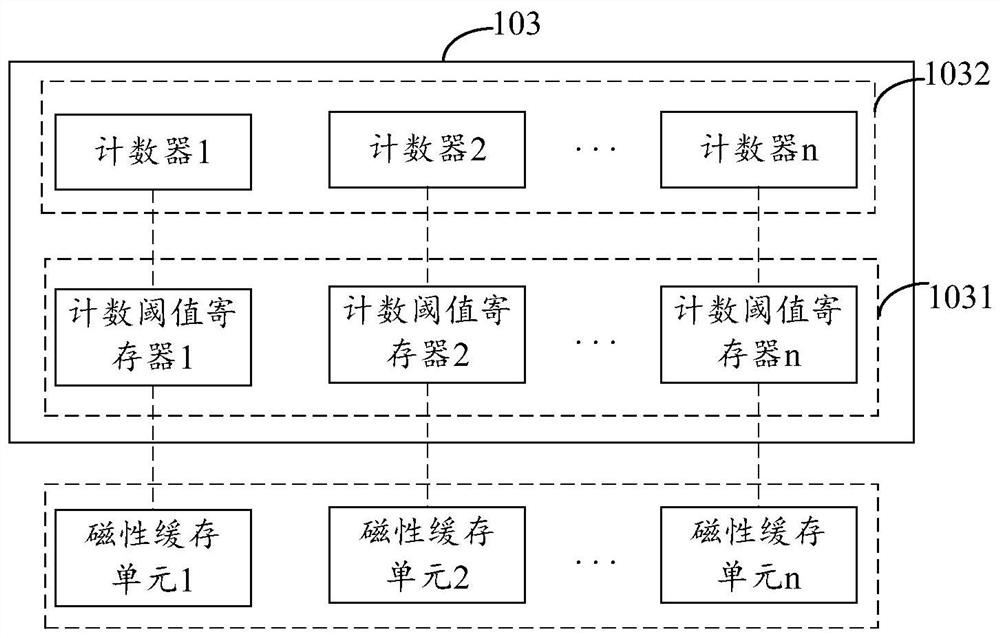

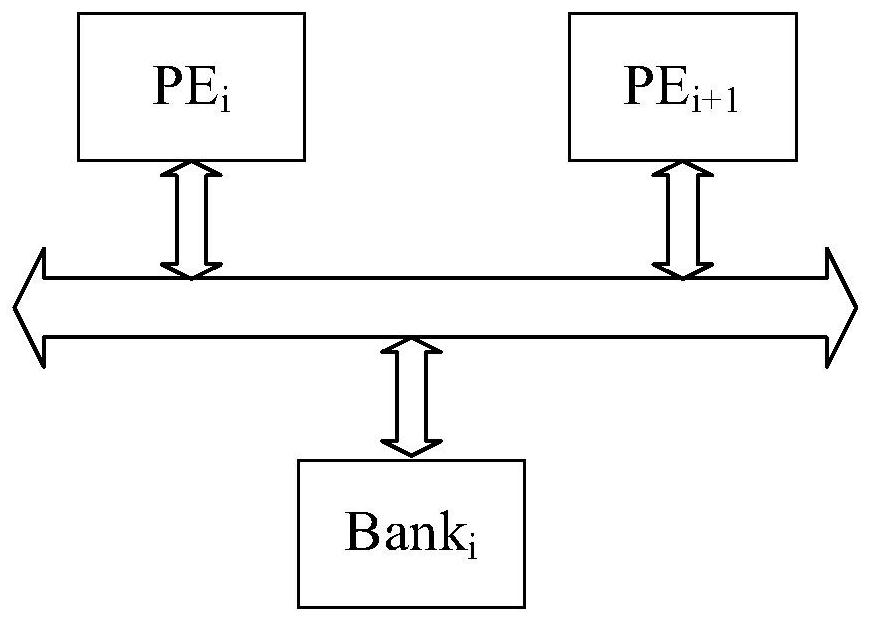

Embodiment Construction

[0028] Hereinafter, exemplary embodiments according to the present disclosure will be described in detail with reference to the accompanying drawings. Apparently, the described embodiments are only some of the embodiments of the present disclosure, rather than all the embodiments of the present disclosure, and it should be understood that the present disclosure is not limited by the exemplary embodiments described here.

[0029] It should be noted that relative arrangements of components and steps, numerical expressions and numerical values set forth in these embodiments do not limit the scope of the present disclosure unless specifically stated otherwise.

[0030] Those skilled in the art can understand that terms such as "first" and "second" in the embodiments of the present disclosure are only used to distinguish different steps, devices or modules, etc. necessary logical sequence.

[0031] It should also be understood that in the embodiments of the present disclosure, "...

PUM

Login to View More

Login to View More Abstract

Description

Claims

Application Information

Login to View More

Login to View More - R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com