PM-based database page caching method and system

A database page caching technology, applied in the field of data processing, that addresses problems such as write amplification and limited effective disk throughput, and achieves higher flush efficiency, low management overhead, and improved performance.


Examples

Embodiment 1

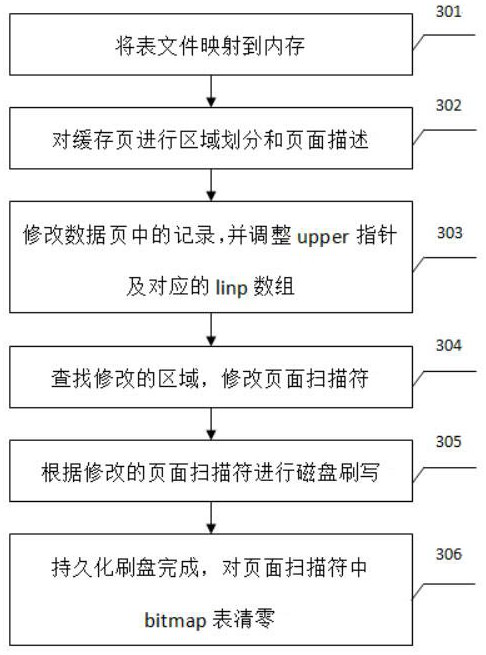

[0092] Figure 3 is a flowchart of the PM-based database page cache method according to the present invention. The method is described in detail below with reference to Figure 3.

[0093] First, at step 301, the table file is mapped to the DRAM memory.

[0094] In the embodiment of the present invention, the table file in PM memory is mapped to the DRAM memory using the Persistent Memory Development Kit (PMDK).
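The mapping step can be illustrated with a minimal sketch. It does not use PMDK: Python's standard `mmap` module stands in for a PMDK mapping call such as libpmem's `pmem_map_file`, and an ordinary temporary file stands in for a table file on PM. The names `map_table_file`, `demo`, `table.dat`, and the 8192-byte page size are assumptions made for illustration only.

```python
import mmap
import os
import tempfile

PAGE_SIZE = 8192  # assumed page size; the source does not state one


def map_table_file(path: str) -> mmap.mmap:
    """Map an existing table file into the process address space (read/write)."""
    fd = os.open(path, os.O_RDWR)
    try:
        # Length 0 maps the whole file; the mapping remains valid after fd is closed.
        return mmap.mmap(fd, 0, access=mmap.ACCESS_WRITE)
    finally:
        os.close(fd)


def demo() -> bytes:
    """Create a two-page stand-in table file, write through the mapping, read it back."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "table.dat")  # hypothetical file name
        with open(path, "wb") as f:
            f.write(b"\x00" * (2 * PAGE_SIZE))
        table = map_table_file(path)
        table[PAGE_SIZE:PAGE_SIZE + 5] = b"hello"  # store into page 1 via the mapping
        table.flush()                              # write the change back to the file
        table.close()
        with open(path, "rb") as f:
            f.seek(PAGE_SIZE)
            return f.read(5)
```

Once mapped, pages of the table file are addressed as plain byte ranges, which is what makes the cache-line-sized regions described in the following steps directly addressable.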

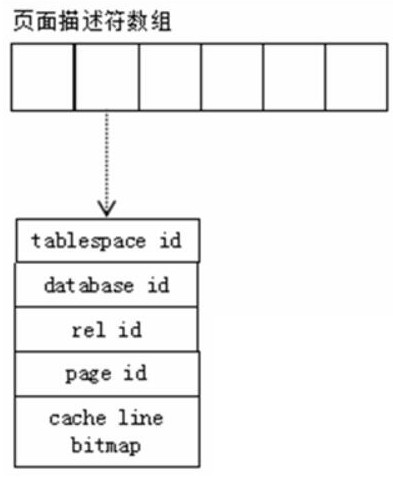

[0095] At step 302, the cache page is divided into regions and a page descriptor is established.

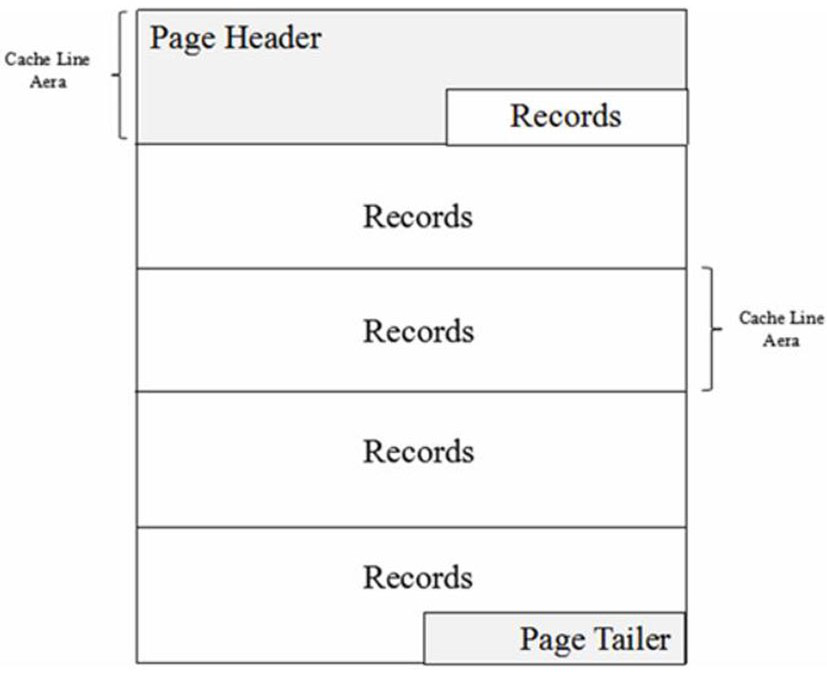

[0096] In the embodiment of the present invention, dividing the database buffer page includes dividing each cache page into fixed-size regions, where the region size is set to one cache line, so that the data page is split into multiple regions (cache-line blocks). The database cache page after region division is shown in Figure 1 and consists of the following parts, where

[0097] The page includes the fields Checksum, Lower, Upper, and Linp, where

...
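The region division described above amounts to simple offset arithmetic. The following is a sketch under stated assumptions: a 64-byte cache line and an 8192-byte page, neither of which is given in the text, and helper names (`region_count`, `region_of`, `region_bounds`) that are hypothetical.

```python
from typing import Tuple

CACHE_LINE = 64   # assumed cache-line size in bytes
PAGE_SIZE = 8192  # assumed page size in bytes


def region_count(page_size: int = PAGE_SIZE, region_size: int = CACHE_LINE) -> int:
    """Number of fixed-size regions a page is divided into."""
    if page_size % region_size != 0:
        raise ValueError("page size must be a multiple of the region size")
    return page_size // region_size


def region_of(offset: int, region_size: int = CACHE_LINE) -> int:
    """Index of the region that contains the given byte offset within the page."""
    return offset // region_size


def region_bounds(index: int, region_size: int = CACHE_LINE) -> Tuple[int, int]:
    """Half-open byte range [start, end) covered by the region with this index."""
    start = index * region_size
    return start, start + region_size
```

With these assumed sizes a page holds 128 regions, and byte offsets 0 through 63 fall in region 0.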

Embodiment 2

[0123] Figure 4 is a flowchart of inserting into or modifying a data page and flushing it to disk according to the present invention. The insert/modify and flush flow is described below with reference to Figure 4. First, in step 401, the record is inserted into a free block of the cache page.

[0124] In the embodiment of the present invention, the cache page is scanned from its tail, a free space is located in the free-space area of the cache page, and the record is inserted there.

[0125] At step 402, the UPPER pointer and the corresponding LINP array are adjusted.

[0126] In the embodiment of the present invention, the UPPER pointer is adjusted to point to the head of the last record, whose offset is PTR; the corresponding LINP array entry is adjusted to point to the record.
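Steps 401 and 402 can be sketched as a slotted-page insert. This is an illustrative model only, not the patent's implementation: the class `CachePage`, the header size, the LINP entry size, and the page size are all assumptions, and the LINP array is kept as a Python list rather than being encoded into the page bytes.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

PAGE_SIZE = 8192     # assumed page size in bytes
HEADER_SIZE = 24     # hypothetical size of the fixed page header
LINP_ENTRY_SIZE = 4  # hypothetical size of one LINP entry


@dataclass
class CachePage:
    data: bytearray = field(default_factory=lambda: bytearray(PAGE_SIZE))
    lower: int = HEADER_SIZE  # end of the LINP array; grows toward the tail
    upper: int = PAGE_SIZE    # head of the last record; grows toward the header
    linp: List[Tuple[int, int]] = field(default_factory=list)  # (offset, length)

    def insert(self, record: bytes) -> int:
        """Place a record at the tail end of the free space and return its offset."""
        free = self.upper - self.lower
        if len(record) + LINP_ENTRY_SIZE > free:
            raise ValueError("not enough free space in page")
        ptr = self.upper - len(record)        # offset of the new record head
        self.data[ptr:self.upper] = record    # step 401: copy the record in
        self.upper = ptr                      # step 402: UPPER points at the new head
        self.linp.append((ptr, len(record)))  # step 402: LINP entry points at the record
        self.lower += LINP_ENTRY_SIZE
        return ptr
```

Records fill the page from the tail backwards while the LINP array grows forwards, so the free space is always the single gap between `lower` and `upper`.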

[0127] At step 403, the region in which the inserted record lies is calculated and the page descriptor is modified.

[0128] In the embodiment of the present invention, which area is calculated which...
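Step 403 can be pictured as follows. The text does not show the page descriptor's layout, so this sketch assumes it holds one modified flag per region; the class `PageDescriptor`, the method `mark_modified`, and the 64-byte and 8192-byte sizes are hypothetical.

```python
from typing import List

CACHE_LINE = 64   # assumed region size (one cache line)
PAGE_SIZE = 8192  # assumed page size in bytes


class PageDescriptor:
    """Assumed descriptor layout: one 'modified' flag per cache-line region."""

    def __init__(self, page_size: int = PAGE_SIZE, region_size: int = CACHE_LINE):
        self.region_size = region_size
        self.dirty: List[bool] = [False] * (page_size // region_size)

    def mark_modified(self, offset: int, length: int) -> List[int]:
        """Flag every region overlapped by bytes [offset, offset + length)."""
        if length <= 0:
            return []
        first = offset // self.region_size
        last = (offset + length - 1) // self.region_size
        touched = list(range(first, last + 1))
        for index in touched:
            self.dirty[index] = True
        return touched
```

A record that straddles a region boundary marks both regions, which is why the last byte (`offset + length - 1`) rather than the end offset is used to find the final region.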

Embodiment 3

[0137] Figure 5 shows the architecture of a PM-based database page cache system. As shown in Figure 5, the PM-based database page cache system includes PM memory 501, DRAM memory 502, region division module 503, page description module 504, and disk flush module 505, where

[0138] PM memory 501 is used to store table files.

[0139] In the embodiment of the present invention, the PM memory 501 maps the table file to the DRAM memory 502 through the Persistent Memory Development Kit (PMDK), accepts instructions from the disk flush module 505, and updates the table file.

[0140] DRAM memory 502 stores the database buffer pages and page descriptors, and flushes the modified regions to the PM memory 501 according to instructions from the disk flush module 505.

[0141] Region division module 503 divides the database buffer pages into regions.

[0142] In the embodiment of the present invention, dividing the database buffer page includes: each cache page is divided into a fixed size ar...
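How the modules cooperate when modified regions are flushed from DRAM memory 502 to PM memory 501 can be sketched as below. This is a simplified model under assumptions: both memories are plain `bytearray` objects, the descriptor is a list of per-region flags, and adjacent modified regions are coalesced into one copy, which is a design choice of this sketch and is not stated in the text. On real persistent memory, each copy would also need a persistence call such as libpmem's `pmem_persist`; that is omitted here.

```python
from typing import List, Tuple

CACHE_LINE = 64  # assumed region size (one cache line)


def dirty_runs(dirty: List[bool]) -> List[Tuple[int, int]]:
    """Coalesce adjacent modified regions into half-open (first, end) index runs."""
    runs = []
    start = None
    for i, flag in enumerate(dirty):
        if flag and start is None:
            start = i
        elif not flag and start is not None:
            runs.append((start, i))
            start = None
    if start is not None:
        runs.append((start, len(dirty)))
    return runs


def flush_modified(dram_page: bytearray, pm_page: bytearray,
                   dirty: List[bool], region_size: int = CACHE_LINE) -> int:
    """Copy only the modified regions to the PM copy; return the bytes written."""
    written = 0
    for first, end in dirty_runs(dirty):
        lo, hi = first * region_size, end * region_size
        pm_page[lo:hi] = dram_page[lo:hi]
        written += hi - lo
    for i in range(len(dirty)):
        dirty[i] = False  # the page is clean again after the flush
    return written
```

In this model a page with two adjacent modified regions costs 128 bytes of writes instead of the whole page, which is the reduction in write amplification that region-level tracking aims for.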
