Model compression method based on layer number sampling and deep neural network model

A model compression method and a neural network model, applied in the fields of biological neural network models, neural architectures, and neural learning methods. The technology addresses problems such as insufficient computing speed, which limits the popularization and application of neural network technology and is unfavorable for smart home application scenarios. It achieves the effects of optimizing the configuration and speeding up operation.


Examples

Embodiment 1

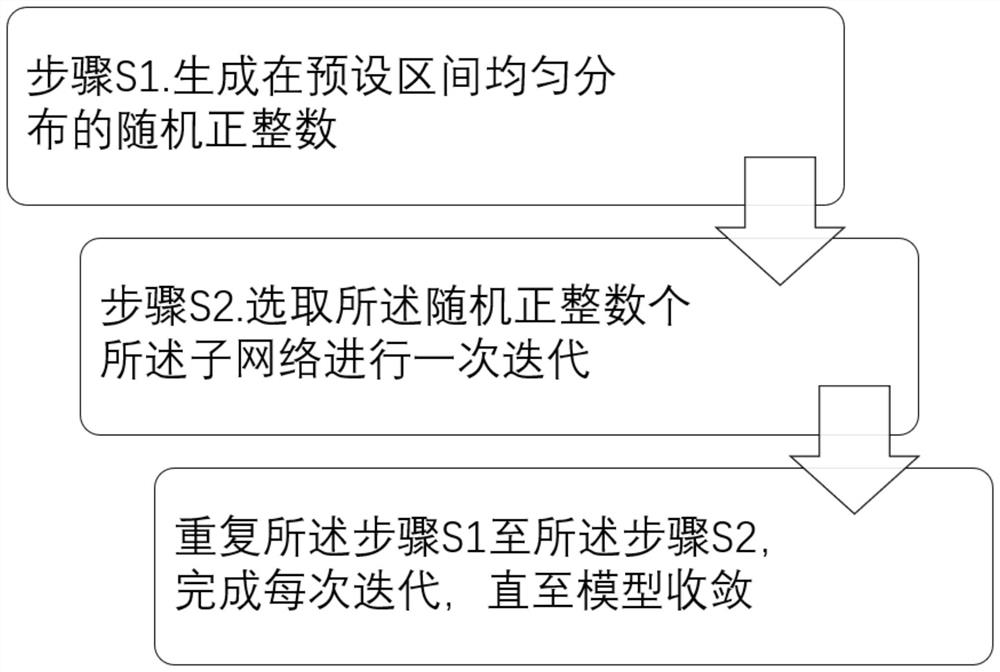

[0035] Embodiment 1 describes the model compression method of the first aspect of the present invention as applied during training. As shown in Figure 2, for a neural network built from several cascaded sub-networks (Conformer layers) with the same structure, the method includes: Step S1, generating a random positive integer uniformly distributed in a preset interval, where the upper bound of the interval is not greater than the total number of sub-networks; Step S2, selecting that number of sub-networks to perform one iteration. Steps S1 and S2 are repeated for each iteration until the model converges.
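Steps S1 and S2 can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the patented implementation: the names `sample_depth`, `forward_prefix`, `train_with_depth_sampling`, `train_step`, and `has_converged` are hypothetical, each layer is modelled as a simple callable rather than a real Conformer layer, and taking the first `depth` sub-networks during training is an assumption, since the text only says that the sampled number of sub-networks is selected.

```python
import random
from typing import Callable, Iterable, List, Sequence

Layer = Callable[[float], float]


def sample_depth(low: int, high: int, total_layers: int, rng: random.Random) -> int:
    """Step S1: draw a positive integer uniformly from the preset interval [low, high]."""
    if not (1 <= low <= high <= total_layers):
        raise ValueError("require 1 <= low <= high <= total_layers")
    return rng.randint(low, high)  # randint includes both endpoints


def forward_prefix(layers: Sequence[Layer], x: float, depth: int) -> float:
    """Run the input through the first `depth` cascaded sub-networks (assumed selection rule)."""
    for layer in layers[:depth]:
        x = layer(x)
    return x


def train_with_depth_sampling(
    layers: Sequence[Layer],
    batches: Iterable[object],
    low: int,
    high: int,
    train_step: Callable[[int, object], float],
    has_converged: Callable[[float], bool],
    rng: random.Random,
) -> List[int]:
    """Repeat S1 and S2 once per iteration and return the depths that were sampled."""
    depths: List[int] = []
    for batch in batches:
        depth = sample_depth(low, high, len(layers), rng)  # Step S1
        loss = train_step(depth, batch)                    # Step S2: one iteration at this depth
        depths.append(depth)
        if has_converged(loss):                            # hypothetical convergence test
            break
    return depths
```

In a real setup, `train_step` would call something like `forward_prefix` on the batch, compute a loss, and update the parameters; the convergence criterion is left to the caller because the text does not specify one.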

[0036] The cascaded sub-networks described in this embodiment share the same set of training parameters; that is, the neural network in this embodiment is based on a parameter sharing strategy. In some other practical applications, the st...
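The parameter sharing strategy can be illustrated with a toy cascade in which every position refers to the same parameter object. This is only a sketch: `SharedAffine`, `build_shared_cascade`, and `run` are hypothetical names, and a scalar affine map stands in for a Conformer layer.

```python
from typing import List, Sequence


class SharedAffine:
    """Toy sub-network y = weight * x + bias, standing in for one Conformer layer."""

    def __init__(self, weight: float, bias: float) -> None:
        self.weight = weight
        self.bias = bias

    def __call__(self, x: float) -> float:
        return self.weight * x + self.bias


def build_shared_cascade(shared: SharedAffine, num_layers: int) -> List[SharedAffine]:
    """Cascade `num_layers` sub-networks that all reuse one parameter set."""
    if num_layers < 1:
        raise ValueError("num_layers must be a positive integer")
    # Every list entry is the same object, so an update to it affects all depths.
    return [shared] * num_layers


def run(cascade: Sequence[SharedAffine], x: float, depth: int) -> float:
    """Apply the first `depth` sub-networks of the cascade to x."""
    for layer in cascade[:depth]:
        x = layer(x)
    return x
```

Because the parameters are shared, the stored model size in this sketch does not grow with the number of cascaded sub-networks; only the amount of computation does.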

Embodiment 2

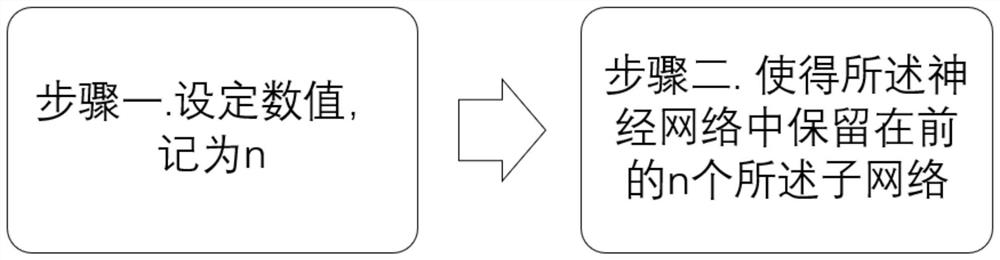

[0043] As shown in Figure 3, Embodiment 2 describes the model compression method based on layer number sampling, provided by the second aspect of the present invention, as applied during inference. It includes: Step 1, setting a value n, where n is a positive integer less than the total number of sub-networks; Step 2, keeping the first n sub-networks in the neural network. Setting the value in Step 1 includes evaluating the performance of the neural network model and determining the ideal value of n.
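The two inference-time steps can be sketched as follows. The text does not say how the "ideal" n is determined, so the rule used here (the smallest n whose score is within a tolerance of the best score) is an assumption, as are the names `choose_depth`, `truncate`, `evaluate`, and `tolerance`.

```python
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")


def choose_depth(total_layers: int, evaluate: Callable[[int], float], tolerance: float) -> int:
    """Step 1: pick n with 1 <= n < total_layers by evaluating model performance.

    `evaluate(n)` is a hypothetical callback returning a score (higher is better)
    for the model truncated to its first n sub-networks.
    """
    if total_layers < 2:
        raise ValueError("need at least two sub-networks so that n < total_layers")
    candidates = range(1, total_layers)  # n is positive and less than the total
    scores = {n: evaluate(n) for n in candidates}
    best = max(scores.values())
    # Assumed criterion: smallest depth that stays within `tolerance` of the best score.
    return min(n for n in candidates if scores[n] >= best - tolerance)


def truncate(layers: Sequence[T], n: int) -> List[T]:
    """Step 2: keep only the first n sub-networks."""
    if not (1 <= n < len(layers)):
        raise ValueError("require 1 <= n < number of sub-networks")
    return list(layers[:n])
```

A larger `tolerance` trades accuracy for speed by allowing a shallower model; the appropriate value depends on the application and is not given in the text.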

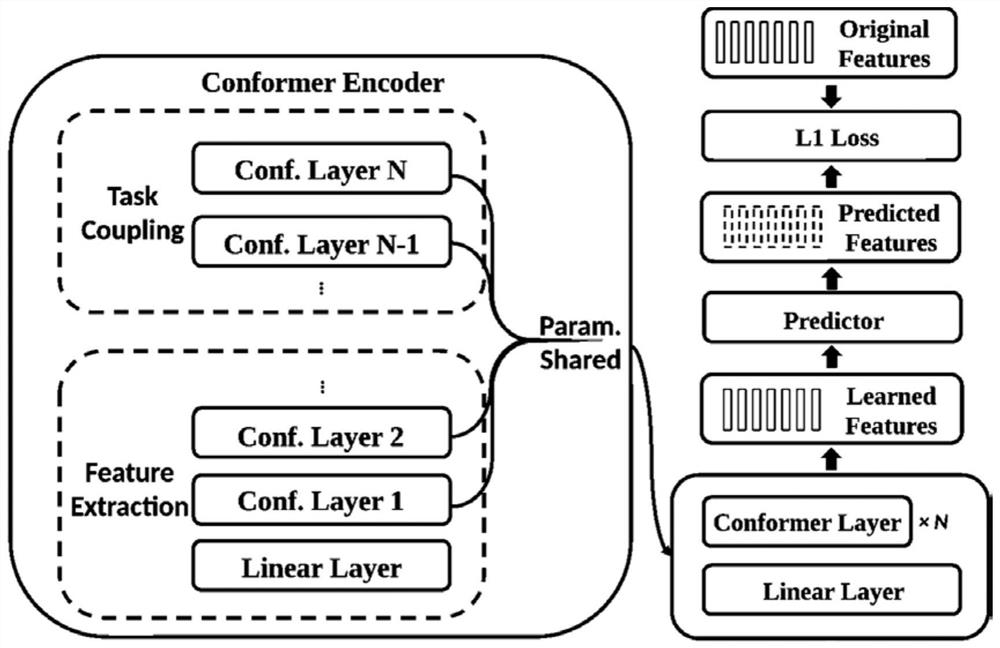

[0044] The neural network of this embodiment is not sensitive to pruning of the number of layers. In self-supervised learning tasks based on Transformer architectures, not all layers are used to encode context and capture high-level semantic information. The last few sub-network layers instead try to transform the hidden representations between layers into a space where the original features are more predictable. This is an additional layer coupling phenomenon. The ca...

