Memory device search system and method

Embodiment Construction

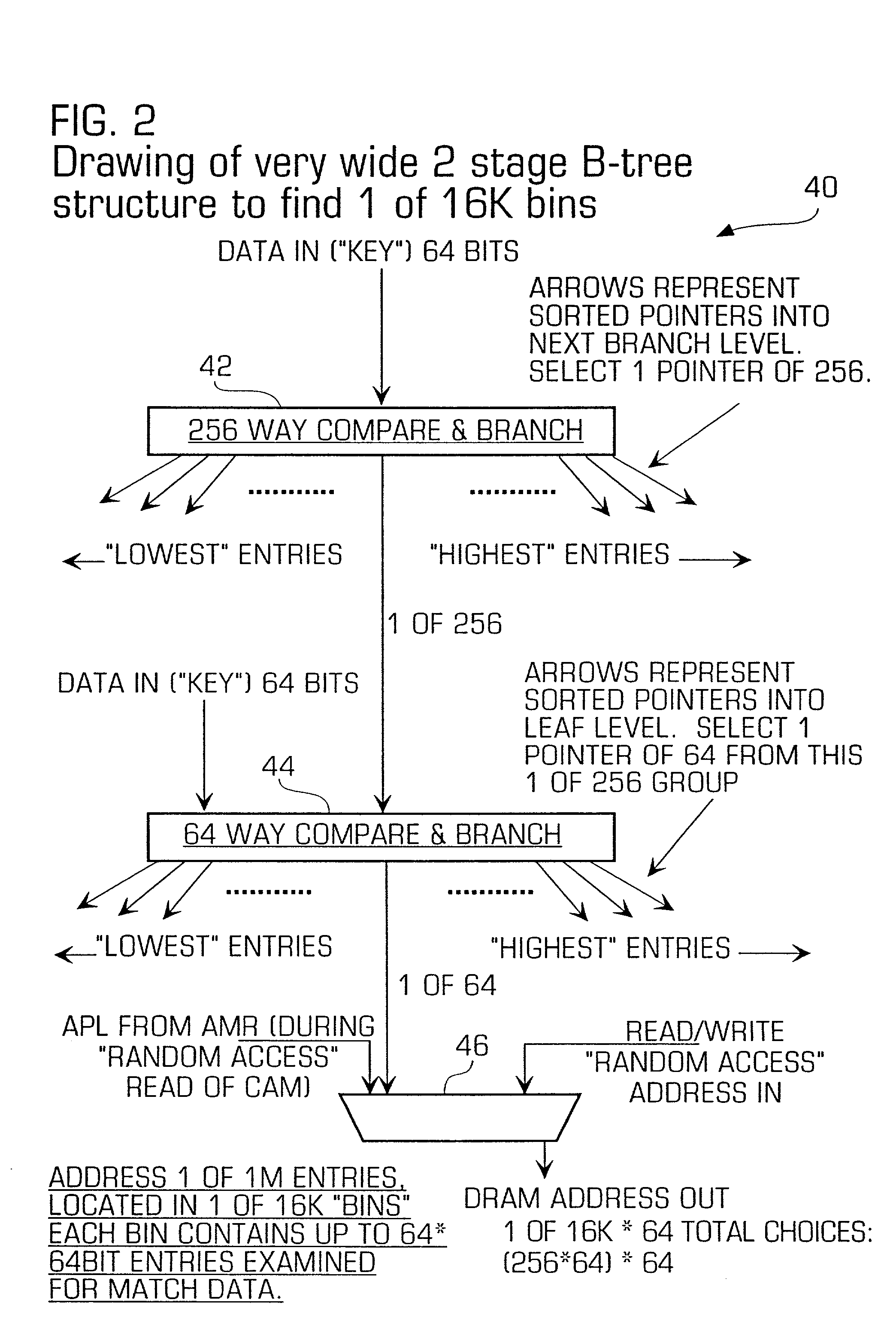

[0025] The invention is particularly applicable to a 64 Mbit content addressable memory (CAM) device that uses 128 Mbit of DRAM, and it is in this context that the invention will be described. It will be appreciated, however, that the system and method in accordance with the invention have greater utility; for example, they may be applied to other types of memory devices that use other typical memory blocks, such as SRAM or embedded DRAM. In addition, the invention may be implemented on a single silicon die or as several dies in a multi-chip package. It will also be appreciated that this architecture achieves the same advantages over traditional CAM in subsequent memory density generations. The preferred embodiment of the invention, implemented as a CAM, will now be described.
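For readers unfamiliar with the term, the following is a minimal sketch of what "content addressable" means in general: the device is given a data word and returns the address at which that word is stored. It is not the search architecture of the invention. The class name `SimpleCAM`, its methods, the depth of 8 entries, and the lowest-address-wins rule are all assumptions chosen for illustration.

```python
from typing import List, Optional


class SimpleCAM:
    """Toy model of a content addressable memory (hypothetical, for illustration)."""

    def __init__(self, depth: int) -> None:
        # One slot per address; None marks an empty entry.
        self.entries: List[Optional[int]] = [None] * depth

    def write(self, address: int, word: int) -> None:
        # Store a data word at a given address, as in an ordinary memory.
        self.entries[address] = word

    def search(self, key: int) -> Optional[int]:
        # A hardware CAM compares the key against all entries at once;
        # this sequential loop is only a software stand-in.
        # Assumption: the lowest matching address is reported.
        for address, word in enumerate(self.entries):
            if word == key:
                return address
        return None  # no entry matches the key


cam = SimpleCAM(depth=8)
cam.write(3, 0xBEEF)
cam.write(5, 0xCAFE)

hit = cam.search(0xCAFE)   # address holding 0xCAFE
miss = cam.search(0x1234)  # value was never written
print(hit, miss)
```

The point of the sketch is the direction of the lookup: a conventional memory maps an address to data, whereas a CAM maps data to an address.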

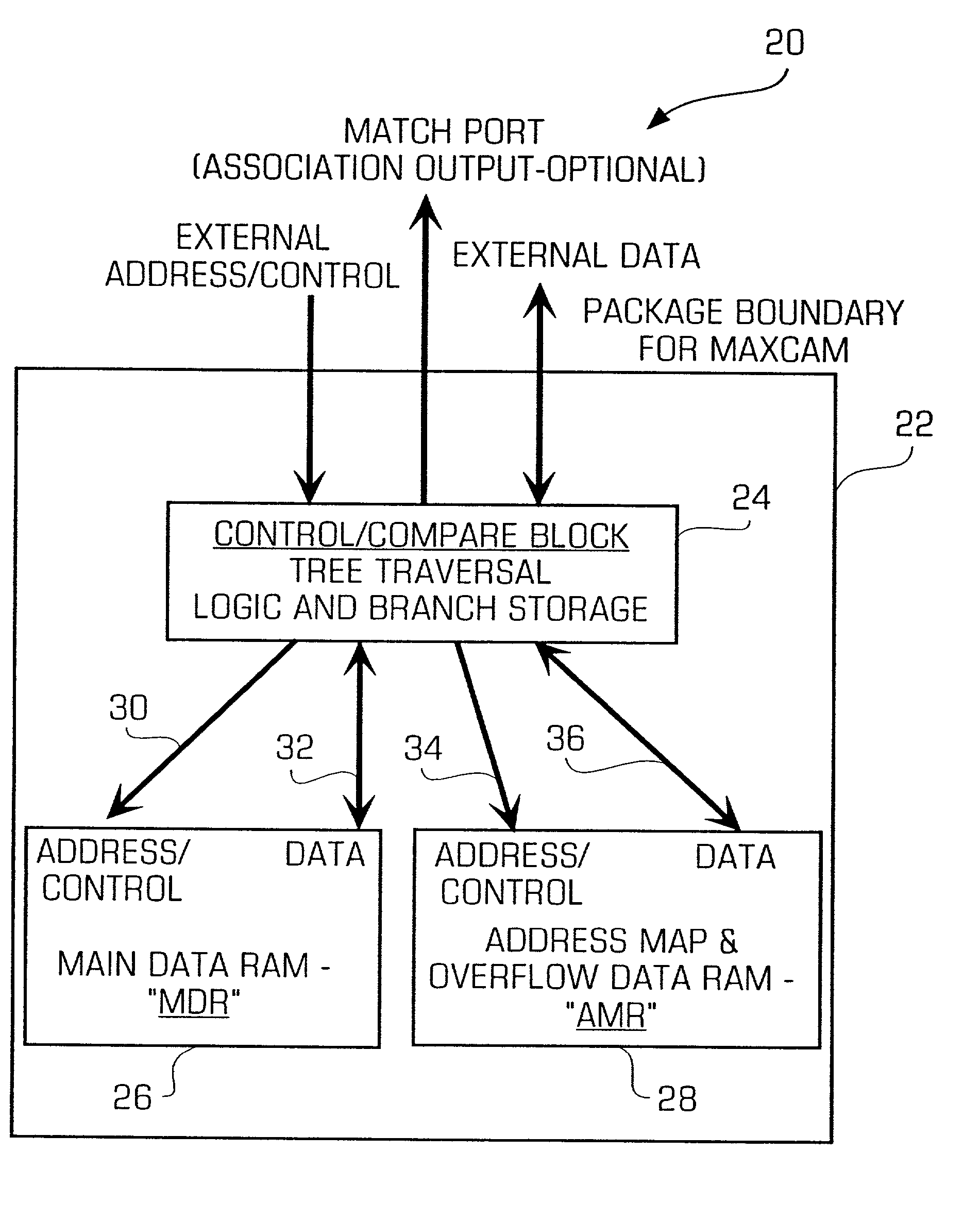

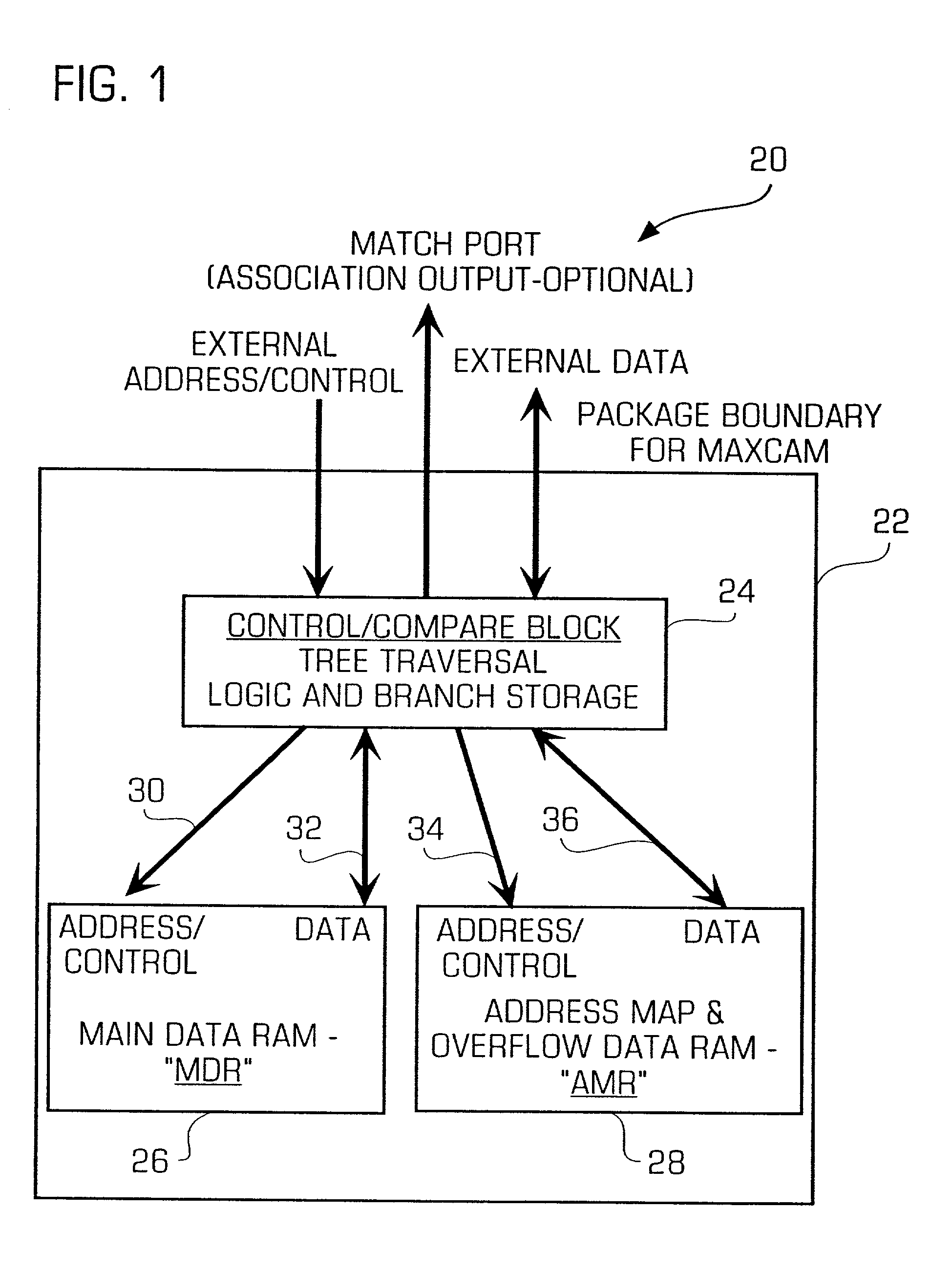

[0026] FIG. 1 is a block diagram illustrating a preferred embodiment of a single die content addressable memory (CAM) 20 in accordance with the invention that implements the search architecture in accordance with the invention. The CA...
