Method and apparatus for visual background subtraction with one or more preprocessing modules

A technology using preprocessing modules, applied in the field of visual background subtraction techniques, that can solve problems such as image jitter and changes in camera response, which adversely affect the efficacy of this class of techniques.

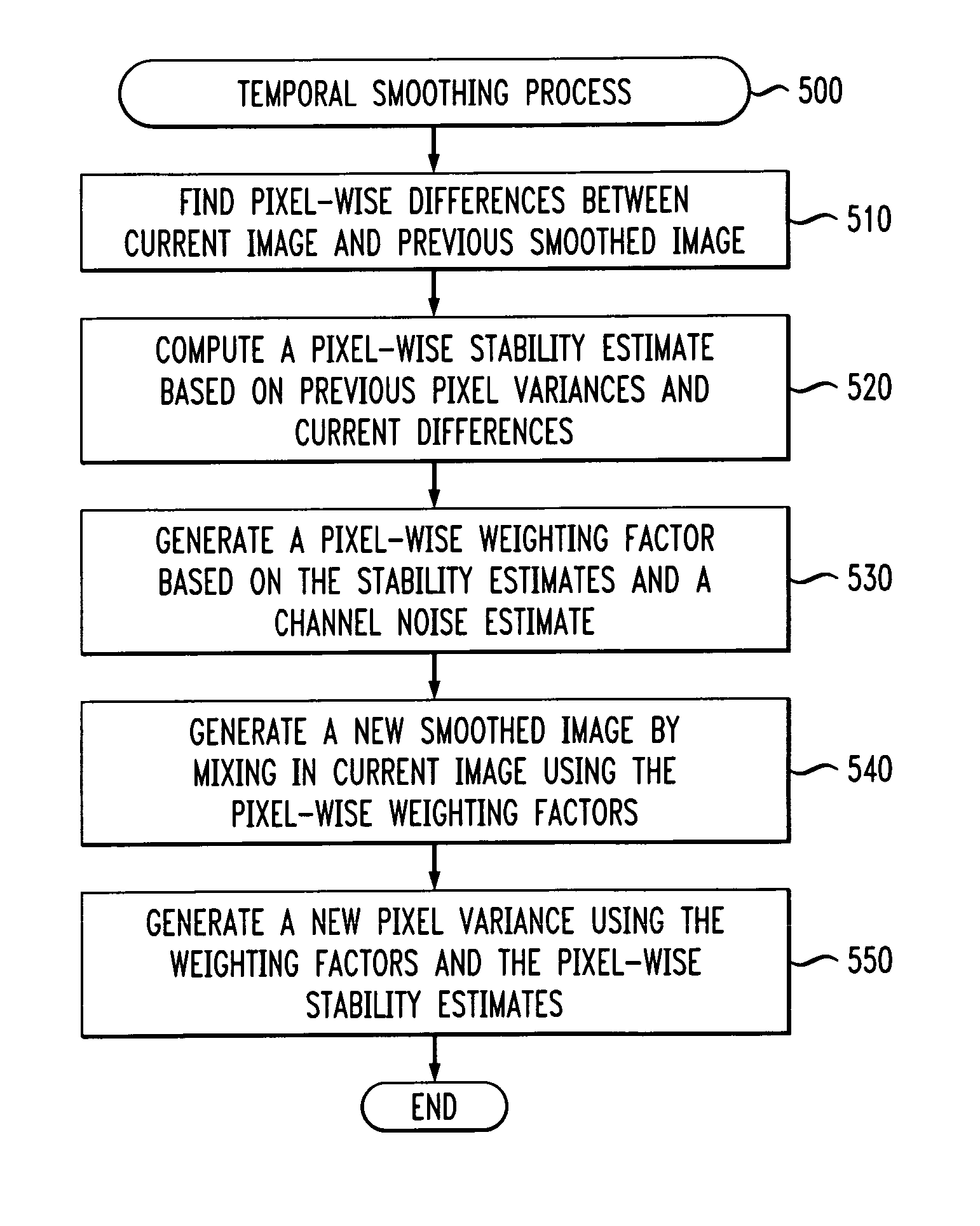

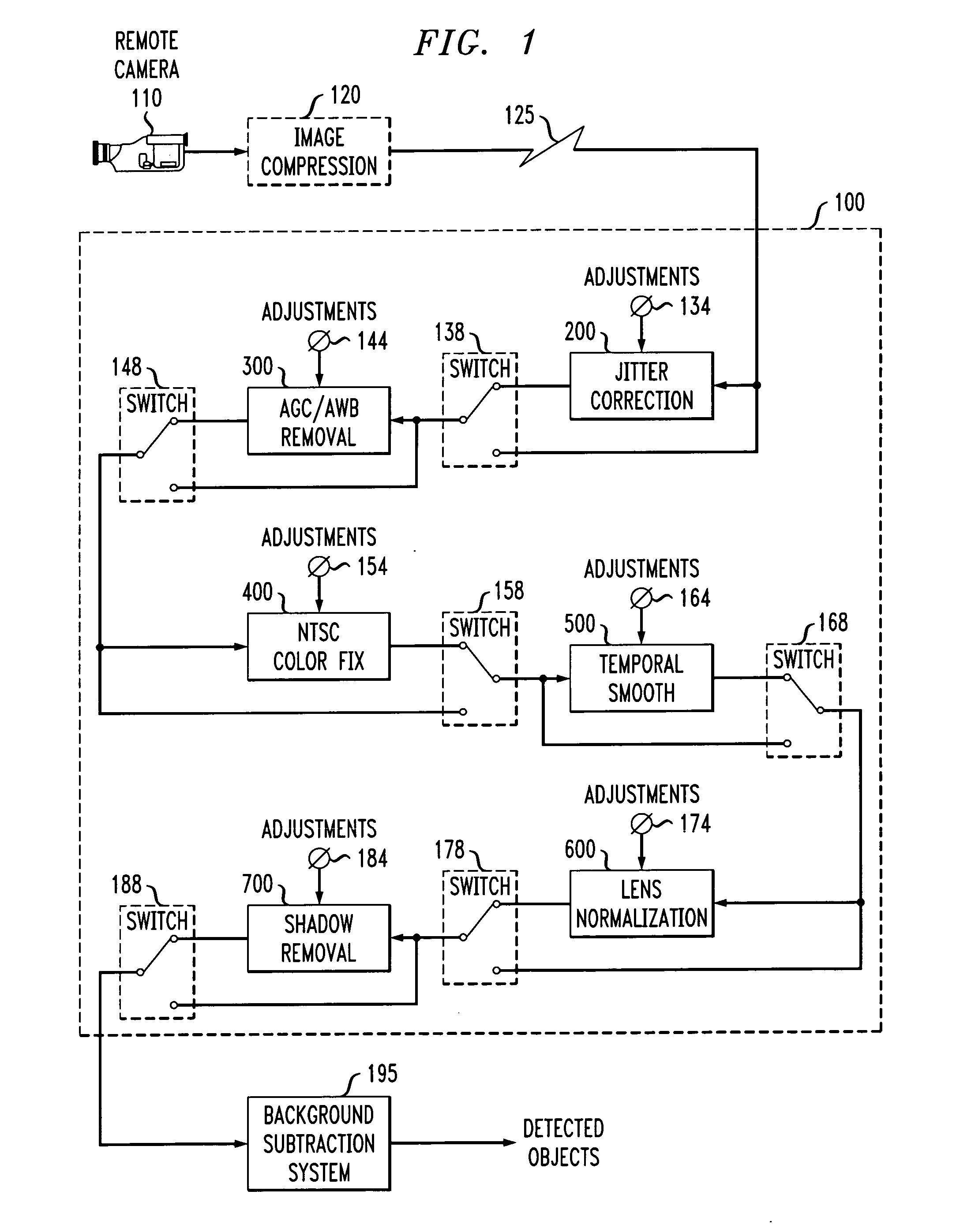

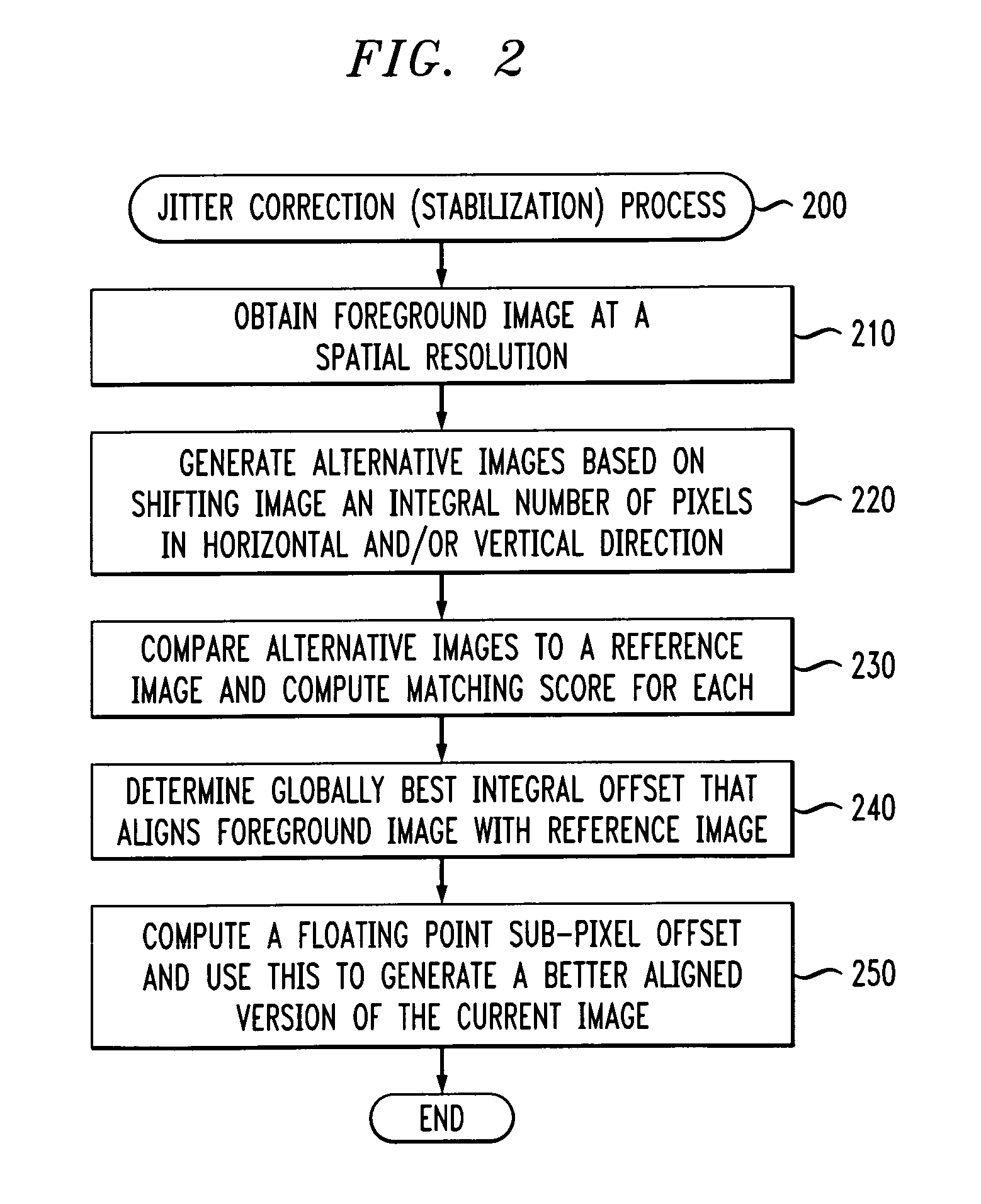

Embodiment Construction

[0024] The present invention provides methods and apparatus for visual background subtraction with one or more preprocessing modules. An input video stream is passed through one or more switchable, reconfigurable image correction units before being sent on to a background subtraction module or another visual analysis system. Depending on the environmental conditions, one or more modules can be selectively switched on or off for individual camera feeds. For instance, an indoor camera generally does not require wind correction. In addition, for a single camera, some preprocessors might be invoked only at certain times. For example, at night the color response of most cameras is poor, and they revert to essentially monochrome images. Thus, during the day, the signal from such a camera might be processed to ameliorate the effect of chroma filtering (e.g., moving rainbow stripes at sharp edges), while this module could be disabled at night.
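The per-camera and per-time-of-day switching described in [0024] can be sketched as a chain of gated modules placed in front of the analysis stage. This is a minimal Python sketch under stated assumptions, not the patented implementation. The names `Preprocessor`, `CameraPipeline`, `correct_wind_jitter`, and `correct_chroma_filtering`, the 06:00 to 18:00 daylight window, and the frame representation are all hypothetical. The two correction functions are identity placeholders that only mark where real wind and chroma correction would run.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, List

# Hypothetical frame type: rows of (r, g, b) pixel tuples.
Frame = List[List[tuple]]


def always(hour: int) -> bool:
    """Time-of-day gate for modules that run at every hour."""
    return True


def daytime_only(hour: int) -> bool:
    """Assumed daylight window (06:00-17:59); a real system might use a light sensor instead."""
    return 6 <= hour < 18


def correct_wind_jitter(frame: Frame) -> Frame:
    # Hypothetical placeholder: a real module would register the frame to a reference to cancel shake.
    return frame


def correct_chroma_filtering(frame: Frame) -> Frame:
    # Hypothetical placeholder: a real module would suppress color fringes at sharp edges.
    return frame


@dataclass
class Preprocessor:
    """A switchable image-correction unit placed in front of the analysis stage."""
    name: str
    apply: Callable[[Frame], Frame]
    enabled: bool = True                       # manual on/off switch for this camera feed
    active_at: Callable[[int], bool] = always  # time-of-day gate


@dataclass
class CameraPipeline:
    camera_id: str
    preprocessors: List[Preprocessor] = field(default_factory=list)

    def set_enabled(self, name: str, enabled: bool) -> None:
        """Switch a named module on or off for this camera only."""
        for p in self.preprocessors:
            if p.name == name:
                p.enabled = enabled
                return
        raise KeyError(name)

    def active_modules(self, hour: int) -> List[str]:
        """Names of the modules that would run on a frame captured at `hour`."""
        return [p.name for p in self.preprocessors if p.enabled and p.active_at(hour)]

    def process(self, frame: Frame, hour: int, analyzer: Callable[[Frame], Any]) -> Any:
        """Run the active modules in order, then hand the corrected frame to the analyzer."""
        for p in self.preprocessors:
            if p.enabled and p.active_at(hour):
                frame = p.apply(frame)
        return analyzer(frame)  # e.g. a background subtraction module


# Outdoor camera: wind correction always on, chroma correction only during the day.
outdoor = CameraPipeline("outdoor-1", [
    Preprocessor("wind_correction", correct_wind_jitter),
    Preprocessor("chroma_correction", correct_chroma_filtering, active_at=daytime_only),
])

# Indoor camera: no wind correction module is configured at all.
indoor = CameraPipeline("indoor-1", [
    Preprocessor("chroma_correction", correct_chroma_filtering, active_at=daytime_only),
])
```

With this configuration, `outdoor.active_modules(12)` returns `['wind_correction', 'chroma_correction']`, while `outdoor.active_modules(22)` returns only `['wind_correction']`. Keeping each module's manual switch separate from its time-of-day gate lets an operator disable a module for one camera without changing its schedule.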

[0025] The present invention copes with ea...
