System and method for occluding contour detection

An occluding contour detection technology, applied in the field of systems and methods for occluding contour detection. It addresses two problems: pixel-wise semantic segmentation that fails to identify important objects in the input image, and the inevitable downsampling of the feature maps in the last few layers of the network. The stated effects are improved pixel-wise semantic segmentation, improved practical usability, and effectively enlarging the network.
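The downsampling problem named in the summary can be illustrated with simple arithmetic. The sketch below is not taken from the patent: the helper name `feature_map_size`, the 512-pixel input, and the five stride-2 stages are all illustrative assumptions, chosen only to show how quickly spatial resolution shrinks in a typical convolutional backbone.

```python
def feature_map_size(input_size, strides):
    """Return the spatial size after each strided stage.

    Assumes 'same'-style padding, so each stage maps a size n to ceil(n / stride).
    This is an illustrative model, not the network described in the patent.
    """
    sizes = []
    size = input_size
    for stride in strides:
        size = -(-size // stride)  # ceiling division
        sizes.append(size)
    return sizes


# Hypothetical example: a 512-pixel-wide input passed through five stride-2 stages.
sizes = feature_map_size(512, [2, 2, 2, 2, 2])
print(sizes)  # [256, 128, 64, 32, 16]
```

Under these assumptions the last feature map is 32 times smaller than the input along each side, so a pixel-wise label map has to be recovered from a much coarser grid, which is where small or thin objects tend to be lost.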

Embodiment Construction

[0022] In the following description, for purposes of explanation, numerous specific details are set forth in order to provide a thorough understanding of the various embodiments. It will be evident, however, to one of ordinary skill in the art that the various embodiments may be practiced without these specific details.

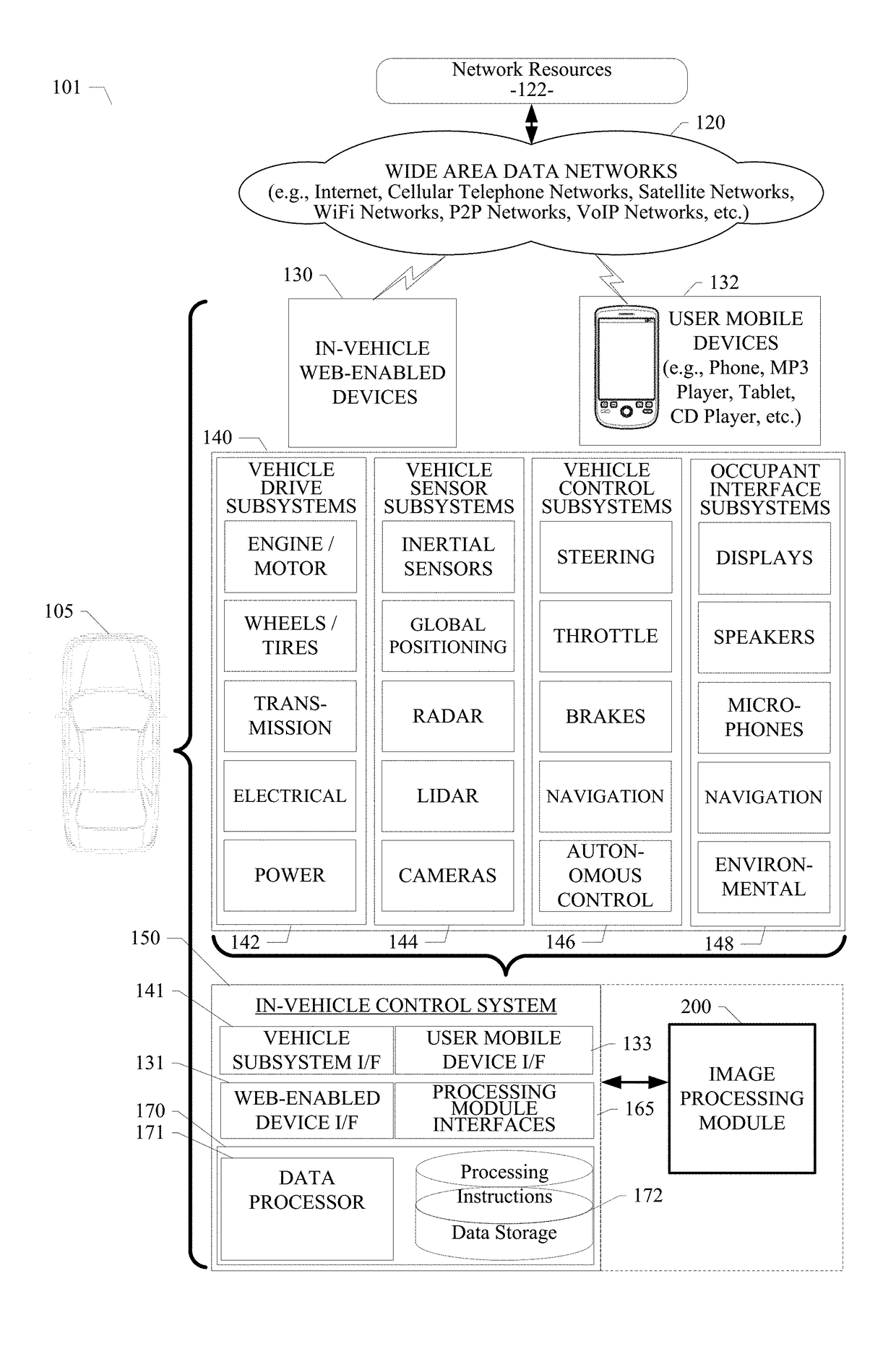

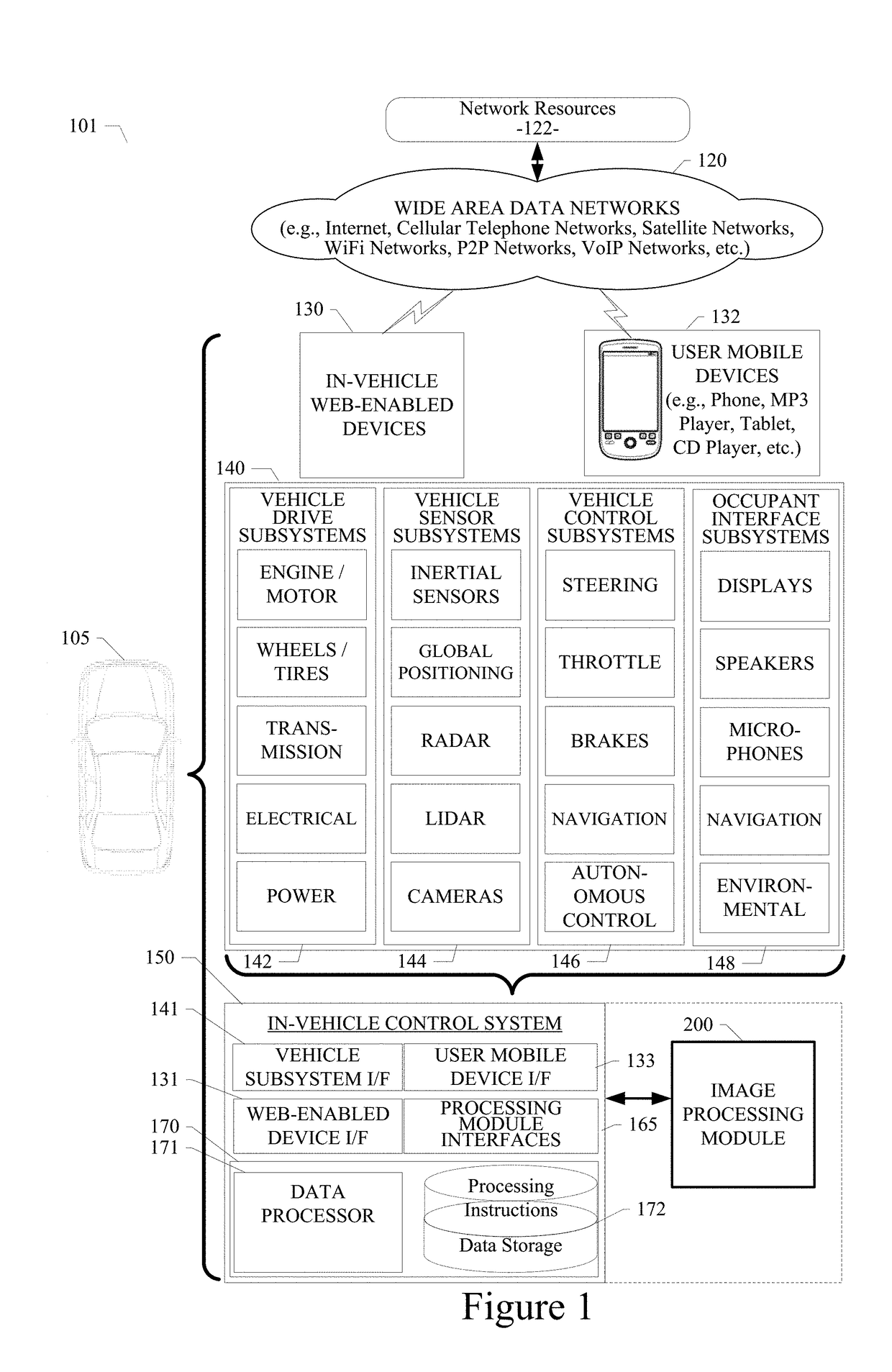

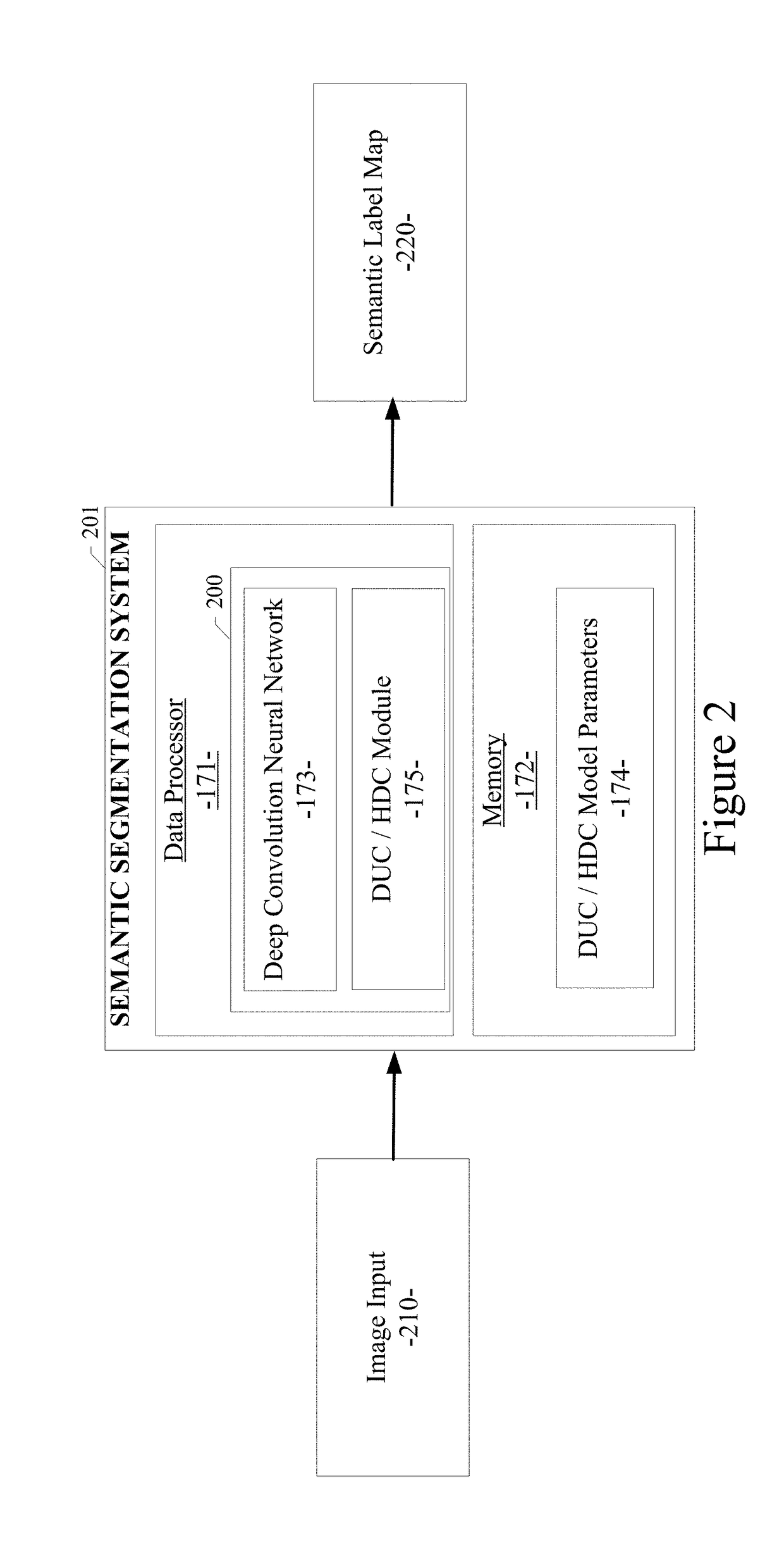

[0023] A system and method for occluding contour detection are described herein in various example embodiments. An example embodiment disclosed herein can be used in the context of an in-vehicle control system 150 in a vehicle ecosystem 101. In one example embodiment, an in-vehicle control system 150 with an image processing module 200 resident in a vehicle 105 can be configured like the architecture and ecosystem 101 illustrated in FIG. 1. However, it will be apparent to those of ordinary skill in the art that the image processing module 200 described and claimed herein can be implemented, configured, and used in a variety of other applications and system...
