Virtual memory management method and virtual memory management device for mass data processing

A technology in the field of big data processing and virtual memory, applied to program control devices, memory addressing/allocation/relocation, and software simulation/interpretation/emulation. It addresses problems such as inefficient virtual memory scheduling, inability to process, and frequent page transfers, with the aim of achieving high accuracy and reducing thrashing.

Embodiment Construction

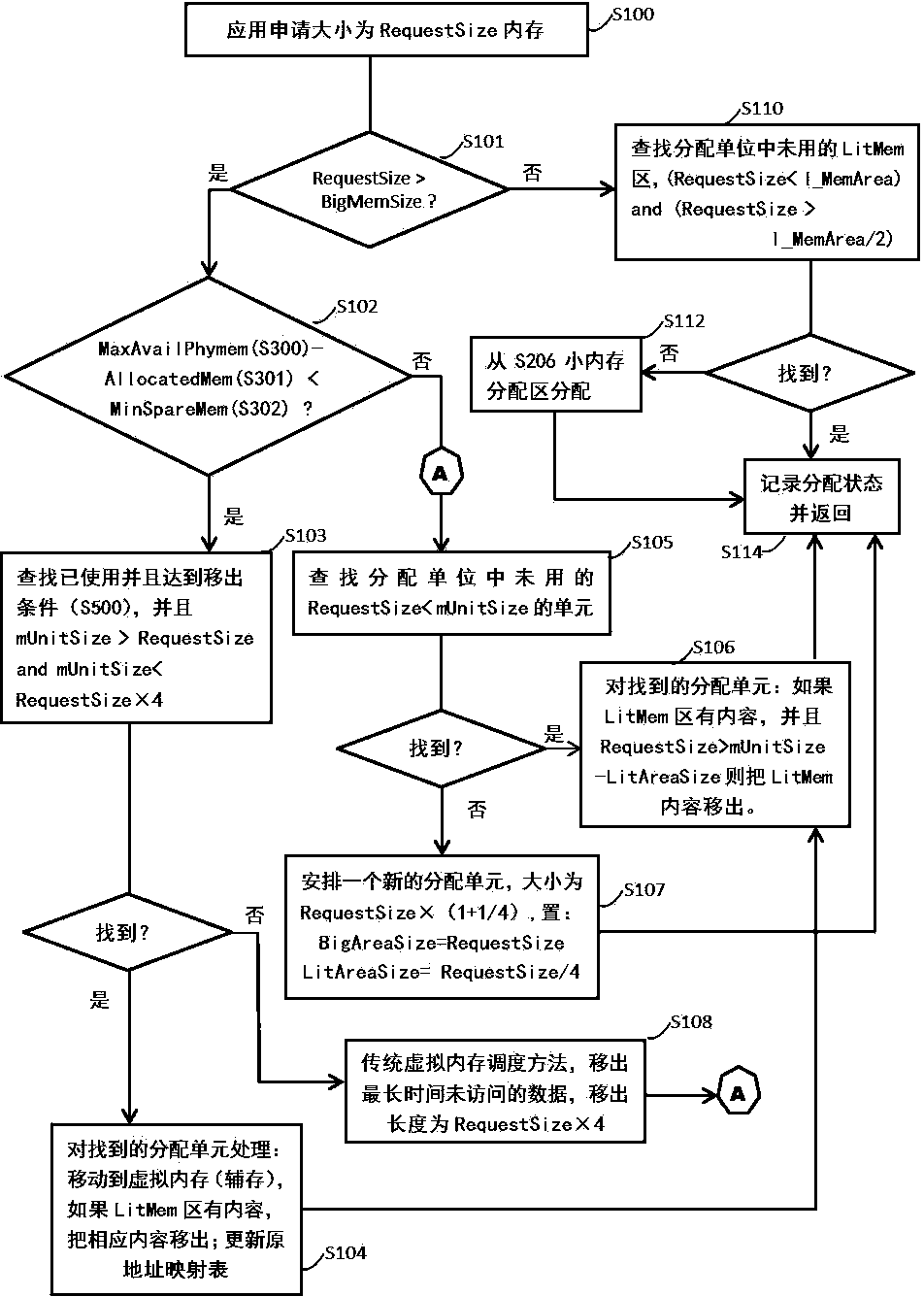

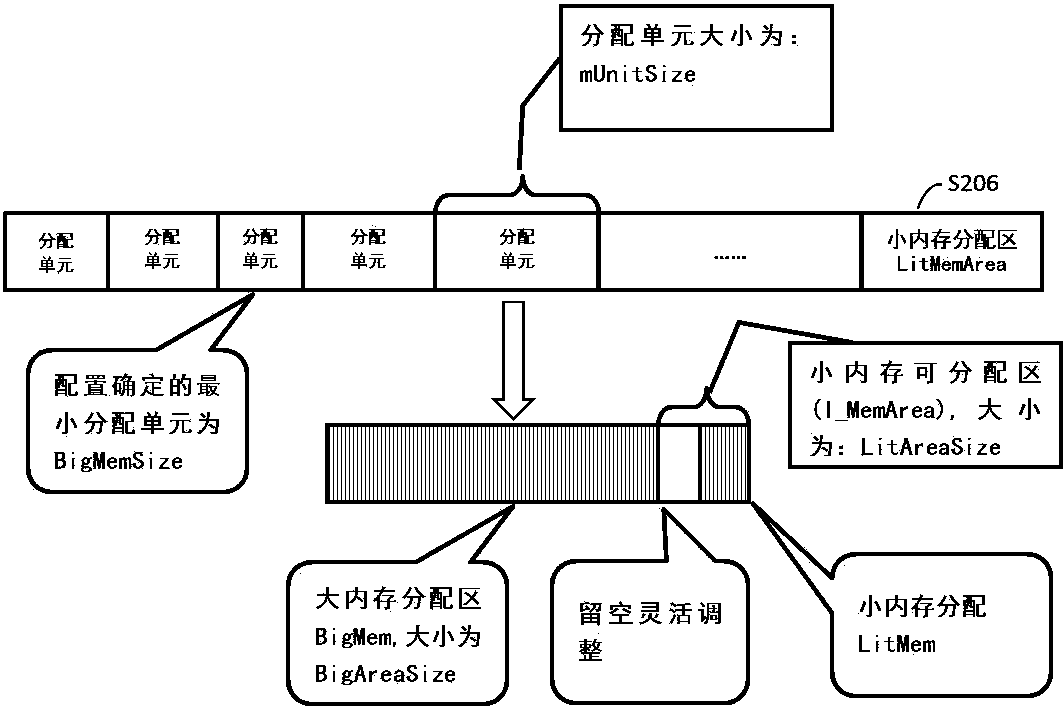

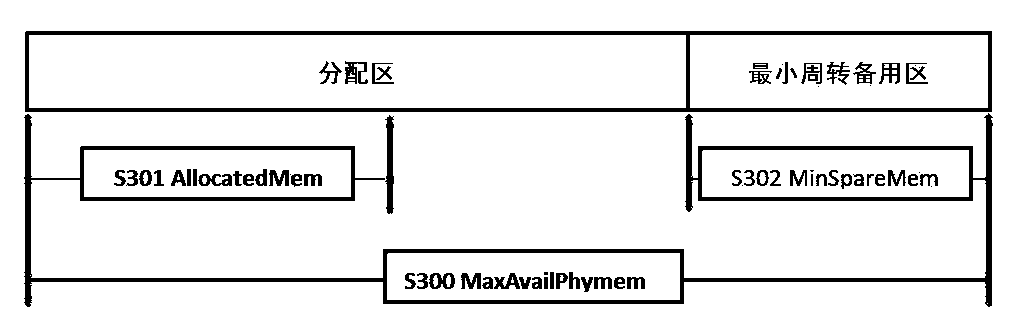

[0047] The technical solutions of the embodiments of the present invention will be described in detail and completely below in conjunction with the accompanying drawings.

[0048] The invention provides a virtual memory scheduling management method that includes at least: a management method for memory allocation units, a matching and replacement method for virtual memory scheduling, and a comprehensive access-association index for memory allocation units. The main workflow is described below.

[0049] As shown in Figure 1, the memory allocation process with virtual scheduling management in the virtual memory management method of the present invention is as follows:

[0050] S100: when a data processing application requests memory of size RequestSize, the process is entered, including:

[0051] S101: determine whether the requested memory size falls into the large-memory category; if it does, execute S102, otherwise execute S110. In the present invention, the large memory refers...
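
The branch in S101 can be sketched as a small dispatch function. This is only an illustrative sketch, not the patented implementation: the definition of "large memory" is truncated in the text above, so the cutoff `LARGE_MEMORY_THRESHOLD`, the "at or above" comparison, and the names `NextStep` and `s101_classify` are all hypothetical. Only `RequestSize` (here `request_size`) and the step labels S102 and S110 come from the description.

```python
from enum import Enum


class NextStep(Enum):
    """Step that follows S101 (labels taken from the description)."""
    S102 = "S102"  # taken when the request counts as large memory
    S110 = "S110"  # taken otherwise


# Hypothetical cutoff in bytes. The source's definition of "large memory"
# is truncated, so this value is a placeholder for illustration only.
LARGE_MEMORY_THRESHOLD = 64 * 1024 * 1024


def s101_classify(request_size: int,
                  threshold: int = LARGE_MEMORY_THRESHOLD) -> NextStep:
    """S101: choose the next step for a request of request_size bytes."""
    if request_size <= 0:
        raise ValueError("request_size must be positive")
    # Assumption: "large" means at or above the threshold.
    if request_size >= threshold:
        return NextStep.S102
    return NextStep.S110
```

Passing the threshold as a parameter keeps the sketch independent of whatever definition of large memory the full description gives.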
