Computer vision processing method and device for processing equipment with low computing power

A technology in the field of computer vision, relating to processing equipment with low computing power. It addresses problems such as slow neural network computation and poor real-time performance on low-computing-power processing equipment, and achieves the effects of reducing the amount of data and the memory overhead and increasing the speed of convolution operations.


Examples

Embodiment 1

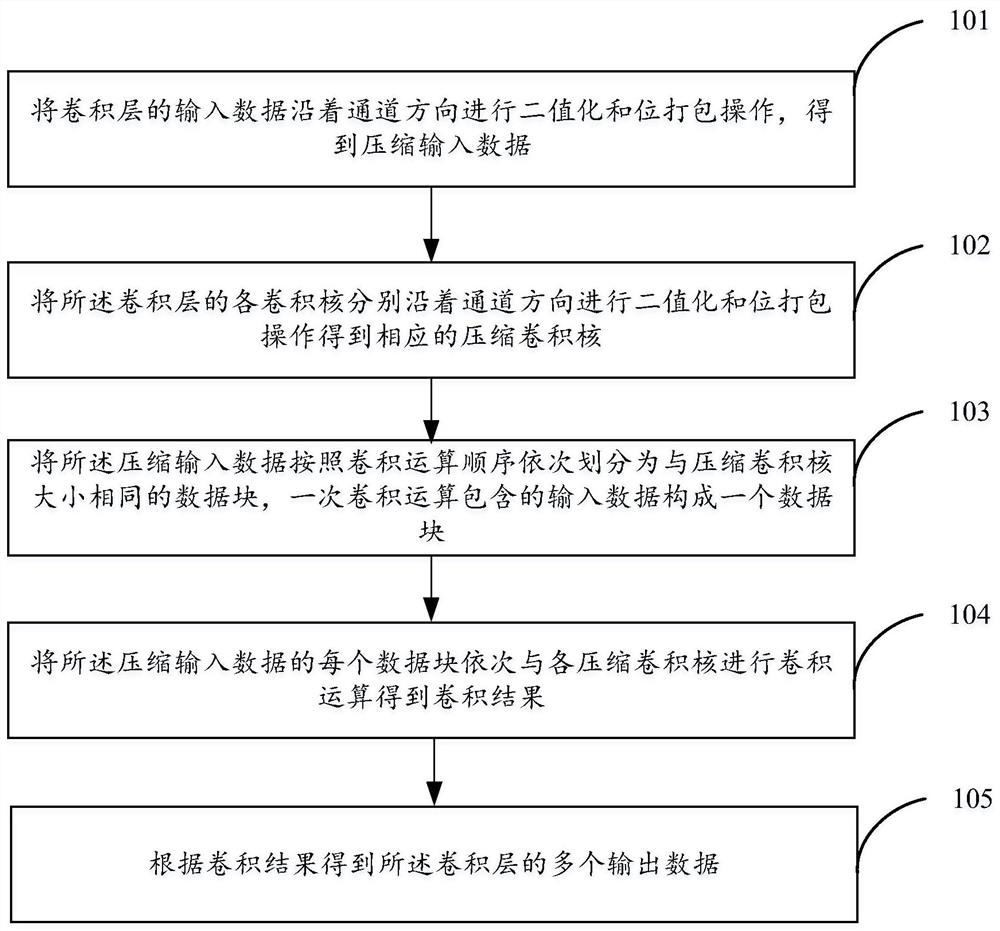

[0037] Figure 1 is a flowchart of the neural network optimization method provided by an embodiment of the present invention. In this embodiment, the convolutional layer of the neural network is processed, and the method includes the following steps:

[0038] Step 101: Perform binarization and bit packing operations on the input data of the convolutional layer along the channel direction to obtain compressed input data.

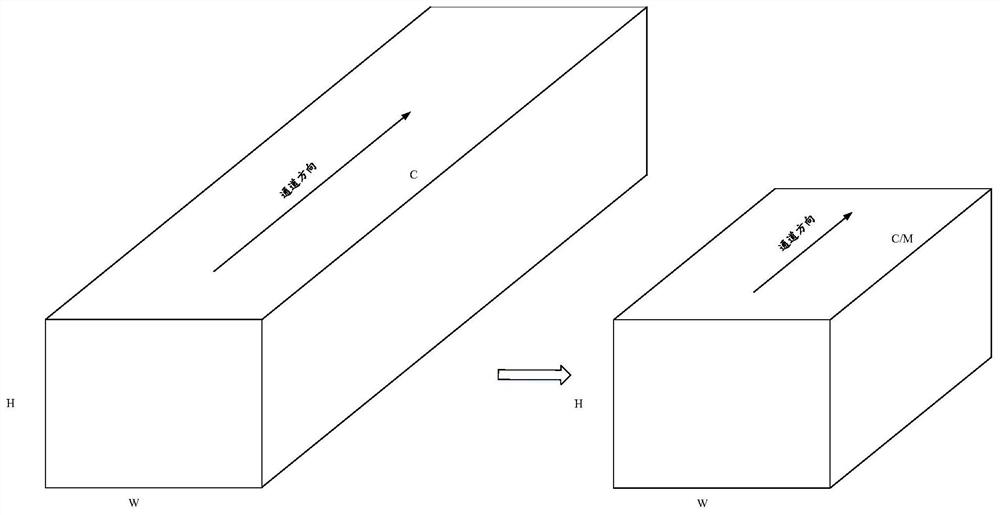

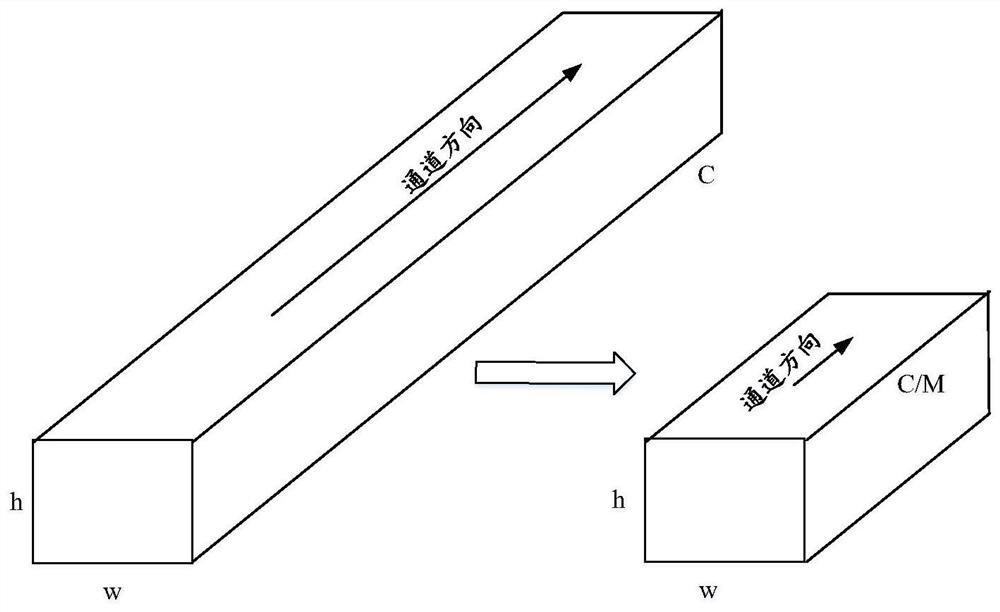

[0039] The input data of a convolutional layer is generally three-dimensional, described by its height, width, and number of channels. The number of channels is usually large, generally a multiple of 32. Figure 2 is a schematic diagram of the input data and the corresponding compressed input data, where H denotes the height of the input data, W its width, and C its number of channels; the height and width of the compressed input data are not cha...
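The packing in Step 101 can be illustrated with a short Python sketch. It is not the patent's implementation: the function name `binarize_and_pack`, the sign rule (values >= 0 become bit 1, negative values become bit 0), and the bit order inside each 32-bit word (channel c stored in bit c % 32 of word c // 32) are assumptions chosen for illustration. Under these assumptions an H × W × C input becomes an H × W × (C/32) array of words, so each group of 32 float32 channel values (128 bytes) shrinks to one 4-byte word.

```python
# Hypothetical sketch: binarize an H x W x C tensor and pack it along channels.
# Assumptions (not from the source): sign binarization (x >= 0 -> bit 1,
# x < 0 -> bit 0), 32-bit words, channel c stored in bit (c % 32) of word c // 32.

WORD_BITS = 32


def binarize_and_pack(data, word_bits=WORD_BITS):
    """Return an H x W x (C // word_bits) nested list of packed ints."""
    packed = []
    for row in data:                      # iterate over height
        packed_row = []
        for pixel in row:                 # iterate over width; pixel holds C values
            channels = len(pixel)
            if channels % word_bits != 0:
                raise ValueError("channel count must be a multiple of word_bits")
            words = []
            for start in range(0, channels, word_bits):
                word = 0
                for bit, value in enumerate(pixel[start:start + word_bits]):
                    if value >= 0:        # binarize: non-negative -> 1
                        word |= 1 << bit
                words.append(word)
            packed_row.append(words)
        packed.append(packed_row)
    return packed


if __name__ == "__main__":
    # 1 x 2 x 64 input: 64 channels pack into 2 words per pixel.
    x = [[[1.0] * 64, [-1.0] * 32 + [0.5] * 32]]
    p = binarize_and_pack(x)
    print(len(p), len(p[0]), len(p[0][0]))   # 1 2 2
    print([hex(w) for w in p[0][1]])         # ['0x0', '0xffffffff']
```

Only the channel dimension shrinks; height and width are untouched, which keeps the spatial layout the later convolution steps rely on.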

Embodiment 2

[0082] Figure 7 is a schematic flowchart of a neural network optimization method provided by an embodiment of the present invention. The method includes steps 701 to 709. Steps 701 to 705 process the convolutional layers in the neural network and correspond one-to-one to steps 101 to 105 in Figure 1; for their specific implementation, refer to Embodiment 1, which is not repeated here. Steps 706 to 709 process the fully connected layers in the neural network. The order of steps 706 to 709 relative to steps 701 to 705 is not strictly limited and is determined by the structure of the neural network. For example, if the network layers of the neural network are, in sequence, convolutional layer A, convolutional layer B, fully connected layer C, convolutional layer D, and fully connected layer E, then steps 701 to 705 are applied to each convolutional layer in sequence according to the order of the network layers included in the neural network...
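The ordering rule can be sketched as a loop that walks the layers in network order and dispatches each one by type. This is a hypothetical Python illustration: `optimize_conv_layer`, `optimize_fc_layer`, and `optimize_network` are placeholder names, and their bodies only record which group of steps would run, because the details of steps 706 to 709 are not given in this excerpt.

```python
# Hypothetical sketch: apply the convolutional-layer steps (701-705) or the
# fully-connected-layer steps (706-709) to each layer in network order.
# The handler bodies are placeholders, not the method described in the source.

def optimize_conv_layer(name):
    return f"{name}: steps 701-705"


def optimize_fc_layer(name):
    return f"{name}: steps 706-709"


HANDLERS = {"conv": optimize_conv_layer, "fc": optimize_fc_layer}


def optimize_network(layers):
    """layers: (name, kind) pairs listed in the order they appear in the network."""
    return [HANDLERS[kind](name) for name, kind in layers]


if __name__ == "__main__":
    network = [("A", "conv"), ("B", "conv"), ("C", "fc"), ("D", "conv"), ("E", "fc")]
    for line in optimize_network(network):
        print(line)
```

Because the dispatch follows the layer list rather than a fixed step sequence, the same loop handles any interleaving of convolutional and fully connected layers.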

Embodiment 3

[0115] Based on the same idea as the neural network optimization method provided in Embodiments 1 and 2, Embodiment 3 of the present invention provides a neural network optimization device, whose structure is shown in Figure 10.

[0116] The first data processing unit 11 is configured to perform binarization and bit packing operations on the input data of the convolutional layer along the channel direction to obtain compressed input data;

[0117] The second data processing unit 12 is configured to perform binarization and bit packing operations on the convolution kernels of the convolutional layer along the channel direction to obtain the corresponding compressed convolution kernels;

[0118] The division unit 13 is configured to divide the compressed input data sequentially, in the order of the convolution operations, into data blocks of the same size as the compressed convolution kernel, and the input data included in one convolution op...
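A sketch of the division step and the per-block evaluation follows, under stated assumptions: stride 1, no padding, a single kernel, 32 channels per word, and bit 1 encoding +1 while bit 0 encodes -1. `extract_blocks` yields the K × K windows in row-major convolution order, and `binary_conv2d` scores each window against the packed kernel with XOR and popcount. All names are hypothetical, and the excerpt does not specify how padding or stride are handled.

```python
# Hypothetical sketch: divide packed input into kernel-sized blocks in
# convolution order and evaluate each block with XOR + popcount.
# Assumptions (not from the source): stride 1, no padding, one kernel,
# bit 1 -> +1 and bit 0 -> -1.

def extract_blocks(packed_input, k):
    """Yield (row, col, block) for every K x K window, in row-major order."""
    height, width = len(packed_input), len(packed_input[0])
    for i in range(height - k + 1):
        for j in range(width - k + 1):
            block = [packed_input[i + di][j + dj] for di in range(k) for dj in range(k)]
            yield i, j, block


def binary_conv2d(packed_input, packed_kernel, channels):
    """Convolve H x W x Cw packed words with a K x K x Cw packed kernel."""
    k = len(packed_kernel)
    # Flatten the kernel in the same row-major order used for each block.
    kernel_words = [w for row in packed_kernel for pixel in row for w in pixel]
    n_bits = k * k * channels
    out_h = len(packed_input) - k + 1
    out_w = len(packed_input[0]) - k + 1
    output = [[0] * out_w for _ in range(out_h)]
    for i, j, block in extract_blocks(packed_input, k):
        block_words = [w for pixel in block for w in pixel]
        mismatches = sum(bin(a ^ b).count("1") for a, b in zip(block_words, kernel_words))
        output[i][j] = n_bits - 2 * mismatches  # matches minus mismatches
    return output


if __name__ == "__main__":
    FULL = 0xFFFFFFFF  # 32 channels, all +1
    x = [[[FULL] for _ in range(3)] for _ in range(3)]  # 3 x 3 x 1 word
    x[0][0] = [0]                                       # one pixel, all -1
    kern = [[[FULL], [FULL]], [[FULL], [FULL]]]         # 2 x 2 x 1 word
    print(binary_conv2d(x, kern, 32))  # [[64, 128], [128, 128]]
```

Only the window at (0, 0) covers the all-negative pixel, so its 32 mismatched bits lower that output from 128 to 64 while the other three windows match the kernel completely.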
