Combination system of computing chip and memory chip based on high-speed serial channel interconnection

A memory chip and computing chip technology, applied in the fields of computing, computers, and digital computer components. It addresses the problems of difficult interface implementation, low granularity, and high signal integrity requirements, with the effects of enabling data sharing, improving memory access bandwidth, and reducing cost.

Examples

Embodiment 1

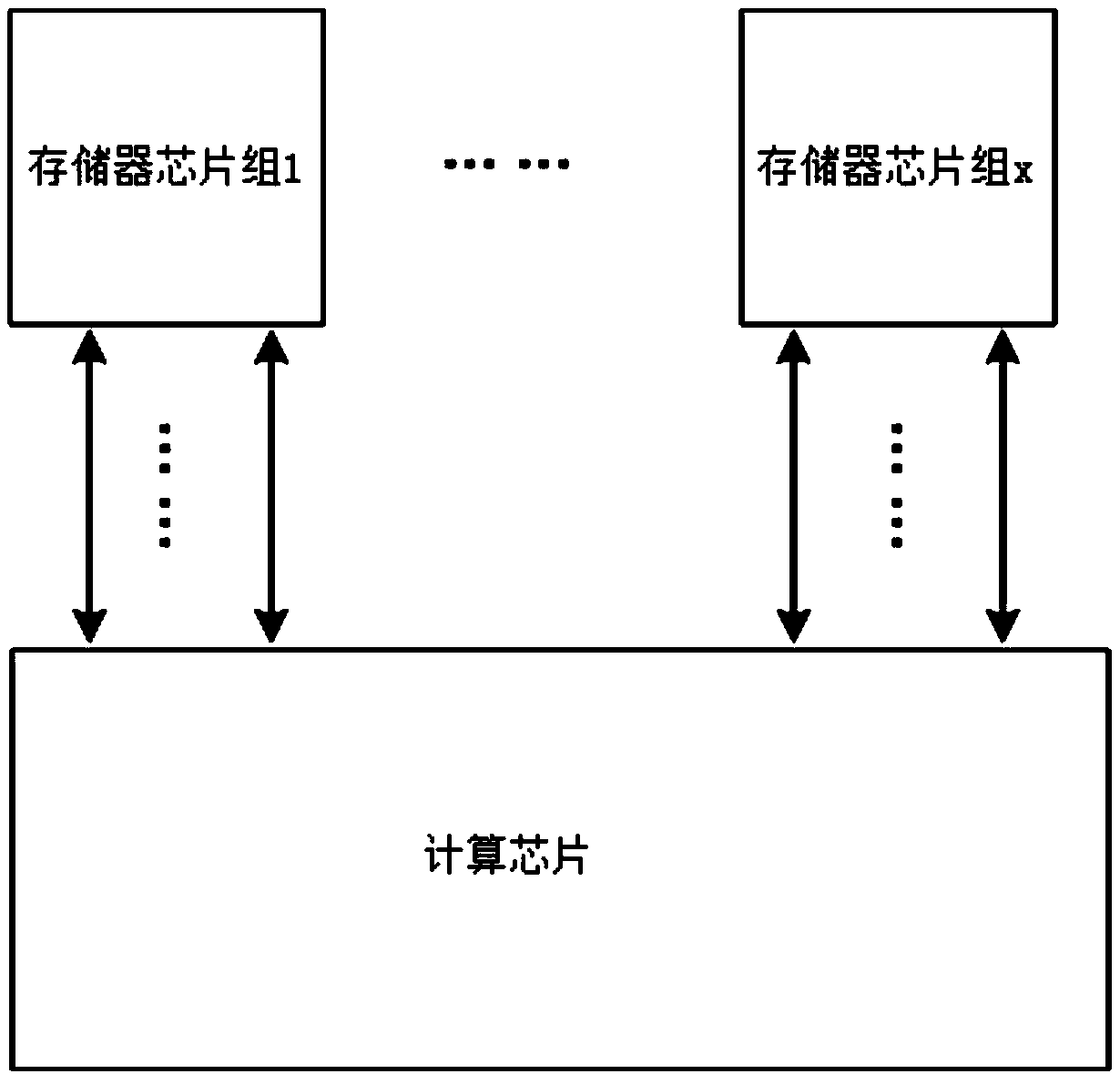

[0022] As shown in Figure 2, a combination system of computing chips and memory chips based on high-speed serial channel interconnection includes one computing chip and x memory chipsets, where x is a natural number and each memory chipset contains several memory chips. The computing chip is the main control chip and is connected to each memory chipset through at least one high-speed serial channel; depending on application requirements, more than one high-speed serial channel can be used to connect each memory chipset. When the system is running, data is distributed across all the memory chips, and all the memory chips are used exclusively by the single computing chip. In this embodiment, the memory chips in each memory chipset are cascaded through high-speed serial channels.
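To make "data is distributed across all the memory chips" concrete, the following is a minimal Python sketch of one possible address mapping for this single-computing-chip topology. It is not the patent's method: the block-interleaving policy, the 4096-byte block size, the assumption that the computing chip links to the first chip of each cascade, and the names `locate` and `MemoryLocation` are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MemoryLocation:
    chipset: int  # which memory chipset (0 .. x-1)
    chip: int     # position of the chip in that chipset's serial cascade (0 = nearest)
    offset: int   # byte offset inside the selected memory chip
    hops: int     # serial links crossed from the computing chip to the target chip


def locate(addr: int, x: int, chips_per_set: int, block: int = 4096) -> MemoryLocation:
    """Map a global byte address onto one of the x * chips_per_set memory chips.

    Hypothetical policy: fixed-size blocks are interleaved across chipsets first,
    then across cascade positions, so consecutive blocks use different serial channels.
    """
    if x < 1 or chips_per_set < 1 or block < 1 or addr < 0:
        raise ValueError("x, chips_per_set and block must be positive; addr non-negative")
    total_chips = x * chips_per_set
    block_index, within = divmod(addr, block)
    chip_global = block_index % total_chips  # which memory chip holds this block
    chipset, chip = chip_global % x, chip_global // x
    offset = (block_index // total_chips) * block + within
    # Assumed cascade: the computing chip links to chip 0 of each chipset, and
    # chip k is reached through k further chip-to-chip serial links.
    return MemoryLocation(chipset, chip, offset, hops=chip + 1)


if __name__ == "__main__":
    print(locate(2 * 4096, x=2, chips_per_set=3))
    # MemoryLocation(chipset=0, chip=1, offset=0, hops=2)
```

Interleaving across chipsets before cascade positions is one way to spread consecutive accesses over the separate serial channels. The `hops` field shows the trade-off of cascading: chips deeper in a chain cost extra serial-link traversals.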

Embodiment 2

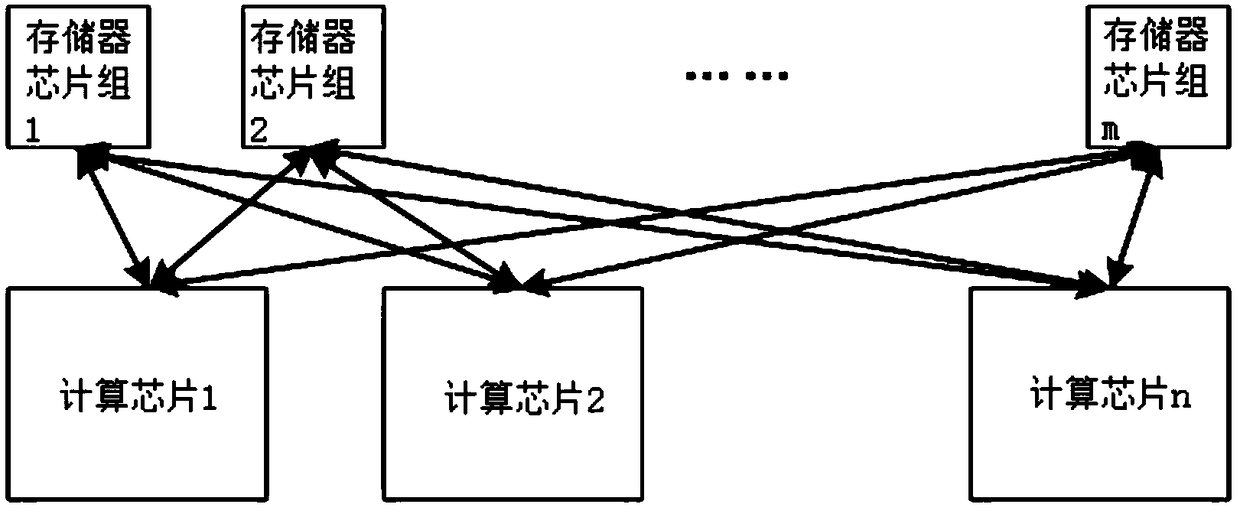

[0024] As shown in Figure 3, a combination system of computing chips and memory chips based on high-speed serial channel interconnection includes n computing chips and m memory chipsets, where n and m are natural numbers. Each memory chipset contains several memory chips, and the memory chips within each memory chipset are cascaded through high-speed serial channels. The computing chips form a single computing chipset, and each computing chip is connected to each memory chipset through several high-speed serial channels. That is, every computing chip can access all the memory chipsets, and the m memory chipsets are shared by the n computing chips. This system structure not only makes data sharing among the n computing chips very simple, but also reduces the cost of the memory chips in the system.
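The all-to-all connection can be modeled as a small link table. The Python sketch below is illustrative only. The helper names, the uniform `links_per_pair` count, and the cost comparison are hypothetical. The comparison assumes one read-only data set that every computing chip needs, and contrasts a single copy in the shared memory chipsets with one private copy per computing chip.

```python
from itertools import product


def build_links(n: int, m: int, links_per_pair: int = 1) -> dict[tuple[int, int], int]:
    """Full interconnect: {(computing_chip, memory_chipset): serial links between them}."""
    if min(n, m, links_per_pair) < 1:
        raise ValueError("n, m and links_per_pair must be positive")
    return {(c, s): links_per_pair for c, s in product(range(n), range(m))}


def reachable_chipsets(links: dict[tuple[int, int], int], chip: int) -> set[int]:
    """Memory chipsets that a given computing chip can access directly."""
    return {s for (c, s), k in links.items() if c == chip and k > 0}


def memory_chips_needed(n: int, data_gb: int, chip_gb: int, shared: bool) -> int:
    """Chips needed to hold one data set that all n computing chips read.

    Hypothetical comparison: with a shared pool one copy suffices; with private
    memory each computing chip must keep its own copy.
    """
    per_copy = -(-data_gb // chip_gb)  # integer ceiling division
    return per_copy if shared else n * per_copy


if __name__ == "__main__":
    links = build_links(n=4, m=3, links_per_pair=2)
    print(sum(links.values()))                        # 24 serial links (4 * 3 * 2)
    print(sorted(reachable_chipsets(links, chip=0)))  # [0, 1, 2]
    print(memory_chips_needed(4, data_gb=48, chip_gb=16, shared=True))   # 3
    print(memory_chips_needed(4, data_gb=48, chip_gb=16, shared=False))  # 12
```

The link count grows as n × m × links per pair. This is the interface cost that buys the property that every computing chip reaches every memory chipset without going through another computing chip.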

Embodiment 3

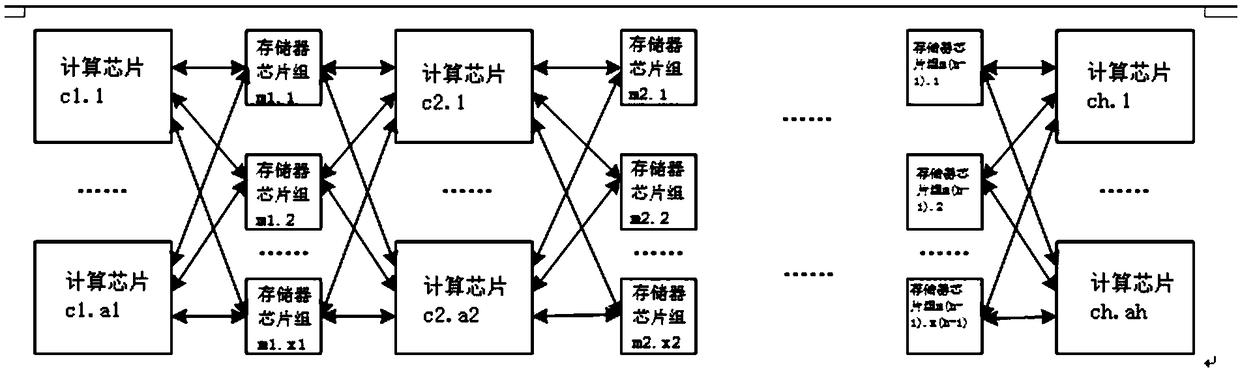

[0026] As shown in Figure 1, a computing chip and memory chip combination system based on high-speed serial channel interconnection includes several computing chips and several memory chipsets. Each memory chipset contains several memory chips, and the memory chips within each memory chipset are cascaded through high-speed serial channels. In this system structure, which is based on mapping the algorithm's data flow graph, the computing chips are divided into h groups of computing chipsets, where h is a natural number, and each computing chipset contains several computing chips. For example, the first computing chipset contains a1 computing chips, denoted c1.1, c1.2, ..., c1.a1; the second contains a2 computing chips, denoted c2.1, c2.2, ..., c2.a2; and so on, up to the hth computing chipset, which contains ah computing chips, denoted ch.1, ch.2, ..., ch.ah. There are several memory chipsets b...
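The grouping and naming scheme cg.i can be generated mechanically from the group sizes a1, ..., ah. The Python sketch below does that. It also shows one hypothetical way to read "data flow graph mapping": a linear pipeline in which stage g runs on computing chipset g. The source text is truncated before it describes the actual mapping or the memory chipset connections, so the stage assignment and the names `chip_labels` and `map_stages` are assumptions, not the patent's scheme.

```python
def chip_labels(group_sizes: list[int]) -> list[list[str]]:
    """Labels c<g>.<i> for h groups, where group_sizes[g - 1] = a_g (the size of group g)."""
    if not group_sizes or any(a < 1 for a in group_sizes):
        raise ValueError("need at least one group, each with at least one computing chip")
    return [[f"c{g}.{i}" for i in range(1, a + 1)]
            for g, a in enumerate(group_sizes, start=1)]


def map_stages(stages: list[str], group_sizes: list[int]) -> dict[str, list[str]]:
    """Hypothetical mapping: stage g of a linear data-flow graph runs on group g."""
    if len(stages) != len(group_sizes):
        raise ValueError("this sketch expects one data-flow stage per computing chipset")
    return dict(zip(stages, chip_labels(group_sizes)))


if __name__ == "__main__":
    print(chip_labels([2, 3]))
    # [['c1.1', 'c1.2'], ['c2.1', 'c2.2', 'c2.3']]
    print(map_stages(["load", "transform", "reduce"], [1, 2, 1]))
    # {'load': ['c1.1'], 'transform': ['c2.1', 'c2.2'], 'reduce': ['c3.1']}
```

Under this reading, a heavier stage simply receives a larger group (a larger a_g). The stage names here are placeholders.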
