Online video target tracking method based on deep cross-similarity matching

A target tracking and video technology, applied in image data processing, instruments, computing, and related fields, that addresses problems such as the difficulty of integrating multi-task technical solutions and interference from background noise.

Embodiment Construction

[0044] Specific embodiments of the present invention are further described below with reference to the accompanying drawings and technical solutions.

[0045] Step 1: Collect about 4,000 videos. Crop templates and search areas from different frames of the same video as positive sample pairs, and take negative sample pairs from different videos; together these form the training set. All parameters of the proposed model are then trained offline under the constraint of loss function (4);
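The pair construction in Step 1 can be sketched as follows. This is only an illustration under stated assumptions, not the patent's procedure: the function name `sample_pair`, the `positive_prob` ratio, and the representation of the dataset as a mapping from video ID to frame count are all hypothetical. Loss function (4) is not reproduced here, so the sketch stops at producing labelled pairs.

```python
import random


def sample_pair(videos, rng, positive_prob=0.5):
    """Draw one (template, search, label) training pair.

    videos: dict mapping a video ID to its number of frames (assumed layout).
    Returns ((video_id, frame_idx), (video_id, frame_idx), label), where
    label is 1 for a positive pair and 0 for a negative pair.
    Requires at least two videos and at least two frames per video.
    """
    video_ids = list(videos)
    template_video = rng.choice(video_ids)
    template_frame = rng.randrange(videos[template_video])

    if rng.random() < positive_prob:
        # Positive pair: a different frame of the same video.
        search_video = template_video
        candidates = [i for i in range(videos[template_video])
                      if i != template_frame]
        search_frame = rng.choice(candidates)
        label = 1
    else:
        # Negative pair: any frame of a different video.
        others = [v for v in video_ids if v != template_video]
        search_video = rng.choice(others)
        search_frame = rng.randrange(videos[search_video])
        label = 0

    return (template_video, template_frame), (search_video, search_frame), label


# Usage: three toy videos with 5, 3 and 4 frames.
rng = random.Random(0)
toy_videos = {"a": 5, "b": 3, "c": 4}
template_ref, search_ref, label = sample_pair(toy_videos, rng)
```

The returned references would then be used to crop the actual template and search-area images from the indicated frames.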

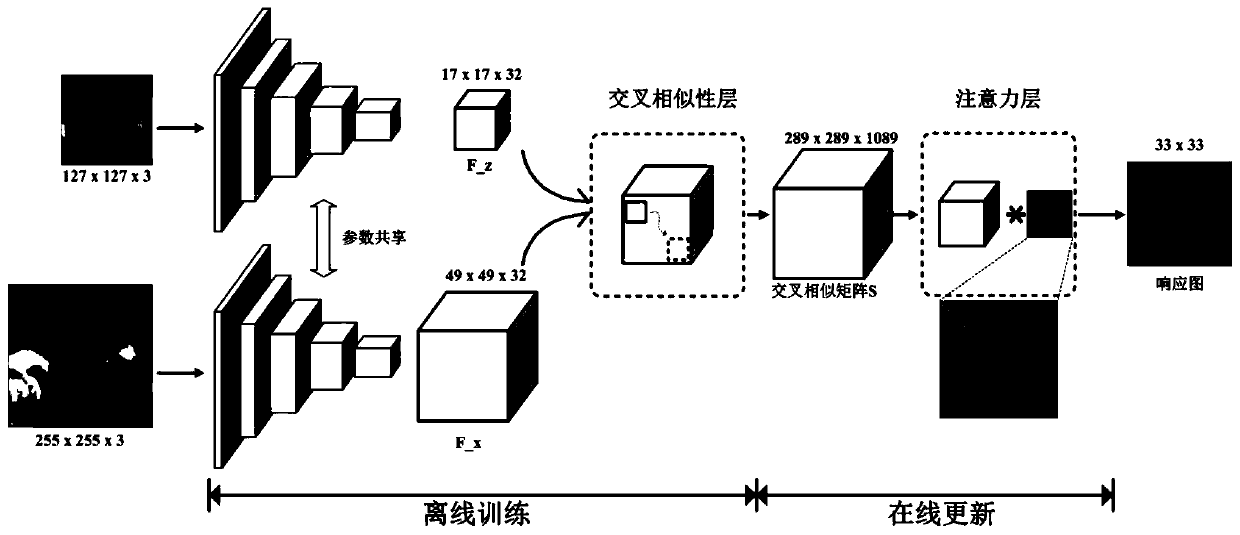

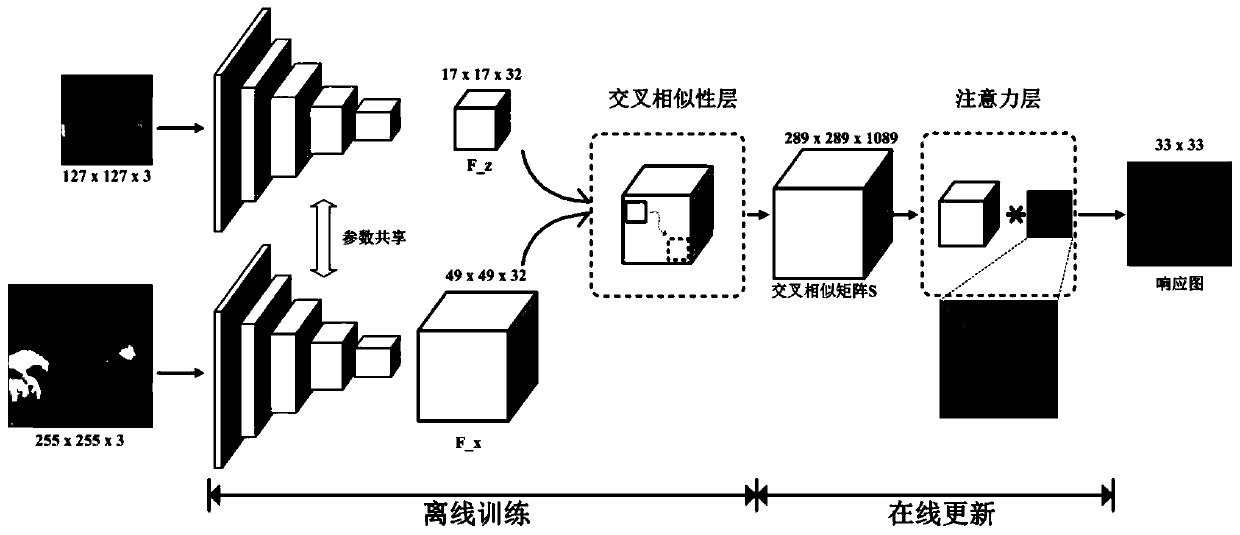

[0046] Step 2: Crop the target template and the search-area image at the given initial target position in the first frame, and feed them into the designed tracking framework (Figure 1) for forward propagation; their respective deep features are obtained after the convolutional layers;
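As a rough illustration of the cropping in Step 2, the sketch below cuts two square patches centred on the initial target position: a smaller one for the template and a larger one for the search area. The helper name `crop_centered`, the patch sizes, and the constant-value padding at image borders are assumptions for illustration; the text does not specify them.

```python
def crop_centered(image, cx, cy, size, pad_value=0):
    """Crop a size x size patch centred at column cx, row cy.

    image: list of rows (each a list of pixel values).
    Pixels that fall outside the image are filled with pad_value
    (an assumed border policy).
    """
    half = size // 2
    height, width = len(image), len(image[0])
    patch = []
    for y in range(cy - half, cy - half + size):
        row = []
        for x in range(cx - half, cx - half + size):
            inside = 0 <= y < height and 0 <= x < width
            row.append(image[y][x] if inside else pad_value)
        patch.append(row)
    return patch


# Usage: a toy 5x5 frame whose pixel value encodes its position (row*10 + col).
frame = [[y * 10 + x for x in range(5)] for y in range(5)]
template = crop_centered(frame, cx=2, cy=2, size=3)  # small patch on the target
search = crop_centered(frame, cx=2, cy=2, size=5)    # larger surrounding area
```

Both patches would then be passed through the shared convolutional layers to obtain the two feature maps.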

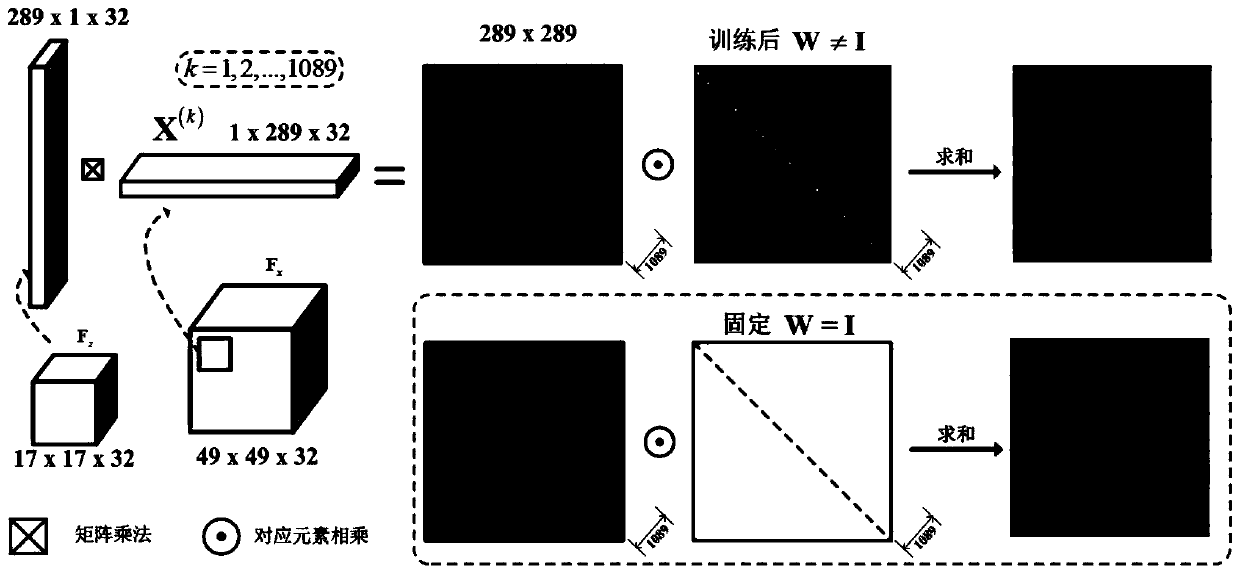

[0047] Step 3: Compute all cross-similarities M between the two features according to formula (2), and convert them into the cross-similarity matrix S according to the mapp...
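The all-pairs computation in Step 3 can be pictured with the sketch below. Formula (2) is not reproduced in this excerpt, so cosine similarity is used here purely as a stand-in assumption, and the function names are hypothetical. The subsequent mapping from M to the matrix S is cut off in the text and is therefore not sketched.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def cross_similarities(template_feats, search_feats):
    """Compare every template feature vector with every search feature vector.

    template_feats, search_feats: lists of feature vectors, one per spatial
    location of the respective feature map (assumed flattening).
    Returns M as a nested list with M[i][j] = similarity(template i, search j).
    """
    return [[cosine(t, s) for s in search_feats] for t in template_feats]


# Usage: 2 template locations and 3 search locations, 2 channels each.
template_feats = [[1.0, 0.0], [0.0, 1.0]]
search_feats = [[1.0, 0.0], [1.0, 1.0], [0.0, 2.0]]
M = cross_similarities(template_feats, search_feats)
```

Here M has one row per template location and one column per search location, so its size grows with the product of the two feature-map sizes.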
