Video saliency detection method

A video saliency detection method, applied in the fields of computer vision and image processing. It addresses three problems: inter-frame consistency modeling does not take global information constraints into account, the overall consistency of the results needs improvement, and motion information is not fully exploited. The method achieves accurate inter-frame saliency results, strong background suppression, and fast computation.

Examples

Embodiment 1

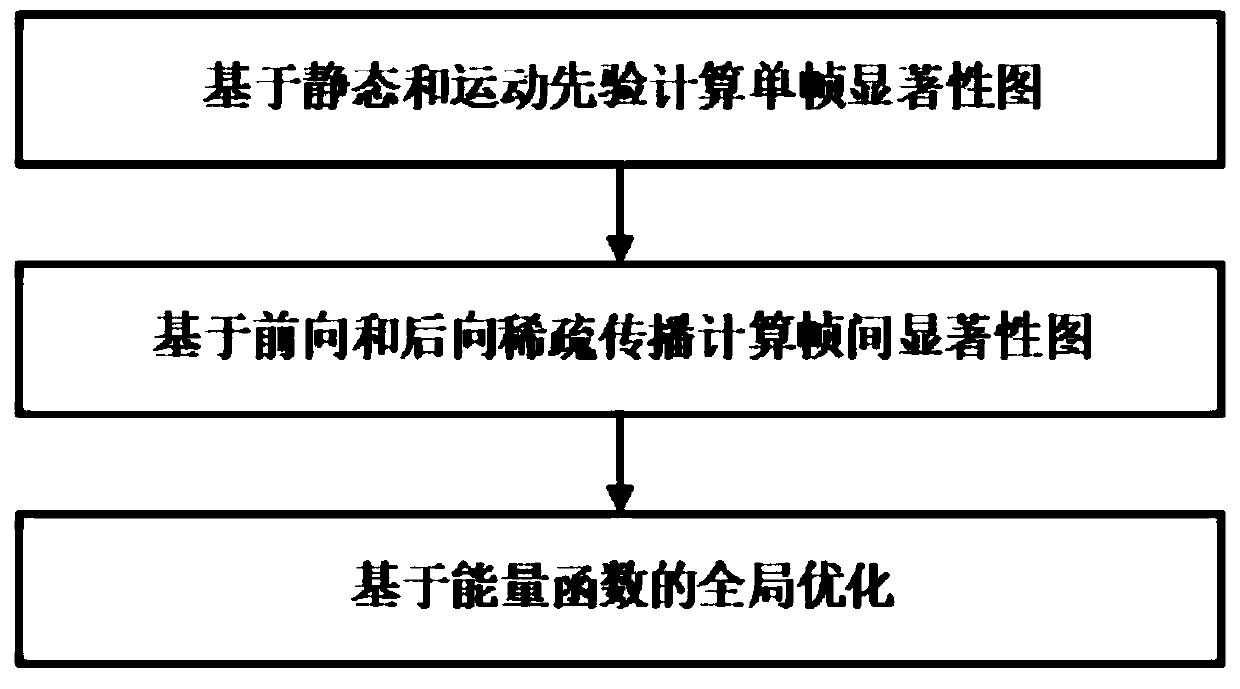

[0038] This embodiment of the present invention proposes a video saliency detection method. Referring to Figure 1, the method includes the following steps:

[0039] 101: Compute the spatial saliency of each frame in the video sequence with a sparse reconstruction model based on static cues and motion priors;

[0040] 102: Use a progressive sparse propagation model to capture temporal correspondences between frames and generate an inter-frame saliency map;

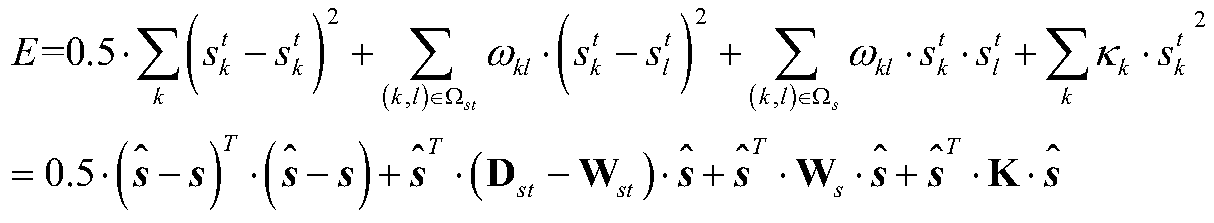

[0041] 103: Fuse the two saliency results in a global optimization model to improve the spatiotemporal smoothness and global consistency of the salient objects across the video.
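Step 101 scores each region by how well a dictionary can sparsely reconstruct it. The text here does not give the exact objective, so the following is a minimal sketch assuming a standard Lasso formulation solved with ISTA (iterative shrinkage-thresholding), with saliency taken as the normalized reconstruction error, as in background-dictionary approaches where regions poorly explained by the dictionary atoms are treated as salient. The function names and the `lam` and `n_iter` parameters are illustrative assumptions, not the patented model.

```python
import numpy as np

def soft_threshold(x, tau):
    """Elementwise soft-thresholding: the proximal operator of tau * ||x||_1."""
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)

def sparse_codes(D, X, lam=0.01, n_iter=200):
    """Solve min_A 0.5 * ||X - D A||_F^2 + lam * ||A||_1 with ISTA.

    D: (d, k) dictionary, one atom feature per column.
    X: (d, n) features to reconstruct, one superpixel per column.
    """
    # Step size 1/L, with L the squared spectral norm of D
    # (the Lipschitz constant of the gradient of the quadratic term).
    L = max(np.linalg.norm(D, 2) ** 2, 1e-12)
    A = np.zeros((D.shape[1], X.shape[1]))
    for _ in range(n_iter):
        grad = D.T @ (D @ A - X)
        A = soft_threshold(A - grad / L, lam / L)
    return A

def reconstruction_saliency(D, X, lam=0.01, n_iter=200):
    """Saliency of each column of X as its min-max normalized reconstruction error.

    Assumption: D holds background features, so a large error means "salient".
    """
    A = sparse_codes(D, X, lam, n_iter)
    err = np.sum((X - D @ A) ** 2, axis=0)
    rng = err.max() - err.min()
    return (err - err.min()) / rng if rng > 0 else np.zeros_like(err)
```

Under these assumptions, the same routine could be run once with a color-based dictionary and once with a motion-based dictionary, and the two error maps combined into the per-frame spatial saliency.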

[0042] In summary, by exploiting the motion information of objects in the video sequence and the constraint relationships between frames, this embodiment of the present invention provides an effective video saliency detection model that consistently extracts the salient objects in a video sequence.
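Step 103 fuses the spatial and inter-frame saliency maps in a global optimization model whose exact form is not given here. As a minimal sketch, assume a graph-regularized least-squares energy over superpixel nodes: the result should stay close to a weighted average `a` of the two inputs while being smooth over an affinity graph `W` (for instance, spatial neighbors within a frame and matched regions across frames). Minimizing ||s - a||^2 + mu * s^T L s, with L the graph Laplacian, gives the linear system (I + mu L) s = a. The names `alpha`, `mu`, and `W` are hypothetical parameters for illustration.

```python
import numpy as np

def fuse_saliency(s_spatial, s_temporal, W, alpha=0.5, mu=1.0):
    """Fuse two per-node saliency vectors with graph-Laplacian smoothing.

    Assumed energy: ||s - a||^2 + mu * s^T L s, where a is the alpha-weighted
    average of the two inputs and L = diag(W 1) - W for a symmetric,
    non-negative affinity matrix W. Setting the gradient to zero gives
    (I + mu * L) s = a, which is positive definite for mu >= 0.
    """
    a = alpha * np.asarray(s_spatial, float) + (1 - alpha) * np.asarray(s_temporal, float)
    W = np.asarray(W, float)
    L = np.diag(W.sum(axis=1)) - W
    s = np.linalg.solve(np.eye(len(a)) + mu * L, a)
    # (I + mu L)^{-1} averages its input, so s already lies in [0, 1];
    # the clip only guards against round-off.
    return np.clip(s, 0.0, 1.0)
```

For example, with two connected nodes whose averaged saliency is 1 and 0, and `mu=1`, the solution pulls them to 2/3 and 1/3, while an isolated node keeps its input value. A larger `mu` enforces stronger spatiotemporal smoothness.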

Embodiment 2

[0044] The scheme of Embodiment 1 (Figure 1) is further described below with reference to specific examples; see the following description for details:

[0045] 201: Single-frame saliency reconstruction;

[0046] For the video saliency detection task, the detected object should be both salient and moving relative to the background in each video frame. To this end, two sparse reconstruction models are constructed from static and motion priors to detect the salient objects in each frame. The first, a static saliency prior, uses three color saliency cues to construct a color-based reconstruction dictionary (DC); the second, a motion saliency prior, integrates motion uniqueness and motion compactness cues to construct a motion-based dictionary (DM).
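The motion uniqueness and motion compactness cues are named but not defined in this excerpt. The sketch below assumes definitions borrowed from the common uniqueness/distribution contrast formulation, applied to a hypothetical per-superpixel mean optical-flow vector `flow` and normalized centroid `pos`: uniqueness is high when a region moves differently from its spatial neighbors, and compactness is high when the regions that move like it are spatially concentrated. The bandwidths `sigma_p` and `sigma_m` are illustrative assumptions.

```python
import numpy as np

def _sq_dists(F):
    """Pairwise squared Euclidean distances between the rows of F."""
    return np.sum((F[:, None, :] - F[None, :, :]) ** 2, axis=-1)

def _affinity(F, sigma):
    """Row-normalized Gaussian affinities between the rows of F."""
    w = np.exp(-_sq_dists(F) / (2.0 * sigma ** 2))
    return w / w.sum(axis=1, keepdims=True)

def _normalize(v):
    """Min-max normalize to [0, 1]; a constant vector maps to zeros."""
    rng = v.max() - v.min()
    return (v - v.min()) / rng if rng > 0 else np.zeros_like(v)

def motion_uniqueness(flow, pos, sigma_p=0.25):
    """Assumed cue: flow contrast to other superpixels, weighted by spatial proximity."""
    w = _affinity(pos, sigma_p)
    return _normalize(np.sum(w * _sq_dists(flow), axis=1))

def motion_compactness(flow, pos, sigma_m=0.2):
    """Assumed cue: high when superpixels that move alike are spatially concentrated."""
    w = _affinity(flow, sigma_m)
    mu = w @ pos  # motion-weighted mean position for each superpixel, shape (n, 2)
    # spread[i] = sum_j w[i, j] * ||pos[j] - mu[i]||^2
    spread = np.sum(w * np.sum((pos[None, :, :] - mu[:, None, :]) ** 2, axis=-1), axis=1)
    return 1.0 - _normalize(spread)
```

With three static background regions at the image corners and one moving region in the center, both cues peak at the moving region; how the patented method combines them into the dictionary DM is not shown here.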

[0047] Let a video sequence contain N video frames. Each frame is segmented into 500 superpixel regions using the SLIC (Simple Linear Iterative Clustering) method...
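After segmentation, region-level features (such as mean color for the static cues or mean optical flow for the motion cues) are usually pooled over the pixels of each superpixel. The following is a minimal sketch under that assumption; the helper names are hypothetical, and the label map could come from, for example, scikit-image's `skimage.segmentation.slic(frame, n_segments=500, start_label=0)`, which yields an approximate rather than exact number of segments.

```python
import numpy as np

def superpixel_means(frame, labels):
    """Mean feature vector of each superpixel.

    frame:  (H, W, C) array of per-pixel features (e.g. Lab color or optical flow).
    labels: (H, W) integer label map with labels 0..K-1 (e.g. from SLIC).
    Returns a (K, C) array whose row k is the mean over pixels labeled k.
    """
    lab = labels.ravel()
    K = lab.max() + 1
    counts = np.bincount(lab, minlength=K).astype(float)
    feats = frame.reshape(-1, frame.shape[-1])
    sums = np.stack([np.bincount(lab, weights=feats[:, c], minlength=K)
                     for c in range(feats.shape[1])], axis=1)
    # Empty labels yield zeros instead of NaN.
    return sums / np.maximum(counts, 1)[:, None]

def superpixel_centroids(labels):
    """Centroid (x, y) of each superpixel, normalized to [0, 1]."""
    H, W = labels.shape
    ys, xs = np.mgrid[0:H, 0:W]
    coords = np.stack([xs / max(W - 1, 1), ys / max(H - 1, 1)], axis=-1)
    return superpixel_means(coords, labels)
```

Pooling with `np.bincount` keeps the cost linear in the number of pixels, which matters when every frame of the sequence is processed.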

Embodiment 3

[0092] The feasibility of the schemes in Embodiments 1 and 2 is verified below with a specific example; see the following description for details:

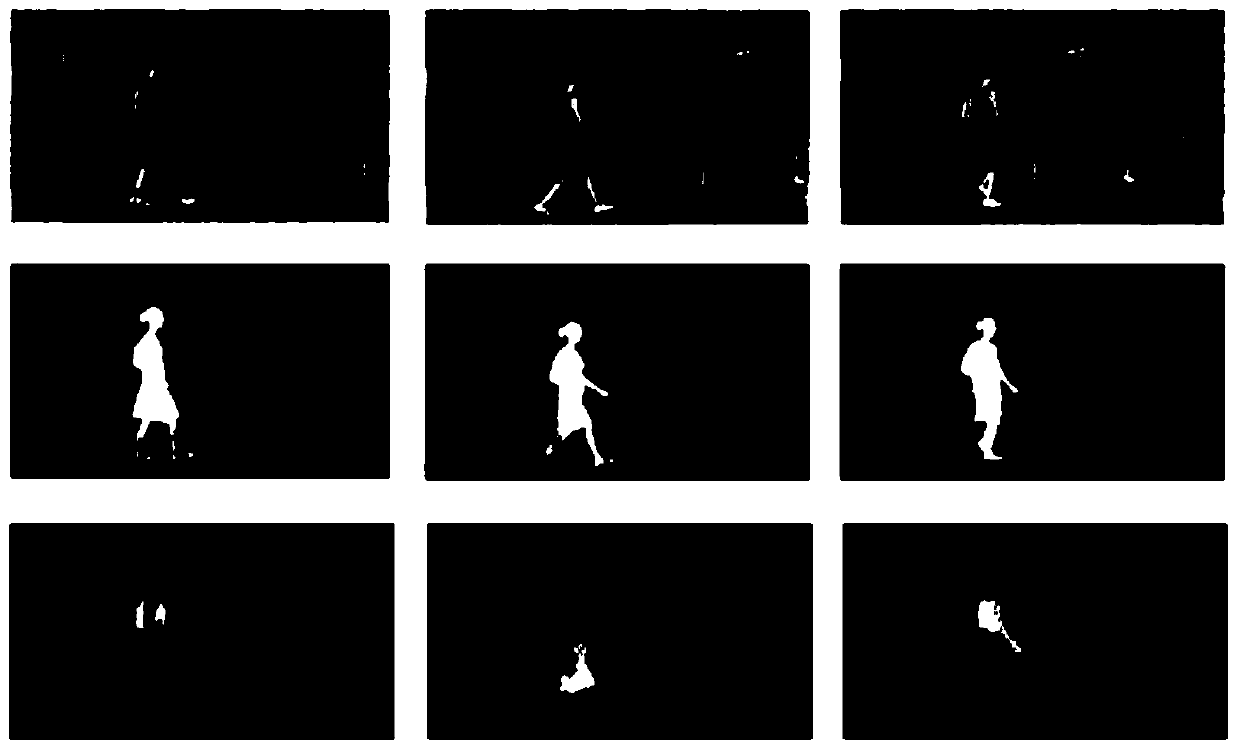

[0093] Figure 2 shows saliency detection results for a video sequence in which the salient objects are women. The first row shows the RGB images of different video frames, the second row shows the ground-truth maps for video saliency detection, and the third row shows the results obtained by this method. The results show that the method accurately extracts the salient objects in video sequences with clear outlines, and effectively suppresses background areas and non-moving salient areas (such as benches).

[0094] Those skilled in the art will understand that the accompanying drawings are only schematic diagrams of a preferred embodiment, and that the serial numbers of the above embodiments of the present invention are for description only and do not indicate the relative merits ...
