Multi-modal image target detection method based on image fusion

The multi-modal image fusion technology, applied in the fields of deep learning, computer vision and image fusion, addresses problems such as the lack of structural features.

Embodiment Construction

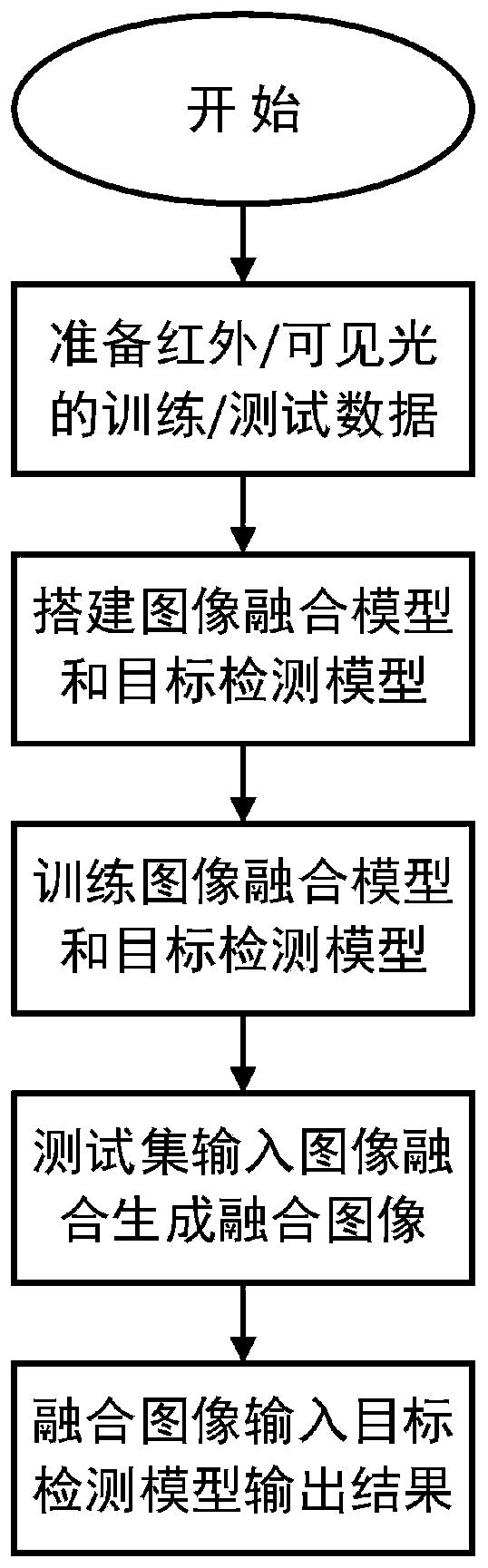

[0029] In order to make the technical solution of the present invention clearer, specific embodiments of the invention are further described below with reference to the accompanying drawings. The flow chart of the specific implementation is shown in Figure 1.

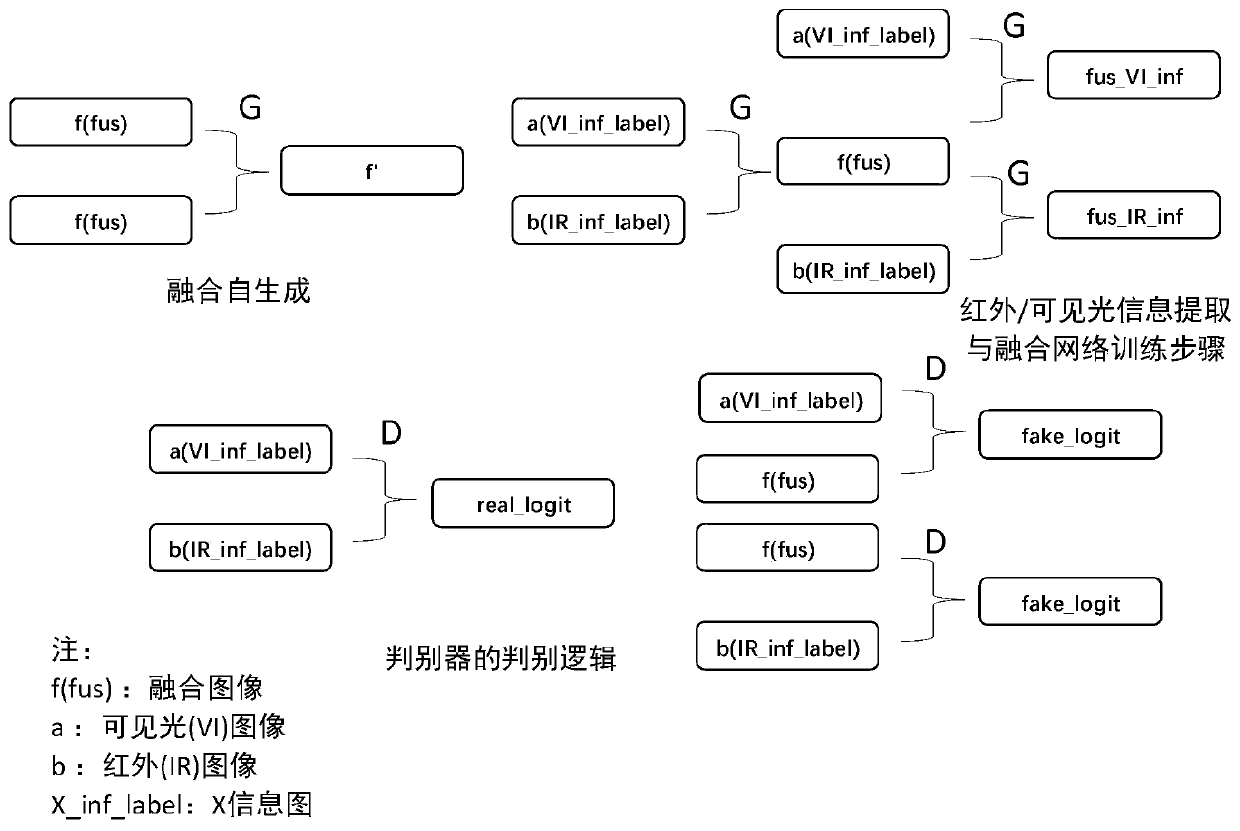

[0030] The goal of the fusion network in this scheme is to learn a mapping function based on the structure of a generative adversarial network (GAN). Given two input images drawn from multiple unlabeled sets, namely the visible light input image v and the infrared input image u, this function generates a fused image. The network is not limited to image-domain translation between two images; it can also be applied to unlabeled image sets for fusion tasks.
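As a minimal sketch of the mapping described in [0030], the following NumPy code shows only the interface: a generator that takes a visible light image v and an infrared image u and returns one fused image, plus a discriminator that scores an image. Everything here is an assumption made for illustration and is not taken from the patent: the names `ToyFusionGenerator`, `toy_discriminator` and `conv2d_same`, the two-layer architecture, the channel-wise stacking of v and u, and the random untrained weights. No training loop is shown.

```python
import numpy as np


def conv2d_same(x, kernels, bias):
    """Naive 'same'-size 2-D cross-correlation.

    x: (C_in, H, W); kernels: (C_out, C_in, k, k); bias: (C_out,).
    """
    c_out, c_in, k, _ = kernels.shape
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)), mode="edge")
    _, h, w = x.shape
    out = np.zeros((c_out, h, w))
    for i in range(k):
        for j in range(k):
            patch = xp[:, i:i + h, j:j + w]  # shifted view, shape (C_in, H, W)
            out += np.einsum("oc,chw->ohw", kernels[:, :, i, j], patch)
    return out + bias[:, None, None]


class ToyFusionGenerator:
    """Hypothetical generator G(v, u) -> fused image (untrained weights)."""

    def __init__(self, hidden=8, seed=0):
        rng = np.random.default_rng(seed)
        self.w1 = rng.normal(0.0, 0.1, (hidden, 2, 3, 3))
        self.b1 = np.zeros(hidden)
        self.w2 = rng.normal(0.0, 0.1, (1, hidden, 3, 3))
        self.b2 = np.zeros(1)

    def __call__(self, v, u):
        x = np.stack([v, u])  # assumption: stack the two modalities as channels
        h = np.maximum(conv2d_same(x, self.w1, self.b1), 0.0)  # ReLU
        y = np.tanh(conv2d_same(h, self.w2, self.b2))  # values in [-1, 1]
        return y[0]


def toy_discriminator(img, w, b):
    """Hypothetical discriminator: a logistic score in (0, 1) for one image."""
    return 1.0 / (1.0 + np.exp(-(np.sum(w * img) + b)))


rng = np.random.default_rng(1)
v = rng.random((16, 16))  # stand-in for a visible light image
u = rng.random((16, 16))  # stand-in for an infrared image

generator = ToyFusionGenerator()
fused = generator(v, u)
score = toy_discriminator(fused, rng.normal(0.0, 0.01, (16, 16)), 0.0)
```

In an adversarial setup the generator weights would be updated so that the discriminator's score on `fused` moves toward its score on real images; because neither input set needs labels, only the image pairs themselves are required.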

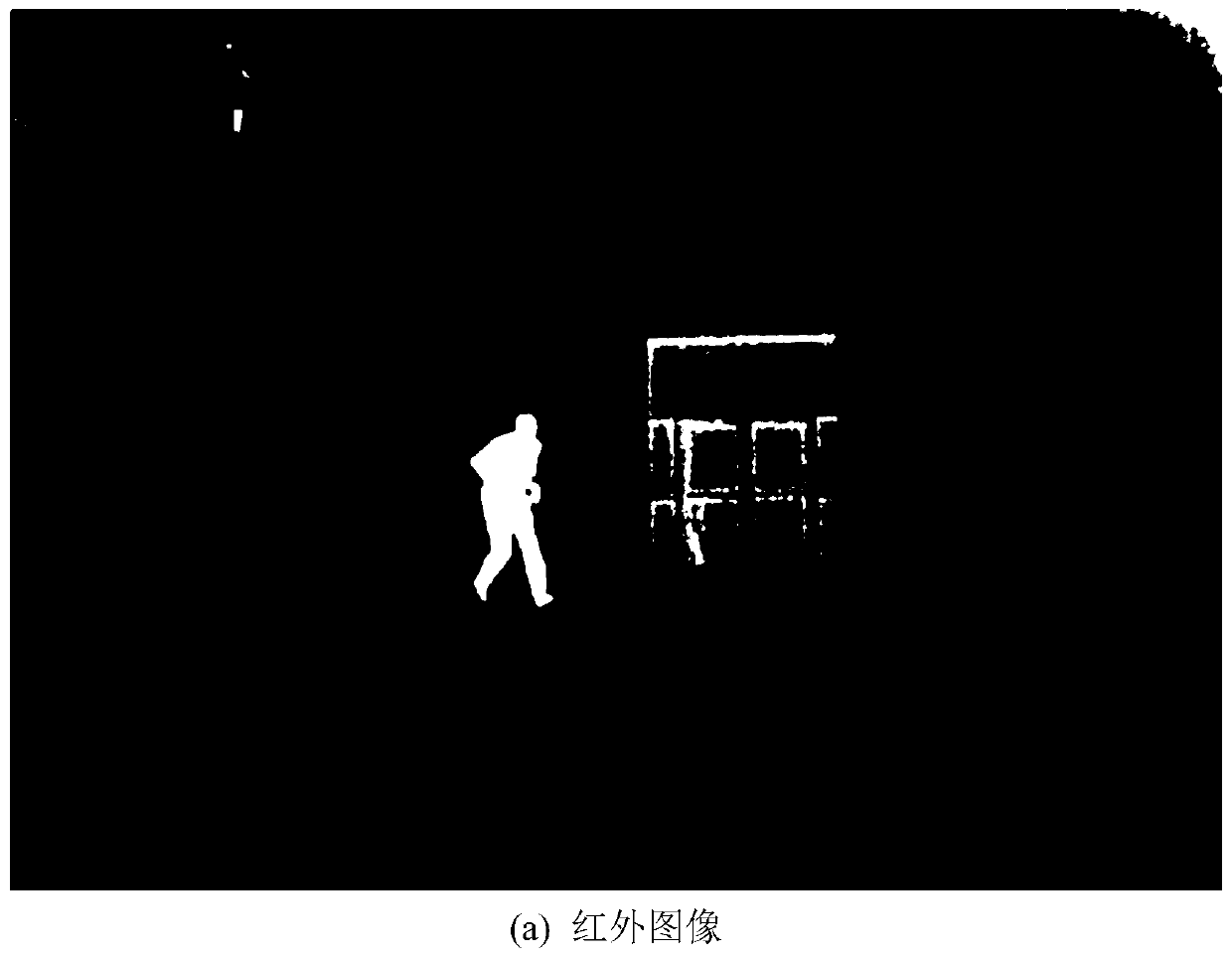

[0031] The fused image not only preserves the high contrast between the target and the background found in the infrared image, but also retains more texture detail than the source image. Similar to the sharpened infrared image, the fused image h...
