Deep-learning-based method for constructing a three-dimensional semantic map of a robot's indoor environment

A technology in the fields of semantic maps and indoor environments, applied to instruments, image analysis, and image enhancement. It addresses the large computational load and high computing-power requirements that reduce mapping efficiency, with the effects of improving mapping efficiency, reducing the amount of data, and simplifying the map-building process.


Detailed Description of the Embodiments

[0019] The present invention is described further below with reference to the accompanying drawings and specific embodiments.

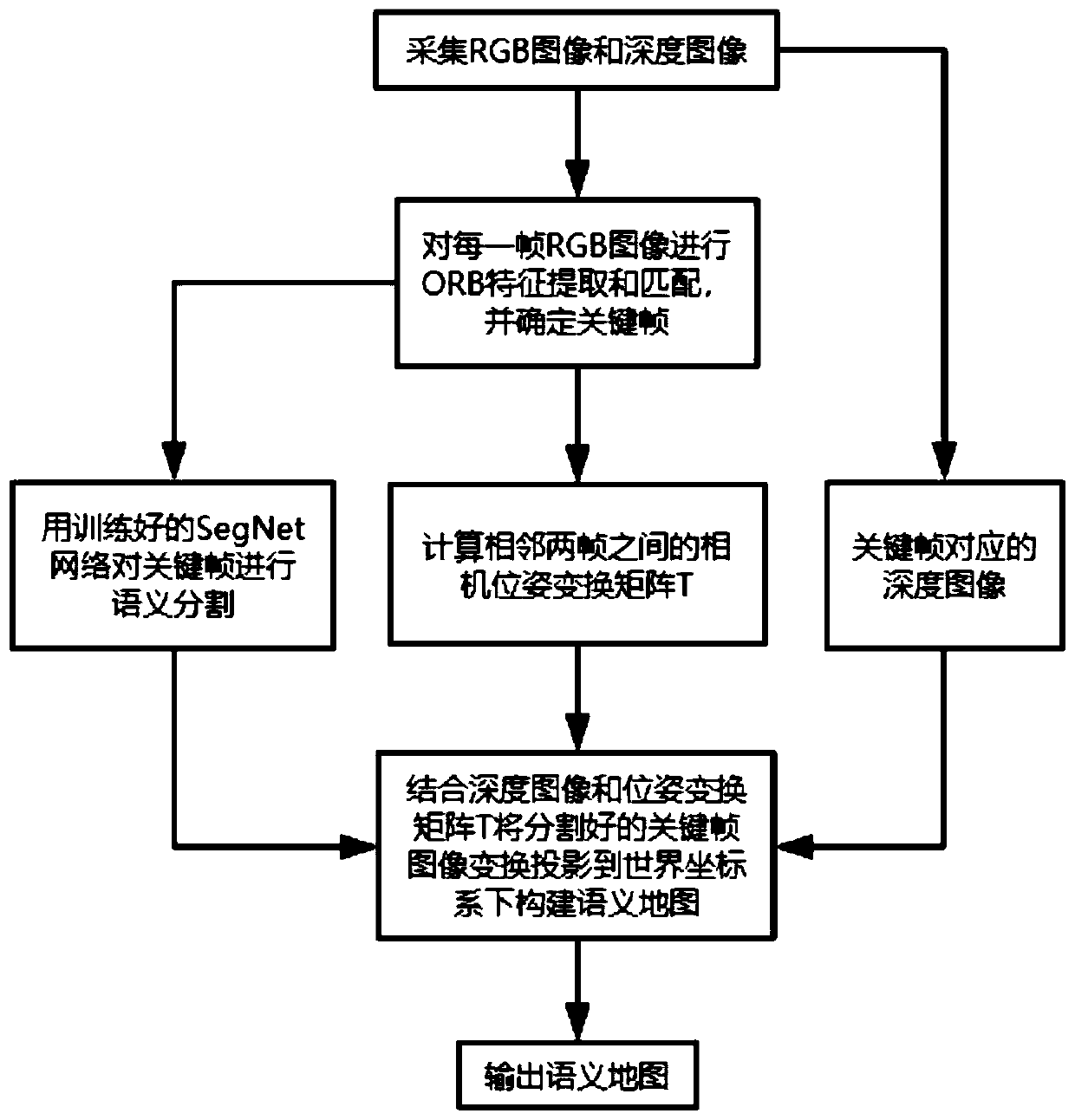

[0020] Referring to Figure 1, the deep-learning-based method of the present invention for building a three-dimensional semantic map of a robot's indoor environment comprises the following steps:

[0021] Step 1: Collect an RGB image sequence and a depth image sequence of the indoor environment with a depth camera.

[0022] The specific implementation is as follows: the user continuously captures the indoor environment, either holding the depth camera by hand or mounting it on the robot, to obtain continuous RGB and depth image sequences.
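Step 1 yields two streams that must be paired frame by frame before image features can be assigned depth. The source does not say how the pairing is done; a common approach, assumed here, is to match each RGB frame to the unused depth frame with the nearest timestamp within a small tolerance. The helper `associate_frames` and its `max_dt` tolerance are hypothetical.

```python
from bisect import bisect_left
from typing import List, Tuple


def associate_frames(rgb_stamps: List[float], depth_stamps: List[float],
                     max_dt: float = 0.02) -> List[Tuple[int, int]]:
    """Pair each RGB frame with the closest-in-time unused depth frame.

    Hypothetical helper. Assumes both timestamp lists are sorted ascending
    (in seconds) and that a pair is accepted only if the gap is <= max_dt.
    Each depth frame is used at most once.
    """
    pairs: List[Tuple[int, int]] = []
    used = set()
    for i, t in enumerate(rgb_stamps):
        j = bisect_left(depth_stamps, t)
        best = None  # (depth index, time gap)
        # Only the depth stamps immediately before and after t are candidates.
        for k in (j - 1, j):
            if 0 <= k < len(depth_stamps) and k not in used:
                dt = abs(depth_stamps[k] - t)
                if dt <= max_dt and (best is None or dt < best[1]):
                    best = (k, dt)
        if best is not None:
            used.add(best[0])
            pairs.append((i, best[0]))
    return pairs
```

For example, `associate_frames([0.0, 0.033, 0.066, 0.1], [0.005, 0.04, 0.15])` pairs the first two RGB frames with the first two depth frames and leaves the last two RGB frames unpaired, because no unused depth frame lies within 20 ms of them. If the camera already outputs hardware-aligned RGB-D frames, this step may reduce to simple indexing.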

[0023] Step 2: Perform ORB feature extraction and matching on each collected RGB frame, and determine the keyframes.
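Step 2 can be sketched as follows, assuming 256-bit ORB descriptors stored as 32-byte `uint8` rows. Matching uses brute-force Hamming distance with a mutual-nearest-neighbour check. The keyframe rule is an assumption for illustration only, since the source's criterion is not reproduced in this excerpt; `is_new_keyframe`, `min_ratio`, and `max_dist` are hypothetical names and values.

```python
import numpy as np


def hamming_matrix(desc_a: np.ndarray, desc_b: np.ndarray) -> np.ndarray:
    """Pairwise Hamming distances between packed binary descriptors.

    desc_a: (N, B) uint8, desc_b: (M, B) uint8 (ORB uses B = 32 bytes = 256 bits).
    Returns an (N, M) array of bit-difference counts.
    """
    xor = np.bitwise_xor(desc_a[:, None, :], desc_b[None, :, :])
    return np.unpackbits(xor, axis=2).sum(axis=2)


def match_descriptors(desc_a: np.ndarray, desc_b: np.ndarray, max_dist: int = 50):
    """Mutual nearest-neighbour matches (i, j) whose distance is <= max_dist."""
    if len(desc_a) == 0 or len(desc_b) == 0:
        return []
    d = hamming_matrix(desc_a, desc_b)
    a_to_b = d.argmin(axis=1)  # best match in b for each descriptor in a
    b_to_a = d.argmin(axis=0)  # best match in a for each descriptor in b
    return [(i, int(j)) for i, j in enumerate(a_to_b)
            if b_to_a[j] == i and d[i, j] <= max_dist]


def is_new_keyframe(desc_cur: np.ndarray, desc_last_kf: np.ndarray,
                    min_ratio: float = 0.3, max_dist: int = 50) -> bool:
    """Hypothetical rule: declare a new keyframe when too few features of the
    current frame still match the most recent keyframe."""
    if len(desc_cur) == 0:
        return False
    matches = match_descriptors(desc_cur, desc_last_kf, max_dist)
    return len(matches) / len(desc_cur) < min_ratio
```

In a production pipeline, OpenCV's `cv2.ORB_create` and `cv2.BFMatcher(cv2.NORM_HAMMING, crossCheck=True)` provide equivalent detection and cross-checked matching; NumPy is used here only to keep the sketch self-contained.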

[0024] The specific implementation steps are:

[0025] Step 21: Detect the Oriented FAST corner positions in each frame, and compute the BRIEF...
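For reference, in the standard ORB formulation the "oriented" part of Oriented FAST assigns each detected corner an angle from the intensity centroid of a circular patch around it, θ = atan2(m01, m10) with m_pq = Σ x^p y^q I(x, y), and the BRIEF sampling pattern is then rotated by θ. The sketch below computes only that angle. It follows the textbook ORB method and is not a reconstruction of the truncated text of Step 21; the function name is hypothetical.

```python
import numpy as np


def intensity_centroid_angle(patch: np.ndarray) -> float:
    """Orientation (radians) of an odd-sized square patch centred on a corner,
    using the intensity-centroid method of ORB's oriented FAST.

    Moments are accumulated only over the inscribed circle, so the angle does
    not depend on the patch's square corners. Image y points downward.
    """
    h, w = patch.shape
    if h != w or h % 2 == 0:
        raise ValueError("expects an odd-sized square patch")
    r = h // 2
    ys, xs = np.mgrid[-r:r + 1, -r:r + 1]  # pixel offsets from the centre
    mask = xs ** 2 + ys ** 2 <= r ** 2       # keep only the inscribed circle
    intensity = patch.astype(float) * mask
    m10 = (xs * intensity).sum()             # first-order moment in x
    m01 = (ys * intensity).sum()             # first-order moment in y
    return float(np.arctan2(m01, m10))
```

A patch that is brighter on its right half yields an angle near 0, and one brighter on its lower half yields an angle near π/2, because the centroid is offset from the corner in that direction.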
