Operational circuit of neural network

A computing circuit technology for neural networks, applied in the field of artificial intelligence. It addresses problems such as lack of flexibility, lack of generalization ability, and lower precision requirements, and achieves the effects of saving hardware resources, reducing multiplication power consumption, and increasing configurability.

Embodiment Construction

[0040] In order to make the purpose, technical solution, and advantages of the present invention clearer, the invention is described in further detail below with reference to the embodiments and the accompanying drawings.

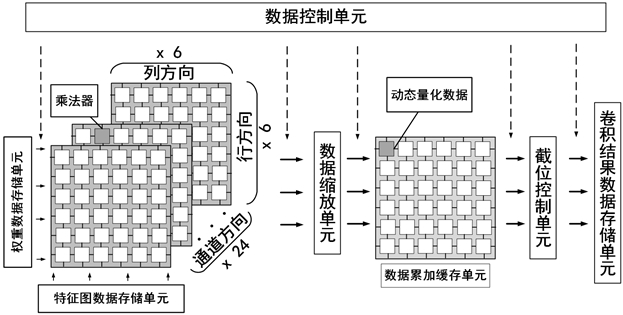

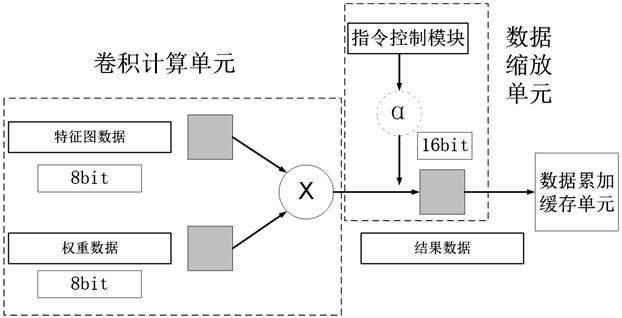

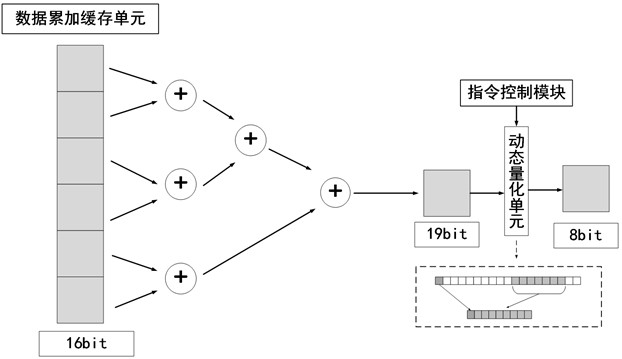

[0041] To realize a high-precision, low-power approximate-computing hardware implementation of the convolution operations involved in a neural network, the computing circuit of the neural network of the present invention includes a data control unit, a weight data storage unit, a feature map data storage unit, a convolution calculation unit, a data scaling unit, a data accumulation buffer unit, a truncation control unit, and a convolution result data storage unit. A schematic diagram of the hardware architecture of the computing circuit is shown in Figure 1; that is, the multiple multipliers shown in Figure 1 are arranged in an array to form the convolution calculation unit of this embodiment. Among them...
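The data flow through the units listed in [0041] can be illustrated with a small software model. This is a minimal sketch and not the patented circuit: the text above names the units but does not say how the scaling and truncation units operate. The sketch assumes the scaling unit is an arithmetic right shift of each product and the truncation unit saturates to a signed output width. All function names and the `shift` and `out_bits` parameters are hypothetical.

```python
from typing import List


def multiplier_array(window: List[int], weights: List[int]) -> List[int]:
    """Convolution calculation unit: one multiplier per (activation, weight) pair."""
    if len(window) != len(weights):
        raise ValueError("window and weights must have the same length")
    return [a * w for a, w in zip(window, weights)]


def scale(products: List[int], shift: int) -> List[int]:
    """Data scaling unit (ASSUMED behavior): arithmetic right shift of each product."""
    return [p >> shift for p in products]


def truncate(value: int, out_bits: int) -> int:
    """Truncation control unit (ASSUMED behavior): saturate to a signed out_bits range."""
    lo = -(1 << (out_bits - 1))
    hi = (1 << (out_bits - 1)) - 1
    return max(lo, min(hi, value))


def conv2d(feature_map: List[List[int]], kernel: List[List[int]],
           shift: int = 0, out_bits: int = 16) -> List[List[int]]:
    """Valid (no padding, stride 1) 2D convolution routed through the modeled units."""
    kh, kw = len(kernel), len(kernel[0])
    flat_kernel = [w for row in kernel for w in row]  # weight data storage
    out_h = len(feature_map) - kh + 1
    out_w = len(feature_map[0]) - kw + 1
    result = []  # convolution result data storage
    for i in range(out_h):
        out_row = []
        for j in range(out_w):
            # Data control: fetch the feature-map window for this output position.
            window = [feature_map[i + di][j + dj]
                      for di in range(kh) for dj in range(kw)]
            products = multiplier_array(window, flat_kernel)
            # Data accumulation buffer: sum the scaled products.
            acc = sum(scale(products, shift))
            out_row.append(truncate(acc, out_bits))
        result.append(out_row)
    return result


if __name__ == "__main__":
    fm = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    k = [[1, 0], [0, 1]]
    print(conv2d(fm, k))  # [[6, 8], [12, 14]]
```

In this model, a larger `shift` trades precision for narrower intermediate values, and a smaller `out_bits` makes saturation more likely. Whether the actual circuit exposes comparable settings cannot be determined from the text above.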
