Class activation mapping target positioning method and system based on convolutional neural network

The invention relates to convolutional neural network and target positioning technology and is applied in the field of class activation mapping target positioning methods and systems. It addresses problems such as limited performance on weakly supervised tasks and achieves the effect of improving accuracy.

Examples

Embodiment 1

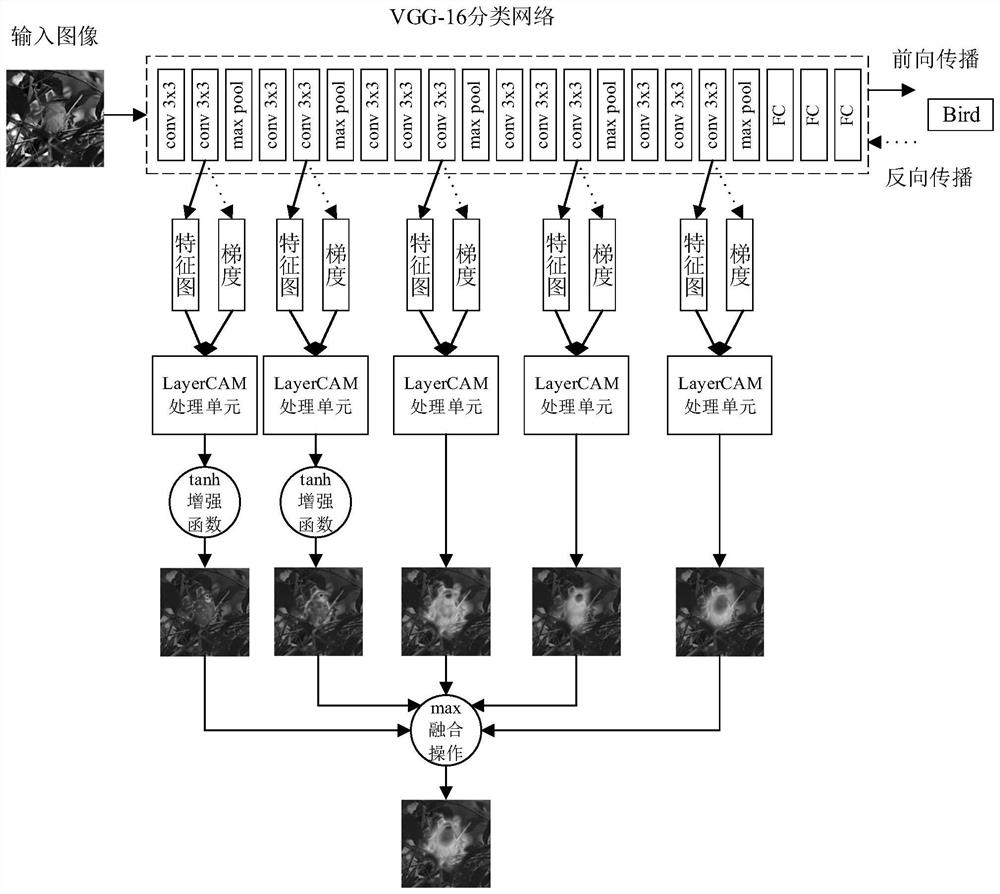

[0043] This embodiment provides a class activation mapping target positioning method based on a convolutional neural network.

[0044] The class activation mapping target positioning method based on a convolutional neural network includes:

[0045] S101: Input the image to be processed into the trained convolutional neural network, perform backpropagation according to the category information, and obtain the gradient corresponding to each feature map of each convolutional layer in the network, wherein each convolutional layer outputs a feature map; each feature map includes C sub-feature maps, where C is a positive integer; and each sub-feature map has a one-to-one corresponding gradient;
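As an illustration of the gradient step in S101, the following is a minimal sketch, not the patented implementation. It assumes a hypothetical toy classification head (global average pooling followed by one linear layer) on top of a single convolutional layer's output, and applies the chain rule by hand to obtain one gradient per sub-feature map for a chosen category. The names `class_score`, `backprop_to_feature_map`, `fc_weights`, and `class_idx` are illustrative assumptions and do not appear in the source.

```python
def class_score(feature_map, fc_weights, class_idx):
    """Class score for a toy head: global average pooling, then a linear layer."""
    score = 0.0
    for c, channel in enumerate(feature_map):
        n = len(channel) * len(channel[0])
        pooled = sum(sum(row) for row in channel) / n
        score += fc_weights[class_idx][c] * pooled
    return score


def backprop_to_feature_map(feature_map, fc_weights, class_idx):
    """Gradient of the class score with respect to every element of each sub-feature map."""
    grads = []
    for c, channel in enumerate(feature_map):
        h, w = len(channel), len(channel[0])
        # Chain rule for the assumed head:
        #   d(score)/d(pooled_c)      = fc_weights[class_idx][c]
        #   d(pooled_c)/d(A_c[i][j])  = 1 / (h * w)
        g = fc_weights[class_idx][c] / (h * w)
        grads.append([[g for _ in range(w)] for _ in range(h)])
    return grads


# Hypothetical data: C = 2 sub-feature maps of size 2x2 and two categories.
feature_map = [
    [[1.0, 2.0], [3.0, 4.0]],
    [[0.5, 0.0], [0.0, 0.5]],
]
fc_weights = [[0.8, -0.4], [0.1, 0.6]]
grads = backprop_to_feature_map(feature_map, fc_weights, class_idx=0)
```

Each entry of `grads` has the same shape as the sub-feature map it belongs to, which matches the one-to-one correspondence described in S101. In a real network the gradients would come from the framework's automatic differentiation across all layers rather than from a hand-written rule.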

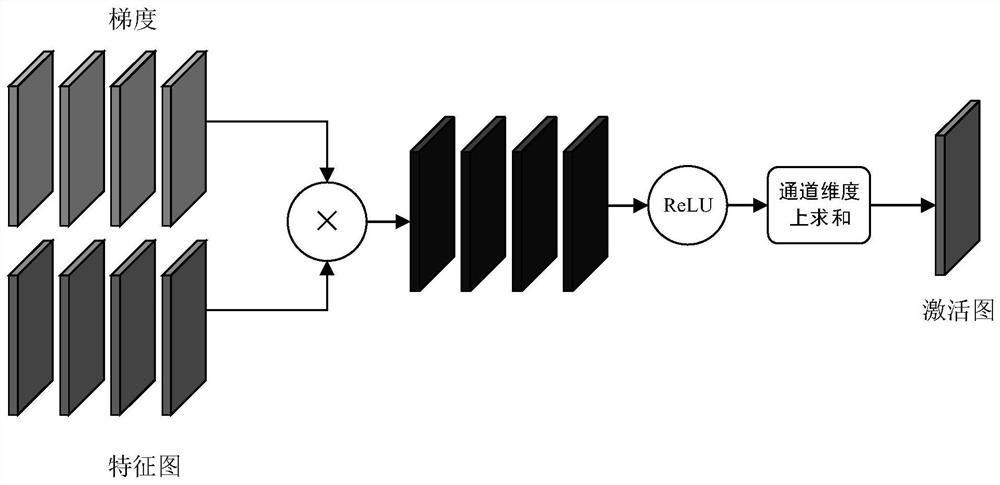

[0046] S102: Select M convolutional layers from the convolutional neural network, and multiply the C sub-feature maps extracted by each of the M convolutional layers by weights, wherein the weights are the gradients corresponding to the feature maps;
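The weighting in S102 can be sketched as follows. This is a minimal illustration under one assumption that the source does not spell out: the multiplication is taken to be element-wise, with each sub-feature map multiplied by a gradient of the same shape. The function name `weight_feature_maps` and the sample values are hypothetical, and the sketch stops at the multiplication because the subsequent processing is not given in the text.

```python
def weight_feature_maps(feature_maps, gradients):
    """Multiply each sub-feature map element-wise by its gradient, layer by layer.

    feature_maps[m][c] and gradients[m][c] are 2-D lists of identical shape
    for selected layer m and sub-feature map c.
    """
    if len(feature_maps) != len(gradients):
        raise ValueError("expected gradients for every selected layer")
    weighted = []
    for layer_maps, layer_grads in zip(feature_maps, gradients):
        if len(layer_maps) != len(layer_grads):
            raise ValueError("each sub-feature map needs exactly one gradient")
        layer_out = []
        for channel, grad in zip(layer_maps, layer_grads):
            layer_out.append(
                [[a * g for a, g in zip(row_a, row_g)]
                 for row_a, row_g in zip(channel, grad)]
            )
        weighted.append(layer_out)
    return weighted


# Hypothetical data: M = 2 selected layers, C = 2 sub-feature maps per layer.
# The two layers have different spatial sizes (2x2 and 1x2).
selected_maps = [
    [[[1.0, 2.0], [3.0, 4.0]], [[1.0, 1.0], [2.0, 0.0]]],
    [[[2.0, 4.0]], [[1.0, 3.0]]],
]
selected_grads = [
    [[[0.5, 0.5], [0.5, 0.5]], [[-1.0, 2.0], [0.0, 1.0]]],
    [[[0.25, -0.5]], [[1.0, 0.0]]],
]
weighted = weight_feature_maps(selected_maps, selected_grads)
```

Keeping the result as a nested list per layer leaves the later aggregation open, so whatever processing follows can consume each layer's weighted sub-feature maps separately.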

[0047] Input the multiplication processing result into ...

Embodiment 2

[0109] This embodiment provides a class activation mapping target positioning system based on a convolutional neural network.

[0110] The class activation mapping target positioning system based on a convolutional neural network includes:

[0111] A gradient calculation module, which is configured to: input the image to be processed into the trained convolutional neural network, perform backpropagation according to the category information, and obtain the gradient corresponding to each feature map of each convolutional layer in the network, wherein each convolutional layer outputs a feature map; each feature map includes C sub-feature maps, where C is a positive integer; and each sub-feature map has a one-to-one corresponding gradient;

[0112] A class activation map acquisition module, which is configured to: select M convolutional layers from the convolutional neural network, and, for the C sub-feature maps extracted by each of the M convolutional layers and the weights, perform multi...

Embodiment 3

[0118] This embodiment also provides an electronic device, including: one or more processors, one or more memories, and one or more computer programs, wherein the processor is connected to the memory and the one or more computer programs are stored in the memory. When the electronic device is running, the processor executes the one or more computer programs stored in the memory, so that the electronic device executes the method described in Embodiment 1 above.

[0119] It should be understood that in this embodiment, the processor can be a central processing unit (CPU), or it can be another general-purpose processor, a digital signal processor (DSP), an application-specific integrated circuit (ASIC), a field-programmable gate array (FPGA) or other programmable logic device, a discrete gate or transistor logic device, a discrete hardware component, etc. A general-purpose processor may be a microprocessor, or the processor may be any conventional processor, o...
