Visual mechanical arm automatic grabbing method and system oriented to moving objects

A technology involving mechanical arms and moving objects, applied in the field of industrial intelligent control, that addresses the problems of low perception accuracy and poor real-time performance and reduces production costs

Examples

Embodiment 1

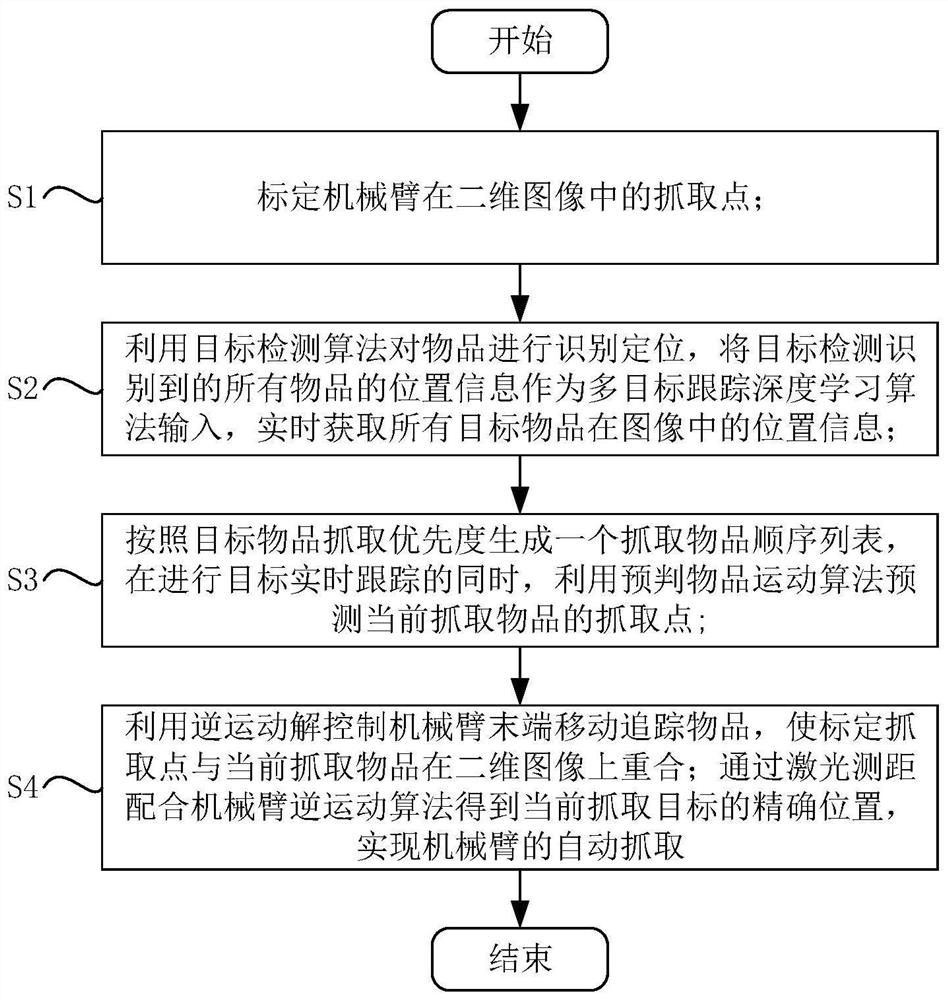

[0081] As shown in Figure 1, the automatic grasping method for a visual manipulator targeting moving objects includes the following steps:

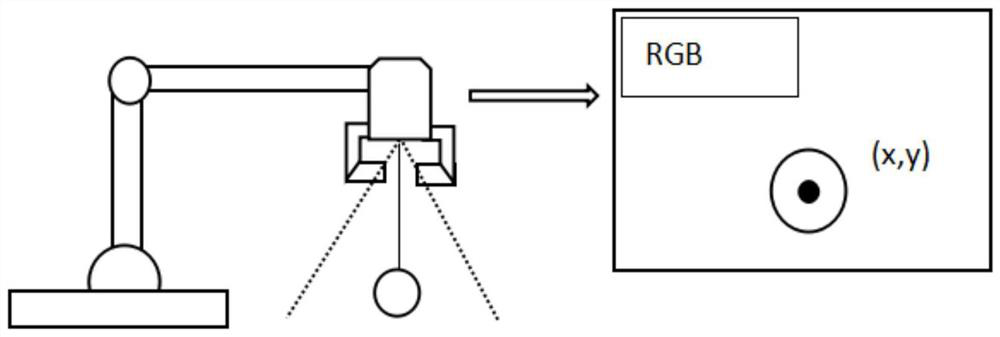

[0082] S1: Calibrate the grasping point of the manipulator in the two-dimensional image;

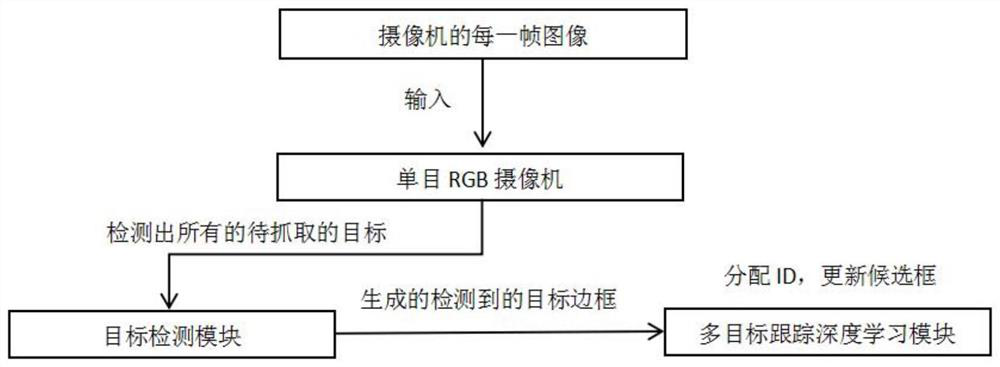

[0083] S2: Use the target detection algorithm to identify and locate the items, then feed the positions of all detected items into the multi-target tracking deep learning algorithm to obtain the positions of all target items in the image in real time;

[0084] S3: Generate an ordered list of items to grasp according to the grasping priority of each target item and, while tracking the targets in real time, use the item motion prediction algorithm to predict the grasping point of the item currently being grasped;

[0085] S4: Use the inverse kinematic solution to control the end of the robotic arm to move and track the object, so that the calibrated grabbing point coincides with the currently grabbed it...
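The inverse kinematic solution named in step S4 can be illustrated with a minimal sketch. The excerpt does not describe the arm's geometry, so this example assumes a planar two-link arm with link lengths `l1` and `l2`, for which a closed-form solution exists; it is not the patent's solver. The function names `two_link_ik` and `forward` are hypothetical.

```python
import math


def two_link_ik(x: float, y: float, l1: float, l2: float) -> tuple[float, float]:
    """Joint angles (radians) that place a planar two-link arm's tip at (x, y)."""
    # The law of cosines gives the cosine of the elbow angle.
    cos_elbow = (x * x + y * y - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if not -1.0 <= cos_elbow <= 1.0:
        raise ValueError("target is outside the arm's reachable workspace")
    elbow = math.acos(cos_elbow)  # one of the two mirror-image solutions
    shoulder = math.atan2(y, x) - math.atan2(
        l2 * math.sin(elbow), l1 + l2 * math.cos(elbow)
    )
    return shoulder, elbow


def forward(shoulder: float, elbow: float, l1: float, l2: float) -> tuple[float, float]:
    # Forward kinematics, used here only to check the inverse solution.
    x = l1 * math.cos(shoulder) + l2 * math.cos(shoulder + elbow)
    y = l1 * math.sin(shoulder) + l2 * math.sin(shoulder + elbow)
    return x, y


# Assumed target (metres) and link lengths, chosen only for illustration.
shoulder, elbow = two_link_ik(0.3, 0.2, l1=0.25, l2=0.25)
tip = forward(shoulder, elbow, l1=0.25, l2=0.25)
```

Feeding the solved angles back through `forward` should return the requested tip position, which is a cheap sanity check before commanding a real arm. A tracking loop would call the solver again each time the predicted grasping point is updated.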

Embodiment 2

[0112] More specifically, on the basis of Embodiment 1 and as shown in Figure 6, the present invention also provides an automatic grasping system for a visual robotic arm targeting moving items, including a robotic arm, a controller, a processor, and a detection device; wherein:

[0113] The control end of the mechanical arm is electrically connected to the controller;

[0114] The detection device is arranged on the mechanical arm, the control end of the detection device is electrically connected to the controller, and the output end of the detection device is electrically connected to the processor;

[0115] The controller is electrically connected with the processor to realize information interaction; wherein:

[0116] The processor is provided with a target detection algorithm, a multi-target tracking deep learning algorithm, an item motion prediction algorithm, and an inverse kinematics algorithm; the specific operating principles of the system are:

[0117] First, the detection eq...
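The priority ordering and motion prediction that the processor performs (step S3 of Embodiment 1) can be sketched as follows. This is an illustration under stated assumptions, not the patent's algorithm: the excerpt specifies neither the priority rule nor the prediction model, so the example assumes items travel along +x, grasps first the item furthest along that axis, and extrapolates with a constant-velocity model from the last two tracked positions. The names `Track`, `grasp_order`, and `predict_position` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Track:
    """One tracked item: its ID and two most recent image positions (pixels)."""
    track_id: int
    prev_xy: tuple[float, float]  # position at time t - dt
    curr_xy: tuple[float, float]  # position at time t


def grasp_order(tracks: list[Track]) -> list[Track]:
    # Assumed priority rule: items furthest along +x leave the workspace
    # soonest, so they come first in the grasp list.
    return sorted(tracks, key=lambda t: t.curr_xy[0], reverse=True)


def predict_position(track: Track, dt: float, lookahead: float) -> tuple[float, float]:
    # Constant-velocity model (an assumption): estimate velocity from the
    # last two observations, then extrapolate `lookahead` seconds ahead.
    vx = (track.curr_xy[0] - track.prev_xy[0]) / dt
    vy = (track.curr_xy[1] - track.prev_xy[1]) / dt
    return (track.curr_xy[0] + vx * lookahead, track.curr_xy[1] + vy * lookahead)


# Made-up tracker output for two items, observed 0.1 s apart.
tracks = [
    Track(1, (100.0, 50.0), (110.0, 50.0)),
    Track(2, (300.0, 80.0), (310.0, 80.0)),
]
queue = grasp_order(tracks)
target = queue[0]
predicted = predict_position(target, dt=0.1, lookahead=0.5)
```

The predicted point is where the arm should aim so that the calibrated grasping point meets the item after the arm's travel time. A Kalman filter would be a common replacement for the two-point velocity estimate when detections are noisy.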
