Robust localization and mapping method and system based on fusion of laser and visual information

A robust localization and mapping method and system based on laser and visual information, in the field of visual technology. It addresses poor localization accuracy, poor mapping quality, and insufficient information, compensating for the lack of loop-closure capability, improving relocalization efficiency, and yielding a precise robot pose.

Detailed Description of Embodiments

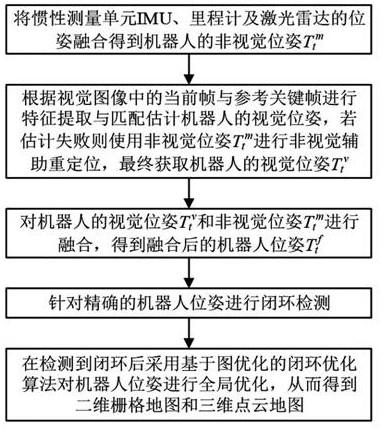

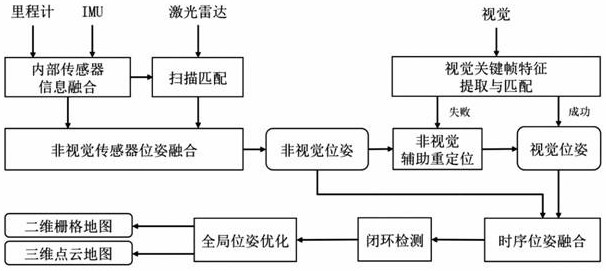

[0051] As shown in Figure 1 and Figure 2, the robust localization and mapping method fusing laser and visual information in this embodiment includes:

[0052] 1) The non-visual pose $T_t^m$ of the robot is obtained by fusing the poses from the inertial measurement unit (IMU), the odometer, and the lidar, which can eliminate the cumulative error that the IMU accumulates over time;

[0053] 2) Features are extracted and matched between the current frame of the visual image and the reference keyframe to estimate the visual pose of the robot. If the estimation fails, the non-visual pose $T_t^m$ is used to perform non-visual-assisted relocalization, finally yielding the visual pose $T_t^v$ of the robot;

[0054] 3) The visual pose $T_t^v$ and the non-visual pose $T_t^m$ of the robot are fused to obtain the fused robot pose $T_t^f$;

[0055] 4) Loop-closure detection is performed on the fused robot pose $T_t^f$, and a loop-closure optimization algo...
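
The text above does not say how the poses are fused, how visual matching is done, or how relocalization works, so the following is only a minimal Python sketch of the control flow of steps 1) to 3) under stated assumptions. Poses are simplified to planar (x, y, θ); both fusions use inverse-variance weighting, a stand-in rather than necessarily the filter used in this method; and `estimate_visual` and `relocalize` are hypothetical callbacks standing in for frame-to-keyframe feature matching and non-visual-assisted relocalization. All names (`Pose2D`, `fuse_poses`, `localize_step`) and the variance parameters are illustrative. Step 4) is not sketched because its description is truncated.

```python
import math
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


@dataclass
class Pose2D:
    """Planar pose (x, y, theta); a simplification of the full pose T_t."""
    x: float
    y: float
    theta: float  # heading in radians


def fuse_poses(poses: Sequence[Pose2D], variances: Sequence[float]) -> Pose2D:
    """Inverse-variance weighted average of pose estimates (assumed fusion rule).

    Headings are averaged on the circle via sin/cos, so +179 deg and -179 deg
    fuse to about 180 deg (pointing backwards) rather than 0 deg.
    """
    if not poses or len(poses) != len(variances) or any(v <= 0 for v in variances):
        raise ValueError("need at least one pose and one positive variance per pose")
    weights = [1.0 / v for v in variances]  # lower variance -> larger weight
    total = sum(weights)
    x = sum(w * p.x for w, p in zip(weights, poses)) / total
    y = sum(w * p.y for w, p in zip(weights, poses)) / total
    s = sum(w * math.sin(p.theta) for w, p in zip(weights, poses))
    c = sum(w * math.cos(p.theta) for w, p in zip(weights, poses))
    return Pose2D(x, y, math.atan2(s, c))


def localize_step(
    imu: Pose2D,
    odom: Pose2D,
    lidar: Pose2D,
    sensor_vars: Sequence[float],
    estimate_visual: Callable[[], Optional[Pose2D]],  # hypothetical matcher
    relocalize: Callable[[Pose2D], Pose2D],  # hypothetical relocalizer
    visual_var: float,
    nonvisual_var: float,
) -> Pose2D:
    # Step 1: non-visual pose T_t^m from the IMU, odometer and lidar poses.
    t_m = fuse_poses([imu, odom, lidar], sensor_vars)
    # Step 2: visual pose T_t^v; None signals that matching failed,
    # in which case relocalization is seeded with T_t^m.
    t_v = estimate_visual()
    if t_v is None:
        t_v = relocalize(t_m)
    # Step 3: fuse T_t^v and T_t^m into the fused pose T_t^f.
    return fuse_poses([t_v, t_m], [visual_var, nonvisual_var])


if __name__ == "__main__":
    # Hypothetical readings where visual matching fails, forcing relocalization.
    fused = localize_step(
        Pose2D(0.0, 0.0, 0.00), Pose2D(0.2, 0.0, 0.02), Pose2D(0.1, 0.0, 0.01),
        sensor_vars=[0.5, 0.2, 0.05],
        estimate_visual=lambda: None,
        relocalize=lambda prior: prior,
        visual_var=0.01,
        nonvisual_var=0.05,
    )
    print(fused)
```

Passing $T_t^m$ into `relocalize` mirrors the fallback in step 2): when matching against the reference keyframe fails, the non-visual pose serves as the starting estimate instead of relocalizing from scratch, which is consistent with the stated aim of improving relocalization efficiency.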
