Pull-up number calculation method based on machine vision

A pull-up counting method based on machine vision, applied in the field of machine-learning visual recognition. It addresses the high misjudgment rate and high labor cost of pull-up counting, and achieves broad applicability, high counting accuracy, and strong robustness.

Embodiment Construction

[0029] In this embodiment, a machine-vision-based method for counting pull-ups is applied in a capture environment where a camera is mounted above a horizontal bar, and is carried out in the following steps:

[0030] Step 1: Face and hand image collection:

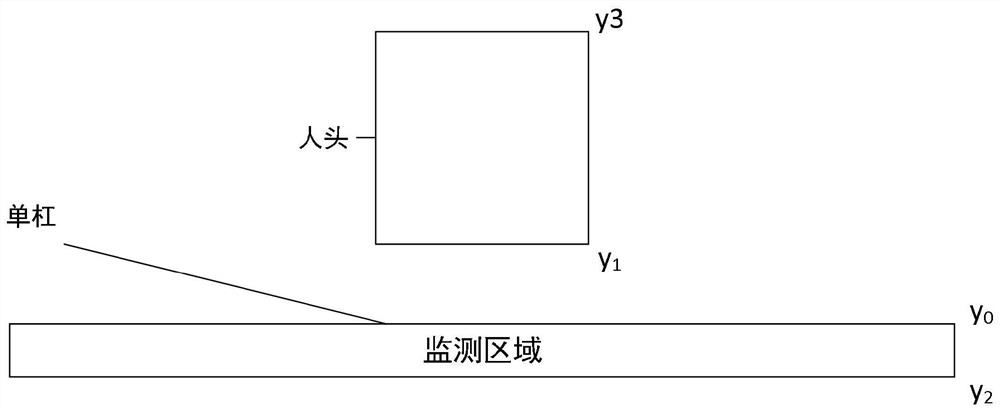

[0031] Use the camera to collect frontal face images and overhand-grip images as the training set for the current tester; Figure 1 is a schematic diagram of the positions of the head and the horizontal bar. After labeling the faces and hands in the training set, feed the training set into the YOLO object detection model for iterative training to obtain the corrected hand image and face image;
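The labeling step above can be illustrated with a minimal sketch. It assumes the plain-text label format commonly used with YOLO models (one line per object: class id, then box center and size normalized by the image dimensions). The class ids, the function name `to_yolo_label`, and the example box are hypothetical; the source only states that faces and hands are marked.

```python
# Hypothetical class ids; the source does not specify them.
CLASS_IDS = {"face": 0, "hand": 1}


def to_yolo_label(cls_name, box, img_w, img_h):
    """Convert a pixel box (x_min, y_min, x_max, y_max) into one YOLO label line.

    The output is "class cx cy w h", with all four box values normalized
    to the range [0, 1] by the image width and height.
    """
    x_min, y_min, x_max, y_max = box
    cx = (x_min + x_max) / 2 / img_w   # normalized box center, x
    cy = (y_min + y_max) / 2 / img_h   # normalized box center, y
    w = (x_max - x_min) / img_w        # normalized box width
    h = (y_max - y_min) / img_h        # normalized box height
    return f"{CLASS_IDS[cls_name]} {cx:.6f} {cy:.6f} {w:.6f} {h:.6f}"


# Example: a face box in a 640x480 frame (values are illustrative only).
print(to_yolo_label("face", (100, 50, 300, 250), 640, 480))
```

Each annotated image would get one such line per marked face or hand before training.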

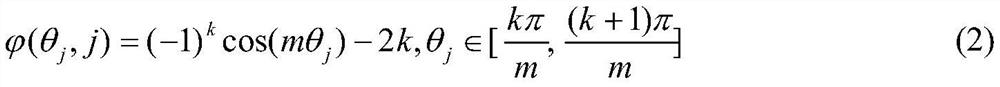

[0032] Step 2: Detect on the corrected hand image whether the hand is gripping the bar. If the arm is close to 180°, that is, if the angle satisfies $|X_1 - X_0| > G$, the hand is judged to be gripping the bar correctly; otherwise, return to Step 1. Here $X_1$ denotes the angle of the upper part of the arm and $X_0$ denotes the ang...
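The grip test in Step 2 reduces to a single threshold comparison, which might be sketched as follows. The two angles are taken as already-measured inputs in degrees, since the text defining them is cut off here; the function name `grip_is_correct` and the numeric values are assumptions for illustration only, and the value of G is not given in the source.

```python
def grip_is_correct(x1_deg: float, x0_deg: float, g_deg: float) -> bool:
    """Return True when |X1 - X0| > G, the Step 2 condition.

    x1_deg, x0_deg: the two angles from Step 2, in degrees.
    g_deg: the threshold G (hypothetical value chosen by the caller).
    """
    return abs(x1_deg - x0_deg) > g_deg


# Illustrative values only: a nearly straight arm passes, a bent arm does not.
print(grip_is_correct(175.0, 5.0, 160.0))   # |175 - 5| = 170 > 160
print(grip_is_correct(120.0, 30.0, 160.0))  # |120 - 30| = 90, not > 160
```

When the check fails, the method returns to Step 1 as described above.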
