Multi-target tracking method and system suitable for embedded terminal

A multi-target tracking technology for embedded terminals, applied in neural learning methods, character and pattern recognition, image enhancement, etc. It addresses problems such as the inability to achieve real-time tracking and the limitations of hardware equipment, and it reduces the computing scale and the hardware performance required, giving it high practicability and market promotion value.


Examples

Embodiment 1

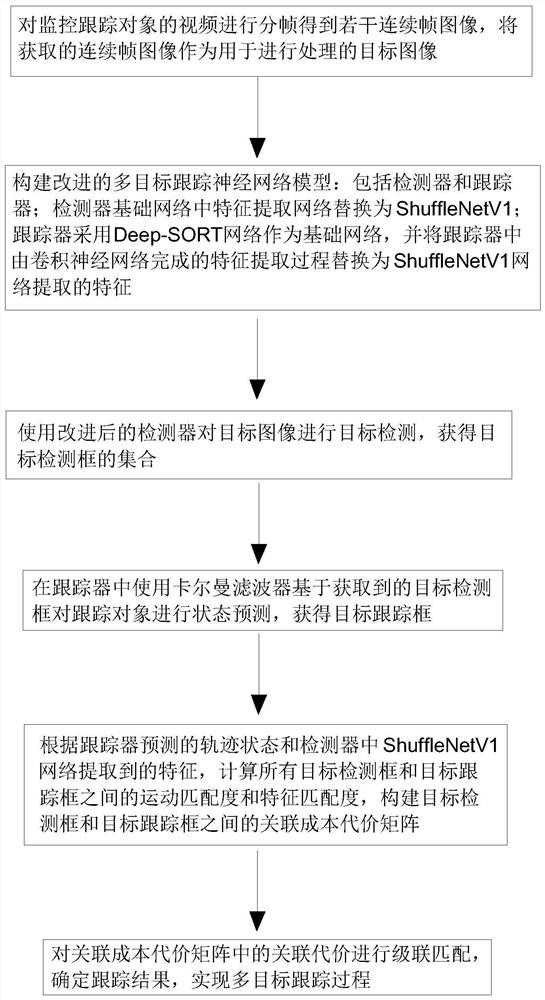

[0074] As shown in Figure 1, this embodiment provides a multi-target tracking method suitable for embedded terminals. The method includes the following steps:

[0075] S1: Split the video of the monitored and tracked objects into frames to obtain a sequence of consecutive frame images, and use these consecutive frames as the target images for processing;
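Step S1 can be sketched as a loop that pulls frames from a video source until it is exhausted. This is an illustration, not the patent's implementation: it assumes a reader callable with the same `(ok, frame)` return contract as OpenCV's `cv2.VideoCapture.read()`, and the function name `frame_video` and the `stride` parameter (keep every n-th frame) are hypothetical.

```python
from typing import Any, Callable, List, Tuple

# Hypothetical reader type: returns (ok, frame), mirroring cv2.VideoCapture.read().
Reader = Callable[[], Tuple[bool, Any]]


def frame_video(read: Reader, stride: int = 1) -> List[Tuple[int, Any]]:
    """Split a video into consecutive frames (step S1), returning (index, frame) pairs.

    `stride` is an assumed option: 1 keeps every frame, 2 keeps every other frame, etc.
    """
    if stride < 1:
        raise ValueError("stride must be >= 1")
    frames: List[Tuple[int, Any]] = []
    index = 0
    while True:
        ok, frame = read()
        if not ok:  # end of stream or read failure
            break
        if index % stride == 0:
            frames.append((index, frame))
        index += 1
    return frames


if __name__ == "__main__":
    # Fake source standing in for a real video: five "frames" labelled f0..f4.
    source = iter(f"f{i}" for i in range(5))

    def fake_read() -> Tuple[bool, Any]:
        frame = next(source, None)
        return (frame is not None, frame)

    print(frame_video(fake_read, stride=2))  # [(0, 'f0'), (2, 'f2'), (4, 'f4')]
```

With OpenCV installed, `cap = cv2.VideoCapture(path)` followed by `frame_video(cap.read)` would feed real decoded frames through the same loop.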

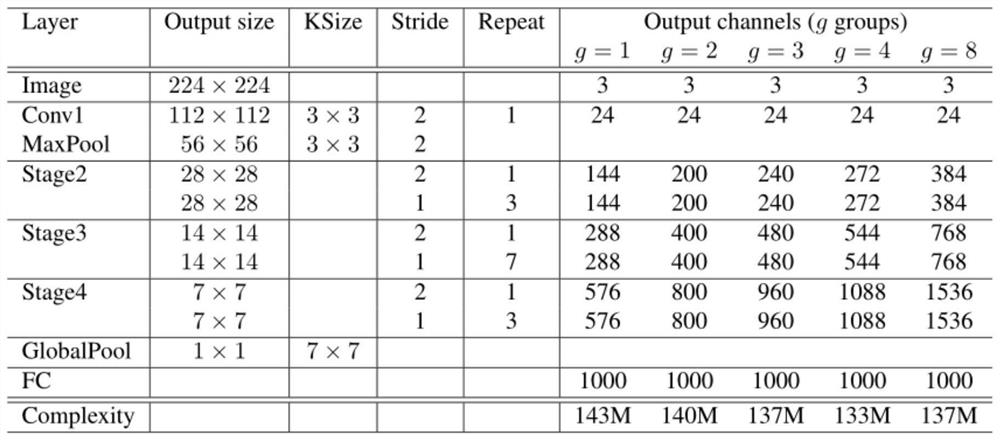

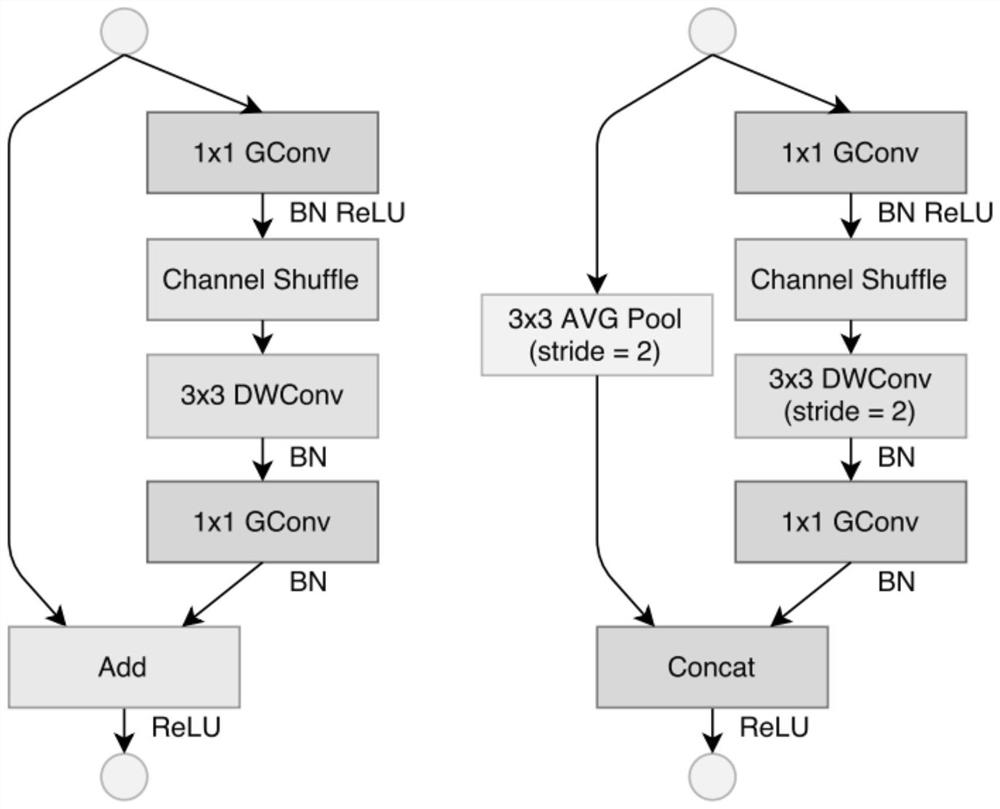

[0076] S2: Build an improved multi-target tracking neural network model comprising a detector and a tracker. The detector uses the YOLOv4 network as its base network, with the CSPDarkNet53 feature extraction network in the YOLOv4 structure replaced by the lightweight backbone network ShuffleNetV1. The tracker uses the Deep-SORT network as its base network, with the feature extraction performed by the convolutional neural network in the tracker replaced by features extracted by the ShuffleNetV1 network.
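ShuffleNetV1's two main cost-saving ideas, grouped 1×1 (pointwise) convolutions and the channel shuffle that lets information flow between groups, can be illustrated without a deep-learning framework. The sketch below operates on a plain list of channel indices rather than feature tensors; the function names are hypothetical and the parameter count ignores biases. It shows the general ShuffleNetV1 mechanism, not the specific backbone configuration used in this patent, which the excerpt does not give.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def channel_shuffle(channels: Sequence[T], groups: int) -> List[T]:
    """ShuffleNetV1 channel shuffle: reshape to (groups, n), transpose to (n, groups), flatten."""
    if groups < 1 or len(channels) % groups != 0:
        raise ValueError("channel count must be divisible by groups")
    per_group = len(channels) // groups
    # Row g of the (groups, per_group) view holds channels[g*per_group:(g+1)*per_group];
    # reading the view column by column is the transpose-then-flatten step.
    return [channels[g * per_group + k] for k in range(per_group) for g in range(groups)]


def pointwise_params(c_in: int, c_out: int, groups: int = 1) -> int:
    """Weights in a 1x1 convolution split into `groups` groups (bias ignored)."""
    if groups < 1 or c_in % groups or c_out % groups:
        raise ValueError("channel counts must be divisible by groups")
    # Each group maps c_in/groups input channels to c_out/groups output channels.
    return groups * (c_in // groups) * (c_out // groups)


if __name__ == "__main__":
    print(channel_shuffle(list(range(6)), groups=2))  # [0, 3, 1, 4, 2, 5]
    print(pointwise_params(240, 240, groups=1))       # 57600
    print(pointwise_params(240, 240, groups=3))       # 19200
```

Grouping divides the pointwise weight count by `groups` (57600 / 3 = 19200 here), and the shuffle ensures that the next grouped layer receives channels from every group of the previous one instead of staying confined to a single group.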

[0077] The CSPDarkN...

Embodiment 2

[0126] As shown in Figure 5, this embodiment also provides a multi-target tracking system suitable for embedded terminals. The system adopts the aforementioned multi-target tracking method suitable for embedded terminals: based on the consecutive target images obtained after video framing, it identifies, detects and continuously tracks the target objects, and it matches and associates the detected and tracked target objects. The multi-target tracking system includes a video preprocessing module, a multi-target tracking neural network module, an association cost matrix building module, and a cascade matching module.
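The cost-matrix and matching modules listed above follow the Deep-SORT tracker named in step S2. The sketch below is a simplified, hypothetical illustration rather than the patent's implementation: the cost is only the cosine distance between appearance feature vectors (standard Deep-SORT additionally gates candidates by the Mahalanobis distance of Kalman-filter predictions), the threshold `max_dist=0.2` is an assumed value, and each cascade level uses a greedy lowest-cost assignment in place of the Hungarian algorithm that Deep-SORT uses. As in Deep-SORT's matching cascade, tracks matched more recently get priority over tracks that have gone unmatched for longer.

```python
import math
from typing import List, Sequence, Tuple

INF = float("inf")


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """1 - cosine similarity; 0 means the two feature vectors point the same way."""
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    if na == 0.0 or nb == 0.0:
        return 1.0  # treat an all-zero feature as uninformative
    return 1.0 - sum(x * y for x, y in zip(a, b)) / (na * nb)


def build_cost_matrix(track_feats, det_feats, max_dist: float = 0.2) -> List[List[float]]:
    """Rows = tracks, columns = detections; pairs above max_dist (assumed) are gated to INF."""
    cost = []
    for t in track_feats:
        row = []
        for d in det_feats:
            c = cosine_distance(t, d)
            row.append(c if c <= max_dist else INF)
        cost.append(row)
    return cost


def matching_cascade(cost, track_ages, num_dets, max_age=30):
    """Match tracks level by level, most recently updated tracks first.

    track_ages[i] is the number of frames since track i was last matched
    (1 = matched in the previous frame). Returns (matches, unmatched_tracks,
    unmatched_dets), where matches are (track_index, detection_index) pairs.
    """
    unmatched_dets = set(range(num_dets))
    matches: List[Tuple[int, int]] = []
    for level in range(1, max_age + 1):
        if not unmatched_dets:
            break
        level_tracks = [i for i, age in enumerate(track_ages) if age == level]
        # Greedy stand-in for the Hungarian algorithm: take the cheapest finite pairs first.
        candidates = sorted(
            (cost[i][j], i, j)
            for i in level_tracks
            for j in unmatched_dets
            if math.isfinite(cost[i][j])
        )
        used_tracks = set()
        for _, i, j in candidates:
            if i in used_tracks or j not in unmatched_dets:
                continue
            matches.append((i, j))
            used_tracks.add(i)
            unmatched_dets.discard(j)
    matched = {i for i, _ in matches}
    unmatched_tracks = [i for i in range(len(track_ages)) if i not in matched]
    return matches, unmatched_tracks, sorted(unmatched_dets)


if __name__ == "__main__":
    tracks = [[1.0, 0.0], [1.0, 0.0]]  # two tracks with the same appearance
    dets = [[1.0, 0.0]]                # one detection that matches both
    cost = build_cost_matrix(tracks, dets)
    # Track 1 was matched last frame and track 0 three frames ago, so track 1 wins.
    print(matching_cascade(cost, track_ages=[3, 1], num_dets=len(dets)))
    # ([(1, 0)], [0], [])
```

Unmatched detections would typically start new tentative tracks, and unmatched tracks older than `max_age` would be deleted; those lifecycle steps are outside this sketch.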

[0127] The video preprocessing module splits the video of the monitored and tracked objects into frames and uses the resulting consecutive frame images as the target images for multi-target tracking, forming a sample data set.

[0128] The multi-target tracking neural network module includes a dete...
