Real-time high-precision detection method for appearance defects of power adapter

A power adapter appearance-defect detection technology, applied in the field of power adapter appearance defect detection. It addresses problems such as the difficulty of collecting defect samples, the random appearance characteristics of defects, and the inability of existing methods to replace manual inspection. It reduces the amount of computation, extends the detection range, and improves detection accuracy.


Examples

Embodiment 1

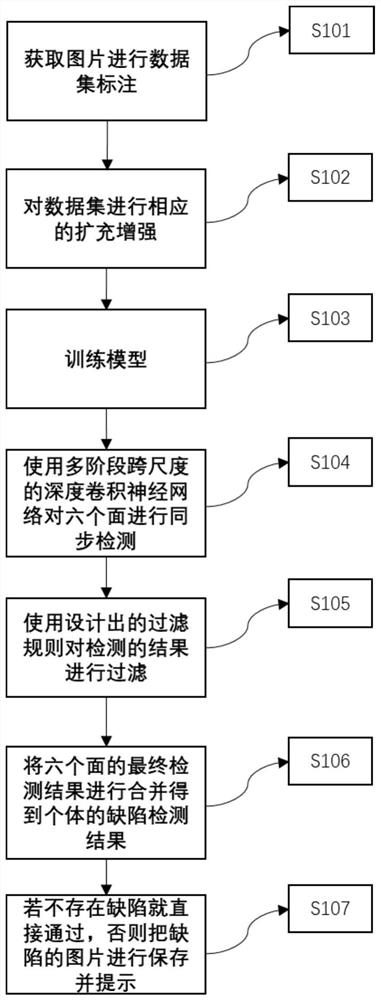

[0056] As shown in Figure 1, the real-time high-precision detection method for appearance defects of a power adapter includes the following steps:

[0057] S101. Acquire high-definition images of the power adapter and annotate them:

[0058] First, defects must be judged on each of the six faces of the power adapter, one face at a time. Three high-definition cameras, mounted to the left of, to the right of, and directly above the production line, capture three different faces in a single shot. After a robotic arm flips the adapter, images of the remaining three faces are captured, so that high-definition images of all six faces are obtained. The images are then annotated to record the location and type of each defect.
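The two-pass capture and annotation in S101 can be sketched as a small data model. This is a minimal illustration under stated assumptions: the face names (especially `front`, `back`, `bottom` for the post-flip pass), the `DefectLabel` record, and the helpers `collect_faces` and `validate_label` are hypothetical; the source only specifies three cameras (left, right, above), one robot-arm flip, and labels carrying defect location and type.

```python
from dataclasses import dataclass

# Hypothetical face names. The source says only that the cameras sit left,
# right, and directly above the line, and that a flip exposes the other three faces.
FIRST_PASS = ("left", "right", "top")
SECOND_PASS = ("front", "back", "bottom")  # assumed faces visible after the flip
ALL_FACES = set(FIRST_PASS) | set(SECOND_PASS)


@dataclass(frozen=True)
class DefectLabel:
    face: str                        # which of the six faces carries the defect
    box: tuple[int, int, int, int]   # (x_min, y_min, x_max, y_max) in pixels
    defect_type: str                 # defect class name (names are hypothetical)


def collect_faces(first_shot: dict, second_shot: dict) -> dict:
    """Merge the two three-camera captures into one image per face."""
    if set(first_shot) != set(FIRST_PASS) or set(second_shot) != set(SECOND_PASS):
        raise ValueError("each pass must contain exactly its three faces")
    images = {**first_shot, **second_shot}
    assert set(images) == ALL_FACES  # all six faces are now covered
    return images


def validate_label(label: DefectLabel, width: int, height: int) -> bool:
    """Check that an annotation names a real face and lies inside the image."""
    x0, y0, x1, y1 = label.box
    return (label.face in ALL_FACES
            and 0 <= x0 < x1 <= width
            and 0 <= y0 < y1 <= height)
```

Keeping the face name on every label makes it easy to check, before training, that each adapter has been inspected on all six faces.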

[0059] S102. Expand and enhance the data set:

[0060] Extract the defective regions from the images, then expand and enhance the defect data set. The main expansion and enhancement methods are basic t...
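Cropping a defect region and generating extra training samples from it might look like the sketch below. The source's list of expansion methods is truncated, so the specific transforms used here (flips, a 90-degree rotation, brightness jitter in the range 0.8 to 1.2) and the helper names `crop_defect` and `basic_augment` are assumptions, not the patent's own list.

```python
import numpy as np


def crop_defect(image: np.ndarray, box: tuple[int, int, int, int]) -> np.ndarray:
    """Cut the annotated defect region (x_min, y_min, x_max, y_max) out of an HxWxC image."""
    x0, y0, x1, y1 = box
    return image[y0:y1, x0:x1].copy()


def basic_augment(patch: np.ndarray, rng: np.random.Generator) -> list[np.ndarray]:
    """Return several transformed copies of one defect patch.

    The transform set is an assumption; the source's own list is truncated.
    """
    out = [
        patch[:, ::-1],        # horizontal flip
        patch[::-1, :],        # vertical flip
        np.rot90(patch, k=1),  # 90-degree rotation (swaps height and width)
    ]
    # Brightness jitter: scale intensities by a random factor, clip to the uint8 range.
    factor = rng.uniform(0.8, 1.2)
    out.append(np.clip(patch.astype(np.float32) * factor, 0, 255).astype(np.uint8))
    return out
```

Each augmented patch keeps its original defect type, so one annotated defect yields several labelled samples, which is the point of S102 given that defect samples are hard to collect.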

Embodiment 2

[0072] Building on Embodiment 1, and as shown in Figure 2, the specific operations for expanding and enhancing the data set in step S102 are as follows:

[0073] S201. Preprocess the input image:

[0074] Preprocess the input image and resize it to the input size required by the feature-multiplexing convolutional network shown in Figure 5. This preliminary processing also reduces the amount of subsequent computation.
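The resizing in S201 can be sketched with NumPy alone. The 416 × 416 input size, the nearest-neighbour interpolation, and the scaling of pixels to [0, 1] are assumptions for illustration; the source does not state the network's input size or its normalization. `resize_nearest` and `preprocess` are hypothetical helper names.

```python
import numpy as np

# Hypothetical (height, width); the source does not give the network's input size.
INPUT_SIZE = (416, 416)


def resize_nearest(image: np.ndarray, size: tuple[int, int]) -> np.ndarray:
    """Nearest-neighbour resize of an HxW or HxWxC array to size=(height, width)."""
    out_h, out_w = size
    in_h, in_w = image.shape[:2]
    # Map each output row/column back to the nearest-lower source row/column.
    rows = np.arange(out_h) * in_h // out_h
    cols = np.arange(out_w) * in_w // out_w
    return image[rows[:, None], cols[None, :]]


def preprocess(image: np.ndarray, size: tuple[int, int] = INPUT_SIZE) -> np.ndarray:
    """Resize to the network input size and scale 8-bit pixels to [0, 1]."""
    resized = resize_nearest(image, size)
    return resized.astype(np.float32) / 255.0
```

As an illustration of the computational saving: if a camera produced 1920 × 1080 frames (2,073,600 pixels), resizing to 416 × 416 (173,056 pixels) would leave roughly 12 times fewer pixels for the network to process.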

[0075] S202. Feed the preprocessed image into the feature-multiplexing convolutional network:

[0076] Different convolution kernels are used to extract features from the image. To reduce processing time, a feature-multiplexing convolution structure is designed, which substantially reduces the number of layers the network needs.
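One common reading of "feature multiplexing" is DenseNet-style feature reuse, in which every layer receives the concatenation of all earlier feature maps, so later layers need not recompute features that already exist. The sketch below assumes that reading; the source does not define the block. To stay short it uses 1 × 1 convolutions with random weights instead of learned k × k kernels, and the names `conv1x1_relu`, `feature_reuse_block`, `num_layers`, and `growth` are hypothetical.

```python
import numpy as np


def conv1x1_relu(x: np.ndarray, weight: np.ndarray) -> np.ndarray:
    """1x1 convolution (a per-pixel channel mix) followed by ReLU.

    x: (C_in, H, W), weight: (C_out, C_in) -> returns (C_out, H, W).
    """
    return np.maximum(np.einsum("oc,chw->ohw", weight, x), 0.0)


def feature_reuse_block(x: np.ndarray, num_layers: int, growth: int,
                        rng: np.random.Generator) -> np.ndarray:
    """DenseNet-style block: each layer sees the concatenation of all earlier outputs."""
    features = [x]
    for _ in range(num_layers):
        stacked = np.concatenate(features, axis=0)  # reuse every earlier feature map
        # Random weights stand in for trained ones in this illustration.
        w = rng.standard_normal((growth, stacked.shape[0])) * 0.1
        features.append(conv1x1_relu(stacked, w))
    # Output keeps the input channels plus `growth` new channels per layer.
    return np.concatenate(features, axis=0)
```

Because each layer only adds `growth` new channels and reuses everything before it, a shallow block can still expose both low- and higher-level features to the next stage.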

[0077] S203. Fuse features with the cyclic feature pyramid:

[0078] The information finally extracted by...
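The source's description of the cyclic feature pyramid is truncated, so the sketch below is only an assumed interpretation: a standard top-down feature-pyramid pass (upsample the coarser level by 2 and add it to the finer one), repeated for several cycles with each pass's output fed back onto the backbone features. The feedback rule, the equal channel count across levels, and the names `upsample2`, `top_down_fuse`, `cyclic_pyramid`, and `cycles` are hypothetical.

```python
import numpy as np


def upsample2(x: np.ndarray) -> np.ndarray:
    """Nearest-neighbour 2x upsampling of a (C, H, W) map."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def top_down_fuse(levels: list[np.ndarray]) -> list[np.ndarray]:
    """One FPN-style top-down pass; levels are ordered fine -> coarse with equal channels."""
    fused = [None] * len(levels)
    fused[-1] = levels[-1]
    for i in range(len(levels) - 2, -1, -1):
        # Add the upsampled, semantically stronger coarse map to the finer level.
        fused[i] = levels[i] + upsample2(fused[i + 1])
    return fused


def cyclic_pyramid(levels: list[np.ndarray], cycles: int = 2) -> list[np.ndarray]:
    """Repeat the top-down pass, feeding each pass's output back into the next one."""
    if cycles < 1:
        raise ValueError("cycles must be at least 1")
    fused = top_down_fuse(levels)
    for _ in range(cycles - 1):
        # Assumed feedback rule: add the previous fused maps onto the backbone features.
        fused = top_down_fuse([c + f for c, f in zip(levels, fused)])
    return fused
```

A real network would insert learned convolutions after each addition; they are omitted here so the data flow between pyramid levels stays visible.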

Embodiment 3

[0088] Building on Embodiments 1 and 2, and as shown in Figure 4, the specific operations performed when the preprocessed image is fed into the feature-multiplexing convolutional network in step S202 are as follows:

[0089] S301. Input the preprocessed image:

[0090] Preprocess the input image and resize it to the input size required by the feature-multiplexing convolutional network. This initial processing also reduces the amount of subsequent computation.

[0091] S302. Use the first convolution block to extract shallow features:

[0092] A deformable convolution kernel is used to extract features, and a pooling operation is applied after the convolution. The output size after pooling is given by:

[0093] $$W_1 = \frac{W - F}{S} + 1, \qquad H_1 = \frac{H - F}{S} + 1$$

[0094] Here the input to the pooling operation has width $W$ and height $H$, $F$ is the size of the convolution kernel, $S$ is the pooling stride, $W_1$ is the output width after pooling, and $H_1$ is the output height after poolin...
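The first convolution block of S302 (deformable convolution followed by pooling) can be sketched for a single-channel image. This assumes the standard deformable-convolution scheme, where each of the 3 × 3 kernel taps samples the input at its regular grid position plus a per-location offset (dy, dx) using bilinear interpolation, and it assumes max pooling with no padding, so the pooled size follows the no-padding relation W_1 = (W − F)/S + 1. In a trained network the offsets come from a separate convolution; here they are simply passed in. The helper names are hypothetical.

```python
import numpy as np


def bilinear(img: np.ndarray, y: float, x: float) -> float:
    """Bilinearly interpolate img at fractional (y, x); pixels outside the image count as 0."""
    h, w = img.shape
    y0, x0 = int(np.floor(y)), int(np.floor(x))
    val = 0.0
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                val += (1 - abs(y - yy)) * (1 - abs(x - xx)) * img[yy, xx]
    return val


def deformable_conv3x3(img: np.ndarray, weight: np.ndarray,
                       offsets: np.ndarray) -> np.ndarray:
    """img (H, W), weight (3, 3), offsets (H-2, W-2, 3, 3, 2) holding (dy, dx) per tap."""
    h, w = img.shape
    out = np.zeros((h - 2, w - 2))
    for i in range(h - 2):
        for j in range(w - 2):
            cy, cx = i + 1, j + 1  # centre of the 3x3 window (no padding, stride 1)
            acc = 0.0
            for ky in range(3):
                for kx in range(3):
                    dy, dx = offsets[i, j, ky, kx]
                    # Sample at the regular tap position shifted by the learned offset.
                    acc += weight[ky, kx] * bilinear(img, cy + ky - 1 + dy, cx + kx - 1 + dx)
            out[i, j] = acc
    return out


def max_pool(x: np.ndarray, f: int, s: int) -> np.ndarray:
    """Max pooling with window f and stride s; output is ((H-f)//s+1, (W-f)//s+1)."""
    h, w = x.shape
    h1, w1 = (h - f) // s + 1, (w - f) // s + 1
    out = np.empty((h1, w1))
    for i in range(h1):
        for j in range(w1):
            out[i, j] = x[i * s:i * s + f, j * s:j * s + f].max()
    return out
```

With all offsets set to zero, the block reduces to an ordinary 3 × 3 convolution, which is a convenient sanity check. As a worked example of the size relation, a 416 × 416 map pooled with F = 2 and S = 2 gives (416 − 2)/2 + 1 = 208, i.e. a 208 × 208 output.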
