Time-of-flight depth image iterative optimization method based on a convolutional neural network

The invention applies convolutional neural networks to time-of-flight (ToF) depth imaging in the field of 3D vision. It addresses problems such as difficult packaging, measurement deviation, and low reliability and accuracy, with the stated effects of eliminating various errors, improving accuracy, and broadening application prospects.

Detailed Description of Embodiments

[0083] The present invention will be described in further detail below in conjunction with the accompanying drawings and specific embodiments.

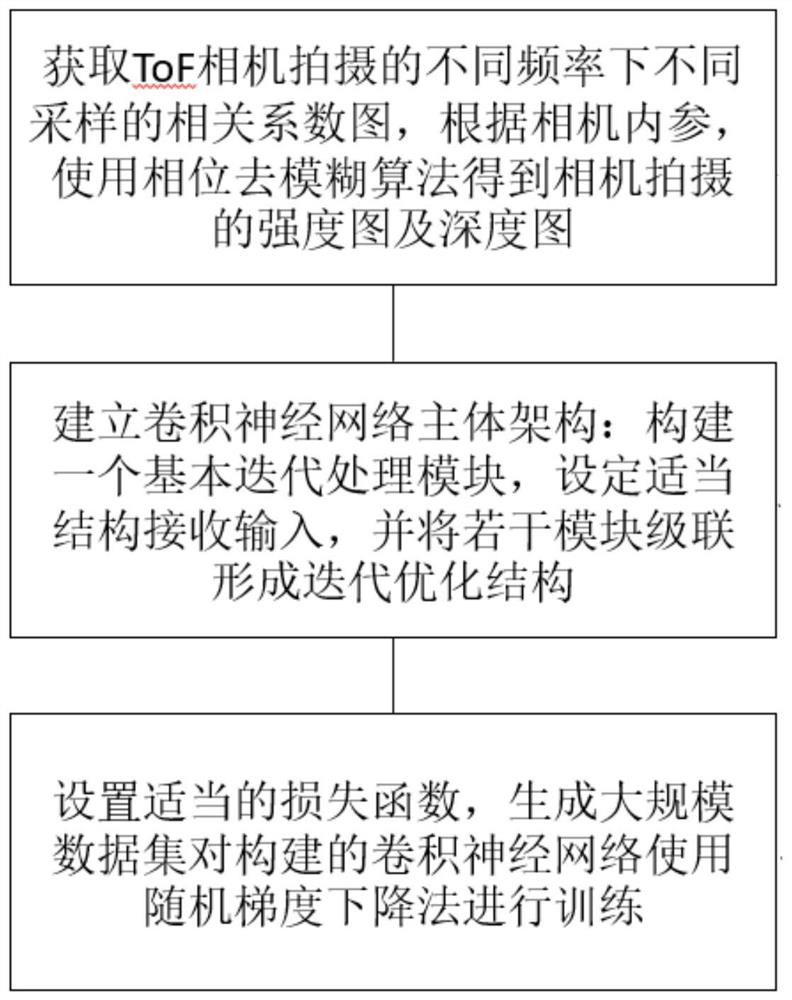

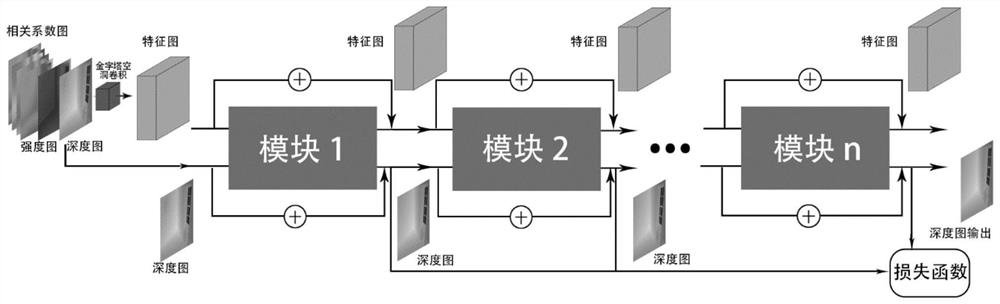

[0084] As shown in Figure 1, the convolutional-neural-network-based iterative optimization method for time-of-flight depth images according to the present invention comprises the following steps:

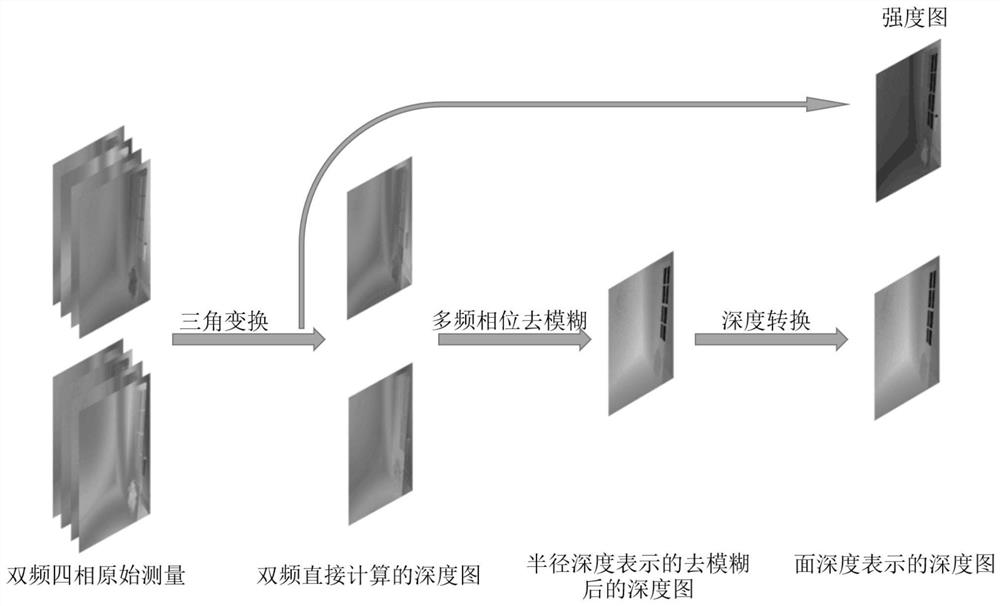

[0085] Step 1: As shown in Figure 2, a basic trigonometric transformation and a multi-frequency phase unwrapping algorithm are applied to the correlation maps captured by the ToF camera to obtain an initial depth map and a reflection intensity map.
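For a four-sample measurement, the "basic trigonometric transformation" is commonly the four-bucket formula sketched below. This is a minimal per-pixel sketch assuming the correlation model c_k = B + A·cos(φ + kπ/2), with samples taken at phase offsets 0, π/2, π and 3π/2; the function name `four_phase_decode`, the sample ordering and the sign convention are illustrative assumptions, not taken from the patent, and real sensors may order or sign the samples differently. The amplitude A is taken here as the reflection intensity.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def four_phase_decode(c0, c1, c2, c3, f_mod):
    """Decode one pixel's four correlation samples (assumed model:
    c_k = B + A*cos(phi + k*pi/2)) at modulation frequency f_mod in Hz."""
    i = c0 - c2  # equals 2*A*cos(phi) under the assumed model
    q = c3 - c1  # equals 2*A*sin(phi) under the assumed model
    phase = math.atan2(q, i) % (2 * math.pi)  # wrapped phase in [0, 2*pi)
    amplitude = 0.5 * math.hypot(i, q)        # reflection intensity A
    offset = 0.25 * (c0 + c1 + c2 + c3)       # background level B
    # Round trip: phase = 4*pi*f*d/c, so d = c*phase/(4*pi*f); still wrapped.
    depth = SPEED_OF_LIGHT * phase / (4 * math.pi * f_mod)
    return phase, amplitude, offset, depth
```

At f_mod = 20 MHz the wrapped depth repeats every c/(2·f_mod) ≈ 7.49 m, so a single frequency cannot tell a target at 1 m from one at about 8.49 m; this ambiguity is what the multi-frequency step resolves.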

[0086] The ToF camera operates in a dual-frequency, four-sample mode: it emits amplitude-modulated continuous waves at two different frequencies, and the basic trigonometric transformation and the multi-frequency phase unwrapping algorithm are applied to the measurements at each frequency to obtain an initial depth map and a reflection intensity ma...
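This section does not spell out which multi-frequency phase unwrapping algorithm is used. One common approach, sketched here as an assumption, is a brute-force search over integer wrap counts: each wrapped phase yields a ladder of candidate distances spaced by that frequency's unambiguous range c/(2f), and the pair of candidates from the two frequencies that agrees best is kept. The helper names, the simple averaging of the two candidates, and the example frequencies (80 MHz and 60 MHz) are hypothetical.

```python
import itertools
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def candidate_distances(d_wrapped, r_amb, max_range):
    """Distances d_wrapped + n * r_amb (n = 0, 1, 2, ...) that lie below max_range."""
    out = []
    d = d_wrapped
    while d < max_range:
        out.append(d)
        d += r_amb
    return out


def unwrap_dual_frequency(phi1, phi2, f1, f2, max_range):
    """Resolve the ambiguity of two wrapped phases (radians, in [0, 2*pi))
    measured at modulation frequencies f1 and f2 (Hz).

    Brute-force search: every integer wrap count gives a candidate distance
    per frequency; the pair of candidates that agree best is kept and averaged.
    """
    r1 = SPEED_OF_LIGHT / (2 * f1)  # single-frequency unambiguous range at f1
    r2 = SPEED_OF_LIGHT / (2 * f2)  # single-frequency unambiguous range at f2
    c1 = candidate_distances(r1 * phi1 / (2 * math.pi), r1, max_range)
    c2 = candidate_distances(r2 * phi2 / (2 * math.pi), r2, max_range)
    d1, d2 = min(itertools.product(c1, c2), key=lambda p: abs(p[0] - p[1]))
    return 0.5 * (d1 + d2)
```

With f1 = 80 MHz and f2 = 60 MHz, the combined unambiguous range is c/(2·gcd(f1, f2)) = c/(2·20 MHz) ≈ 7.49 m, far beyond either single-frequency range (about 1.87 m and 2.50 m). Practical implementations often replace the exhaustive search with a lookup table, or weight the two candidates by frequency, since for equal phase noise the higher frequency gives lower depth noise.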
