Outdoor natural scene illumination estimation method and device

A technology for illumination estimation in natural scenes, applied in the field of image processing. It addresses three problems: the inability to estimate illumination information, the limited expressiveness of illumination representations, and the difficulty of meeting deep neural networks' demand for diverse training data. The effect is improved quality, a wider application scope, and reduced difficulty and time.

Examples

Specific Embodiment 1

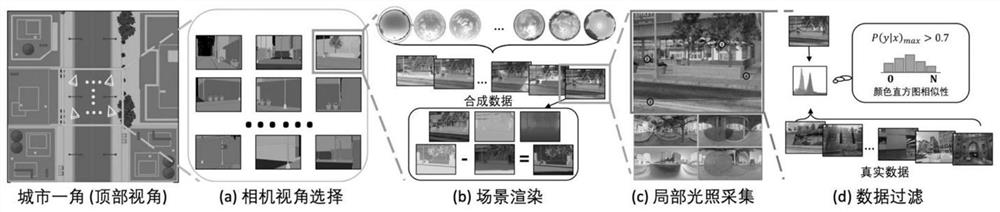

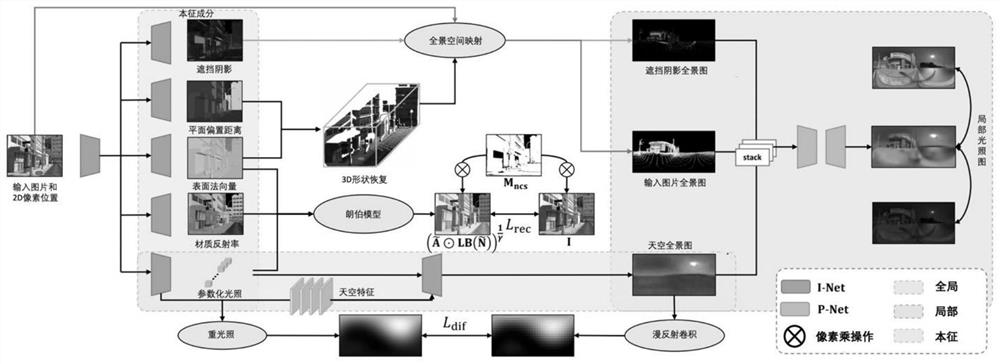

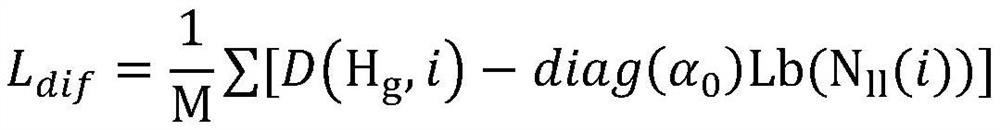

[0044] Specific Embodiment 1. This embodiment is described with reference to Figure 1 and Figure 2. It provides an outdoor natural scene illumination estimation method built on outdoor local illumination estimation (Outdoor Local Illumination Estimation). Given a single low dynamic range (LDR) image of an outdoor scene and a pixel position in that image, the method estimates the high dynamic range (High Dynamic Range, HDR) lighting information at the corresponding point in the 3D scene. To do so, this embodiment generates a large amount of data from a large-scale virtual 3D city scene and combines it with a specially designed deep neural network structure. In general, capturing complete lighting information requires special shooting equipment (such as ND filters and cameras with fisheye lenses) and shooting settings, this me...
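
An ordinary photograph cannot supply complete lighting information because an LDR capture saturates: radiance above the sensor's clipping point is lost. The Python sketch below illustrates this with a simplified camera model. The linear exposure, clipping to [0, 1] and gamma of 2.2 are assumptions, not details from this embodiment, and the radiance values are toy numbers rather than measured data.

```python
import numpy as np

def hdr_to_ldr(radiance, exposure=1.0, gamma=2.2):
    """Simulate an LDR capture: scale by exposure, clip at sensor saturation, gamma-encode."""
    scaled = np.clip(np.asarray(radiance, dtype=float) * exposure, 0.0, 1.0)
    return scaled ** (1.0 / gamma)

def ldr_to_linear(ldr, gamma=2.2):
    """Undo the gamma curve; values that were clipped stay at 1.0."""
    return np.clip(np.asarray(ldr, dtype=float), 0.0, 1.0) ** gamma

# Toy linear radiance values (assumed, not measured): shaded wall, sky, sun disk.
radiance = np.array([0.05, 0.8, 5000.0])
recovered = ldr_to_linear(hdr_to_ldr(radiance))
# The wall and sky survive the round trip; the sun collapses to 1.0,
# so the true intensity of the dominant light source is lost.
print(recovered)
```

Lowering the exposure brings the sun below saturation, which is roughly what an ND filter does physically, but it underexposes the rest of the scene. This trade-off is why full HDR capture needs dedicated equipment and shooting settings.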

Specific Embodiment 2

[0068] Specific Embodiment 2. This embodiment is described with reference to Figure 1 and Figure 2. It is an estimation device for the outdoor natural scene illumination estimation method described in Specific Embodiment 1. The device includes a synthetic data acquisition module and a neural network module. The neural network module includes an intrinsic image decomposition module (intrinsic image decomposition network, I-Net), an image-space-to-panoramic-space mapping module, and a panoramic illumination information completion module (panoramic illumination completion network, P-Net).
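
The role of the image-space-to-panoramic-space mapping module can be illustrated with a minimal sketch. The embodiment does not specify how the mapping is computed, so the version below makes several assumptions. It takes a pinhole camera with known intrinsics (fx, fy, cx, cy) and a per-pixel depth map, back-projects every pixel to 3D, and re-projects the visible points onto an equirectangular panorama centered at the scene point under the query pixel. Only directions covered by the input image receive values, so the result is a partial panorama plus a coverage mask. Filling the remaining texels is the kind of task the panoramic illumination completion network (P-Net) is described as handling. The function name `image_to_panorama`, its parameters and the axis conventions are hypothetical.

```python
import numpy as np

def image_to_panorama(image, depth, fx, fy, cx, cy, query_uv, pano_hw=(64, 128)):
    """Hypothetical sketch: warp visible pixels into an equirectangular panorama at a query point.

    image: (H, W, 3) linear RGB; depth: (H, W) positive depth along the camera z-axis.
    query_uv: (u, v) = (column, row) of the query pixel.
    Returns (panorama, mask); mask marks texels that received a sample.
    """
    h, w = depth.shape
    ph, pw = pano_hw
    # Back-project every pixel with a pinhole model (camera frame: x right, y down, z forward).
    v, u = np.mgrid[0:h, 0:w]
    pts = np.stack([(u - cx) / fx * depth, (v - cy) / fy * depth, depth], axis=-1).reshape(-1, 3)
    colors = image.reshape(-1, 3)
    # The panorama is centered at the 3D point seen through the query pixel.
    qu, qv = query_uv
    origin = pts[qv * w + qu]
    vec = pts - origin
    dist = np.linalg.norm(vec, axis=1)
    keep = dist > 1e-6  # drop the query point itself
    vec, dist, colors = vec[keep], dist[keep], colors[keep]
    d = vec / dist[:, None]
    # Direction -> equirectangular texel (longitude 0 = camera forward, latitude positive = up).
    lon = np.arctan2(d[:, 0], d[:, 2])
    lat = np.arcsin(np.clip(-d[:, 1], -1.0, 1.0))
    col = np.clip(((lon + np.pi) / (2 * np.pi) * pw).astype(int), 0, pw - 1)
    row = np.clip(((np.pi / 2 - lat) / np.pi * ph).astype(int), 0, ph - 1)
    # Simple z-buffer: sort samples by distance and keep the first (nearest) hit per texel.
    flat = row * pw + col
    order = np.argsort(dist, kind="stable")
    _, first = np.unique(flat[order], return_index=True)
    winners = order[first]
    pano = np.zeros((ph * pw, 3), dtype=image.dtype)
    mask = np.zeros(ph * pw, dtype=bool)
    pano[flat[winners]] = colors[winners]
    mask[flat[winners]] = True
    return pano.reshape(ph, pw, 3), mask.reshape(ph, pw)

# Usage on random synthetic inputs.
rng = np.random.default_rng(0)
image = rng.random((32, 32, 3))
depth = 5.0 + rng.random((32, 32))
pano, mask = image_to_panorama(image, depth, 32.0, 32.0, 16.0, 16.0, query_uv=(16, 16))
print(pano.shape, mask.mean())
```

Keeping the nearest sample per texel means that surfaces farther from the query point, which would be occluded from it, do not overwrite nearer ones. `np.unique(..., return_index=True)` is used instead of relying on repeated fancy-index assignment, whose write order NumPy does not guarantee.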

[0069] The synthetic data acquisition module selects different camera shooting angles for scene acquisition and obtains the ground truth of the intrinsic attributes by extracting the render buffers during rendering. At the same time, through local illumination collection and data filtering, it obtains... Compared with the collected real scene images, the rendered i...
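
The intrinsic-attribute ground truth extracted from the render buffers is the kind of supervision an intrinsic decomposition network such as I-Net would need. The sketch below assumes the common Lambertian intrinsic model I = A · S, where the image equals albedo times shading per pixel and channel. Under that model it derives a shading target from a rendered image and its albedo buffer. The source does not state the filtering criterion, so `keep_sample` and its `min_valid_fraction` threshold are hypothetical placeholders for a data filtering step.

```python
import numpy as np

def shading_from_buffers(rendered, albedo, eps=1e-4):
    """Derive a shading target S from a rendered image I and its albedo buffer A.

    Assumes the Lambertian intrinsic model I = A * S. Pixels whose albedo is
    (near) zero carry no shading information and are marked invalid.
    """
    rendered = np.asarray(rendered, dtype=float)
    albedo = np.asarray(albedo, dtype=float)
    valid = albedo > eps
    # np.maximum avoids division by zero; invalid pixels are zeroed afterwards.
    shading = np.where(valid, rendered / np.maximum(albedo, eps), 0.0)
    return shading, valid

def keep_sample(valid, min_valid_fraction=0.5):
    """Hypothetical filter: keep a rendered view only if enough pixels are usable."""
    return bool(np.mean(valid) >= min_valid_fraction)

# Usage with tiny synthetic buffers.
albedo = np.array([[0.5, 0.0], [0.25, 1.0]])
true_shading = np.array([[2.0, 3.0], [4.0, 0.5]])
rendered = albedo * true_shading
shading, valid = shading_from_buffers(rendered, albedo)
print(shading, valid, keep_sample(valid))
```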
