Storage method and device for hybrid cache

A hybrid cache and data block technique applied in the storage field. It addresses the inability of existing schemes to balance random access and throughput, and it reduces table entry operations, improves CPU utilization, and improves command efficiency.

Examples

Embodiment 1

[0029] This embodiment of the present disclosure discloses a hybrid cache storage method comprising the following steps:

[0030] The data is divided into data blocks of a preset size.

[0031] The data blocks are associated in the form of a data linked list and written into the queue cache. After the device receives a command interrupt, it starts reading and parsing the command cache.

[0032] After the storage device acquires the command, it reconstructs the data linked list based on the command length.
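The block division and linked-list steps in [0030] and [0031] can be sketched roughly as follows. This is a minimal software model, not the disclosed implementation: the 4096-byte `BLOCK_SIZE`, the `BlockNode` type, and the helper names `build_block_list` and `iter_blocks` are all assumptions introduced for illustration, and a plain `deque` stands in for the queue cache.

```python
from collections import deque
from dataclasses import dataclass
from typing import Iterator, Optional

BLOCK_SIZE = 4096  # assumed preset block size in bytes (not given in the source)


@dataclass
class BlockNode:
    """One entry of the data linked list (hypothetical layout)."""
    address: int
    length: int
    next: Optional["BlockNode"] = None


def build_block_list(start_address: int, total_length: int,
                     block_size: int = BLOCK_SIZE) -> Optional[BlockNode]:
    """Divide a transfer into blocks of a preset size and link them in order."""
    head: Optional[BlockNode] = None
    tail: Optional[BlockNode] = None
    offset = 0
    while offset < total_length:
        # The final block may be shorter than block_size.
        length = min(block_size, total_length - offset)
        node = BlockNode(start_address + offset, length)
        if tail is None:
            head = node
        else:
            tail.next = node
        tail = node
        offset += length
    return head


def iter_blocks(head: Optional[BlockNode]) -> Iterator[BlockNode]:
    """Walk the linked list from head to tail."""
    node = head
    while node is not None:
        yield node
        node = node.next


# A deque stands in for the queue cache; each entry is the head of one list.
queue_cache: deque = deque()
head = build_block_list(0x1000, 10000)
queue_cache.append(head)
```

In this model a 10000-byte transfer becomes two full blocks plus one short tail block, and the device side would pop list heads from `queue_cache` once it is notified of a new command.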

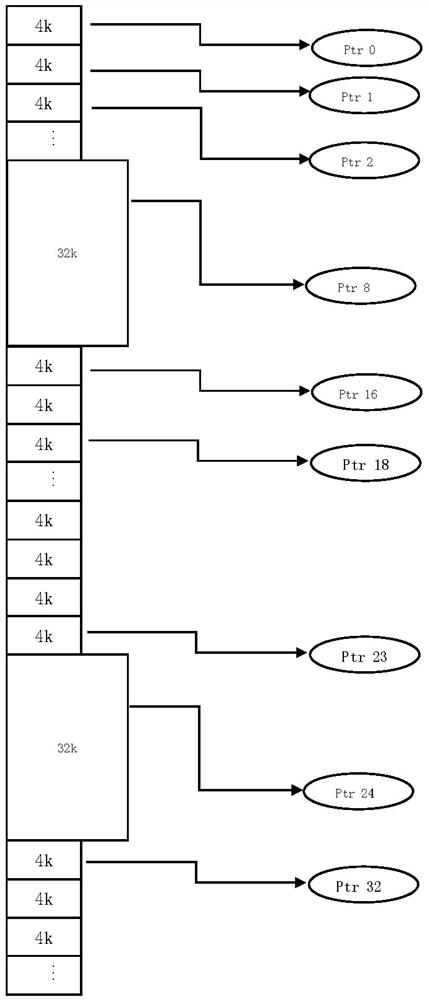

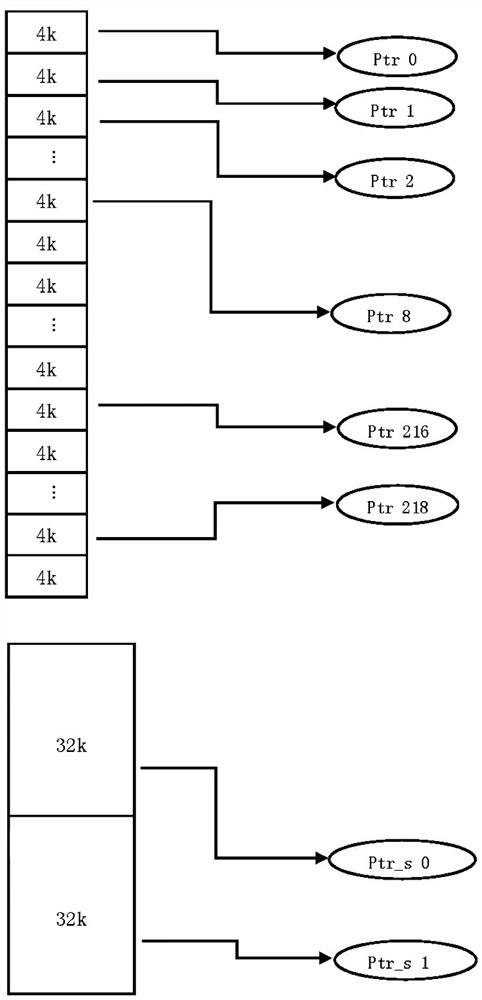

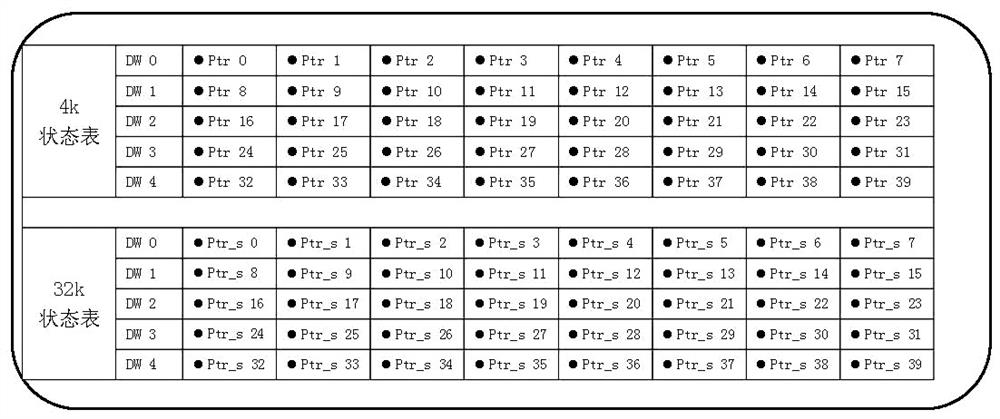

[0033] The command reconstruction proceeds as follows. When the data addresses are contiguous, the logic circuit merges the small data blocks into the largest data block that can be supported and uses the largest data block mapping, which can double the command transmission efficiency. When the data addresses are discrete, or the command is a discrete command, a cache block mapping with the same size as the operating system data block is used.
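The reconstruction rule in [0033] can be illustrated with a short sketch. The source assigns the merge to a logic circuit; the code below only models the decision in software. The sizes `OS_BLOCK_SIZE` and `MAX_BLOCK_SIZE` and the function name `rebuild_blocks` are assumptions, since the source does not state concrete values or interfaces.

```python
from typing import List, Tuple

OS_BLOCK_SIZE = 4096                # assumed operating system data block size
MAX_BLOCK_SIZE = 4 * OS_BLOCK_SIZE  # assumed largest block the device supports

Block = Tuple[int, int]  # (address, length)


def rebuild_blocks(blocks: List[Block],
                   max_block_size: int = MAX_BLOCK_SIZE) -> List[Block]:
    """Merge address-contiguous blocks up to max_block_size.

    Blocks at discrete addresses are left unmerged, so they keep the
    mapping granularity they arrived with (OS-block-sized in this model).
    """
    rebuilt: List[Block] = []
    for address, length in blocks:
        if rebuilt:
            last_address, last_length = rebuilt[-1]
            contiguous = last_address + last_length == address
            if contiguous and last_length + length <= max_block_size:
                # Extend the previous block instead of adding a new entry.
                rebuilt[-1] = (last_address, last_length + length)
                continue
        rebuilt.append((address, length))
    return rebuilt


# Three contiguous blocks followed by one block at a discrete address.
example = [(0x0000, 4096), (0x1000, 4096), (0x2000, 4096), (0x8000, 4096)]
merged = rebuild_blocks(example)
```

Here the three contiguous blocks collapse into a single 12288-byte entry, while the block at `0x8000` stays as its own entry. Fewer entries per command is the mechanism behind the reduced table entry operations that the summary mentions.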

[0034] A cache class flag is set in the command r...

Embodiment 2

[0044] This embodiment of the present disclosure discloses a storage device that adopts a hybrid cache storage method. The storage device stores a computer program. When the storage device performs data storage operations, the computer program is executed by a processor and implements the hybrid cache storage method of Embodiment 1. First, the data is divided into data blocks of a preset size. The data blocks are then associated in the form of a data linked list and written into the queue cache. After the device receives a command interrupt, it starts reading and parsing the command cache.

[0045] After the storage device acquires the command, it reconstructs the data linked list based on the command length. The command reconstruction proceeds as follows: when the data addresses are contiguous, the logic circuit merges the small data blocks into the largest data block that can be supported and uses the largest data block mapping, which can double the command t...
