Multi-source information fusion robot positioning method and system for unstructured environment

A multi-source information fusion and robot positioning technology, applied in fields such as radio-wave measurement systems, instruments, and the use of re-radiation, that ensures stable operation and achieves tight coupling.

Examples

Embodiment 1

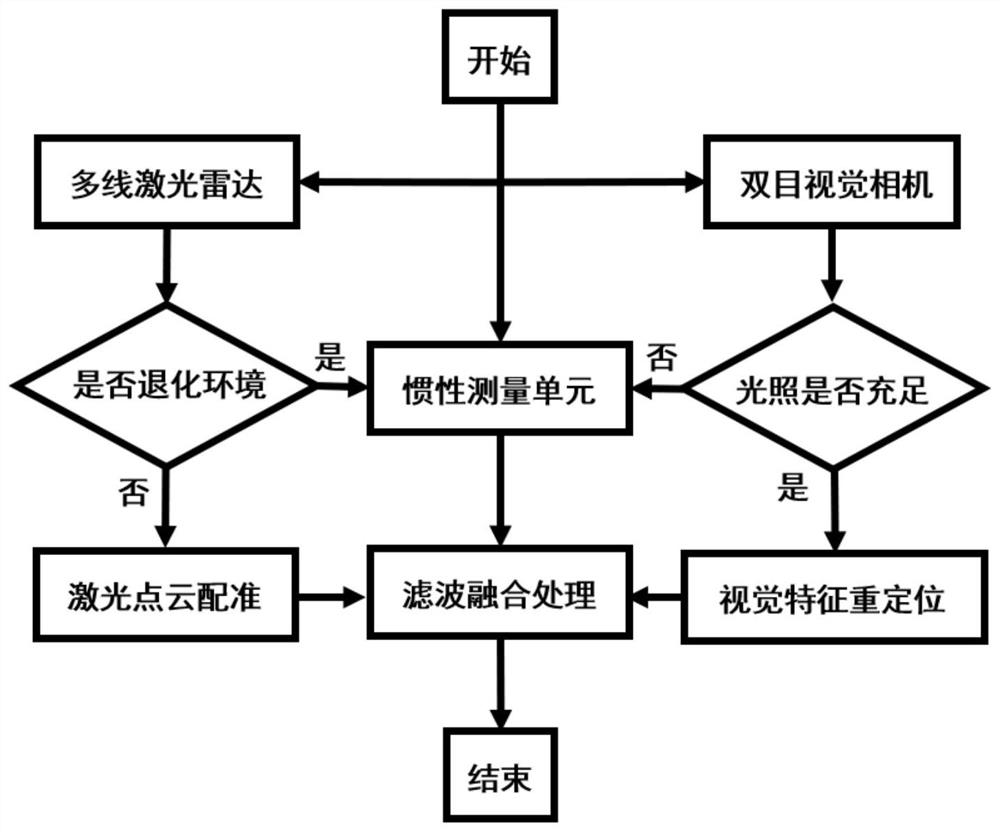

[0041] As shown in Figure 1, the present invention provides a multi-source information fusion robot positioning method for unstructured environments, including:

[0042] Register the real-time laser point cloud produced by the multi-line lidar against the pre-built point cloud map to calculate the current pose of the robot body;

[0043] Use the binocular camera to capture images of the current environment, extract ORB visual feature points from each frame to form visual keyframes, and relocalize by matching the current keyframe against the pre-built visual feature map to obtain the robot's current position;

[0044] Meanwhile, as the robot moves, integrate the acceleration measured by the inertial measurement unit (IMU) to output odometry information;

[0045] Based on the pose information obtained by the above three sensors, the real-time state of the robot is estimat...
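Step [0042] does not name a registration algorithm. The sketch below assumes plain point-to-point ICP (iterative closest point) and works in 2D for readability. A real multi-line lidar pipeline would register 3D clouds, would usually use a k-d tree for the nearest-neighbour search, and might use a variant such as NDT or point-to-plane ICP instead. The names `icp`, `best_rigid_transform` and the pose tuple `(theta, tx, ty)` are hypothetical.

```python
import math


def nearest_neighbors(src, dst):
    """For each source point, return the closest destination point (brute force)."""
    return [min(dst, key=lambda q: (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2) for p in src]


def best_rigid_transform(src, dst):
    """Closed-form least-squares 2D rotation + translation mapping src onto dst."""
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    num = den = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay, bx, by = px - sx, py - sy, qx - dx, qy - dy
        num += ax * by - ay * bx  # cross term of the centred sets
        den += ax * bx + ay * by  # dot term of the centred sets
    theta = math.atan2(num, den)
    c, s = math.cos(theta), math.sin(theta)
    # Translation maps the rotated source centroid onto the destination centroid
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def apply(pose, pts):
    """Transform points by pose = (theta, tx, ty)."""
    theta, tx, ty = pose
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in pts]


def compose(a, b):
    """Return the pose that applies b first, then a."""
    ta, xa, ya = a
    tb, xb, yb = b
    c, s = math.cos(ta), math.sin(ta)
    return (ta + tb, c * xb - s * yb + xa, s * xb + c * yb + ya)


def icp(scan, map_points, iters=30, tol=1e-9):
    """Estimate the pose that aligns a scan (robot frame) with the map."""
    pose = (0.0, 0.0, 0.0)
    for _ in range(iters):
        moved = apply(pose, scan)
        matches = nearest_neighbors(moved, map_points)
        step = best_rigid_transform(moved, matches)
        pose = compose(step, pose)
        if abs(step[0]) < tol and math.hypot(step[1], step[2]) < tol:
            break  # converged: the last correction was negligible
    return pose
```

`pose = icp(scan, map_points)` returns the transform from the scan frame into the map frame, which is the robot pose relative to the map. This holds only if the initial misalignment is small enough for most nearest-neighbour correspondences to be correct.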
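Step [0043] relocalizes by matching the current keyframe against the stored visual feature map. ORB descriptors are 256-bit binary strings compared by Hamming distance, so the matching and keyframe-selection part can be sketched on plain integers. The ratio test, the thresholds and the keyframe dictionary layout are assumptions. In practice, OpenCV's `cv2.ORB_create` and a `cv2.BFMatcher` with `cv2.NORM_HAMMING` would typically supply the descriptors and matches. The keyframe pose returned here is only a coarse estimate, to be refined afterwards (for example with a PnP solve on the matched points).

```python
def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors."""
    return bin(a ^ b).count("1")


def match_descriptors(query, train, ratio=0.8, max_dist=64):
    """Brute-force Hamming matching with a nearest/second-nearest ratio test.

    Returns (query_index, train_index, distance) triples for accepted matches.
    """
    matches = []
    for qi, qd in enumerate(query):
        dists = sorted((hamming(qd, td), ti) for ti, td in enumerate(train))
        if not dists or dists[0][0] > max_dist:
            continue  # no candidate, or best candidate too dissimilar
        best_d, best_i = dists[0]
        if len(dists) > 1 and best_d >= ratio * dists[1][0]:
            continue  # ambiguous: second-best is almost as close
        matches.append((qi, best_i, best_d))
    return matches


def relocalize(current, keyframes, min_matches=10):
    """Pick the stored keyframe sharing the most matches with the current frame.

    keyframes maps a keyframe id to (pose, descriptor list). Returns
    (keyframe_id, pose, match_count), or None if no keyframe has enough matches.
    """
    best_id, best_count = None, 0
    for kf_id, (_pose, descs) in keyframes.items():
        count = len(match_descriptors(current, descs))
        if count > best_count:
            best_id, best_count = kf_id, count
    if best_id is None or best_count < min_matches:
        return None
    return best_id, keyframes[best_id][0], best_count
```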
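Step [0044] integrates IMU acceleration into odometry. The sketch below is a minimal planar dead-reckoning example. It assumes that gravity has already been removed from the accelerometer reading. It also assumes that a gyroscope yaw rate is available to rotate body-frame acceleration into the world frame, although the source mentions only acceleration. `OdomState` and `integrate_imu` are hypothetical names.

```python
import math
from dataclasses import dataclass


@dataclass
class OdomState:
    x: float = 0.0
    y: float = 0.0
    yaw: float = 0.0
    vx: float = 0.0
    vy: float = 0.0


def integrate_imu(state, ax_body, ay_body, yaw_rate, dt):
    """Advance the planar odometry state by one IMU sample of length dt."""
    # Update heading first, then rotate body-frame acceleration into the
    # world frame using the end-of-interval yaw (a simple first-order choice)
    yaw = state.yaw + yaw_rate * dt
    c, s = math.cos(yaw), math.sin(yaw)
    ax = c * ax_body - s * ay_body
    ay = s * ax_body + c * ay_body
    # Constant-acceleration update of position, then velocity
    x = state.x + state.vx * dt + 0.5 * ax * dt * dt
    y = state.y + state.vy * dt + 0.5 * ay * dt * dt
    return OdomState(x, y, yaw, state.vx + ax * dt, state.vy + ay * dt)
```

Position comes from a double integral, so any constant accelerometer bias produces a position error that grows quadratically with time. For this reason the odometry is fused with the lidar and visual fixes rather than used on its own.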

Embodiment 2

[0060] This embodiment provides a multi-source information fusion robot positioning system for an unstructured environment, including:

[0061] The laser point cloud processing module is configured to: obtain the real-time laser point cloud emitted by the robot's lidar, register it against the preset point cloud map, and calculate the robot's current pose;

[0062] The image information processing module is configured to: obtain images of the robot's current environment, extract ORB visual feature points from each frame to form visual keyframes, and relocalize by matching the current keyframe against the preset visual feature map to obtain the robot's current position;

[0063] The acceleration processing module is configured to: obtain the robot's acceleration and integrate it to obtain odometry information;

[0064] The positioning information prediction module is configured to: filter the ob...
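The positioning information prediction module filters the observations, but the source text breaks off before naming the filter type. The sketch below assumes a linear Kalman filter per position axis. IMU acceleration drives the prediction step, and the lidar and visual position fixes enter as measurements with different noise variances. The class name, the variances and the decoupled per-axis model are all assumptions. A full implementation would more likely use an extended or error-state filter over the whole 6-DoF pose.

```python
class AxisKalman:
    """1-D constant-velocity Kalman filter for one position axis.

    State is [position, velocity]; acceleration is treated as a control input.
    """

    def __init__(self, accel_var=0.1):
        self.x = [0.0, 0.0]
        self.P = [[1.0, 0.0], [0.0, 1.0]]
        self.q = accel_var  # hypothetical acceleration noise variance

    def predict(self, accel, dt):
        p, v = self.x
        self.x = [p + v * dt + 0.5 * accel * dt * dt, v + accel * dt]
        P = self.P
        # P <- F P F^T with F = [[1, dt], [0, 1]]
        p00 = P[0][0] + dt * (P[1][0] + P[0][1]) + dt * dt * P[1][1]
        p01 = P[0][1] + dt * P[1][1]
        p10 = P[1][0] + dt * P[1][1]
        p11 = P[1][1]
        # + Q = q G G^T with G = [dt^2 / 2, dt]
        g0, g1 = 0.5 * dt * dt, dt
        self.P = [[p00 + g0 * g0 * self.q, p01 + g0 * g1 * self.q],
                  [p10 + g1 * g0 * self.q, p11 + g1 * g1 * self.q]]

    def update(self, z, meas_var):
        """Fuse one position measurement (H = [1, 0]) with variance meas_var."""
        P = self.P
        s = P[0][0] + meas_var
        k0, k1 = P[0][0] / s, P[1][0] / s
        innovation = z - self.x[0]
        self.x = [self.x[0] + k0 * innovation, self.x[1] + k1 * innovation]
        # P <- (I - K H) P
        self.P = [[(1 - k0) * P[0][0], (1 - k0) * P[0][1]],
                  [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]]]
```

For a planar robot, one instance would run per axis. `predict` is called at IMU rate, and `update` is called whenever a lidar registration result (smaller variance) or a visual relocalization result (larger variance) arrives.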

Embodiment 3

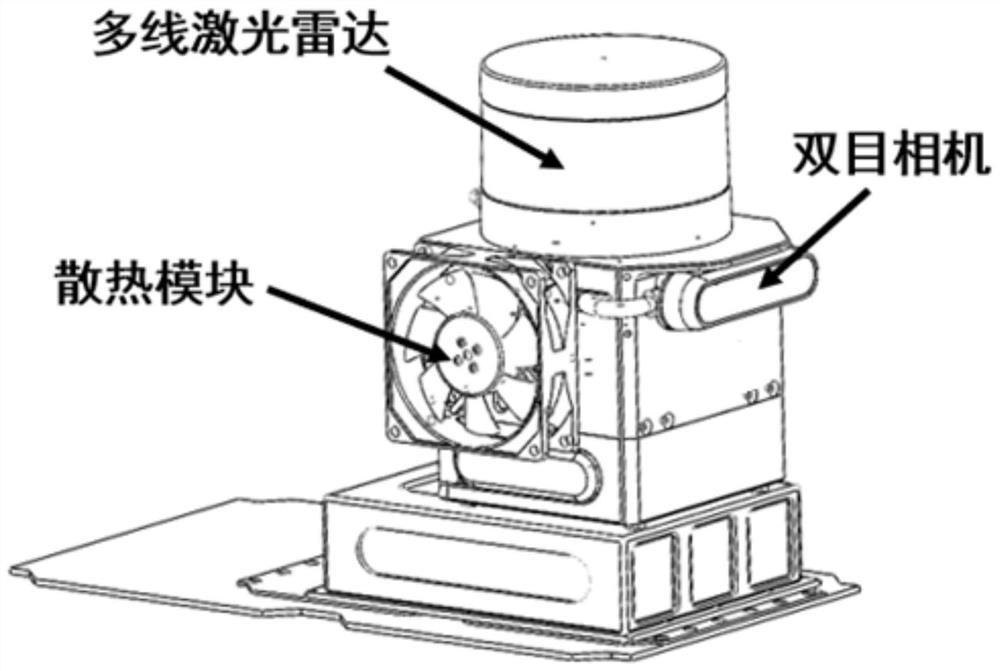

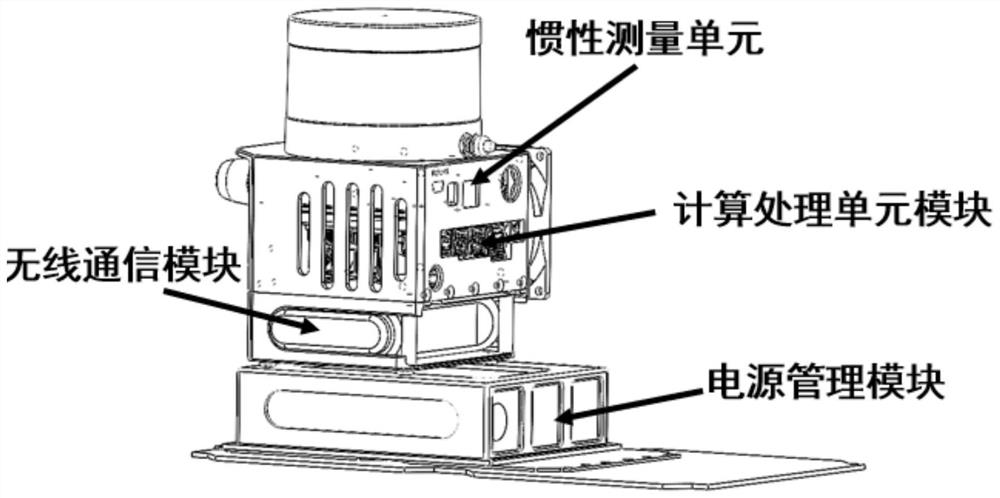

[0067] This embodiment provides a control system for a robot positioned by multi-source information fusion in an unstructured environment, including:

[0068] The multi-line laser radar positioning module is configured to: emit multi-line laser beams to scan the physical environment around the robot, and perform registration-based positioning between the real-time laser point cloud and the existing environment point cloud map. That is, the laser generates real-time point cloud information of the robot's surroundings, and the current robot pose is calculated with a point cloud registration algorithm;

[0069] The binocular vision positioning module is configured to: obtain real-time image data around the robot and relocalize against the existing environmental visual feature point map; obtain real-time image information in front of the robot and extract visual ORB feature points from the image pixel information; according to the visua...
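Module [0068] turns the multi-line laser returns into a point cloud before registration. The sketch below shows that conversion. It assumes each beam has a fixed elevation angle and each column of the scan has a known azimuth. The function name, range limits and data layout are hypothetical, since the source does not specify the sensor.

```python
import math


def scan_to_points(ranges, elevations_deg, azimuths_deg, r_min=0.3, r_max=100.0):
    """Convert a multi-beam range image to Cartesian points in the sensor frame.

    ranges[i][j] is the range (metres) of beam i at azimuth j.
    """
    points = []
    for elev_deg, row in zip(elevations_deg, ranges):
        e = math.radians(elev_deg)
        ce, se = math.cos(e), math.sin(e)
        for az_deg, r in zip(azimuths_deg, row):
            if not (r_min <= r <= r_max):
                continue  # drop missing or out-of-range returns
            a = math.radians(az_deg)
            # Spherical (range, azimuth, elevation) to Cartesian (x, y, z)
            points.append((r * ce * math.cos(a), r * ce * math.sin(a), r * se))
    return points
```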
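In the binocular module of [0069], the second camera is what gives ORB feature points metric depth. The sketch below assumes a rectified stereo pair. A feature matched at columns `u_left` and `u_right` on the same row then has disparity d = u_left - u_right and depth Z = f·B/d. The intrinsics `fx`, `fy`, `cx`, `cy` and the `baseline` are hypothetical parameters.

```python
def triangulate(u_left, v, u_right, fx, fy, cx, cy, baseline):
    """Back-project a rectified stereo match to a 3D point in the left camera frame.

    Returns (x, y, z) in metres, or None for non-positive disparity.
    """
    d = u_left - u_right
    if d <= 0:
        return None  # point at infinity or a bad match
    z = fx * baseline / d
    x = (u_left - cx) * z / fx
    y = (v - cy) * z / fy
    return (x, y, z)
```

For example, with fx = 500 px, a 0.12 m baseline and a 30 px disparity, the feature lies 2 m in front of the left camera.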
