Image super-resolution reconstruction method based on convolutional neural network

The invention relates to super-resolution reconstruction and convolutional neural network technology, applied in the field of image super-resolution reconstruction based on convolutional neural networks. It aims to improve the quality and accuracy of the reconstructed images.

Examples

Embodiment 1

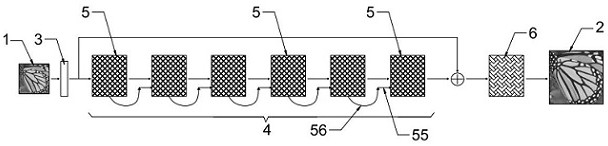

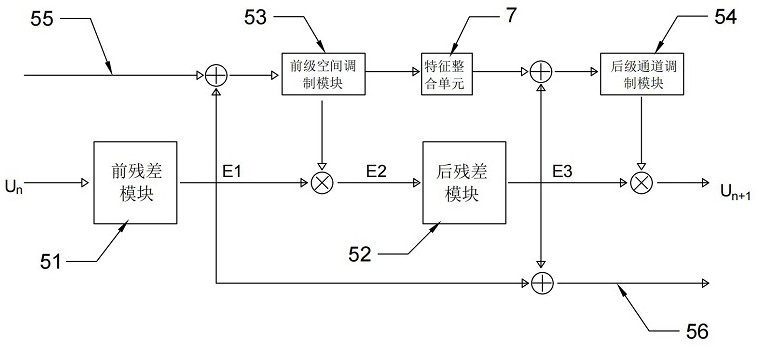

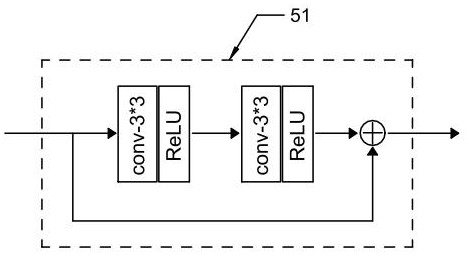

[0041] Using the Python language and a deep learning framework, build the image super-resolution reconstruction convolutional neural network shown in Figure 1. The preliminary convolution layer 3 is an ordinary convolution layer with a 3×3 convolution kernel. The deep feature mapping unit 4 includes six integrated feature extraction modules 5, whose internal structure is shown in Figure 2. The pre-residual module 51 and the post-residual module 52 share the internal structure shown in Figure 3. The internal structures of the front-stage spatial modulation module 53, the rear-stage channel modulation module 54 and the feature integration unit 7 are shown in Figure 4. The image reconstruction unit 6 performs upsampling and super-resolution reconstruction on the feature map and outputs the reconstructed image 2. The image reconstruction unit 6 can d...
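As a rough illustration of what an ordinary 3×3 convolution such as the preliminary convolution layer 3 computes, the sketch below applies one 3×3 kernel to a single-channel image in plain Python. The function name `conv3x3`, the zero padding, the stride of 1 and the single-channel input are assumptions made for this example; the source does not specify them, and a real implementation would use the deep learning framework's own convolution layer with learned multi-channel kernels.

```python
from typing import List

Image = List[List[float]]
Kernel = List[List[float]]


def conv3x3(image: Image, kernel: Kernel) -> Image:
    """Apply one 3x3 kernel to a single-channel image.

    Assumptions (not stated in the source): zero padding of one pixel,
    stride 1, and the cross-correlation convention that deep learning
    frameworks use for their convolution layers.
    """
    height, width = len(image), len(image[0])
    output = [[0.0] * width for _ in range(height)]
    for row in range(height):
        for col in range(width):
            total = 0.0
            for kr in range(3):
                for kc in range(3):
                    r, c = row + kr - 1, col + kc - 1
                    # Positions outside the image count as zero (padding).
                    if 0 <= r < height and 0 <= c < width:
                        total += image[r][c] * kernel[kr][kc]
            output[row][col] = total
    return output


# Hypothetical 3x3 input and two hand-written kernels for illustration.
sample = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
identity_kernel = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
box_kernel = [[1.0, 1.0, 1.0] for _ in range(3)]

same = conv3x3(sample, identity_kernel)   # leaves the image unchanged
summed = conv3x3(sample, box_kernel)      # sums each 3x3 neighborhood
```

With one pixel of zero padding the output keeps the input's height and width, which is one common way to let later modules operate on feature maps of unchanged spatial size; whether the patented network pads this way is not stated in the text.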

Embodiment 2

[0052] To illustrate how the neighbor incoming connection 55 and the neighbor outgoing connection 56 in the integrated feature extraction module 5 improve image reconstruction, Embodiment 2 keeps the overall network architecture, the pre-residual module 51, the post-residual module 52, the front-stage spatial modulation module 53, the rear-stage channel modulation module 54 and the image reconstruction unit 6 the same as in Embodiment 1, and removes only the neighbor incoming connection 55 and the neighbor outgoing connection 56. The structure of the integrated feature extraction module 5 in Embodiment 2 is shown in Figure 6. Using the same dataset and model training process, the test results are shown in the following table:

[0053]

[0054] The results show that, with the neighbor incoming connection 55 and the neighbor outgoing connecti...

Embodiment 3

[0056] Similarly to Embodiment 2, to illustrate the effect of the feature integration unit 7 in the integrated feature extraction module 5, Embodiment 3 removes only the feature integration unit 7 from Embodiment 2; all other parts of the network are identical to those in Embodiment 2. The structure of the integrated feature extraction module 5 in Embodiment 3 is shown in Figure 7. Using the same dataset and training process, the test results are shown in the following table:

[0057]

[0058] The above test results demonstrate that the feature integration unit 7 is effective in improving the quality of the network's super-resolution reconstructed images.
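The relationship between the three embodiments can be summarized as an ablation configuration. The sketch below is only a bookkeeping aid: the class `ModuleConfig`, its two flags and the helper `enabled_components` are hypothetical names introduced here, and the returned set carries no information about how the components are wired together, which the text leaves to the figures.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class ModuleConfig:
    """Hypothetical switches for one integrated feature extraction module 5."""
    use_neighbor_connections: bool = True   # connections 55 and 56
    use_feature_integration: bool = True    # feature integration unit 7


def enabled_components(config: ModuleConfig) -> FrozenSet[str]:
    """List which named components are present (unordered, no wiring implied)."""
    components = {
        "pre-residual module 51",
        "post-residual module 52",
        "front-stage spatial modulation module 53",
        "rear-stage channel modulation module 54",
    }
    if config.use_neighbor_connections:
        components |= {
            "neighbor incoming connection 55",
            "neighbor outgoing connection 56",
        }
    if config.use_feature_integration:
        components.add("feature integration unit 7")
    return frozenset(components)


# Embodiment 1: full module. Embodiment 2: neighbor connections removed.
# Embodiment 3: Embodiment 2 with the feature integration unit also removed.
EMBODIMENT_1 = ModuleConfig()
EMBODIMENT_2 = ModuleConfig(use_neighbor_connections=False)
EMBODIMENT_3 = ModuleConfig(use_neighbor_connections=False,
                            use_feature_integration=False)

removed_in_2 = enabled_components(EMBODIMENT_1) - enabled_components(EMBODIMENT_2)
removed_in_3 = enabled_components(EMBODIMENT_2) - enabled_components(EMBODIMENT_3)
```

Each step removes exactly one design element while the dataset and training process stay fixed, so any change in the test results can be attributed to that element.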
