Artificial head robot with facial expressions and multiple perception functions

A facial expression and perception technology applied in the field of humanoid avatar robots. It addresses two problems of existing robots: they can show only a single expression, and they lack multi-sensory functions. The resulting design offers high cost-performance, small size and large torque.

Examples

Specific Embodiment 1

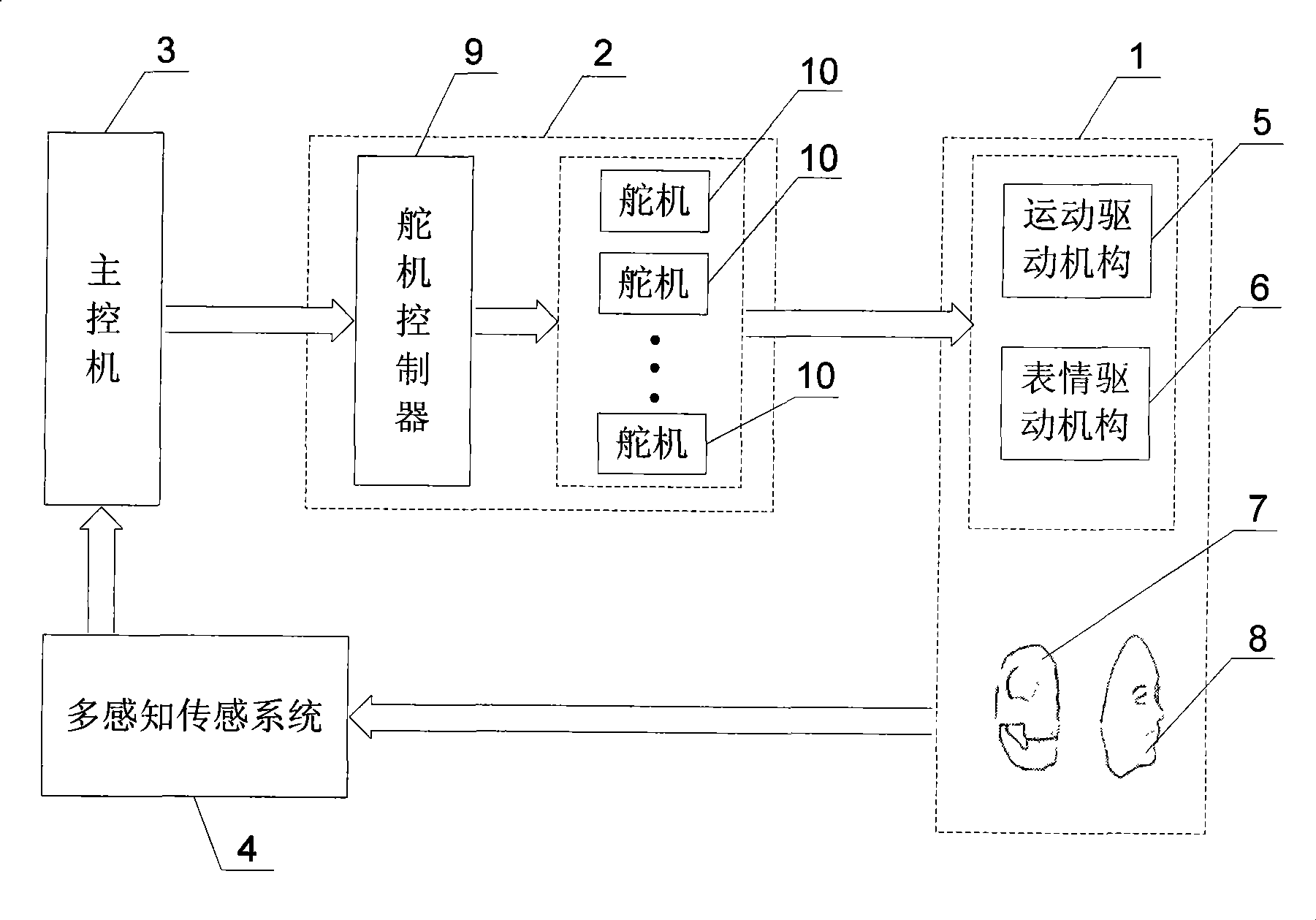

[0011] Specific Embodiment 1. This embodiment is described with reference to Figure 1 and Figure 4. It includes a robot body 1, a motion control system 2, a main control computer 3 and a multi-sensory sensing system 4.

[0012] The robot body 1 includes a motion drive mechanism 5, an expression drive mechanism 6, a facial shell 7 and an elastic facial skin 8.

[0013] The motion control system 2 includes a servo controller 9 and a plurality of servos 10.

[0014] The information sensed by the multi-sensory sensing system 4 is sent to the main control computer 3 for processing. The main control computer 3 then outputs the corresponding command information to the servo controller 9, which outputs PWM pulses that drive the corresponding servo 10 to rotate to the designated position. The servo 10 drives the motion drive mechanism 5 to move the lip skin together to realize the function of the robo...
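The command path in [0014] (main control computer 3 → servo controller 9 → PWM pulse → servo 10 at a designated position) can be illustrated with a minimal sketch of the angle-to-pulse conversion a servo controller typically performs. The 50 Hz frame and the 0.5 to 2.5 ms pulse range for 0 to 180 degrees are common hobby-servo values assumed here, not figures from the patent; `ServoCommand`, `angle_to_pulse_us`, `duty_cycle` and `to_pwm` are hypothetical names.

```python
# Minimal sketch of a servo controller's angle -> PWM conversion.
# ASSUMPTIONS (not from the patent): 50 Hz frame, 0.5-2.5 ms pulse for 0-180 degrees.
from dataclasses import dataclass

PWM_PERIOD_US = 20_000   # assumed 50 Hz servo frame (20 ms)
MIN_PULSE_US = 500       # assumed pulse width at 0 degrees
MAX_PULSE_US = 2_500     # assumed pulse width at 180 degrees
MAX_ANGLE_DEG = 180.0


def angle_to_pulse_us(angle_deg: float) -> int:
    """Map an angle in [0, 180] degrees linearly onto [MIN_PULSE_US, MAX_PULSE_US]."""
    angle = min(max(angle_deg, 0.0), MAX_ANGLE_DEG)  # clamp out-of-range commands
    span = MAX_PULSE_US - MIN_PULSE_US
    return round(MIN_PULSE_US + span * angle / MAX_ANGLE_DEG)


def duty_cycle(pulse_us: int) -> float:
    """Fraction of the PWM period during which the signal is high."""
    return pulse_us / PWM_PERIOD_US


@dataclass
class ServoCommand:
    channel: int      # hypothetical servo channel index on the servo controller
    angle_deg: float  # designated position requested by the main control computer


def to_pwm(commands: list[ServoCommand]) -> dict[int, int]:
    """Servo-controller side: turn commands into per-channel pulse widths (microseconds)."""
    return {c.channel: angle_to_pulse_us(c.angle_deg) for c in commands}


if __name__ == "__main__":
    print(to_pwm([ServoCommand(0, 90.0), ServoCommand(1, 200.0)]))  # {0: 1500, 1: 2500}
```

Clamping inside the controller means a malformed command from the host can at worst drive a servo to its end stop, never past the assumed pulse range.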

Specific Embodiment 2

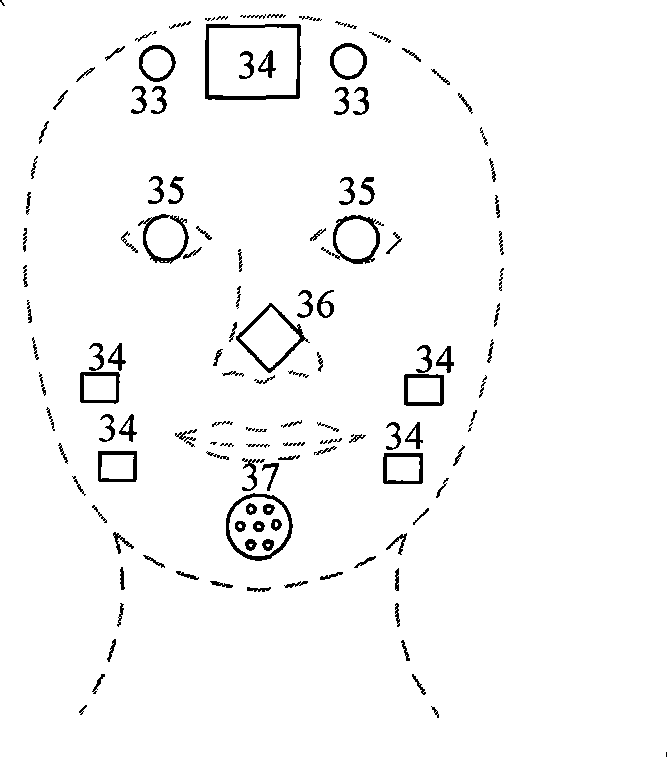

[0019] Specific Embodiment 2. This embodiment is described with reference to Figure 2. It differs from Embodiment 1 in that the multi-sensory sensing system 4 includes two temperature sensors 33, five tactile sensors 34, two visual sensors 35, an olfactory sensor 36 and an auditory sensor 37. The two temperature sensors 33 are arranged on the left and right sides of the forehead; one tactile sensor 34 is arranged in the middle of the forehead, and two tactile sensors 34 are arranged on the left and right cheeks; the two visual sensors 35 are arranged in the left and right eye sockets; the olfactory sensor 36 is arranged at the nose; and the auditory sensor 37 is arranged in the middle of the chin. Everything else is the same as in Embodiment 1.
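As a rough illustration, the sensor layout in [0019] can be encoded as plain data that the main control computer could query when routing readings. Only placements the text states explicitly are listed: the text gives five tactile sensors but locates three, so the other two are left out rather than guessed. The `Sensor` type, its fields and the helper functions are hypothetical.

```python
# Sketch of the [0019] sensor layout as plain data.
# Field names, position labels and helpers are illustrative, not from the patent.
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Sensor:
    ref: int       # reference numeral used in the patent
    kind: str      # sensing modality
    position: str  # location on the head


# Only the placements stated explicitly in [0019].
LAYOUT = [
    Sensor(33, "temperature", "forehead, left side"),
    Sensor(33, "temperature", "forehead, right side"),
    Sensor(34, "tactile", "forehead, middle"),
    Sensor(34, "tactile", "left cheek"),
    Sensor(34, "tactile", "right cheek"),
    Sensor(35, "visual", "left eye socket"),
    Sensor(35, "visual", "right eye socket"),
    Sensor(36, "olfactory", "nose"),
    Sensor(37, "auditory", "middle of chin"),
]


def count_by_kind(layout: list[Sensor]) -> Counter:
    """Number of placed sensors per modality."""
    return Counter(s.kind for s in layout)


def sensors_at(layout: list[Sensor], keyword: str) -> list[Sensor]:
    """All sensors whose position label contains the keyword (e.g. 'forehead')."""
    return [s for s in layout if keyword in s.position]


if __name__ == "__main__":
    print(count_by_kind(LAYOUT))
    print([s.kind for s in sensors_at(LAYOUT, "forehead")])
```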

[0020] Figure 2 shows the distribution of the sensors in the multi-sensory sensing system. In this embodiment, the temperature sensor...

Specific Embodiment 3

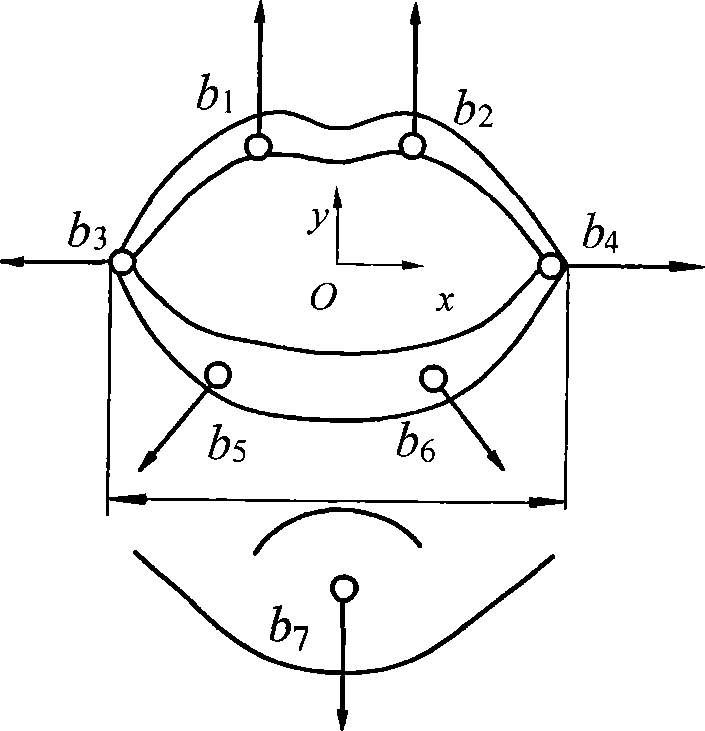

[0022] Specific Embodiment 3. This embodiment is described with reference to Figure 4 and Figure 5. It differs from Embodiment 1 in that the motion drive mechanism 5 includes an eyelid drive mechanism, an eyeball drive mechanism and a jaw drive mechanism. The eyelid drive mechanism moves the eyelid 17 up and down; the eyeball drive mechanism rotates the eyeball 24 left and right and up and down; and the jaw drive mechanism moves the lower jaw up and down, which, in cooperation with the mouth shape drive mechanism, lets the robot imitate mouth shapes. Everything else is the same as in Embodiment 1.
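Because the eyeball drive mechanism rotates the eyeball left-right and up-down, pointing the eyes at a target reduces to computing a pan angle and a tilt angle. The sketch below assumes a head-fixed frame (x forward, y to the robot's left, z up) and hypothetical mechanical limits of ±30° pan and ±20° tilt; neither the frame nor the limits come from the patent, and `gaze_angles` is a hypothetical name.

```python
# Sketch: pan/tilt angles for a 2-DOF eyeball looking at a target point.
# ASSUMPTIONS (not from the patent): head frame x forward, y left, z up;
# mechanical limits of +/-30 deg pan and +/-20 deg tilt.
import math

PAN_LIMIT_DEG = 30.0   # hypothetical left-right limit
TILT_LIMIT_DEG = 20.0  # hypothetical up-down limit


def clamp(value: float, lo: float, hi: float) -> float:
    return max(lo, min(hi, value))


def gaze_angles(x: float, y: float, z: float) -> tuple[float, float]:
    """Return (pan, tilt) in degrees that point the eyeball at (x, y, z)."""
    if x <= 0:
        raise ValueError("target must be in front of the head (x > 0)")
    pan = math.degrees(math.atan2(y, x))                   # left-right rotation
    tilt = math.degrees(math.atan2(z, math.hypot(x, y)))   # up-down rotation
    return (clamp(pan, -PAN_LIMIT_DEG, PAN_LIMIT_DEG),
            clamp(tilt, -TILT_LIMIT_DEG, TILT_LIMIT_DEG))


if __name__ == "__main__":
    print(gaze_angles(1.0, 0.0, 0.0))  # straight ahead
    print(gaze_angles(1.0, 1.0, 0.0))  # 45 deg to the left, clamped to the pan limit
```

Computing tilt against the horizontal distance `hypot(x, y)` keeps the two rotations independent, which matches a mechanism with separate left-right and up-down axes.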

[0023] The motion drive mechanism 5 has four degrees of freedom: two for the eyeball 24, one for the eyelid 17 and one for the jaw 22.
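The four degrees of freedom in [0023] map naturally onto one servo each. The sketch below assumes a one-servo-per-DOF arrangement; the channel numbers and angle ranges are hypothetical placeholders, not values from the patent. It validates a requested pose against the available DOFs and clamps each target to its range before it would go to the servo controller.

```python
# Sketch: the motion drive mechanism's 4 DOF as a table of servo-driven joints.
# ASSUMPTIONS: one servo per DOF; channels and angle ranges are hypothetical.
from dataclasses import dataclass


@dataclass(frozen=True)
class Joint:
    organ: str      # head organ and its reference numeral
    axis: str       # direction of motion
    channel: int    # hypothetical servo channel
    min_deg: float  # hypothetical lower limit
    max_deg: float  # hypothetical upper limit


JOINTS = {
    "eyeball_pan": Joint("eyeball 24", "left-right", 0, 60.0, 120.0),
    "eyeball_tilt": Joint("eyeball 24", "up-down", 1, 70.0, 110.0),
    "eyelid": Joint("eyelid 17", "up-down", 2, 45.0, 135.0),
    "jaw": Joint("jaw 22", "up-down", 3, 80.0, 120.0),
}


def dof_per_organ(joints: dict[str, Joint]) -> dict[str, int]:
    """Count degrees of freedom per organ."""
    counts: dict[str, int] = {}
    for joint in joints.values():
        counts[joint.organ] = counts.get(joint.organ, 0) + 1
    return counts


def build_frame(targets: dict[str, float]) -> dict[int, float]:
    """Turn named joint targets (degrees) into clamped per-channel angles."""
    frame: dict[int, float] = {}
    for name, angle in targets.items():
        if name not in JOINTS:
            raise ValueError(f"unknown degree of freedom: {name}")
        joint = JOINTS[name]
        frame[joint.channel] = min(max(angle, joint.min_deg), joint.max_deg)
    return frame


if __name__ == "__main__":
    print(dof_per_organ(JOINTS))
    print(build_frame({"jaw": 150.0, "eyelid": 90.0}))  # jaw clamped to 120.0
```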

[0024] As shown in Figure 4, the head organs include eyeb...
