Distributed visual sensing network-based movable navigation system

A visual sensing and navigation technology, applied in the field of communication and control, that addresses the problems of relatively fixed working patterns, difficult robot navigation, and large computation and storage requirements, and achieves high reliability.

Examples

Embodiment 1

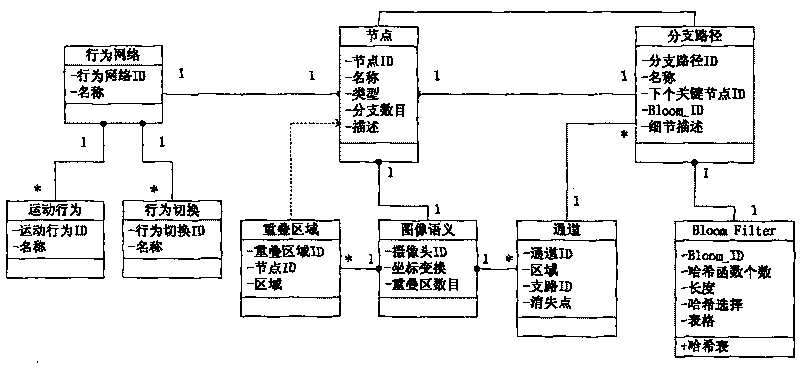

[0024] A mobile navigation system based on a distributed visual sensor network comprises a visual network system and moving objects. The visual network system consists mainly of a plurality of visual sensors connected through a wireless or wired network to provide an intelligent environment. Each moving object has a control box that communicates with the visual network system, and the visual network system controls the object's movement.

[0025] Each visual sensor includes a camera and a microprocessor. The camera captures video information. The microprocessor performs real-time optical flow extraction on the video information, monitors changes in the background image, detects intruding objects, determines moving entities, and outputs target motion information carrying entity tags or semantics.
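As a rough illustration of the background-change monitoring step described in [0025], the sketch below uses simple background differencing on grayscale frames held as NumPy arrays. It is not the patent's algorithm and does not implement optical flow; the function names, the `threshold`, `alpha`, and `min_pixels` parameters, and the `"moving_entity"` tag are all assumptions made for the example.

```python
import numpy as np


def update_background(background, frame, alpha=0.05):
    """Blend the new frame into the background model (alpha is an assumed learning rate)."""
    return (1.0 - alpha) * background + alpha * frame


def detect_motion(background, frame, threshold=25.0, min_pixels=4):
    """Return tagged motion info for pixels that differ from the background, or None."""
    # Pixels whose intensity changed by more than the threshold count as foreground.
    mask = np.abs(frame.astype(np.float64) - background) > threshold
    if mask.sum() < min_pixels:
        return None  # change too small to treat as an intruding object
    rows, cols = np.nonzero(mask)
    return {
        "tag": "moving_entity",  # hypothetical entity label
        "centroid": (float(rows.mean()), float(cols.mean())),
        "area": int(mask.sum()),
    }


# Synthetic example: an empty 8x8 scene, then a bright 2x2 object appears.
background = np.zeros((8, 8))
frame = np.zeros((8, 8))
frame[2:4, 5:7] = 200.0
info = detect_motion(background, frame)
```

In a real sensor the centroid would be tracked across frames to estimate velocity, and the background model would be refreshed with `update_background` only where no motion was detected.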

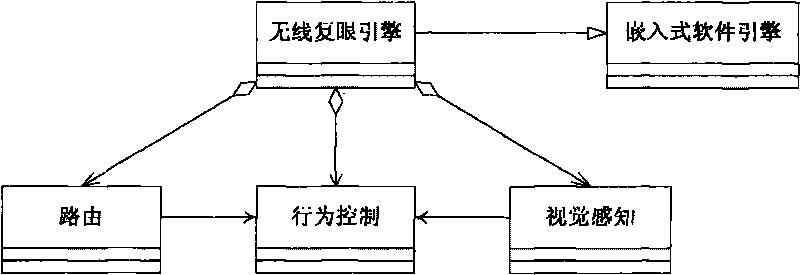

[0026] The camera is a CMOS camera or a closed-circuit surveillance camera; the camera is provided with an embedded software engine, and the embedded software engine includes a routing ...

Embodiment 2

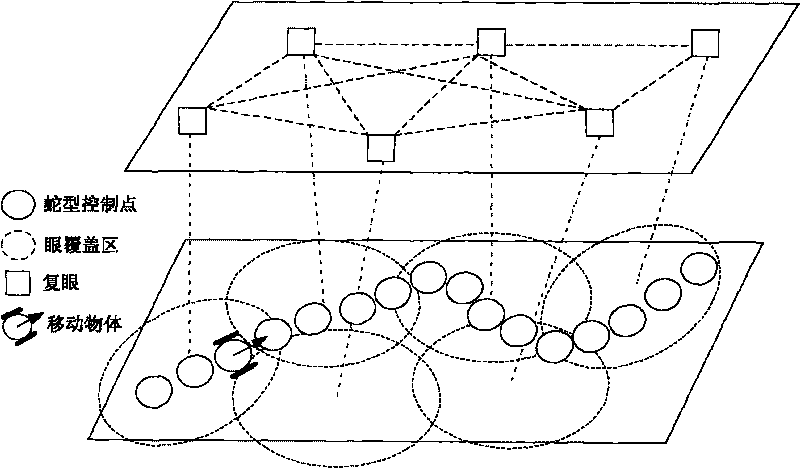

[0029] The overall system block diagram of this embodiment is shown in Figure 1 and includes the following four parts:

[0030] 1. Distributed vision sensors are installed in the navigation environment. Each vision sensor can be a dedicated CMOS camera or an existing closed-circuit surveillance camera. A microprocessor performs real-time optical flow extraction on the video information, background image change detection, intruding object detection, and determination of moving entities, and outputs target motion information with entity tags or semantics. Each vision sensor communicates with the other vision sensors through a wireless or wired network to form a distributed vision network.
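The source does not specify how tagged motion information is exchanged between sensors. One possible shape for such a message, sketched here purely as an assumption, is a small record serialized to JSON before being sent over the wired or wireless link. The `MotionReport` class, its fields, and the `encode` and `decode` helpers are hypothetical names.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class MotionReport:
    """Hypothetical message one vision sensor might share with its neighbours."""
    sensor_id: str
    entity_tag: str
    position: tuple   # (x, y) in an assumed shared floor frame, metres
    velocity: tuple   # (vx, vy) in metres per second
    timestamp: float  # seconds, assumed common clock


def encode(report):
    """Serialize a report to UTF-8 JSON bytes for transmission."""
    return json.dumps(asdict(report)).encode("utf-8")


def decode(payload):
    """Rebuild a report from received bytes, restoring tuple fields."""
    data = json.loads(payload.decode("utf-8"))
    data["position"] = tuple(data["position"])
    data["velocity"] = tuple(data["velocity"])
    return MotionReport(**data)


report = MotionReport("cam-03", "vehicle", (1.5, 2.0), (0.2, 0.0), 12.5)
received = decode(encode(report))
```

A text format such as JSON keeps the example readable; a deployed network with constrained microprocessors might prefer a compact binary encoding instead.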

[0031] 2. A mobile vehicle wirelessly controlled by the distributed vision network. The mobile vehicle has very little on-board computing power and only simple emergency stop and obstacle avoidance capabilities, while the motion control is coordinated by the visual sensor that controls the current...
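The division of labour stated in [0031], where the network supplies motion commands and the vehicle keeps only a simple emergency stop, could look roughly like the following on the vehicle side. This is a minimal sketch under assumptions: the `Command` type, the `arbitrate` function, and the 0.3 m stop distance are invented for illustration and do not come from the source.

```python
from dataclasses import dataclass


@dataclass
class Command:
    linear: float   # forward speed, metres per second
    angular: float  # turn rate, radians per second


STOP = Command(0.0, 0.0)


def arbitrate(remote_cmd, obstacle_distance_m, stop_distance_m=0.3):
    """Pick the command to execute: the local emergency stop overrides the network."""
    # Stop if the link delivered nothing or an obstacle is inside the safety margin.
    if remote_cmd is None or obstacle_distance_m < stop_distance_m:
        return STOP
    return remote_cmd


cruise = Command(0.5, 0.1)
clear_path = arbitrate(cruise, obstacle_distance_m=1.0)
blocked = arbitrate(cruise, obstacle_distance_m=0.1)
link_lost = arbitrate(None, obstacle_distance_m=1.0)
```

Keeping the safety check local means the vehicle still halts when the wireless link drops, which fits the low on-board computing budget described above.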
