Remote Sensing Image Fusion Method Based on Sparse Tensor Nearest Neighbor Embedding

A remote sensing image fusion method using sparse techniques, applied in the field of remote sensing image fusion. It addresses the problems of existing methods, which fail to make full use of the available information, ignore high-order statistical characteristics, and produce spectral distortion in the fusion results. Its effects are reduced spectral and color distortion, enhanced spatial resolution, and improved fusion performance.


Specific Embodiment

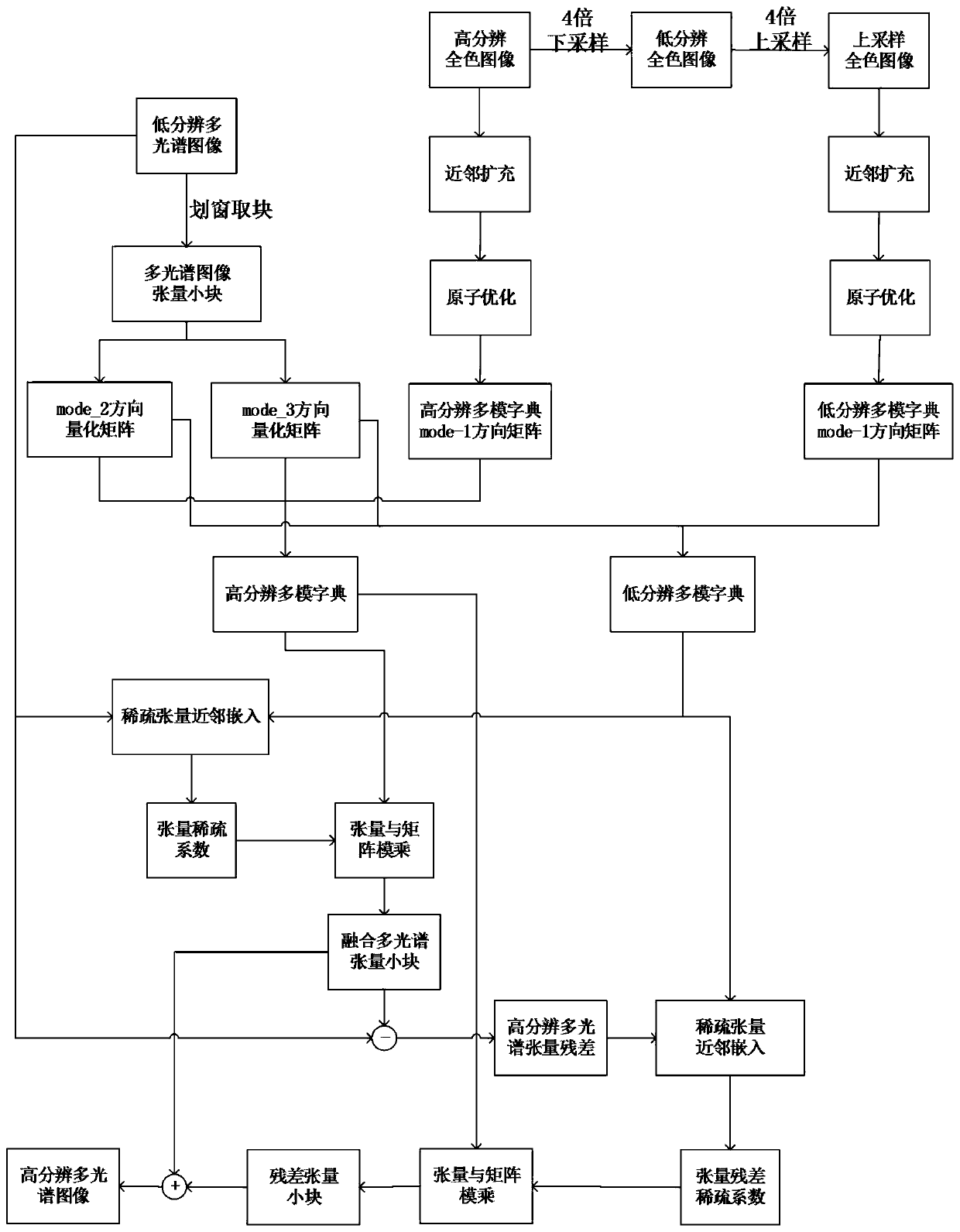

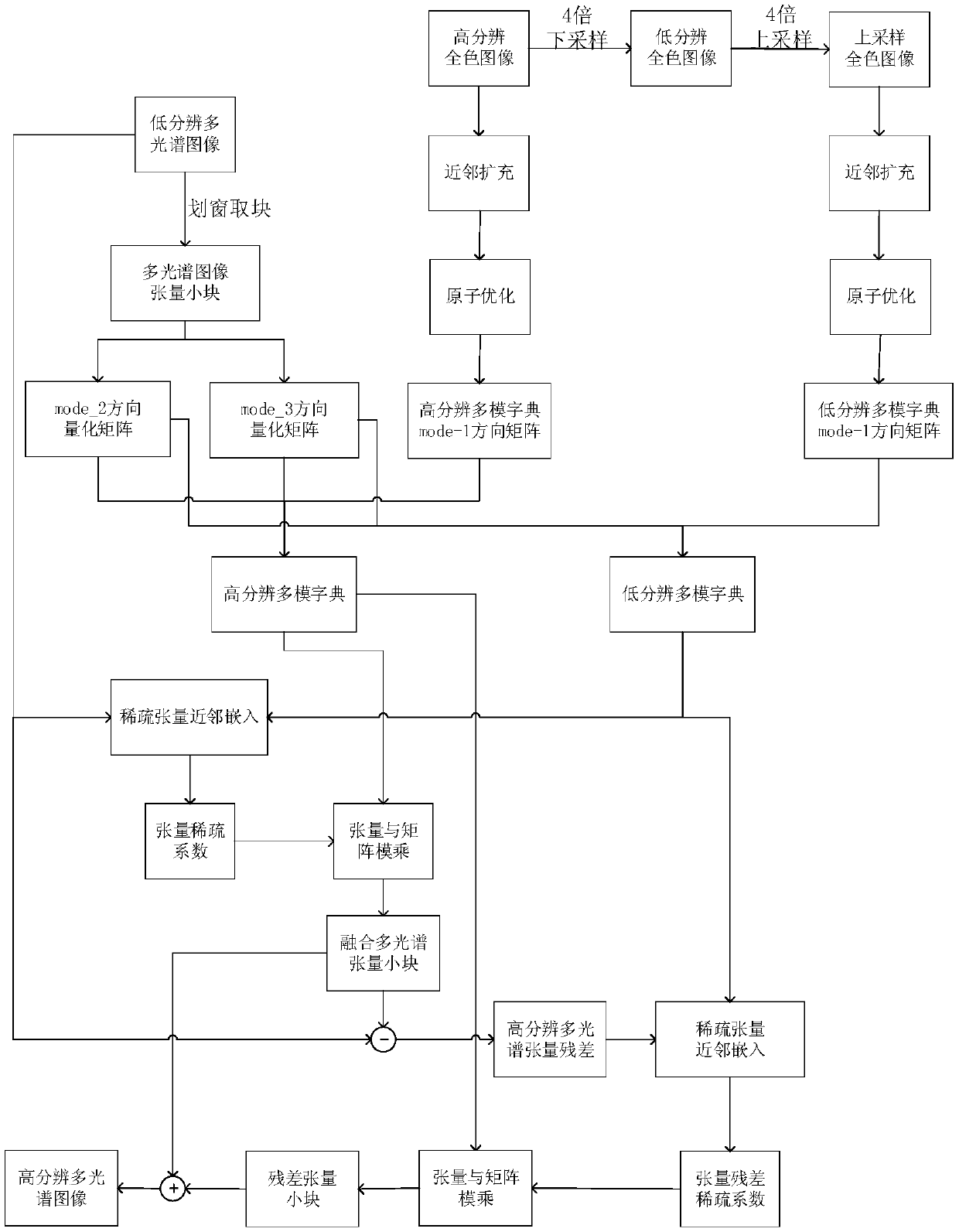

[0041] Referring to Figure 1, the specific embodiment of the present invention is as follows:

[0042] Step 1: Input the low-resolution multispectral image M and the high-resolution panchromatic image P.

[0043] (1.1) In this embodiment, the input low-resolution multispectral image M has a size of 64×64×4 and a resolution of 2 m; the high-resolution panchromatic image P has a size of 256×256 and a resolution of 0.5 m;
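The sizes and resolutions in (1.1) are mutually consistent: 256 / 64 = 4 and 2 m / 0.5 m = 4, so both images cover the same ground extent (64 × 2 m = 256 × 0.5 m = 128 m) and P has four times the linear resolution of M. The minimal Python sketch below checks this ratio; the helper `scale_factor` and its parameters are hypothetical and not part of the original method.

```python
import math


def scale_factor(ms_shape, pan_shape, ms_gsd_m, pan_gsd_m):
    """Return the integer MS-to-PAN scale factor (hypothetical helper).

    ms_shape / pan_shape: (rows, cols, ...) of the multispectral and panchromatic images.
    ms_gsd_m / pan_gsd_m: pixel size (ground sampling distance) in metres.
    """
    size_ratio_rows = pan_shape[0] / ms_shape[0]
    size_ratio_cols = pan_shape[1] / ms_shape[1]
    gsd_ratio = ms_gsd_m / pan_gsd_m
    # The pixel-count ratio must match the resolution ratio for both images to cover the same area.
    if not (math.isclose(size_ratio_rows, gsd_ratio) and math.isclose(size_ratio_cols, gsd_ratio)):
        raise ValueError("image sizes and resolutions are inconsistent")
    if not math.isclose(gsd_ratio, round(gsd_ratio)):
        raise ValueError("scale factor is not an integer")
    return round(gsd_ratio)


# Values from the embodiment: M is 64x64x4 at 2 m, P is 256x256 at 0.5 m.
print(scale_factor((64, 64, 4), (256, 256), 2.0, 0.5))  # prints 4
```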

[0044] (1.2) Upsample the low-resolution multispectral image M to the same size as the high-resolution panchromatic image P to obtain an upsampled multispectral image M1. In this embodiment, the size of M1 is 256×256×4.
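The source does not state which interpolation kernel is used in (1.2). As a minimal sketch, assuming bilinear interpolation applied independently to each band (bicubic interpolation is another common choice), the upsampling of M to M1 could look like the NumPy code below; `upsample_bilinear` is a hypothetical name.

```python
import numpy as np


def upsample_bilinear(img: np.ndarray, factor: int) -> np.ndarray:
    """Bilinearly upsample an H x W x B image by an integer factor (assumed kernel)."""
    h, w = img.shape[:2]
    out_h, out_w = h * factor, w * factor
    # Map output pixel centres back to input coordinates (pixel-centre alignment),
    # clamping at the borders so edge pixels are replicated.
    ys = np.clip((np.arange(out_h) + 0.5) / factor - 0.5, 0, h - 1)
    xs = np.clip((np.arange(out_w) + 0.5) / factor - 0.5, 0, w - 1)
    y0 = np.floor(ys).astype(int)
    x0 = np.floor(xs).astype(int)
    y1 = np.minimum(y0 + 1, h - 1)
    x1 = np.minimum(x0 + 1, w - 1)
    # Fractional distances, shaped to broadcast over (rows, cols, bands).
    wy = (ys - y0)[:, None, None]
    wx = (xs - x0)[None, :, None]
    # Interpolate along columns on the two neighbouring rows, then along rows.
    top = img[y0][:, x0] * (1 - wx) + img[y0][:, x1] * wx
    bottom = img[y1][:, x0] * (1 - wx) + img[y1][:, x1] * wx
    return top * (1 - wy) + bottom * wy


rng = np.random.default_rng(0)
M = rng.random((64, 64, 4))  # stand-in for the 64x64x4 multispectral image
M1 = upsample_bilinear(M, 4)
print(M1.shape)  # (256, 256, 4)
```

Because each output value is a convex combination of neighbouring input pixels, the interpolated bands stay within the original value range of each band.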

[0045] Step 2: Input the high-resolution panchromatic image P and obtain from it a downsampled panchromatic image P1 and an upsampled panchromatic image P2.

[0046] (2.1) Input a high-resolution panchromatic image P, and downsample it to obtain a downsampled panchromatic image P1 with a size of 64×64;
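The source does not specify the downsampling filter used in (2.1). A minimal sketch, assuming simple 4×4 block averaging (each pixel of P1 is the mean of the 4×4 block of P that it covers), is shown below; `downsample_block_mean` is a hypothetical name, and Gaussian low-pass filtering followed by decimation would be an equally plausible choice. The sketch covers only P1, since the excerpt does not describe how P2 is formed.

```python
import numpy as np


def downsample_block_mean(img: np.ndarray, factor: int) -> np.ndarray:
    """Downsample a 2-D image by averaging non-overlapping factor x factor blocks (assumed filter)."""
    h, w = img.shape
    if h % factor or w % factor:
        raise ValueError("image size must be divisible by the factor")
    # Split rows and columns into (block index, offset within block) axes, then average each block.
    return img.reshape(h // factor, factor, w // factor, factor).mean(axis=(1, 3))


P = np.random.default_rng(0).random((256, 256))  # stand-in for the 256x256 panchromatic image
P1 = downsample_block_mean(P, 4)
print(P1.shape)  # (64, 64)
```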

...
