Autonomous landing method and system for unmanned aerial vehicle based on monocular vision and electronic device

A monocular-vision autonomous landing technology, applied in the field of visual navigation, that addresses the problem of low positioning accuracy.

Examples

Embodiment 1

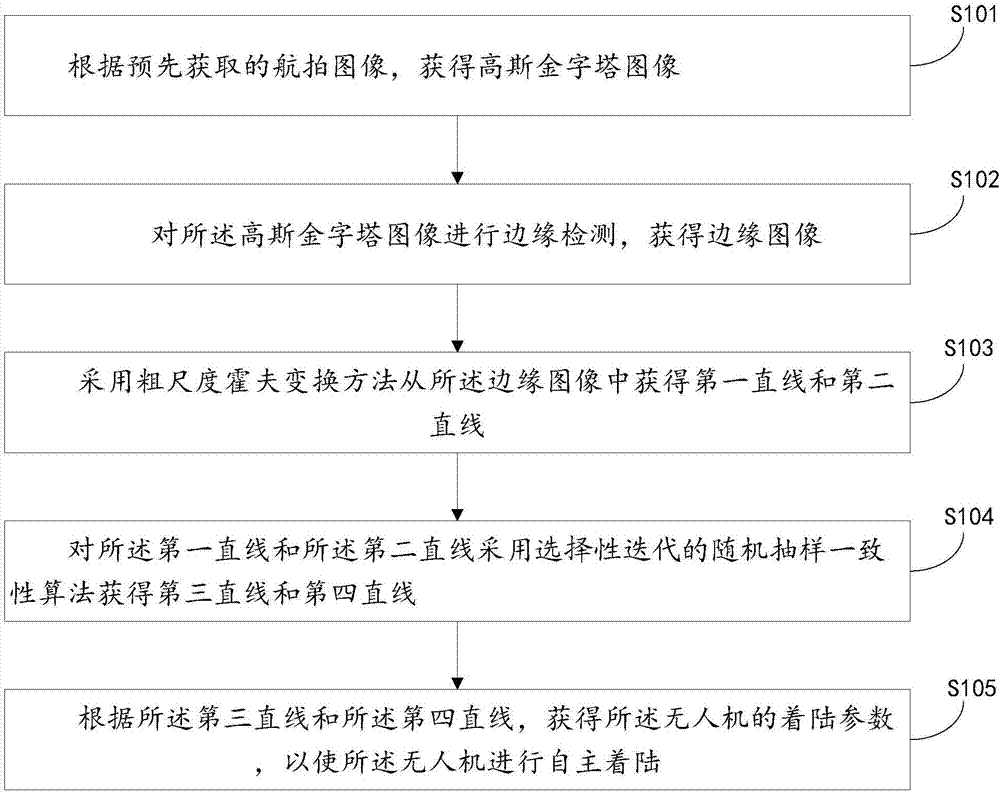

[0023] This embodiment provides a method for autonomous landing of drones based on monocular vision. Referring to Figure 1, the method includes:

[0024] Step S101: Obtain a Gaussian pyramid image from a pre-acquired aerial image;

[0025] Step S102: Perform edge detection on the Gaussian pyramid image to obtain an edge image;

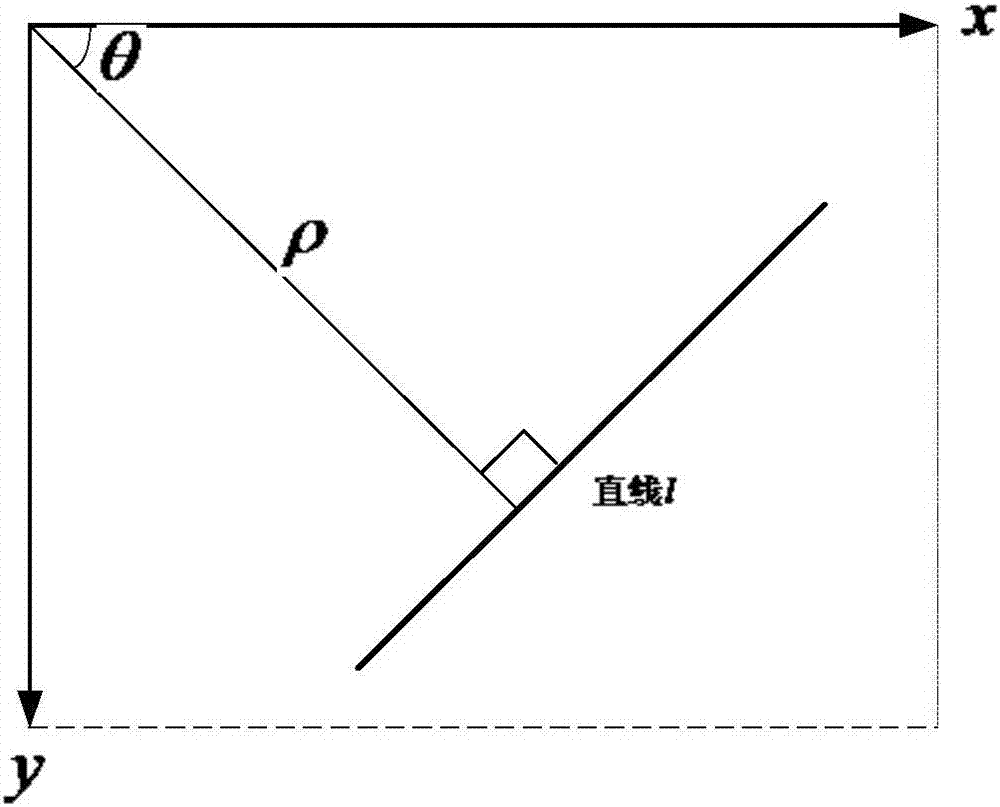

[0026] Step S103: Obtain a first straight line and a second straight line from the edge image by using a coarse-scale Hough transform;

[0027] Step S104: Obtain a third straight line and a fourth straight line by applying a selective iterative random sample consensus (RANSAC) algorithm to the first straight line and the second straight line;

[0028] Step S105: Obtain the landing parameters of the UAV according to the third straight line and the fourth straight line, so that the UAV can land autonomously.
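Steps S103 and S104 above can be illustrated with a minimal pure-Python sketch for a single line. It is not the patented procedure: plain RANSAC stands in for the "selective iterative" variant, whose details are not given in this excerpt, and restricting sampling to a band around the coarse line is only one possible reading of "selective". The function names, the `(x, y)` edge-point format, and all parameter values (`theta_step_deg`, `rho_step`, `band`, `tol`, `iters`) are assumptions made for illustration.

```python
import math
import random


def coarse_hough(points, theta_step_deg=5.0, rho_step=4.0):
    """Vote on a coarse (theta, rho) grid; return the centre of the best bin.

    Lines use the normal form x*cos(theta) + y*sin(theta) = rho.
    Assumes `points` is a non-empty list of (x, y) edge pixels.
    """
    votes = {}
    n_theta = int(round(180.0 / theta_step_deg))
    for x, y in points:
        for i in range(n_theta):
            theta = math.radians(i * theta_step_deg)
            rho = x * math.cos(theta) + y * math.sin(theta)
            key = (i, int(round(rho / rho_step)))
            votes[key] = votes.get(key, 0) + 1
    (i, j), _ = max(votes.items(), key=lambda kv: kv[1])
    return math.radians(i * theta_step_deg), j * rho_step


def line_distance(p, theta, rho):
    """Perpendicular distance from point p to the line (theta, rho)."""
    return abs(p[0] * math.cos(theta) + p[1] * math.sin(theta) - rho)


def ransac_refine(points, theta0, rho0, band=6.0, tol=1.0, iters=200, seed=0):
    """Refine a coarse line with plain RANSAC on the points near it.

    Only points within `band` of the coarse line are sampled (assumed
    interpretation of "selective"). Returns ((theta, rho), inlier_count).
    """
    rng = random.Random(seed)
    near = [p for p in points if line_distance(p, theta0, rho0) <= band]
    if len(near) < 2:
        return (theta0, rho0), 0
    best, best_count = (theta0, rho0), -1
    for _ in range(iters):
        (x1, y1), (x2, y2) = rng.sample(near, 2)
        dx, dy = x2 - x1, y2 - y1
        norm = math.hypot(dx, dy)
        if norm == 0:
            continue  # identical points define no line
        nx, ny = -dy / norm, dx / norm  # unit normal of the candidate line
        theta, rho = math.atan2(ny, nx), x1 * nx + y1 * ny
        if rho < 0:  # normalise so that rho is non-negative
            theta, rho = theta + math.pi, -rho
        theta %= 2 * math.pi
        count = sum(1 for p in near if line_distance(p, theta, rho) <= tol)
        if count > best_count:
            best, best_count = (theta, rho), count
    return best, best_count
```

Running `coarse_hough` on the edge pixels and passing its result to `ransac_refine` gives one refined line; a second line could be obtained in the same way after removing the inliers of the first. The coarse grid keeps the voting cheap, and the refinement recovers the accuracy that the coarse bins give up.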

[0029] It should be noted that, in this application, Gaussian pyramid images are first obtained based on pre-acquired aerial i...
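As a rough illustration of the Gaussian pyramid mentioned in this note, the sketch below blurs a grayscale image with a separable 5-tap binomial kernel and keeps every second row and column at each level. The kernel, the replicated border handling, the list-of-lists image format, and the function names are assumptions; the excerpt does not specify how the patent builds its pyramid.

```python
# 5-tap binomial approximation of a Gaussian; the weights sum to 1.
KERNEL = (1 / 16, 4 / 16, 6 / 16, 4 / 16, 1 / 16)


def blur_1d(values):
    """Convolve a 1-D sequence with KERNEL, replicating the border values."""
    n = len(values)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(KERNEL):
            j = min(max(i + k - 2, 0), n - 1)  # clamp index to the valid range
            acc += w * values[j]
        out.append(acc)
    return out


def pyr_down(img):
    """Blur rows, then columns, then keep every second row and column."""
    rows = [blur_1d(r) for r in img]
    cols = [blur_1d(list(c)) for c in zip(*rows)]  # transpose and blur columns
    blurred = [list(r) for r in zip(*cols)]        # transpose back
    return [r[::2] for r in blurred[::2]]


def gaussian_pyramid(img, levels):
    """Return [img, half-size image, quarter-size image, ...] with `levels` entries."""
    pyramid = [img]
    for _ in range(levels - 1):
        pyramid.append(pyr_down(pyramid[-1]))
    return pyramid
```

Running edge detection on a coarser pyramid level reduces the number of pixels to process and suppresses fine texture, which is one common reason for building a pyramid before line extraction.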

Embodiment 2

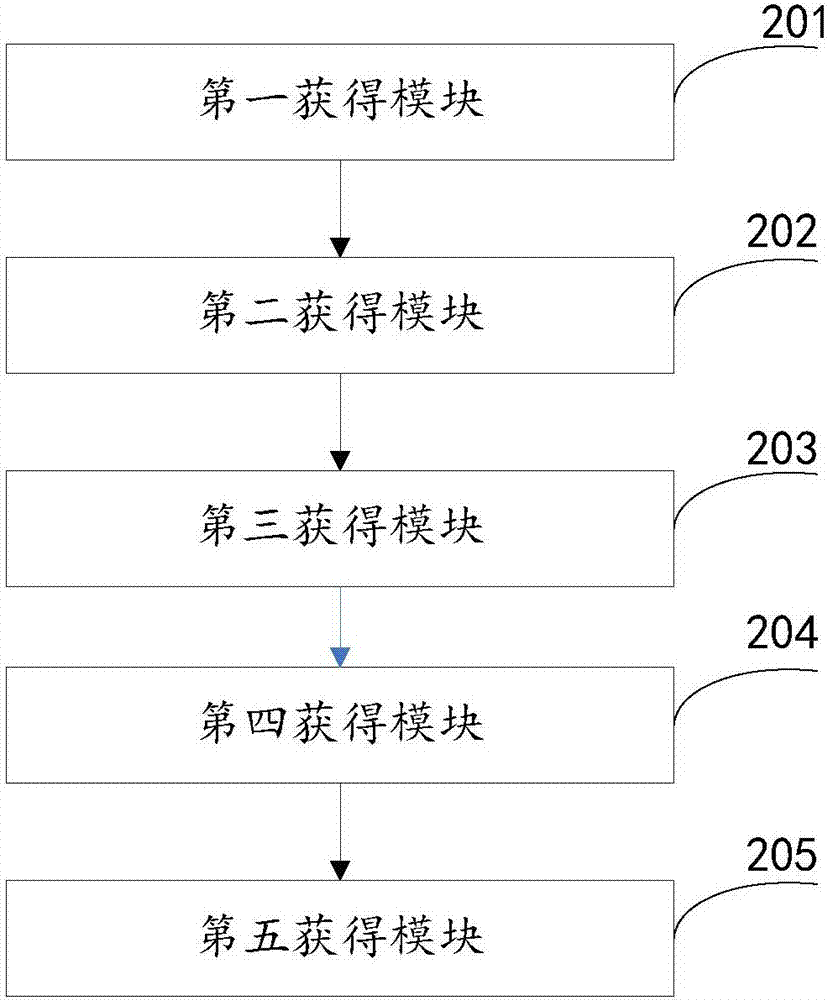

[0070] Based on the same inventive concept as Embodiment 1, Embodiment 2 of the present invention provides a system for autonomous landing of drones based on monocular vision. Referring to Figure 2, the system includes:

[0071] A first obtaining module 201, configured to obtain a Gaussian pyramid image from a pre-acquired aerial image;

[0072] A second obtaining module 202, configured to perform edge detection on the Gaussian pyramid image to obtain an edge image;

[0073] A third obtaining module 203, configured to obtain a first straight line and a second straight line from the edge image by using a coarse-scale Hough transform;

[0074] A fourth obtaining module 204, configured to obtain a third straight line and a fourth straight line by applying a selective iterative random sample consensus (RANSAC) algorithm to the first straight line and the second straight line;

[0075] The fifth obtaining module 205 is configured to obtain the landing parameters o...

Embodiment 3

[0100] Based on the same inventive concept as Embodiment 1, Embodiment 3 of the present invention provides an electronic device. Referring to Figure 6, the device comprises a memory 301, a processor 302, and a computer program stored in the memory 301 and executable on the processor 302. When executing the program, the processor 302 implements the following steps:

[0101] Obtain a Gaussian pyramid image based on the pre-acquired aerial image;

