Multi-layer feedforward neural network parallel accelerator

A multi-layer feed-forward neural network parallel accelerator technology, applied in the field of multi-layer feed-forward neural network parallel accelerators. It addresses the problems that data migration and computation cannot reach higher efficiency, that transistors cannot function effectively, and that scalability is poor. It achieves lower resource consumption, adjustable parallelism, and good scalability.

Embodiment Construction

[0027] The present invention is described in detail below with reference to the accompanying drawings and specific embodiments.

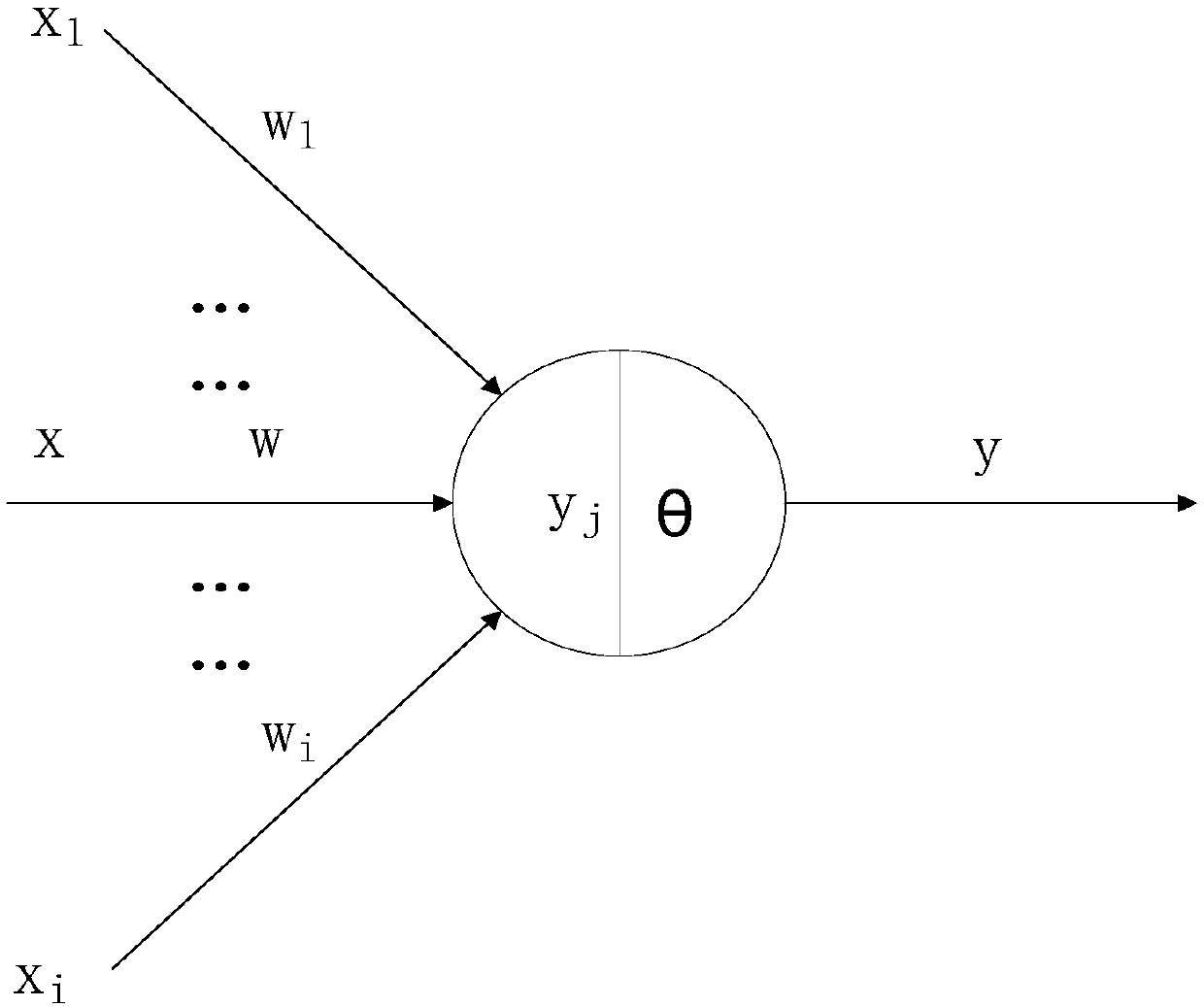

[0028] A typical neuron is shown in Figure 1. It receives input signals from n other neurons, and these signals are transmitted through weighted connections. The total input received by the neuron is compared with the neuron's threshold, and the result is then processed by an "activation function" to produce the neuron's output.
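The neuron model in paragraph [0028] can be written as y = f(w_1 x_1 + ... + w_n x_n - θ), where θ is the threshold and f is the activation function. The following is a minimal sketch of that model, assuming the comparison with the threshold is implemented as subtraction before activation. The patent does not name an activation function, so the logistic `sigmoid` and the hard `step` below are illustrative choices, and all function names are hypothetical.

```python
import math
from typing import Callable, Sequence


def sigmoid(z: float) -> float:
    """Logistic activation (an assumed choice; the source does not name one)."""
    return 1.0 / (1.0 + math.exp(-z))


def step(z: float) -> float:
    """Hard-threshold activation: fires when the net input reaches the threshold."""
    return 1.0 if z >= 0.0 else 0.0


def neuron_output(
    inputs: Sequence[float],
    weights: Sequence[float],
    threshold: float,
    activation: Callable[[float], float] = sigmoid,
) -> float:
    """Weighted sum of n inputs, compared against the threshold, then activated."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one connection weight")
    # Total input received through the weighted connections.
    total_input = sum(w * x for w, x in zip(weights, inputs))
    # Comparing with the threshold is modelled as subtracting it before activation.
    return activation(total_input - threshold)
```

For example, `neuron_output([1.0, 1.0], [0.5, 0.5], 1.0)` has a total input exactly equal to the threshold, so the sigmoid version returns 0.5 and the `step` version returns 1.0.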

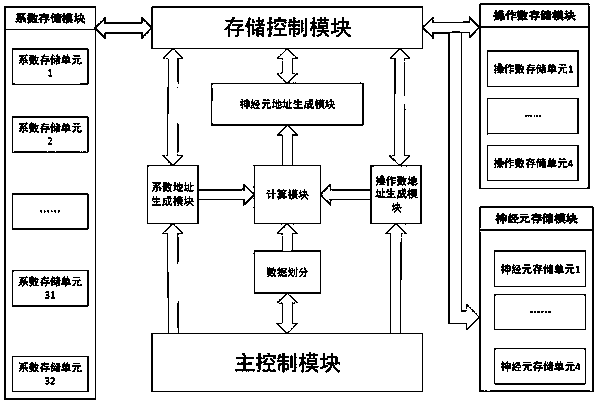

[0029] As shown in Figure 2, the multi-layer feed-forward neural network parallel accelerator of this embodiment mainly consists of a main control module, a data division module, a coefficient address generation module, an operand address generation module, a neuron address generation module, a storage control module, and a storage module.
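To illustrate how the three address generation modules could cooperate, the sketch below emulates one layer of computation over a single flat memory that stands in for the storage module. The memory layout is an assumption, not taken from the patent: inputs, coefficients (stored row-major, one row per neuron), thresholds, and outputs each occupy a contiguous region starting at a hypothetical base address. The storage control module is not modelled, and the helper names are hypothetical.

```python
import math
from typing import List


def sigmoid(z: float) -> float:
    # Assumed activation function; the patent text shown does not specify one.
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical address generators over a flat memory (row-major coefficients).
def coefficient_address(coef_base: int, neuron: int, inp: int, n_in: int) -> int:
    return coef_base + neuron * n_in + inp


def operand_address(in_base: int, inp: int) -> int:
    return in_base + inp


def neuron_address(out_base: int, neuron: int) -> int:
    return out_base + neuron


def layer_forward(mem: List[float], n_in: int, n_out: int,
                  in_base: int, coef_base: int, thr_base: int, out_base: int) -> None:
    """Compute one layer in place: read operands and coefficients, write neuron outputs."""
    for j in range(n_out):
        acc = 0.0
        for i in range(n_in):
            w = mem[coefficient_address(coef_base, j, i, n_in)]
            x = mem[operand_address(in_base, i)]
            acc += w * x
        # Subtract the neuron's threshold, activate, and store at the neuron address.
        mem[neuron_address(out_base, j)] = sigmoid(acc - mem[thr_base + j])
```

Because every access goes through an address generator, chaining layers only requires pointing the next layer's `in_base` at the previous layer's `out_base`; the data itself never has to be copied.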

[0030] The main control module receives the system start signal and calls the data division module to allocate the calculation of hidden layer neu...
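Paragraph [0030] is truncated in the source, so the exact division scheme is not available. The sketch below shows one plausible way a data division step could split hidden-layer neurons among a configurable number of parallel units: contiguous, balanced blocks whose sizes differ by at most one. The balanced-block strategy, the `parallelism` parameter, and the use of threads to emulate hardware units are all assumptions for illustration.

```python
import math
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence


def sigmoid(z: float) -> float:
    # Assumed activation function.
    return 1.0 / (1.0 + math.exp(-z))


def divide_neurons(n_neurons: int, parallelism: int) -> List[range]:
    """Split neuron indices 0..n_neurons-1 into `parallelism` contiguous, balanced blocks."""
    if n_neurons < 0 or parallelism <= 0:
        raise ValueError("need n_neurons >= 0 and parallelism > 0")
    base, extra = divmod(n_neurons, parallelism)
    parts, start = [], 0
    for p in range(parallelism):
        # The first `extra` units take one additional neuron each.
        size = base + (1 if p < extra else 0)
        parts.append(range(start, start + size))
        start += size
    return parts


def hidden_layer_parallel(x: Sequence[float], W: Sequence[Sequence[float]],
                          theta: Sequence[float], parallelism: int) -> List[float]:
    """Evaluate a hidden layer with each block of neurons handled by its own worker."""
    parts = divide_neurons(len(W), parallelism)

    def compute(block: range) -> List[float]:
        return [sigmoid(sum(w * xi for w, xi in zip(W[j], x)) - theta[j]) for j in block]

    with ThreadPoolExecutor(max_workers=parallelism) as pool:
        # map() returns results in block order, so concatenation preserves neuron order.
        chunks = list(pool.map(compute, parts))
    return [y for chunk in chunks for y in chunk]
```

For instance, `divide_neurons(10, 3)` yields blocks of 4, 3, and 3 neurons. Changing `parallelism` changes only how the work is split, not the result, which mirrors the adjustable parallelism claimed for the accelerator.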
