A CNN iterative training method for an artificial intelligence framework

The technology relates to artificial intelligence and training methods and is applied in the field of video recognition. It addresses the problems of complex iterative training and a low degree of automation, offering a simple principle and a high degree of automation.

Examples

Embodiment 1

[0033] A method for iterative CNN training in an artificial intelligence framework, using a sample set, characterized in that it comprises the following steps:

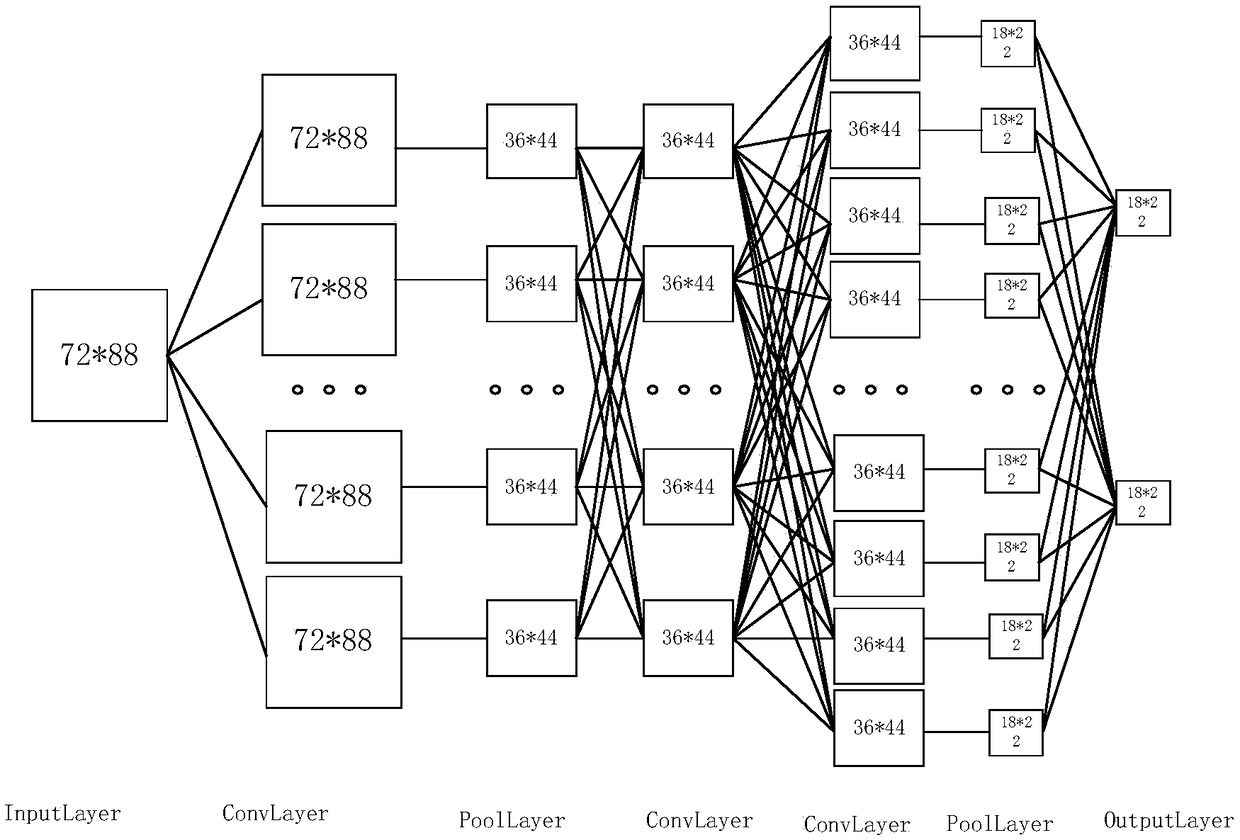

[0034] S1: Construct a CNN network structure. This CNN network structure is the training network, which comprises three convolutional layers, two pooling layers and an output layer, where:

[0035] The first layer is a convolutional layer using the ReLU activation function; the convolution is performed with edge padding;

[0036] The second layer is a pooling layer that uses max pooling;

[0037] The third layer is a convolutional layer using the ReLU activation function; the convolution is performed with edge padding;

[0038] The fourth layer is a convolutional layer using the ReLU activation function; the convolution is performed with edge padding;

[0039] The fifth layer is a pooling layer, using the ReLU activation function;

...
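The layer order in paragraphs [0035] to [0039] can be illustrated by tracing tensor shapes through the stack. This is only a sketch under stated assumptions: the filter counts, the 3x3 kernel, the 2x2 pooling window, and the 288x352x3 input are illustrative values that the text does not give; "convolution with edges" is read here as "same" padding, and the fifth layer is assumed to use max pooling. The output layer is left out because its description is elided above.

```python
# Layer order follows [0035]-[0039]. Every numeric value below is an
# illustrative assumption; the source does not state kernel sizes,
# filter counts, or pooling window sizes.
LAYERS = [
    ("conv", {"filters": 32, "kernel": 3, "padding": "same", "activation": "relu"}),
    ("pool", {"size": 2, "mode": "max"}),
    ("conv", {"filters": 64, "kernel": 3, "padding": "same", "activation": "relu"}),
    ("conv", {"filters": 64, "kernel": 3, "padding": "same", "activation": "relu"}),
    ("pool", {"size": 2, "mode": "max"}),  # pooling mode assumed for layer five
]


def trace_shapes(height, width, channels, layers=LAYERS):
    """Return the (height, width, channels) shape after each layer."""
    shapes = [(height, width, channels)]
    for kind, cfg in layers:
        if kind == "conv":
            # With "same" padding and stride 1 the spatial size is unchanged;
            # only the channel count changes to the number of filters.
            channels = cfg["filters"]
        elif kind == "pool":
            # Non-overlapping pooling divides each spatial dimension.
            height //= cfg["size"]
            width //= cfg["size"]
        else:
            raise ValueError(f"unknown layer kind: {kind}")
        shapes.append((height, width, channels))
    return shapes


if __name__ == "__main__":
    for shape in trace_shapes(288, 352, 3):
        print(shape)
```

Under these assumptions, padded convolutions leave the spatial size untouched, so only the two pooling layers shrink the feature map.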

Embodiment 2

[0046] This embodiment differs from Embodiment 1 in that the sample set is a video image sample set, obtained by capturing key frames from video samples.

[0047] Further, the method includes classifying the video image sample set. The sample images are divided into two categories: images that contain objects and images that do not.

[0048] Further, the method includes extracting a test set and a training set from the video image sample set according to a preset size.
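A minimal sketch of the split described in [0048], assuming each sample is a `(path, label)` pair where label 1 means "contains objects" and 0 means "does not". The function name `split_samples`, the random shuffle, and the fixed seed are illustrative choices, not details from the source.

```python
import random


def split_samples(samples, test_size, seed=0):
    """Split labelled samples into (train, test) with a preset test-set size.

    `samples` is a sequence of (path, label) pairs. The shuffle and the
    seed are assumptions made for this sketch; the source only says the
    sets are extracted according to a preset size.
    """
    if not 0 <= test_size <= len(samples):
        raise ValueError("test_size must be between 0 and len(samples)")
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)  # reproducible shuffle
    test = shuffled[:test_size]
    train = shuffled[test_size:]
    return train, test


if __name__ == "__main__":
    # Hypothetical file names, used only to demonstrate the call.
    data = [(f"frame_{i:03d}.png", i % 2) for i in range(10)]
    train, test = split_samples(data, test_size=3)
    print(len(train), len(test))
```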

[0049] Further, step S2 also includes transforming the images in the training set. The image transformations include horizontal translation, vertical translation, rotation, changing the image contrast, changing the brightness, setting the range and degree of the blurred area, and adjusting the amount of noise. The number of transformations can also be set, with detailed control over each type of transformation. The image transformati...
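Some of the transformations listed in [0049] can be sketched with NumPy as follows. The sketch covers only translation, brightness, contrast, and noise; rotation and blurring are omitted for brevity. All function names, parameter names, and default ranges are assumptions made for illustration, since the source does not specify them.

```python
import numpy as np


def translate(img, dx=0, dy=0):
    """Shift the image dx pixels right and dy pixels down, zero-filling gaps."""
    out = np.zeros_like(img)
    h, w = img.shape[:2]
    if abs(dx) >= w or abs(dy) >= h:
        return out  # shifted entirely out of view
    src = img[max(0, -dy):min(h, h - dy), max(0, -dx):min(w, w - dx)]
    y0, x0 = max(0, dy), max(0, dx)
    out[y0:y0 + src.shape[0], x0:x0 + src.shape[1]] = src
    return out


def adjust_brightness(img, delta):
    """Add a constant offset to every pixel, clipped to [0, 255]."""
    return np.clip(img.astype(np.float32) + delta, 0, 255).astype(np.uint8)


def adjust_contrast(img, factor):
    """Scale pixel values around the image mean by `factor`."""
    pixels = img.astype(np.float32)
    mean = pixels.mean()
    return np.clip((pixels - mean) * factor + mean, 0, 255).astype(np.uint8)


def add_noise(img, sigma, rng):
    """Add zero-mean Gaussian noise with standard deviation `sigma`."""
    noise = rng.normal(0.0, sigma, size=img.shape)
    return np.clip(img.astype(np.float32) + noise, 0, 255).astype(np.uint8)


def augment(img, rng, max_shift=4, max_brightness=30.0,
            contrast_range=(0.8, 1.2), max_sigma=5.0):
    """Apply one random combination of the transformations above.

    The ranges are illustrative defaults, not values from the source.
    """
    dx = int(rng.integers(-max_shift, max_shift + 1))
    dy = int(rng.integers(-max_shift, max_shift + 1))
    out = translate(img, dx, dy)
    out = adjust_brightness(out, rng.uniform(-max_brightness, max_brightness))
    out = adjust_contrast(out, rng.uniform(*contrast_range))
    return add_noise(out, rng.uniform(0.0, max_sigma), rng)
```

Calling `augment(image, np.random.default_rng(0))` several times on the same training image yields several variants, which is one way the amount of transformation per image could be controlled.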

Embodiment 3

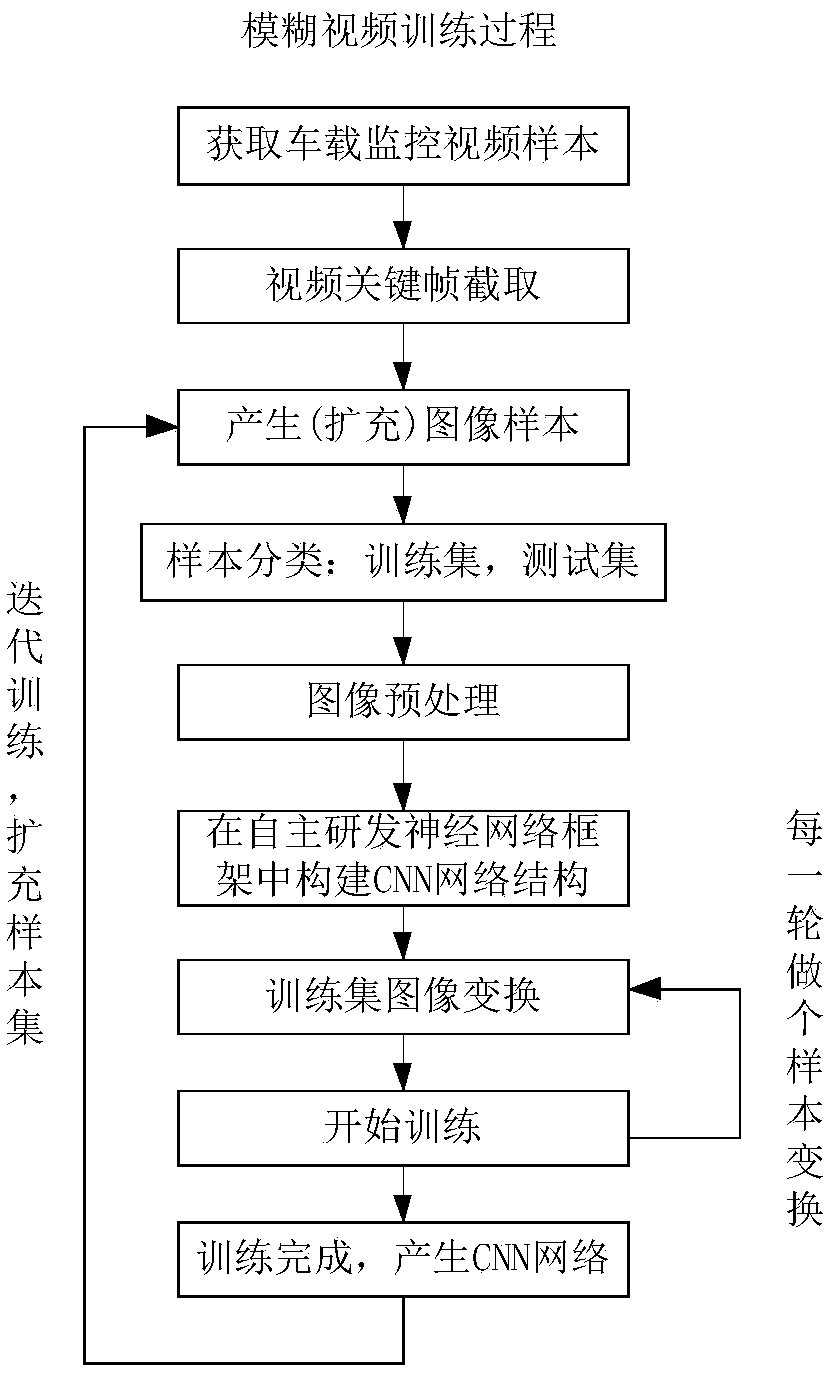

[0052] As shown in Figures 1 and 2, the specific steps of the detailed training process for blurred video recognition are as follows:

[0053] First, obtain the vehicle monitoring video samples. The sample data in this embodiment comes from in-vehicle video of passenger vehicles and trucks on a third-party monitoring platform, and the acquired monitoring video generally has a resolution of 352*288. The samples can also be obtained in other ways, and the resolution is not limited to 352*288. After a large number of vehicle surveillance videos have been obtained, key frames are captured from them. A video is composed of many frames, so analyzing the degree of blur of a video can, to a certain extent, be replaced by analyzing the degree of blur of individual frames. This method uses the OpenCV graphics library for image capture. The length of the selected video is about 60s, and each video...
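The frame-capture step in [0053] might look like the sketch below, which uses OpenCV's `VideoCapture`. The source does not say how key frames are chosen or how many are taken per video, so evenly spaced sampling is substituted here as a stand-in, and `count`, `evenly_spaced_indices`, and `capture_frames` are hypothetical names.

```python
import os


def evenly_spaced_indices(total_frames, count):
    """Return up to `count` frame indices spread evenly across a video.

    Even spacing is an assumption of this sketch, not the source's
    key-frame selection rule.
    """
    if total_frames <= 0 or count <= 0:
        return []
    count = min(count, total_frames)
    step = total_frames / count
    # Take the middle of each of the `count` equal segments.
    return [int(step * i + step / 2) for i in range(count)]


def capture_frames(video_path, out_dir, count):
    """Save `count` evenly spaced frames of a video as PNG files."""
    import cv2  # OpenCV; imported here so the helper above runs without it

    os.makedirs(out_dir, exist_ok=True)
    cap = cv2.VideoCapture(video_path)
    if not cap.isOpened():
        raise IOError(f"cannot open video: {video_path}")
    saved = []
    try:
        total = int(cap.get(cv2.CAP_PROP_FRAME_COUNT))
        for idx in evenly_spaced_indices(total, count):
            cap.set(cv2.CAP_PROP_POS_FRAMES, idx)  # seek to the frame
            ok, frame = cap.read()
            if not ok:
                continue  # skip frames that cannot be decoded
            path = os.path.join(out_dir, f"frame_{idx:06d}.png")
            cv2.imwrite(path, frame)
            saved.append(path)
    finally:
        cap.release()
    return saved
```

Seeking with `CAP_PROP_POS_FRAMES` can be imprecise for some codecs; reading frames sequentially and keeping only the selected indices is a slower but more predictable alternative.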
