3D point cloud segmentation method based on attention network

This attention-network technique, applied in the field of computer vision, addresses insufficient capture of global context information, blurred object boundaries, and missing context information. It facilitates identification and segmentation, enhances spatial differences, and achieves good segmentation results.

Examples

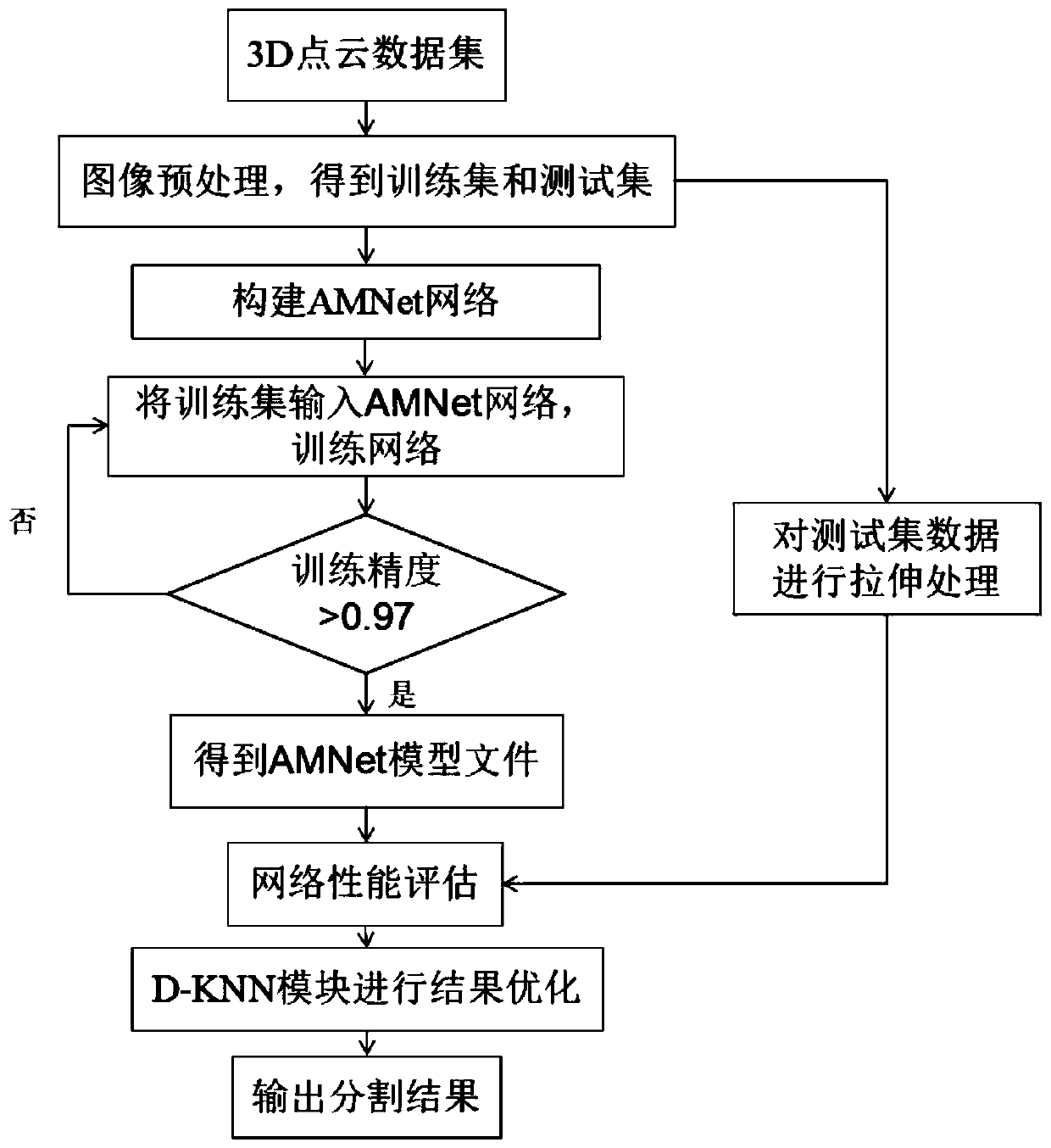

Embodiment 1

[0021] The widespread use of 3D scanning devices has produced large amounts of point cloud data, while applications such as 3D printing, virtual reality, and scene reconstruction place a variety of demands on how that data is processed. Point cloud processing, and point cloud segmentation in particular, is the basis of many applications and tasks, including 3D reconstruction, scene understanding, and target recognition and tracking. Segmentation results benefit object recognition and classification, and segmentation is a hot and difficult research problem in artificial intelligence that has attracted growing attention from researchers.

[0022] Existing point cloud segmentation networks, such as PointNet, PointNet++, and PointSIFT, feed 3D point cloud data directly into the network for training, but still do not make full use of global context information to learn better featu...

Embodiment 2

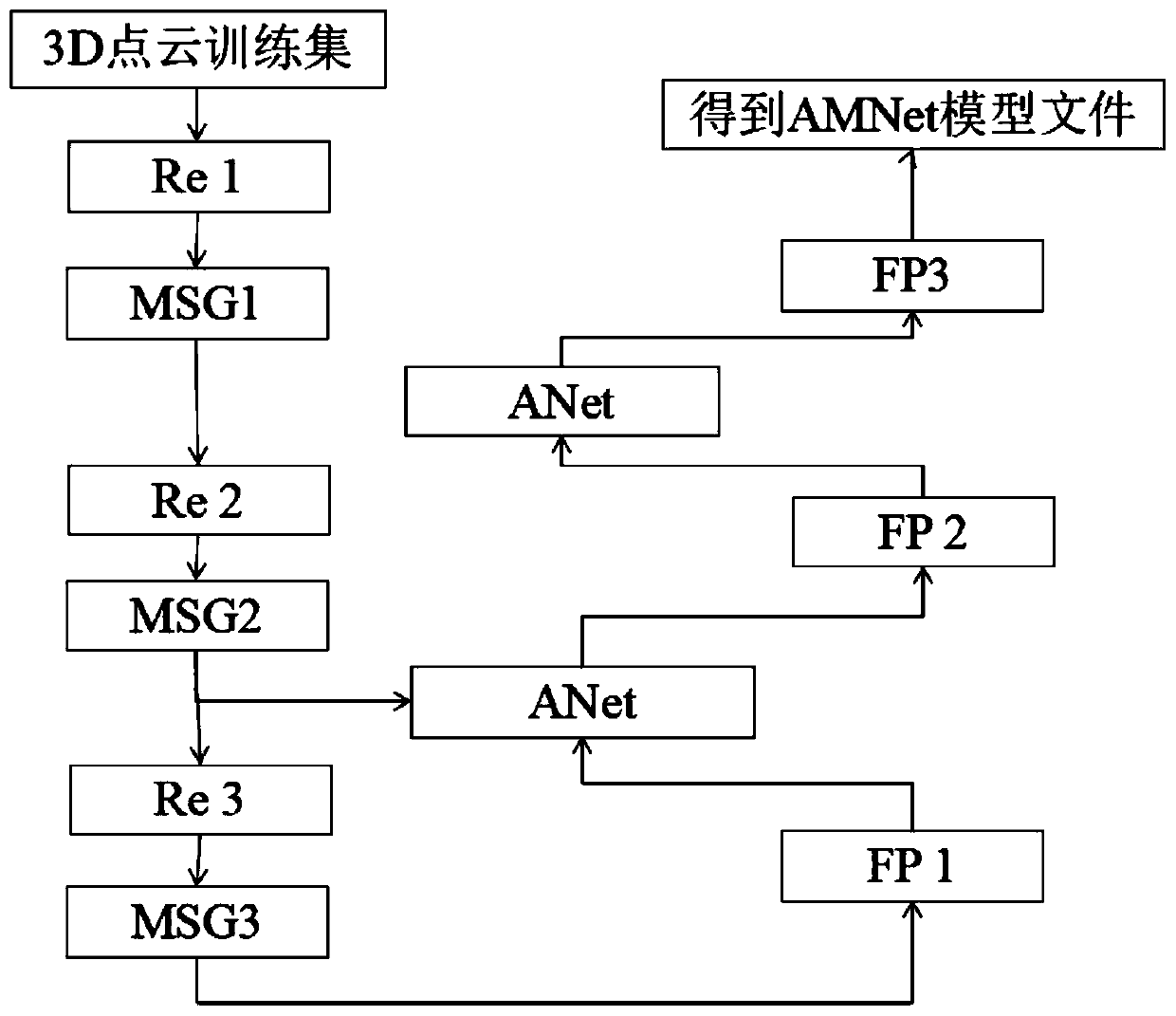

[0032] The 3D point cloud segmentation method based on the attention network is the same as in Embodiment 1; obtaining the AMNet model file described in step 2 specifically comprises the following steps:

[0033] (2.1) Build the training network: an attention network (ANet for short) and a multi-scale module (Multi-scale group model, MSG for short) are combined into a point cloud segmentation network called AMNet. The AMNet backbone includes an MSG module, an ANet branch network, three downsampling layers (Res model, Re for short), and three upsampling layers (FP model, FP for short).
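The excerpt names the three upsampling layers "FP" but gives no internals. In PointNet++, FP (feature propagation) layers upsample by interpolating features from a sparse point set back onto a denser one, using the k = 3 nearest sparse points weighted by inverse squared distance. The sketch below assumes AMNet's FP layers follow that convention; the function name `feature_propagation` and its parameters are hypothetical, and the skip-link concatenation and per-point MLP that PointNet++ applies after interpolation are omitted.

```python
import numpy as np

def feature_propagation(xyz_dense, xyz_sparse, feat_sparse, k=3, eps=1e-8):
    """Hypothetical FP step (PointNet++-style): interpolate sparse features onto dense points.

    xyz_dense: (N, 3), xyz_sparse: (M, 3), feat_sparse: (M, C) -> returns (N, C).
    """
    # Squared distances from every dense point to every sparse point: (N, M).
    d2 = np.sum((xyz_dense[:, None, :] - xyz_sparse[None, :, :]) ** 2, axis=-1)
    nn = np.argsort(d2, axis=1)[:, :k]                  # k nearest sparse points per dense point
    nn_d2 = np.take_along_axis(d2, nn, axis=1)          # (N, k) squared distances to them
    w = 1.0 / (nn_d2 + eps)                             # inverse squared-distance weights
    w = w / w.sum(axis=1, keepdims=True)                # normalise weights to sum to 1
    return np.einsum("nk,nkc->nc", w, feat_sparse[nn])  # weighted average of neighbour features
```

A dense point that coincides with a sparse point receives (almost exactly) that point's feature, because its weight dominates; constant sparse features interpolate to the same constant.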

[0034] The attention branch network (Attention Network, ANet for short) includes two transposition units, two multiplication units, one addition unit, and two convolutional layers; each convolutional layer uses a 1×1 kernel with a stride of 1.
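The paragraph lists ANet's components but not how they are wired. One plausible wiring, which is an assumption and not confirmed by the source, is a self-attention block over points: two 1×1 convolutions (which, on per-point features, are just per-point linear maps) produce two embeddings; a transpose and a matrix multiplication give an N×N point-to-point affinity; a second transpose and multiplication aggregate features with those affinities; and the addition unit adds the result back to the input as a residual. The row-wise softmax is also an assumption, since the source does not mention a normalization step. All names (`conv1x1`, `anet_branch`, `w_q`, `w_k`) are hypothetical.

```python
import numpy as np

def conv1x1(x, weight, bias):
    """A 1x1, stride-1 convolution over (C_in, N) point features is a per-point linear map."""
    return weight @ x + bias[:, None]

def softmax(a, axis=-1):
    a = a - a.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(a)
    return e / e.sum(axis=axis, keepdims=True)

def anet_branch(x, w_q, b_q, w_k, b_k):
    """Hypothetical ANet wiring. x: (C, N) features for N points; returns ((C, N), (N, N))."""
    q = conv1x1(x, w_q, b_q)          # convolutional layer 1 -> (C', N)
    k = conv1x1(x, w_k, b_k)          # convolutional layer 2 -> (C', N)
    energy = q.T @ k                  # transposition 1 + multiplication 1 -> (N, N)
    attn = softmax(energy, axis=-1)   # assumption: row-wise softmax, so attn[i] sums to 1
    context = x @ attn.T              # transposition 2 + multiplication 2: point i mixes all points j by attn[i, j]
    return x + context, attn          # addition unit: residual connection
```

With all-zero weights the affinities are uniform, so every point receives the mean feature of the cloud; this is an easy way to see that each output point draws on global context rather than a local neighbourhood.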

[0035] The multi-scale module (MSG module) includes MSG1, MSG2, and MSG3. M...
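The description of MSG1 to MSG3 is cut off here. In PointNet++, multi-scale grouping (MSG) samples centroids with farthest point sampling and then gathers neighbours around each centroid with ball queries at several radii. The sketch below assumes, hypothetically, that MSG1, MSG2, and MSG3 correspond to three such scales; the radii, group sizes, and function names are illustrative, and the per-scale PointNet feature extraction that would follow is omitted.

```python
import numpy as np

def farthest_point_sampling(xyz, n_centroids, start=0):
    """Greedy FPS: repeatedly pick the point farthest from all points chosen so far."""
    chosen = [start]
    dist = np.full(xyz.shape[0], np.inf)
    for _ in range(n_centroids - 1):
        dist = np.minimum(dist, np.linalg.norm(xyz - xyz[chosen[-1]], axis=1))
        chosen.append(int(np.argmax(dist)))
    return np.array(chosen)

def ball_query(xyz, centroid_idx, radius, k):
    """Up to k neighbours within radius of each centroid, padded by repeating the first hit."""
    groups = []
    for c in centroid_idx:
        d = np.linalg.norm(xyz - xyz[c], axis=1)
        idx = np.flatnonzero(d <= radius)[:k]  # non-empty: the centroid itself has distance 0
        if len(idx) < k:
            idx = np.concatenate([idx, np.full(k - len(idx), idx[0])])
        groups.append(idx)
    return np.stack(groups)

def multi_scale_group(xyz, n_centroids, radii, ks):
    """Hypothetical MSG: one shared set of centroids, one local group per (radius, k) scale."""
    centroids = farthest_point_sampling(xyz, n_centroids)
    groups = []
    for r, k in zip(radii, ks):
        idx = ball_query(xyz, centroids, r, k)          # (n_centroids, k)
        local = xyz[idx] - xyz[centroids][:, None, :]   # coordinates relative to each centroid
        groups.append(local)                            # (n_centroids, k, 3)
    return centroids, groups
```

For example, `multi_scale_group(xyz, 8, radii=[0.1, 0.2, 0.4], ks=[8, 16, 32])` returns eight centroids and three local groups of increasing extent; small radii capture fine geometry and large radii capture wider context.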

Embodiment 3

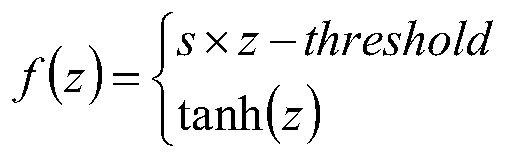

[0052] The 3D point cloud segmentation method based on the attention network is the same as in Embodiments 1-2; the stretching formula described in step 3 is specifically:

[0053]

[0054] $s = 1 - \tanh^2(z_1)$

[0055] $z_1 = -\frac{1}{2}\ln\left(\frac{1 + \mathrm{threshold}}{1 - \mathrm{threshold}}\right)$

[0056] Here f(z) denotes the new z value obtained by applying the stretching formula to the z value of the test-set point cloud data. The threshold controls the slope s of the linear function and the value of $z_1$, the intersection point of the linear function and the tanh function; threshold takes values in [1/2, 1].
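The defining formula for f(z) in [0053] did not survive extraction. The two surviving formulas can be simplified, and a piecewise form reconstructed, under the assumption (consistent with [0056] and [0057]) that the linear piece passes through the intersection point $(z_1, \tanh z_1)$ with slope $s$. Because $\tfrac{1}{2}\ln\frac{1+t}{1-t} = \operatorname{artanh}(t)$, the slope $s$ turns out to equal the derivative of $\tanh$ at $z_1$, so the line is tangent to the curve there. This is a reconstruction, not the patent's own equation.

```latex
\begin{aligned}
z_1 &= -\tfrac{1}{2}\ln\frac{1+\mathrm{threshold}}{1-\mathrm{threshold}}
     = -\operatorname{artanh}(\mathrm{threshold})
     \quad\Rightarrow\quad \tanh(z_1) = -\mathrm{threshold} \\
s   &= 1 - \tanh^2(z_1) = 1 - \mathrm{threshold}^2
     = \left.\frac{d}{dz}\tanh z\right|_{z = z_1} \\
f(z) &= \begin{cases}
          \tanh(z), & z < z_1 \\
          \tanh(z_1) + s\,(z - z_1), & z \ge z_1
        \end{cases}
\end{aligned}
```

For threshold = 1/2 this gives $z_1 \approx -0.549$ and $s = 3/4$. As threshold approaches 1, $z_1 \to -\infty$ and $s \to 0$; at threshold = 1 exactly, the logarithm's argument is undefined, so in practice threshold must stay strictly below 1.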

[0057] The larger the threshold, the farther the intersection point $z_1$ of the linear function and the tanh function lies from the origin. When the z value of a test-set point is smaller than $z_1$, the tanh function is used for stretching; when the z value of a test-set point is greater than or equal to $z_1$, values...
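A vectorised sketch of the stretch applied to the z column of a test-set point cloud follows. It assumes the tangent-line reconstruction (tanh below $z_1$, the line through $(z_1, \tanh z_1)$ with slope $s$ at or above it), because the original f(z) formula is missing and this paragraph is truncated. The name `stretch_z` is hypothetical, and the validity check uses [1/2, 1) because the formula for $z_1$ is undefined at threshold = 1.

```python
import numpy as np

def stretch_z(z, threshold):
    """Hypothetical piecewise stretch: tanh for z < z1, tangent line at z1 for z >= z1."""
    if not 0.5 <= threshold < 1.0:
        raise ValueError("threshold must lie in [0.5, 1)")
    z = np.asarray(z, dtype=float)
    z1 = np.log((1 + threshold) / (1 - threshold)) / -2  # equals -artanh(threshold)
    s = 1 - np.tanh(z1) ** 2                             # slope of tanh at z1, i.e. 1 - threshold**2
    linear = np.tanh(z1) + s * (z - z1)                  # line through (z1, tanh(z1)) with slope s
    return np.where(z < z1, np.tanh(z), linear)
```

Usage on an (N, 3) array of test-set points: `points[:, 2] = stretch_z(points[:, 2], threshold=0.5)`. Since the line is tangent at $z_1$, the two branches meet with matching value and slope, so the stretch introduces no jump in z.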
