A Fast Robot Vision Positioning Method Based on Dead Reckoning

A robot vision positioning method applied in the field of visual positioning of mobile robots. It addresses problems of existing approaches, such as the low propagation speed of ultrasonic signals and positioning accuracy too low for reliable positioning. Its effects are a shorter image-processing time while positioning accuracy is maintained.
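
The summary credits the method with shorter image-processing time but does not say how dead reckoning contributes. One common approach, offered here only as a hypothetical sketch and not as the patented procedure, is to predict the robot's next position from odometry and search only a small window of the image around that prediction. The unicycle motion model, the names `Pose`, `dead_reckon` and `search_window`, and the assumption that image axes coincide with the positioning-area axes at a fixed metres-per-pixel scale are all illustrative assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float      # metres, positioning-area frame (hypothetical convention)
    y: float      # metres
    theta: float  # heading in radians


def dead_reckon(pose: Pose, v: float, omega: float, dt: float) -> Pose:
    """Predict the next pose from linear speed v (m/s) and turn rate omega (rad/s)
    with one explicit-Euler step of a unicycle model (assumed, not from the source)."""
    return Pose(
        x=pose.x + v * dt * math.cos(pose.theta),
        y=pose.y + v * dt * math.sin(pose.theta),
        theta=pose.theta + omega * dt,
    )


def search_window(pose: Pose, m_per_px: float, half_size_m: float,
                  img_w: int, img_h: int) -> tuple[int, int, int, int]:
    """Half-open pixel box (u0, v0, u1, v1) centred on the predicted pose and
    clipped to the image. Assumes image axes coincide with the area axes."""
    u, v, r = pose.x / m_per_px, pose.y / m_per_px, half_size_m / m_per_px
    u0 = max(0, math.floor(u - r))
    v0 = max(0, math.floor(v - r))
    u1 = min(img_w, math.ceil(u + r))
    v1 = min(img_h, math.ceil(v + r))
    return u0, v0, u1, v1
```

For example, `search_window(dead_reckon(Pose(1.0, 0.5, 0.0), 0.2, 0.0, 0.5), 0.005, 0.1, 1280, 960)` limits the search to roughly a 40 × 40 pixel box (0.2 m at 5 mm per pixel) instead of the whole frame; the target detector then runs only inside that box.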

Specific Embodiment

[0035] The complete procedure of the present invention is illustrated below by way of an example:

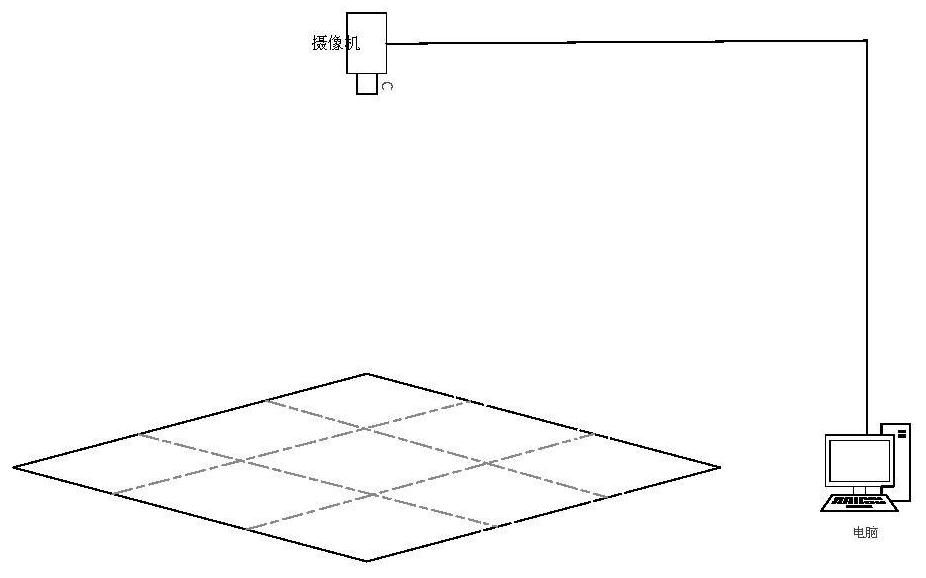

[0036] Step 1. Mount the camera on the R-shaped bracket, fix it at a chosen height, and make sure the camera lens is parallel to the positioning area. Then connect the power supply and network cable, start capturing, and use the host-computer software on the desktop computer to acquire the original color image of the positioning area, as shown in Figure 1.
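
The mounting height in Step 1 determines how much of the floor the camera sees and how fine each pixel is. Under a pinhole model, with the image plane assumed parallel to the floor (our reading of "parallel to the positioning area"), the imaged width is 2h·tan(FOV/2), and the metres-per-pixel scale is uniform across the image when lens distortion is ignored. The function names and the example height, field of view and resolution below are hypothetical; the source gives none of these values.

```python
import math


def floor_span(height_m: float, fov_deg: float) -> float:
    """Floor distance (metres) covered by a field of view of fov_deg degrees
    for a pinhole camera looking straight down from height_m."""
    return 2.0 * height_m * math.tan(math.radians(fov_deg) / 2.0)


def ground_resolution(height_m: float, hfov_deg: float, image_width_px: int) -> float:
    """Metres of floor per pixel along the image width (distortion ignored)."""
    return floor_span(height_m, hfov_deg) / image_width_px
```

For instance, a camera at 3.0 m with a 60° horizontal field of view covers about 3.46 m of floor, which at 1280 pixels is roughly 2.7 mm per pixel. Raising the camera enlarges the area but coarsens the resolution, which is the trade-off behind choosing the bracket height.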

[0037] Step 2. Adjust the lens aperture and focus, then keep them unchanged. Place the calibration board in the positioning area and repeatedly change its pose in front of the lens, collecting multiple images so that calibration-board images in different poses are obtained and saved.
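
Why several board poses are needed can be seen from planar calibration in the style of Zhang's method. This is an assumption: the source does not name the algorithm used in Step 3. With the board at $Z = 0$, each image yields a homography $H = [\,h_1\ h_2\ h_3\,] = \lambda K [\,r_1\ r_2\ t\,]$, and because $r_1, r_2$ are orthonormal, every view contributes two constraints on the intrinsic matrix $K$:

$$
h_1^{\top} B\, h_2 = 0, \qquad h_1^{\top} B\, h_1 = h_2^{\top} B\, h_2, \qquad B = K^{-\top} K^{-1}.
$$

$B$ is symmetric (6 entries) and defined only up to scale, leaving 5 unknowns, so at least $n \ge 3$ views are required in general ($n \ge 2$ if skew is fixed at zero). A board moved only by translation, staying parallel to an earlier pose, adds no new constraints, which is why Step 2 changes the board's orientation rather than just its position.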

[0038] Step 3. After acquisition is complete, process the calibration-board images in MATLAB to perform camera calibration and obtain the camera parameters.
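
Once calibrated, the parameters let a pixel be mapped back to floor coordinates. The sketch below assumes the parameters include focal lengths, a principal point and two radial distortion coefficients (Brown model); this is a common calibration output, but the source does not list which parameters are used. It also assumes the camera looks straight down from a known height. `Intrinsics`, `floor_to_pixel` and `pixel_to_floor` are hypothetical names, and distortion is removed by simple fixed-point iteration, which converges for mild distortion.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intrinsics:
    fx: float        # focal length along u, pixels
    fy: float        # focal length along v, pixels
    cx: float        # principal point u, pixels
    cy: float        # principal point v, pixels
    k1: float = 0.0  # radial distortion coefficients (assumed Brown model)
    k2: float = 0.0


def _radial(k: Intrinsics, xn: float, yn: float) -> float:
    """Radial distortion factor 1 + k1*r^2 + k2*r^4 at normalized point (xn, yn)."""
    r2 = xn * xn + yn * yn
    return 1.0 + k.k1 * r2 + k.k2 * r2 * r2


def floor_to_pixel(k: Intrinsics, X: float, Y: float, height: float) -> tuple[float, float]:
    """Project floor point (X, Y), measured from the spot under the optical centre,
    into distorted pixel coordinates."""
    xn, yn = X / height, Y / height
    s = _radial(k, xn, yn)
    return k.fx * xn * s + k.cx, k.fy * yn * s + k.cy


def pixel_to_floor(k: Intrinsics, u: float, v: float, height: float,
                   iterations: int = 20) -> tuple[float, float]:
    """Invert floor_to_pixel: undistort by fixed-point iteration, then scale by height."""
    xd, yd = (u - k.cx) / k.fx, (v - k.cy) / k.fy
    xn, yn = xd, yd
    for _ in range(iterations):
        s = _radial(k, xn, yn)
        xn, yn = xd / s, yd / s
    return xn * height, yn * height
```

Projecting a floor point with `floor_to_pixel` and feeding the result to `pixel_to_floor` should recover the original point to within floating-point error, which is a quick sanity check on any calibrated parameter set.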

[0039] Step 4. Under normal circ...