Natural image matting method based on deep learning

A natural image matting method based on deep learning, applicable to neural learning methods, image enhancement, image analysis, and related fields. It addresses problems such as difficulty in acquiring high-level semantic information, lack of global features, and reduced accuracy, with the effect of expanding the range of context information and improving matting quality.

Embodiment Construction

[0043] The present invention will be further described below in conjunction with the accompanying drawings and specific embodiments. It should be noted that these descriptions are only exemplary and not intended to limit the scope of the present invention.

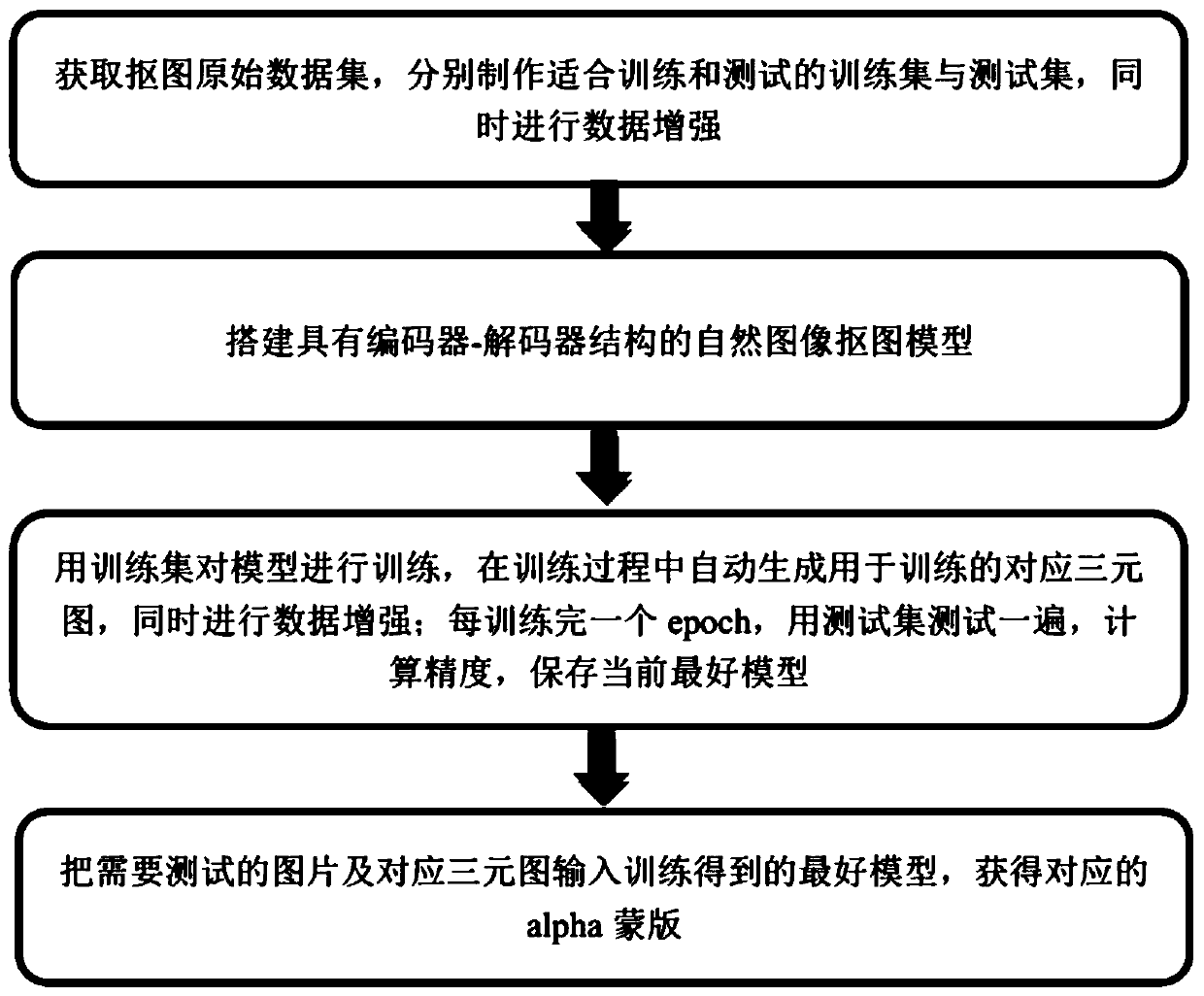

[0044] Figure 1 is a flow chart of a natural image matting method based on deep learning according to an embodiment of the present invention. Some specific implementation processes of the present invention are described below by way of example with reference to Figure 1. The specific steps are as follows:

[0045] Step S1: Obtain a matting dataset, build a training set and a test set from it, and apply data augmentation. The Adobe Deep Matting dataset released by Xu et al. in 2017 is used here as an example.
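The data augmentation mentioned in Step S1 is not specified in the text available here. As a minimal sketch only, assuming a common choice for matting data (a random crop and a random horizontal flip applied identically to the image and its alpha mask), it might look like the following. The function name `augment_pair`, the crop size, and the use of NumPy are illustrative assumptions and are not taken from the patent.

```python
import numpy as np


def augment_pair(image, alpha, crop_size, rng):
    """Apply the same random crop and horizontal flip to an image and its alpha mask.

    Hypothetical helper: the patent text does not state which augmentations it uses.
    image: H x W x 3 array, alpha: H x W array, crop_size: (height, width).
    """
    h, w = alpha.shape
    ch, cw = crop_size
    if ch > h or cw > w:
        raise ValueError("crop_size must fit inside the input")

    # Draw one crop origin and reuse it for both arrays so they stay aligned.
    top = int(rng.integers(0, h - ch + 1))
    left = int(rng.integers(0, w - cw + 1))
    image = image[top:top + ch, left:left + cw]
    alpha = alpha[top:top + ch, left:left + cw]

    # Flip both arrays along the width axis with probability 0.5.
    if rng.random() < 0.5:
        image = image[:, ::-1]
        alpha = alpha[:, ::-1]

    return np.ascontiguousarray(image), np.ascontiguousarray(alpha)


rng = np.random.default_rng(0)
image = rng.random((64, 64, 3))
# Synthetic alpha tied to the first channel so alignment can be checked afterwards.
alpha = image[..., 0].copy()
aug_image, aug_alpha = augment_pair(image, alpha, (32, 32), rng)
```

The key design point is that every geometric transform is sampled once and applied to both arrays; sampling separately for the image and the alpha mask would break their pixel-level correspondence.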

[0046] The Adobe Deep Matting dataset provides only the foreground images and alpha masks for the training set, and the foreground images, alpha masks, and trimaps of the ...
