Distributed memory management method based on network and page granularity management

A memory management technology that operates at page granularity, applied in memory address allocation/relocation, data-processing input/output, memory systems, and similar areas. It addresses problems such as platforms that cannot deliver the required performance and cannot handle data-intensive applications, and achieves excellent performance, low latency, and ease of use.


Examples

Embodiment 1

[0026] The present invention is connected through the PDMM interface listed in Table 1 below:

[0027] Table 1: PDMM interface

[0028]

[0029] Once the external interface provided by PDMM is connected, an application can use the Malloc and Free functions to allocate or release a block of memory in the GPM. Distributed memory management then proceeds according to the following steps:
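For Free (and later accesses) to reach the right node, the value returned by Malloc must identify which node owns the block. The source does not specify an address format, so the sketch below assumes a hypothetical layout: a 64-bit global address whose upper 16 bits hold the owning node's ID and whose lower 48 bits hold a byte offset within that node's share of the GPM. The names `make_gaddr` and `split_gaddr` are illustrative only.

```python
# Hypothetical global-address layout (assumed, not taken from the source):
# upper 16 bits = owning node ID, lower 48 bits = byte offset on that node.
NODE_BITS = 16
OFFSET_BITS = 48
OFFSET_MASK = (1 << OFFSET_BITS) - 1


def make_gaddr(node_id: int, offset: int) -> int:
    """Pack a (node, offset) pair into a single global address."""
    if not 0 <= node_id < (1 << NODE_BITS):
        raise ValueError("node_id out of range")
    if not 0 <= offset <= OFFSET_MASK:
        raise ValueError("offset out of range")
    return (node_id << OFFSET_BITS) | offset


def split_gaddr(gaddr: int) -> tuple:
    """Recover (node, offset) so a Free or an access can be routed to the owner."""
    return gaddr >> OFFSET_BITS, gaddr & OFFSET_MASK


addr = make_gaddr(3, 0x1000)
print(hex(addr), split_gaddr(addr))  # prints: 0x3000000001000 (3, 4096)
```

Packing the owner into the address keeps Free a purely local decode: no directory lookup is needed to find which node must release the block.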

[0030] (1) Allocation request

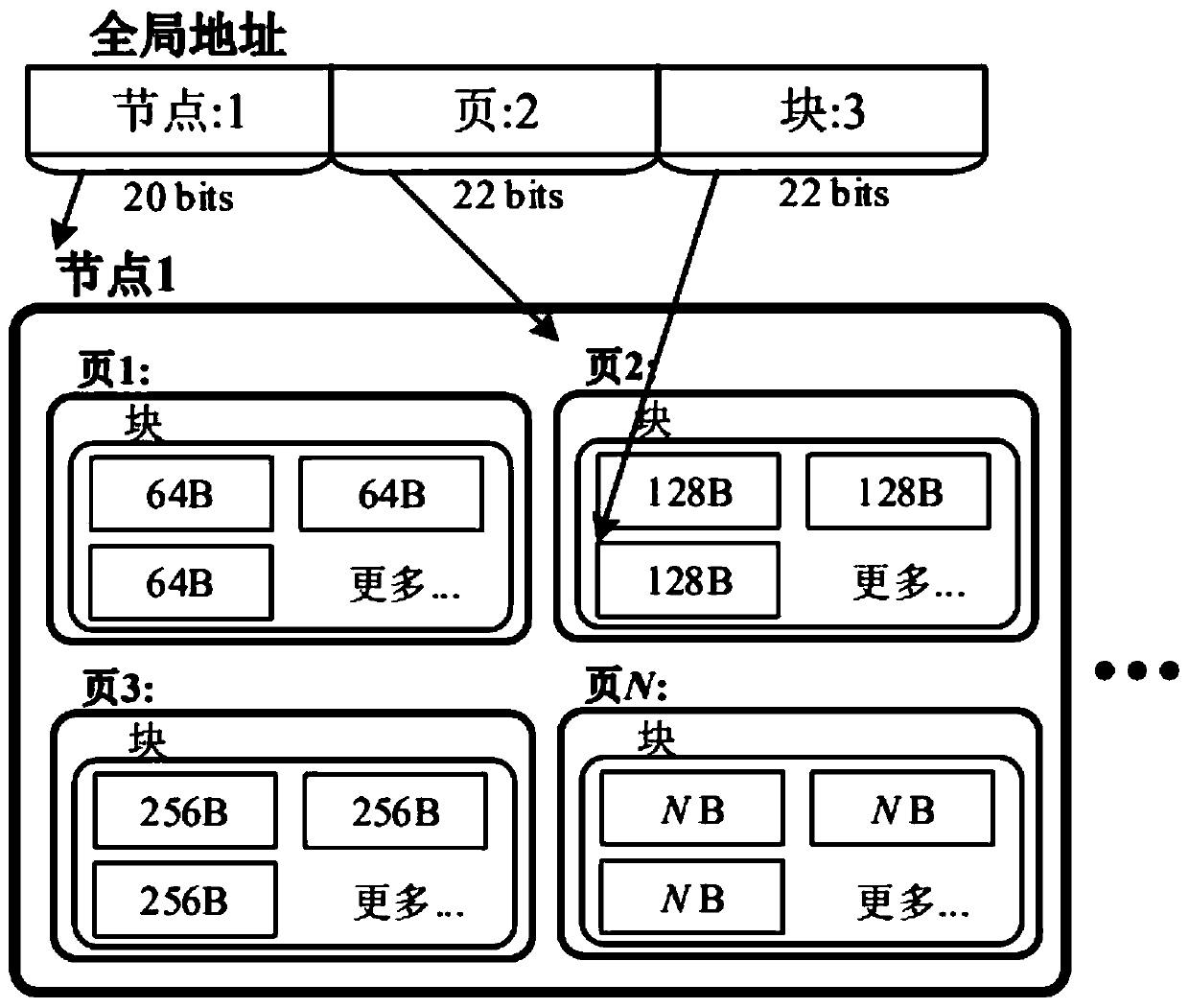

[0031] Referring to Figure 1, a node processes an allocation request (see line 2 of the code block in Figure 1) as follows. It first tries to allocate a block of exactly the requested size from its local memory, according to the given size parameter. If the requested size exceeds the memory remaining on the current node, the node consults the metadata and forwards the allocation request to another node in the cluster.
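The local-first allocation with forwarding described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented implementation: each node uses a simple bump allocator (so Free is not modeled), the "metadata" is assumed to be each node's remaining free space, and the forwarding policy (try the peer with the most free space first) is a hypothetical choice, since the source does not state one.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    node_id: int
    capacity: int
    used: int = 0

    def remaining(self) -> int:
        return self.capacity - self.used

    def try_local_alloc(self, size: int) -> Optional[int]:
        """Bump-allocate exactly `size` bytes locally; None if it does not fit."""
        if size > self.remaining():
            return None
        offset = self.used
        self.used += size
        return offset


class Cluster:
    def __init__(self, nodes: dict[int, Node]) -> None:
        # Assumed metadata: the remaining space of each node, read directly
        # here; a real system would have to propagate it between nodes.
        self.nodes = nodes

    def malloc(self, origin: int, size: int) -> tuple[int, int]:
        """Try the requesting node first, then forward to a peer chosen from metadata."""
        offset = self.nodes[origin].try_local_alloc(size)
        if offset is not None:
            return origin, offset
        # Hypothetical forwarding policy: most remaining space first.
        peers = sorted(
            (n for n in self.nodes.values() if n.node_id != origin),
            key=lambda n: n.remaining(),
            reverse=True,
        )
        for peer in peers:
            offset = peer.try_local_alloc(size)
            if offset is not None:
                return peer.node_id, offset
        raise MemoryError(f"no node can satisfy {size} bytes")


cluster = Cluster({0: Node(0, 1024), 1: Node(1, 4096), 2: Node(2, 2048)})
print(cluster.malloc(origin=0, size=512))   # (0, 0): fits locally
print(cluster.malloc(origin=0, size=1024))  # (1, 0): forwarded to node 1
```

The second request exceeds node 0's remaining 512 bytes, so it is forwarded; node 1 is tried first because it reports the most free space.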

[0032] (2) Memory access

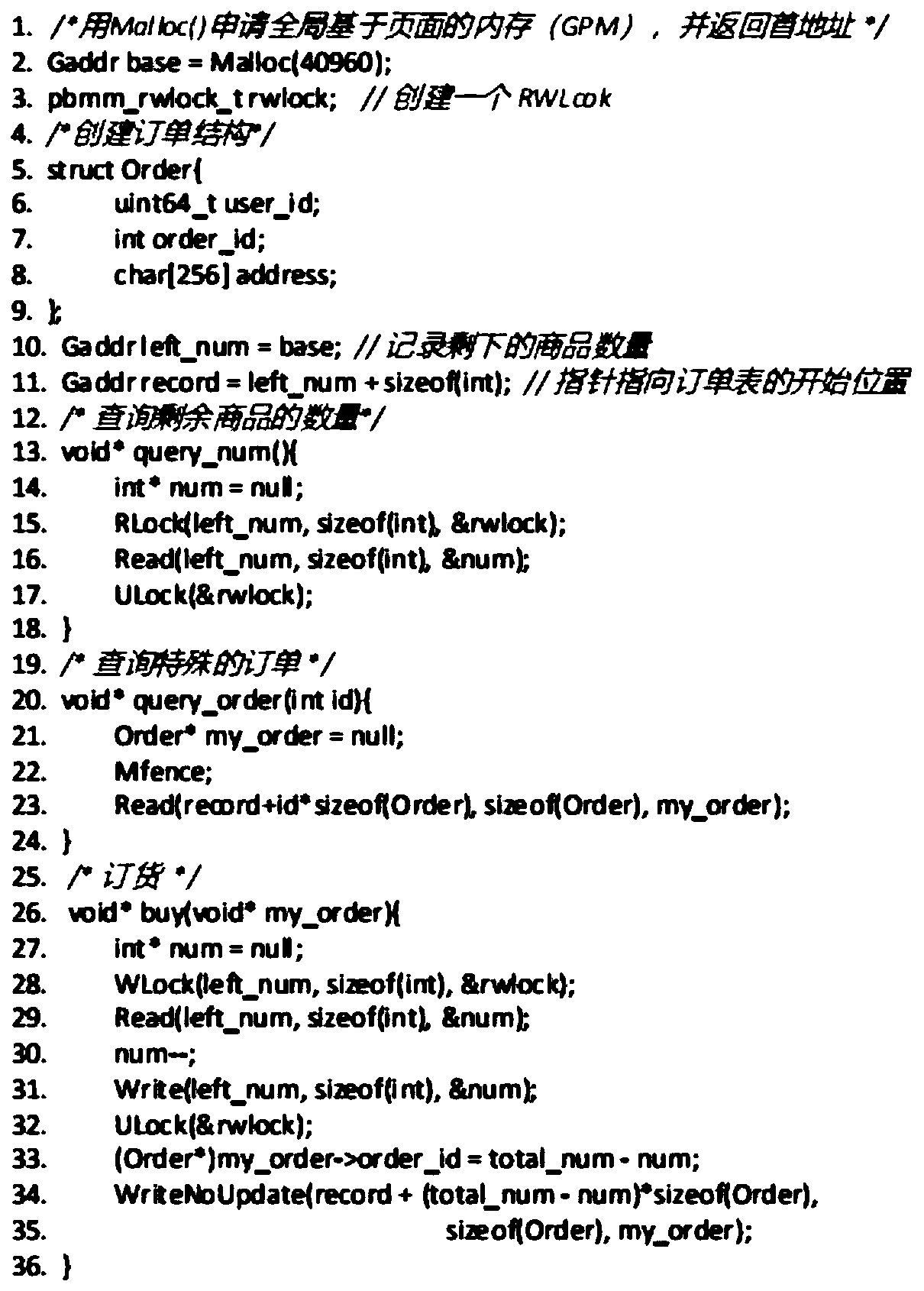

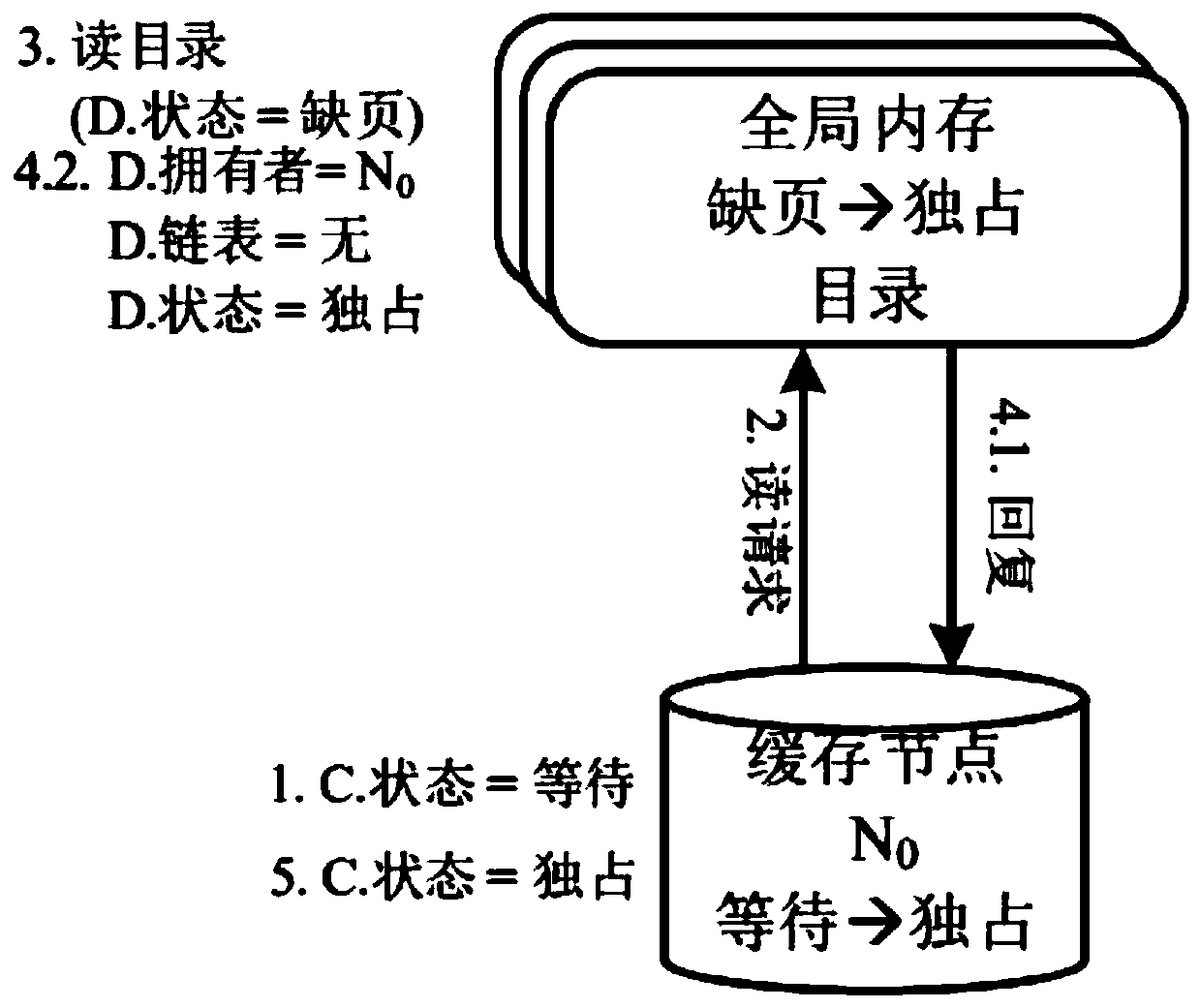

[0033] Referring to Figure 2, data accessed on the GPM should be extracted as a pag...
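The paragraph above is truncated, but the title indicates that GPM data is managed at page granularity. Assuming that accesses fetch whole pages rather than individual bytes, a minimal sketch of such a read path might look like this. The page size of 4096 bytes and the `fetch_page` callback (standing in for the remote transfer from the owning node) are assumptions; the source specifies neither.

```python
from typing import Callable, Dict

PAGE_SIZE = 4096  # assumed page size; the source does not state one


class PageCache:
    """Serve byte-range reads from whole pages fetched on demand."""

    def __init__(self, fetch_page: Callable[[int], bytes]) -> None:
        # fetch_page is a hypothetical stand-in for pulling one page
        # of the GPM from the node that owns it.
        self._fetch_page = fetch_page
        self._pages: Dict[int, bytes] = {}
        self.fetches = 0

    def _page(self, page_no: int) -> bytes:
        # Fetch a page only on first touch; later reads hit the local copy.
        if page_no not in self._pages:
            self._pages[page_no] = self._fetch_page(page_no)
            self.fetches += 1
        return self._pages[page_no]

    def read(self, addr: int, length: int) -> bytes:
        """Return `length` bytes starting at `addr`, touching every page in the range."""
        out = bytearray()
        end = addr + length
        while addr < end:
            page_no, offset = divmod(addr, PAGE_SIZE)
            chunk = min(end - addr, PAGE_SIZE - offset)
            out += self._page(page_no)[offset:offset + chunk]
            addr += chunk
        return bytes(out)


backing = bytes(i % 256 for i in range(4 * PAGE_SIZE))
cache = PageCache(lambda p: backing[p * PAGE_SIZE:(p + 1) * PAGE_SIZE])
data = cache.read(4090, 10)  # spans pages 0 and 1, so two page fetches
```

Fetching whole pages amortizes the network round trip over every later access to the same page, which is the usual motivation for page-granular remote memory.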
