Convolutional neural network acceleration processing system and method based on FPGA, and terminal

A convolutional neural network acceleration processing system and method, applied in the field of convolutional neural network accelerated processing systems. The technology addresses three problems: large differences in parallelism, a mismatch between the computing characteristics and the deployed on-chip network architecture, and differing memory access characteristics. Its stated effect is improved acceleration efficiency.

Examples

Embodiment 1

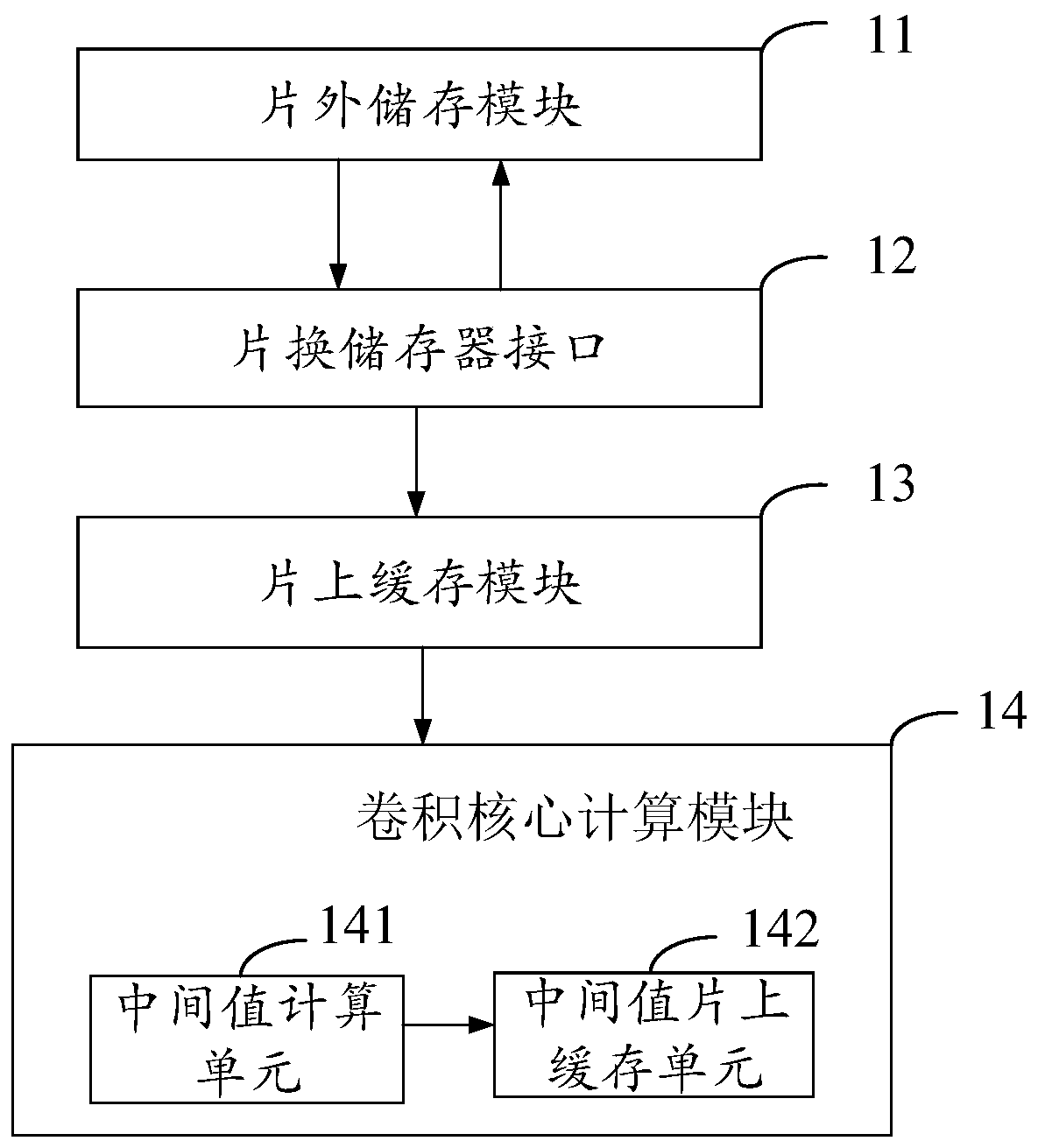

[0068] Embodiment 1: an FPGA-based convolutional neural network acceleration processing system; refer to Figure 7.

[0069] Based on the pipeline architecture, the system includes:

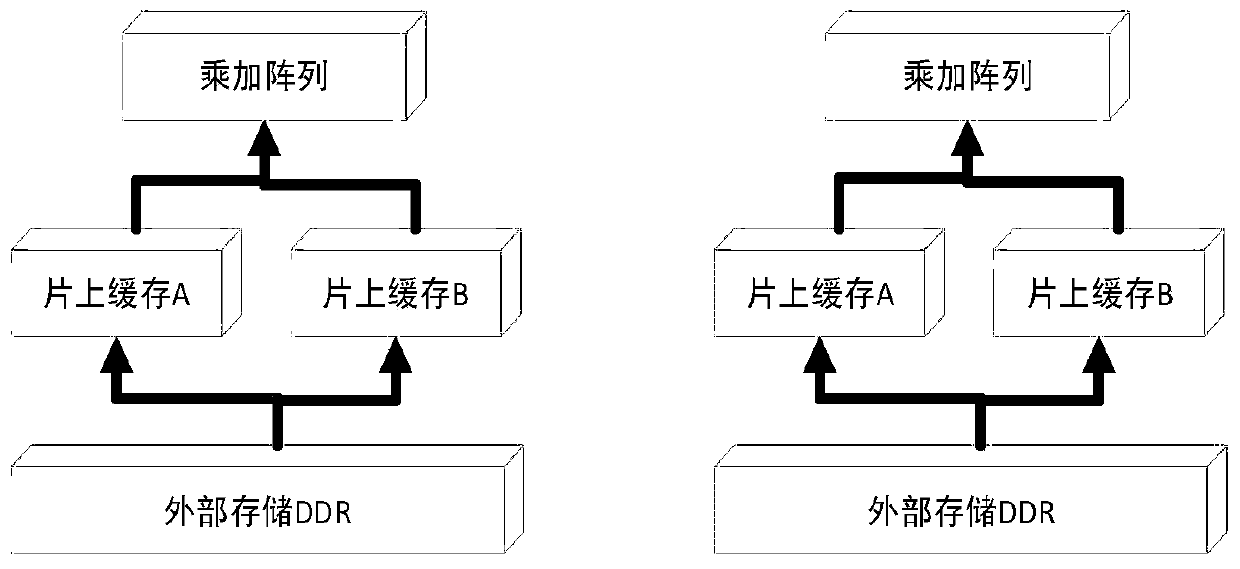

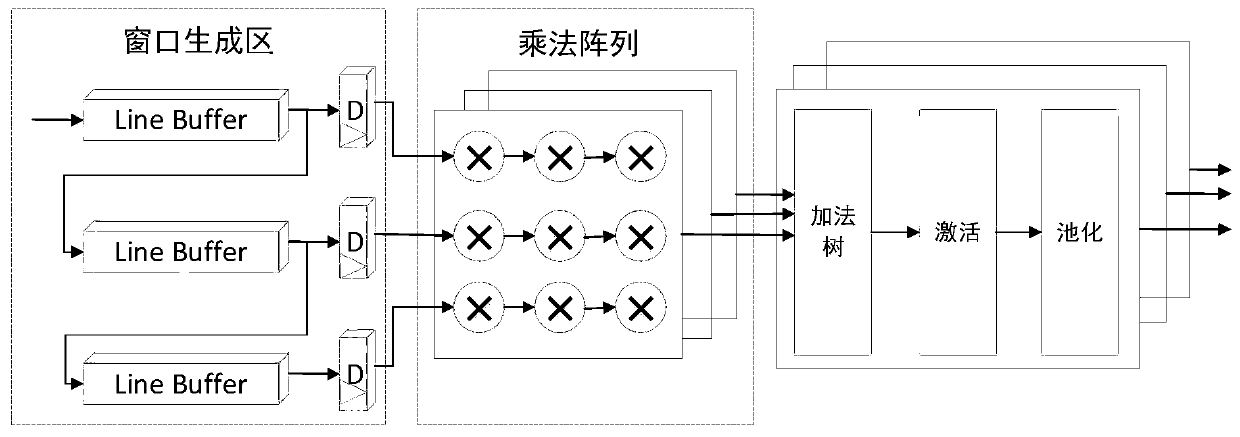

[0070] The system consists of off-chip DDR memory, a chip-swap memory interface, a direct memory access controller, a convolution calculation core engine, an input feature on-chip cache unit, a weight on-chip cache unit, an intermediate value on-chip cache unit, and a pipeline controller unit. In Figure 7, solid arrows denote data paths and dotted arrows denote control paths.
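To see why a pipeline controller that overlaps the stages of this architecture can raise throughput, a minimal timing model in Python is shown below. It makes several assumptions that are not in the patent. Each data tile passes through three stages: a DMA load into the on-chip caches, computation in the convolution core engine, and write-back of intermediate values. The stage latencies are hypothetical. On-chip buffering is assumed deep enough that no stage ever stalls on a full buffer. The function names are illustrative only.

```python
from typing import List


def sequential_cycles(n_tiles: int, stage_latencies: List[int]) -> int:
    """Cycles when each tile completes every stage before the next tile starts."""
    return n_tiles * sum(stage_latencies)


def pipelined_cycles(n_tiles: int, stage_latencies: List[int]) -> int:
    """Closed form for an ideal linear pipeline (no back-pressure assumed):
    the first tile fills the pipeline, then one tile completes every
    max(stage_latencies) cycles, so the slowest stage bounds throughput."""
    if n_tiles == 0:
        return 0
    return sum(stage_latencies) + (n_tiles - 1) * max(stage_latencies)


def simulate_pipeline(n_tiles: int, stage_latencies: List[int]) -> int:
    """Step-by-step check of the closed form. A stage starts a tile once the
    previous stage has finished that tile and the stage itself has finished
    the previous tile (assumes unbounded buffering between stages)."""
    finish_prev_tile = [0] * len(stage_latencies)
    for _ in range(n_tiles):
        ready = 0  # time at which this tile's previous stage finished
        finish = []
        for j, latency in enumerate(stage_latencies):
            start = max(ready, finish_prev_tile[j])
            ready = start + latency
            finish.append(ready)
        finish_prev_tile = finish
    return finish_prev_tile[-1]


# Hypothetical latencies in clock cycles: load, compute, store.
latencies = [100, 300, 50]
print(sequential_cycles(4, latencies), pipelined_cycles(4, latencies))
```

For four tiles, the sequential schedule takes 4 × 450 = 1800 cycles, while the overlapped schedule takes 450 + 3 × 300 = 1350 cycles. The model also shows that once the pipeline is full, the compute stage (the slowest stage here) sets the rate. This is consistent with the summary's concern about matching the on-chip architecture to the computing characteristics, though the patent's own scheduling details are not given in this excerpt.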

[0071] The off-chip DDR memory sends off-chip input data to the chip-swap memory interface to exchange data with the chip. The input feature on-chip cache unit reads the input feature map data of the convolutional neural network from the off-chip input data. The weight on-chip cache unit, which is connected to the input feature on-chip cache unit, reads the weight data corresponding to the...
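The data path described in [0071] can be sketched in plain Python as follows. First, a DMA-style copy moves a contiguous region of the off-chip DDR image into an on-chip cache. Then the convolution core reads the input feature map cache and the weight cache to produce an output, which would go to the intermediate value cache. This is a behavioral sketch under stated assumptions, not the patent's hardware design. The assumptions are a flat DDR address space, [C][H][W] and [K][C][R][S] layouts, stride 1, and no padding. `dma_load`, `reshape` and `conv2d_valid` are hypothetical names.

```python
from math import prod
from typing import List, Sequence

Tensor3 = List[List[List[float]]]
Tensor4 = List[List[List[List[float]]]]


def reshape(flat: Sequence[float], shape: Sequence[int]):
    """Nest a flat sequence into lists of the given shape (row-major)."""
    if len(shape) == 1:
        return list(flat)
    step = prod(shape[1:])
    return [reshape(flat[i * step:(i + 1) * step], shape[1:]) for i in range(shape[0])]


def dma_load(ddr: Sequence[float], offset: int, shape: Sequence[int]):
    """Hypothetical DMA transfer: copy prod(shape) consecutive words from the
    modeled DDR image, starting at offset, into an on-chip cache buffer."""
    return reshape(ddr[offset:offset + prod(shape)], shape)


def conv2d_valid(ifm: Tensor3, weights: Tensor4) -> Tensor3:
    """Direct convolution (stride 1, no padding).
    ifm:     [C][H][W]    contents of the input feature cache
    weights: [K][C][R][S] contents of the weight cache
    returns: [K][H-R+1][W-S+1] output feature map"""
    C, H, W = len(ifm), len(ifm[0]), len(ifm[0][0])
    K, R, S = len(weights), len(weights[0][0]), len(weights[0][0][0])
    out_h, out_w = H - R + 1, W - S + 1
    out = [[[0.0] * out_w for _ in range(out_h)] for _ in range(K)]
    for k in range(K):
        for y in range(out_h):
            for x in range(out_w):
                acc = 0.0  # multiply-accumulate over channels and kernel window
                for c in range(C):
                    for r in range(R):
                        for s in range(S):
                            acc += ifm[c][y + r][x + s] * weights[k][c][r][s]
                out[k][y][x] = acc
    return out


# Modeled DDR image: a 1x3x3 input feature map at offset 0,
# followed by one 1x2x2 kernel at offset 9.
ddr = [float(v) for v in range(1, 10)] + [1.0, 0.0, 0.0, 1.0]
ifm_cache = dma_load(ddr, 0, (1, 3, 3))
weight_cache = dma_load(ddr, 9, (1, 1, 2, 2))
print(conv2d_valid(ifm_cache, weight_cache))
```

Here the kernel sums each window's top-left and bottom-right values, so the output is [[[6.0, 8.0], [12.0, 14.0]]]. An FPGA implementation would unroll and pipeline some of these loops in hardware. This sketch only models the order in which data moves through the units and does not reflect the degree of parallelism.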

© 2025 PatSnap. All rights reserved.