Infrared dim and small target detection method and detection system

A detection method and technology for dim and small targets, applied in the field of image processing, which addresses problems such as poor real-time performance and the limitations of existing approaches.

Examples

Embodiment 1

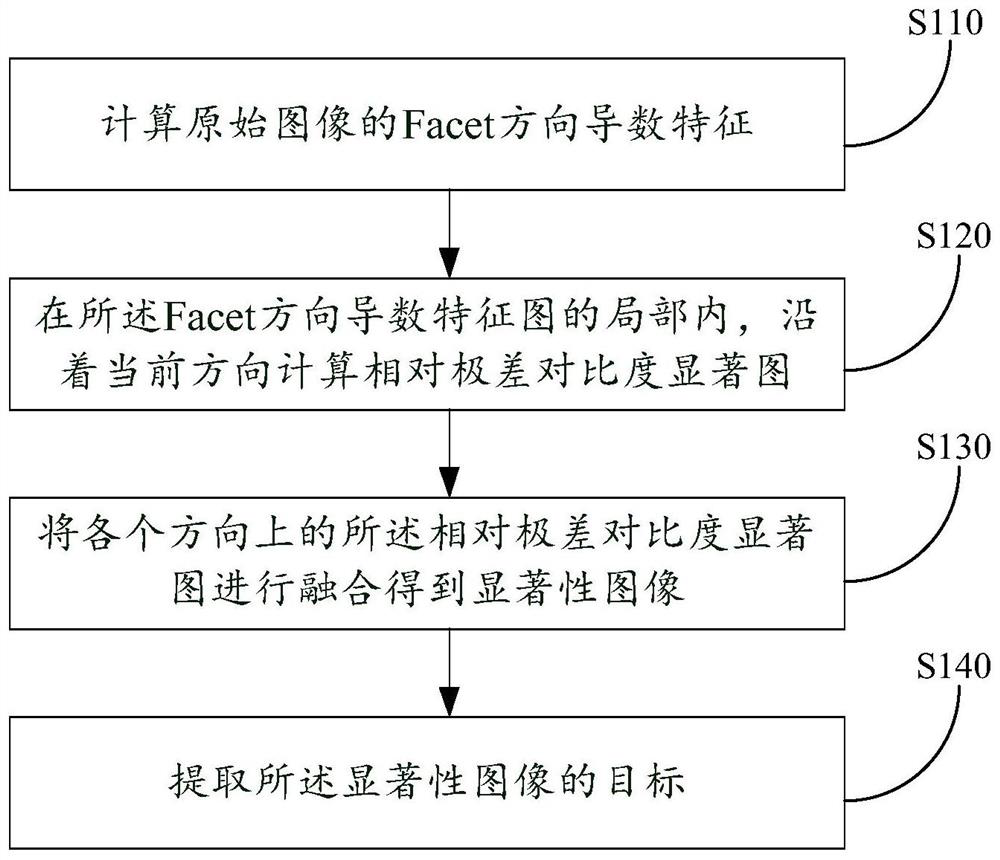

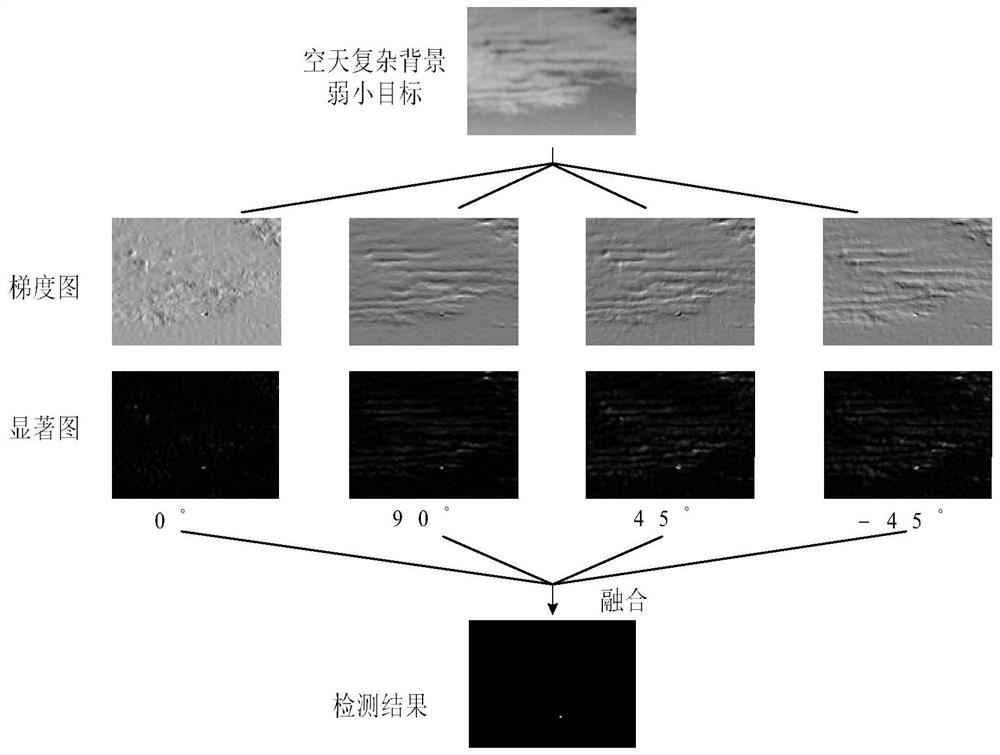

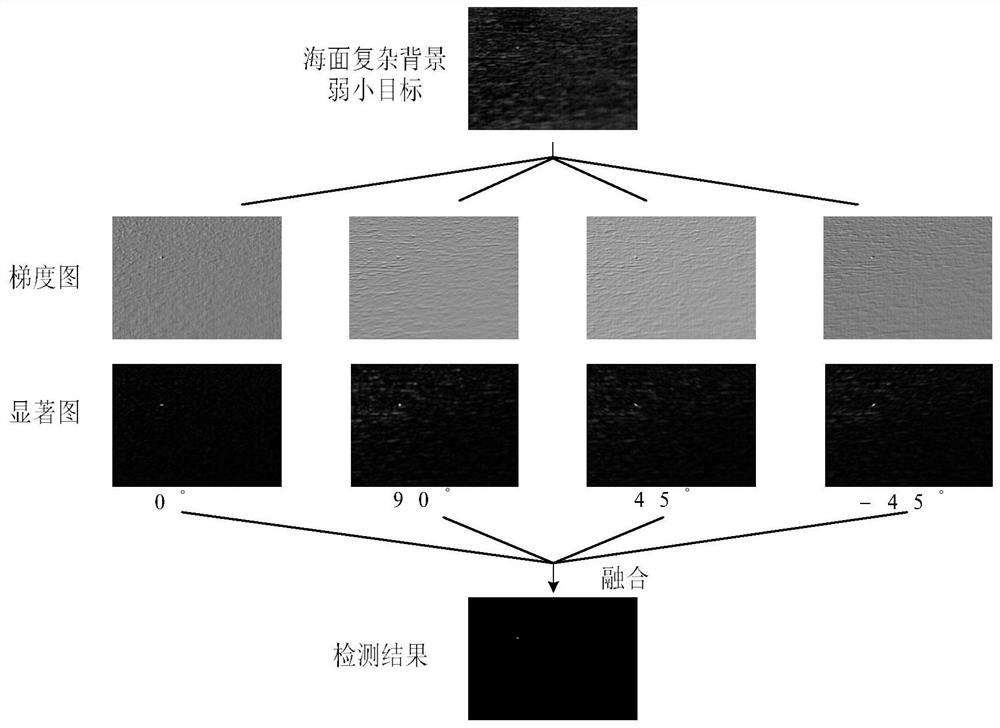

[0056] Referring to Figure 1, the infrared dim and small target detection method provided in Embodiment 1 of the present invention comprises the following steps:

[0057] Step S110: Calculate the Facet directional derivative features of the original image.

[0058] In some preferred embodiments, the step of calculating the Facet directional derivative features of the original image includes the following steps:

[0059] Based on the Facet model, a bivariate cubic polynomial f(r,c) is fitted to the gray-level intensity surface in the 5x5 neighborhood of the original image. The expression is as follows, where r and c are the row and column coordinates in the 5x5 neighborhood and K_i are the fitting coefficients:

[0060]
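For orientation, the bivariate cubic polynomial of the Facet model is commonly written in the form below. This is the widely used textbook form, given here as an assumption; the coefficient ordering and indexing may differ from the expression in the patent.

```latex
f(r,c) = K_1 + K_2 r + K_3 c + K_4 r^2 + K_5 r c + K_6 c^2
       + K_7 r^3 + K_8 r^2 c + K_9 r c^2 + K_{10} c^3
```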

[0061] The formulas for the directional derivative features in the 0-degree direction, the 90-degree direction, and an arbitrary direction α are:

[0062]

[0063] where K_i can be quickly calculated from the original image I and the convolution kernel w_i ...
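The idea that each coefficient K_i comes from filtering the image with a fixed 5x5 kernel w_i can be sketched as follows. This is a minimal illustration, not the patent's implementation: the monomial ordering (1, r, c, r², rc, c², r³, r²c, rc², c³), the least-squares derivation of the kernels, the edge padding, and the first-order derivative convention K_2·sin α + K_3·cos α evaluated at the neighborhood center are all assumptions, and the function names are hypothetical.

```python
import numpy as np

def facet_kernels(size=5):
    """Least-squares kernels w_i such that K_i = sum(w_i * patch)."""
    half = size // 2
    # Row/column coordinates of the neighborhood, centered at (0, 0).
    r, c = np.meshgrid(np.arange(-half, half + 1),
                       np.arange(-half, half + 1), indexing="ij")
    r = r.ravel().astype(float)
    c = c.ravel().astype(float)
    # Assumed monomial order: 1, r, c, r^2, rc, c^2, r^3, r^2 c, r c^2, c^3.
    A = np.stack([np.ones_like(r), r, c, r * r, r * c, c * c,
                  r ** 3, r * r * c, r * c * c, c ** 3], axis=1)  # (25, 10)
    # Each row of the pseudo-inverse is one kernel, flattened.
    return np.linalg.pinv(A).reshape(10, size, size)

def fit_coefficients(image, kernels):
    """Return the K_i maps, shape (10, H, W), for every pixel of the image."""
    size = kernels.shape[-1]
    half = size // 2
    # Edge padding is an assumption; border pixels are only approximate.
    padded = np.pad(image.astype(float), half, mode="edge")
    windows = np.lib.stride_tricks.sliding_window_view(padded, (size, size))
    # Kernels are applied without flipping (correlation form).
    return np.einsum("hwij,kij->khw", windows, kernels)

def directional_derivative(K, alpha_deg):
    """First-order derivative at the neighborhood center (assumed convention)."""
    a = np.deg2rad(alpha_deg)
    return K[1] * np.sin(a) + K[2] * np.cos(a)

if __name__ == "__main__":
    rows, cols = np.mgrid[0:12, 0:12]
    image = 2.0 * rows + 3.0 * cols          # synthetic ramp image
    K = fit_coefficients(image, facet_kernels())
    print(directional_derivative(K, 0.0)[6, 6])   # column-direction slope
    print(directional_derivative(K, 90.0)[6, 6])  # row-direction slope
```

Because the kernels depend only on the neighborhood size, they can be computed once and reused for every frame, which is consistent with the fast calculation the text describes.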

Embodiment 2

[0100] Referring to Figure 4, the infrared dim target detection system provided by the present invention comprises: a feature extraction module 110, which calculates the Facet directional derivative features of the original image; a saliency value acquisition module 120, which calculates, in a local region of each Facet directional derivative feature map, the relative range contrast saliency map along the current direction; an image fusion module 130, which fuses the relative range contrast saliency maps of all directions to obtain a saliency image; and a target extraction module 140, which extracts the target from the saliency image.

[0101] The operation of each module is described in detail below.

[0102] In some preferred embodiments, the feature extraction module 110 operates as follows: based on the Facet model, a bivariate cubic polynomial f(r,c) is fitted to the gray-level intensity surface in the 5x5 neighborhood of the original image. The expression is as follows, where r and...
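The four-module structure can be sketched as a simple pipeline. This only illustrates how modules 110 to 140 are chained; the class name and all four stage functions are hypothetical placeholders (finite differences, absolute value, summation, and a mean-plus-k-sigma threshold) and do not reproduce the Facet features, the relative range contrast measure, the fusion rule, or the target extraction described in the patent.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Sequence
import numpy as np

Array = np.ndarray

@dataclass
class DimTargetDetector:
    extract_features: Callable[[Array], Dict[float, Array]]  # module 110
    saliency: Callable[[Array, float], Array]                # module 120
    fuse: Callable[[Sequence[Array]], Array]                 # module 130
    extract_targets: Callable[[Array], Array]                # module 140

    def __call__(self, image: Array) -> Array:
        # One feature map per direction, keyed by the direction in degrees.
        feature_maps = self.extract_features(image)
        # One saliency map per direction, computed along that direction.
        saliency_maps = [self.saliency(fmap, direction)
                         for direction, fmap in feature_maps.items()]
        fused = self.fuse(saliency_maps)
        return self.extract_targets(fused)

def placeholder_features(image):
    # Placeholder for module 110: plain finite differences, NOT Facet features.
    d_row, d_col = np.gradient(image.astype(float))
    return {0.0: d_col, 90.0: d_row}

def placeholder_saliency(feature_map, direction_deg):
    # Placeholder for module 120: magnitude only; the direction is unused here.
    return np.abs(feature_map)

def placeholder_fuse(maps):
    # Placeholder for module 130: element-wise sum over directions.
    return np.sum(list(maps), axis=0)

def placeholder_threshold(saliency_image, k=3.0):
    # Placeholder for module 140: global mean + k * std threshold.
    return saliency_image > saliency_image.mean() + k * saliency_image.std()

if __name__ == "__main__":
    detector = DimTargetDetector(placeholder_features, placeholder_saliency,
                                 placeholder_fuse, placeholder_threshold)
    frame = np.zeros((21, 21))
    frame[10, 10] = 1.0                    # synthetic point-like target
    mask = detector(frame)
    print(mask.shape, int(mask.sum()))
```

Keeping each module behind a plain callable lets any one stage be replaced without touching the others, which mirrors the modular description of the system.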
