Neural network acceleration coprocessor, processing system and processing method

A coprocessor and neural network technology in the fields of artificial intelligence and chip design. It addresses problems such as low computing efficiency, and its stated benefits are fast read and write speed, improved scalability, and simple algorithms.


Embodiment approach

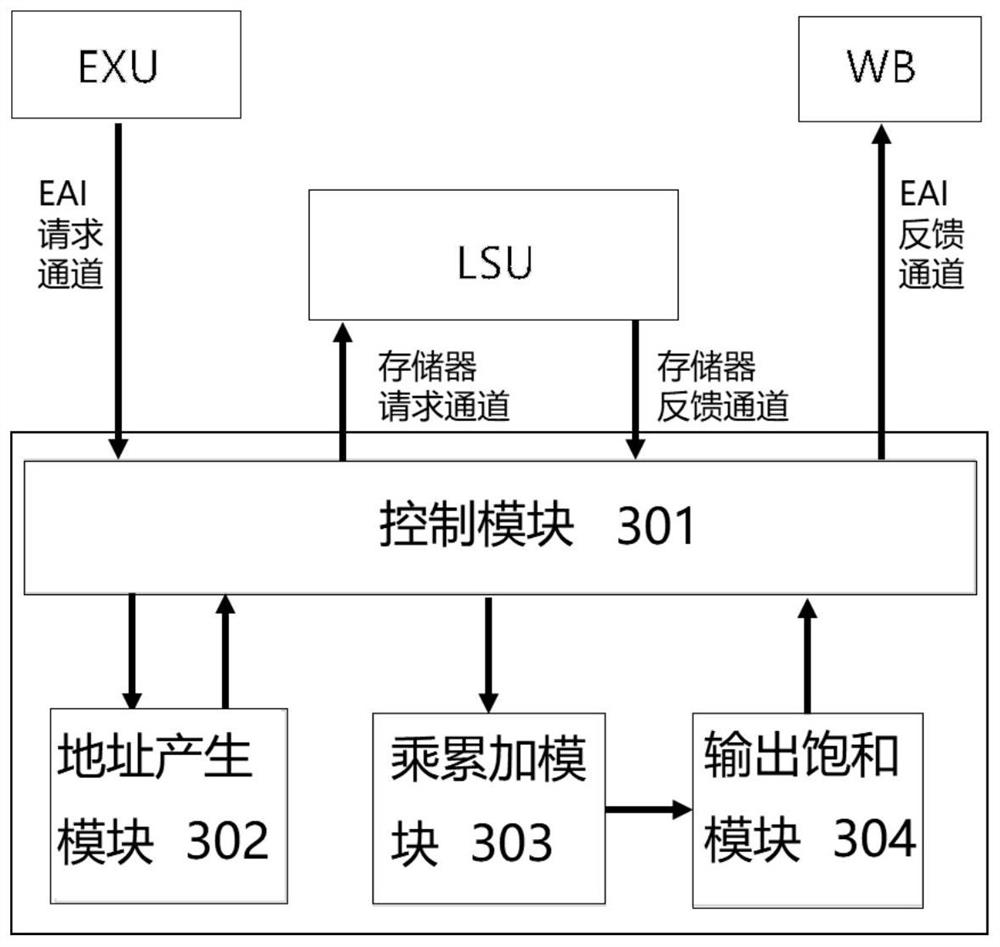

[0053] As shown in Figure 2, an embodiment of one aspect of the present invention provides a neural network acceleration coprocessor comprising a control module 301, an address generation module 302, a multiply-accumulate module 303, and an output saturation module 304.

[0054] The address generation module 302 matches storage addresses to the input data and to the corresponding output data.
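The address matching in [0054] can be illustrated with a small Python sketch. The patent does not specify a memory layout, so this assumes a single-channel feature map stored row-major with one element per address, a square kernel, and no padding; the function name `conv_addresses` and its parameters are hypothetical. For each output element it yields the output address together with the input addresses that feed it.

```python
from typing import Iterator, List, Tuple


def conv_addresses(in_base: int, out_base: int, in_w: int, k: int,
                   out_h: int, out_w: int, stride: int = 1
                   ) -> Iterator[Tuple[int, List[int]]]:
    """Yield (output_address, input_addresses) for every output element.

    Hypothetical model: row-major single-channel map, one element per
    address, k x k kernel, no padding. Not the patent's actual scheme.
    """
    for oy in range(out_h):
        for ox in range(out_w):
            # Output elements are packed row-major starting at out_base.
            out_addr = out_base + oy * out_w + ox
            # Input window for this output, scanned kernel-row by kernel-row.
            in_addrs = [in_base + (oy * stride + ky) * in_w + (ox * stride + kx)
                        for ky in range(k) for kx in range(k)]
            yield out_addr, in_addrs
```

For a 4x4 input at address 0 and a 3x3 kernel, the first output (at address 100) is matched to input addresses 0, 1, 2, 4, 5, 6, 8, 9, 10.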

[0055] The multiply-accumulate module 303 performs the neural network convolution operations.
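The convolution in [0055] reduces to repeated multiply-accumulate steps over a sliding window. The following Python sketch assumes integer operands, stride 1 by default, and no padding; `mac` and `conv2d_valid` are hypothetical names. As in most neural network frameworks, the "convolution" is computed as cross-correlation (the kernel is not flipped).

```python
from typing import List


def mac(activations: List[int], weights: List[int], acc: int = 0) -> int:
    """Multiply-accumulate: return acc + sum(a_i * w_i)."""
    if len(activations) != len(weights):
        raise ValueError("operand length mismatch")
    for a, w in zip(activations, weights):
        acc += a * w
    return acc


def conv2d_valid(image: List[List[int]], kernel: List[List[int]],
                 stride: int = 1) -> List[List[int]]:
    """2-D cross-correlation with no padding, built from MAC operations."""
    k = len(kernel)
    flat_w = [w for row in kernel for w in row]
    out_h = (len(image) - k) // stride + 1
    out_w = (len(image[0]) - k) // stride + 1
    out = []
    for oy in range(out_h):
        row = []
        for ox in range(out_w):
            # Gather the k x k window in the same order as flat_w.
            window = [image[oy * stride + ky][ox * stride + kx]
                      for ky in range(k) for kx in range(k)]
            row.append(mac(window, flat_w))
        out.append(row)
    return out
```

Applying the 2x2 kernel `[[1, 0], [0, 1]]` to the 3x3 image `[[1, 2, 3], [4, 5, 6], [7, 8, 9]]` gives `[[6, 8], [12, 14]]`.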

[0056] The output saturation module 304 limits the output data to a valid range and outputs the operation results.
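Range limiting as described in [0056] is commonly implemented as saturation: values above the representable maximum are clamped to the maximum, and values below the minimum are clamped to the minimum. The sketch below assumes an 8-bit output and a right-shift rescaling of the wide accumulator before clamping; both are common fixed-point choices but are assumptions here, since the patent text only says the module limits the range. `saturate` and `requantize` are hypothetical names.

```python
def saturate(value: int, bits: int = 8, signed: bool = True) -> int:
    """Clamp value to the range of a bits-wide integer."""
    if signed:
        lo, hi = -(1 << (bits - 1)), (1 << (bits - 1)) - 1
    else:
        lo, hi = 0, (1 << bits) - 1
    return max(lo, min(hi, value))


def requantize(acc: int, shift: int, bits: int = 8) -> int:
    """Scale a wide accumulator down by an arithmetic right shift, then saturate.

    The shift-then-clamp step is an assumed fixed-point pattern.
    """
    return saturate(acc >> shift, bits)
```

For example, an accumulator value of 1000 shifted right by 2 becomes 250, which saturates to 127 in signed 8-bit range.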

[0057] The control module 301 receives the extended instructions sent by the main processor. According to an extended instruction, it controls the address generation module to match the addresses of the input data and the corresponding output data, reads data from the memory at the matched addresses, and controls the multiply-accumulate module to read Perform convolu...
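The control flow in [0057] (decode an extended instruction, generate addresses, read memory, multiply-accumulate, saturate, write back) can be modeled end to end in a toy Python simulation. The patent does not publish its instruction format, so every field of the hypothetical `ConvInstr` record, as well as the stride-1, no-padding, row-major, signed 8-bit assumptions, is illustrative only. `Coprocessor` is likewise a hypothetical class, not the patented design.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ConvInstr:
    """Hypothetical fields of one 'extended instruction' for a convolution."""
    in_base: int    # address of the first input element
    w_base: int     # address of the first kernel weight
    out_base: int   # address where outputs are written
    in_h: int       # input height
    in_w: int       # input width
    k: int          # square kernel size
    shift: int      # right shift applied before saturation


class Coprocessor:
    """Toy model: control -> address generation -> MAC -> saturation."""

    def __init__(self, memory: List[int]):
        self.mem = memory  # shared memory, one integer per address

    @staticmethod
    def _saturate(v: int) -> int:
        return max(-128, min(127, v))  # signed 8-bit output range (assumed)

    def execute(self, ins: ConvInstr) -> None:
        out_h = ins.in_h - ins.k + 1  # stride 1, no padding (assumed)
        out_w = ins.in_w - ins.k + 1
        weights = self.mem[ins.w_base:ins.w_base + ins.k * ins.k]
        for oy in range(out_h):
            for ox in range(out_w):
                # Address generation: output address and its input window.
                out_addr = ins.out_base + oy * out_w + ox
                in_addrs = [ins.in_base + (oy + ky) * ins.in_w + (ox + kx)
                            for ky in range(ins.k) for kx in range(ins.k)]
                # Multiply-accumulate over data read from memory.
                acc = sum(self.mem[a] * w for a, w in zip(in_addrs, weights))
                # Output saturation, then write the result back to memory.
                self.mem[out_addr] = self._saturate(acc >> ins.shift)
```

In this model the main processor only places data in memory and issues a single `ConvInstr`; the coprocessor then walks all output positions without further intervention, which mirrors the division of labor the paragraph describes.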
