Federated learning computation offloading system and method based on cloud-edge-end architecture

A computation offloading technology for cloud-edge-end systems, applied in fields such as computing, program control design, and program loading/starting. It addresses problems including limited wireless and computing capabilities, the data volume of offloaded tasks, the difficulty of making accurate computation offloading decisions, and the inability to provide services on terminals.

Examples

Embodiment

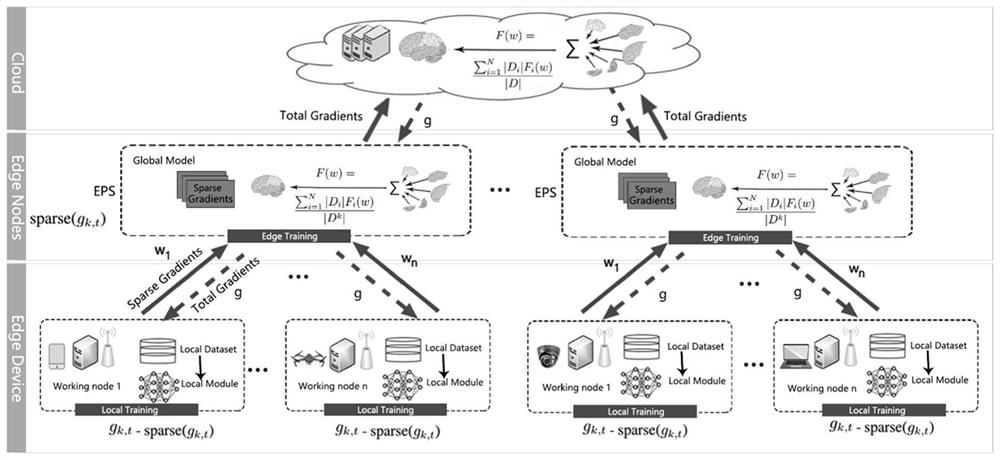

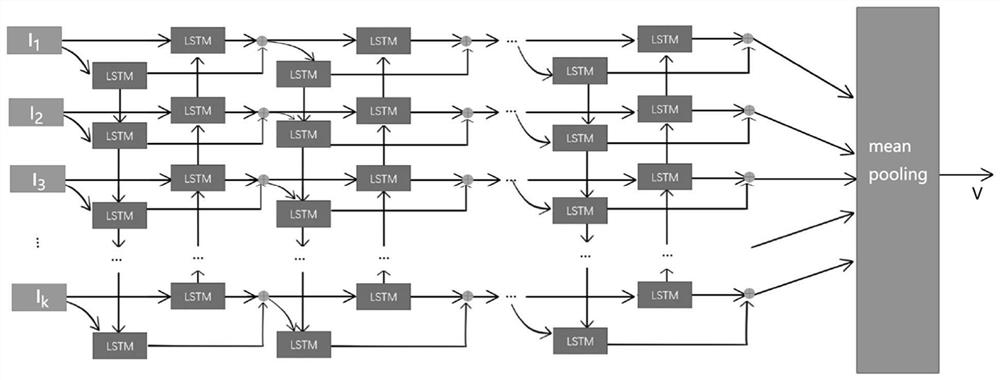

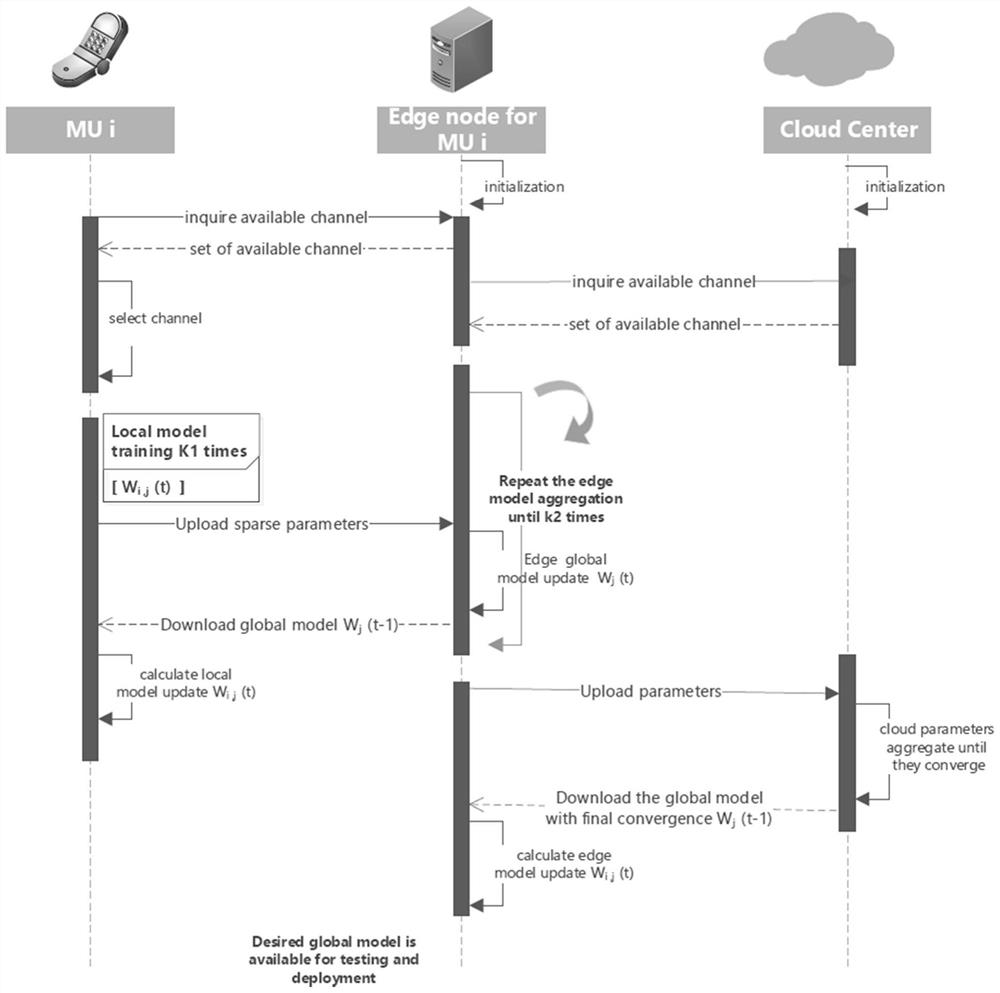

[0091] First, a BiLSTM model is trained on each local device (client) using historical offloading tasks, and a global model is formed by aggregation on the edge server and the cloud server. When the next offloading task arrives, the aggregated global model is used to predict the task, and the predicted output guides computation offloading decisions and resource allocation. During training, the gradient data is compressed by a sparsification method before each upload, which greatly reduces communication overhead, speeds up model convergence, and lowers the complexity of offloading decisions and resource allocation.
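The upload-and-aggregate step described in [0091] can be illustrated with a minimal sketch. The excerpt does not state which sparsification rule or aggregation formula is used, so the sketch assumes top-k magnitude sparsification and sample-count-weighted averaging (in the style of FedAvg) at both the edge and cloud tiers. The names `top_k_sparsify`, `aggregate`, and `hierarchical_aggregate` are hypothetical, and gradients are plain Python lists rather than BiLSTM parameters.

```python
from typing import Dict, List, Tuple

Sparse = Dict[int, float]  # index -> retained gradient value


def top_k_sparsify(grad: List[float], k: int) -> Sparse:
    """Keep only the k largest-magnitude entries (assumed rule, not from the source)."""
    if k <= 0:
        return {}
    order = sorted(range(len(grad)), key=lambda i: abs(grad[i]), reverse=True)
    return {i: grad[i] for i in order[:k]}


def aggregate(updates: List[Tuple[Sparse, int]], dim: int) -> List[float]:
    """Average sparse updates, weighting each by its sample count (assumed weighting)."""
    total = sum(n for _, n in updates)
    out = [0.0] * dim
    for sparse, n in updates:
        for i, value in sparse.items():
            out[i] += value * n / total
    return out


def hierarchical_aggregate(
    edges: List[List[Tuple[List[float], int]]], k: int, dim: int
) -> List[float]:
    """Clients upload sparse gradients to their edge server; edges forward to the cloud."""
    edge_updates: List[Tuple[Sparse, int]] = []
    for clients in edges:
        # Each client compresses its gradient before upload.
        sparse_updates = [(top_k_sparsify(g, k), n) for g, n in clients]
        edge_grad = aggregate(sparse_updates, dim)
        edge_samples = sum(n for _, n in clients)
        # The edge forwards only its non-zero entries to the cloud.
        edge_sparse = {i: v for i, v in enumerate(edge_grad) if v != 0.0}
        edge_updates.append((edge_sparse, edge_samples))
    return aggregate(edge_updates, dim)
```

With `k` much smaller than the gradient dimension, each client uploads only `k` index-value pairs per round instead of the full vector, which is where the communication saving comes from. Practical schemes often also accumulate the discarded entries locally for later rounds; that refinement is omitted here.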

[0092] The present invention establishes a complete method spanning model training, prediction, and communication optimization, and can ultimately solve computation offloading and resource allocation quickly. The framework we consider can correspond to a static IoT network under the current 5G-driven MEC network, wher...
