Indoor mobile robot visual positioning method based on convolutional neural network

Combines convolutional neural networks with mobile robot technology (classified under neural learning methods, biological neural network models, neural architectures, etc.). It addresses problems such as heavy computational load and high sensitivity to the environment, and achieves a small computational load, a wider range of application, and improved reliability and robustness.


Embodiment Construction

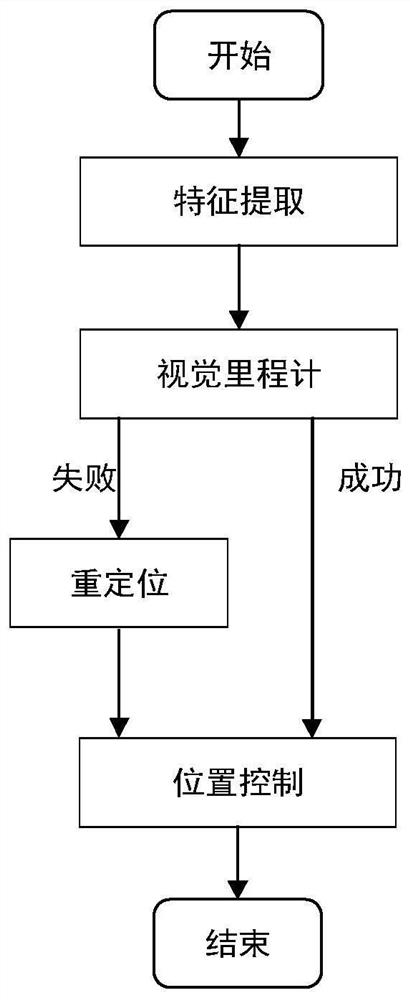

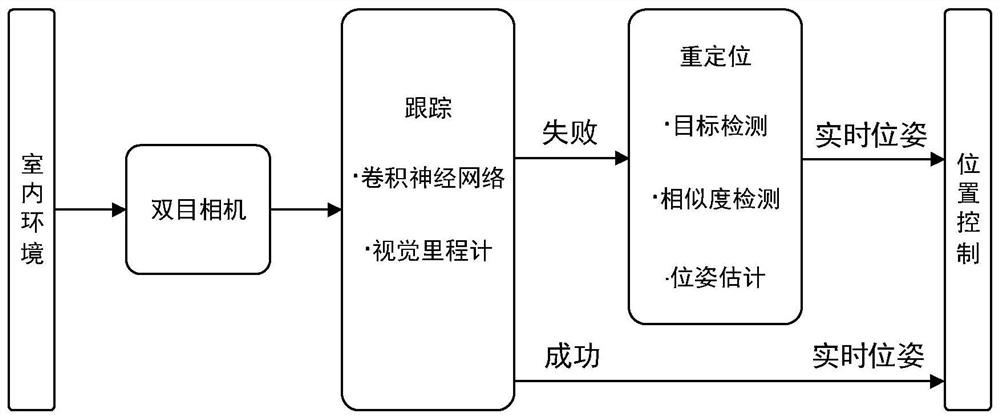

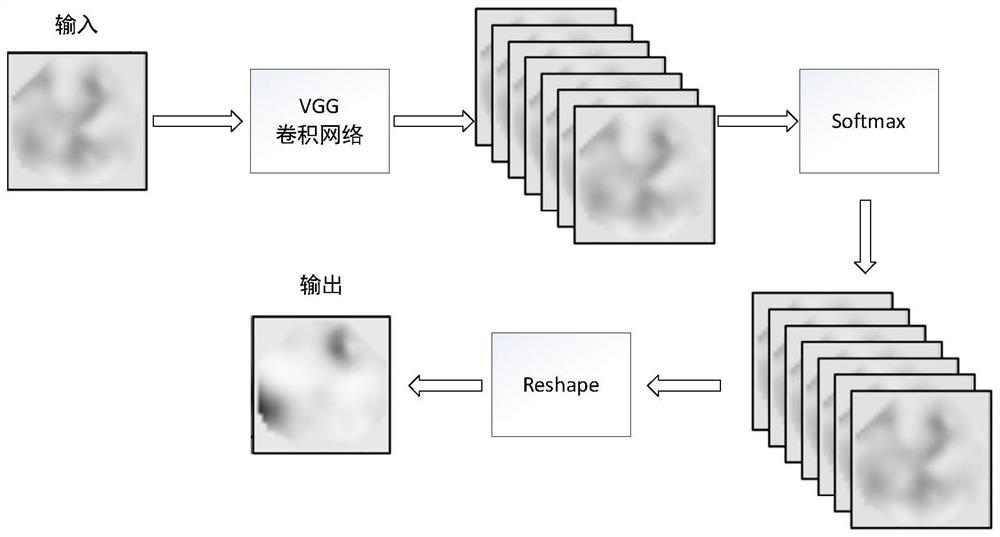

[0041] In the convolutional-neural-network-based visual positioning method for an indoor mobile robot of the present invention, binocular images are collected by a binocular camera to realize positioning and control of the robot. A convolutional neural network is used to extract feature points from the binocular images, the bundle adjustment (BA) method is used for image tracking, and a target detection algorithm is used to determine the image pose when tracking fails. The image pose is then used as the control signal of the robot to control its position. The invention overcomes the sensitivity of image-based positioning to environmental changes (such as changes in illumination conditions) and achieves robust positioning and control of indoor mobile robots in GPS-denied environments.
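The pipeline described in [0041] can be sketched in Python as below. This is a minimal illustration under stated assumptions, not the patented implementation: it assumes a rectified stereo pair with pinhole intrinsics, uses standard stereo triangulation for the binocular depth step, and computes the reprojection cost that bundle adjustment minimises. The CNN feature extractor and the target detector are not shown; they are modelled as hypothetical `track` and `relocalize` callables. The proportional go-to-goal controller is a swapped-in example of "using the pose as a control signal", and all class names, function names, thresholds, and gains are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Callable, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Pose2D = Tuple[float, float, float]  # hypothetical (x, y, yaw) of the robot in the map frame


@dataclass
class StereoCamera:
    """Rectified pinhole stereo rig (hypothetical parameters)."""
    fx: float
    fy: float
    cx: float
    cy: float
    baseline: float  # metres between left and right optical centres


def triangulate(cam: StereoCamera, u_l: float, v_l: float, u_r: float) -> Optional[Vec3]:
    """Back-project a left/right feature match to a 3D point in the left-camera frame."""
    disparity = u_l - u_r
    if disparity <= 0.0:
        return None  # invalid match or point at infinity
    z = cam.fx * cam.baseline / disparity  # depth from disparity: Z = f * B / d
    return ((u_l - cam.cx) * z / cam.fx, (v_l - cam.cy) * z / cam.fy, z)


def project(cam: StereoCamera, p: Vec3) -> Tuple[float, float]:
    """Pinhole projection of a camera-frame point into left-image pixels."""
    x, y, z = p
    return (cam.fx * x / z + cam.cx, cam.fy * y / z + cam.cy)


def reprojection_cost(
    cam: StereoCamera,
    rotation: Sequence[Sequence[float]],
    translation: Sequence[float],
    points: Sequence[Vec3],
    observations: Sequence[Tuple[float, float]],
) -> float:
    """Sum of squared reprojection errors: the cost BA minimises over camera poses
    (and, in full BA, over the 3D points as well)."""
    cost = 0.0
    for p, (u, v) in zip(points, observations):
        # Transform the map point into the camera frame: p_c = R p + t.
        pc = tuple(sum(rotation[i][j] * p[j] for j in range(3)) + translation[i] for i in range(3))
        pu, pv = project(cam, pc)
        cost += (pu - u) ** 2 + (pv - v) ** 2
    return cost


def localize(
    frame: object,
    track: Callable[[object], Tuple[Optional[Pose2D], int]],
    relocalize: Callable[[object], Optional[Pose2D]],
    min_inliers: int = 30,
) -> Optional[Pose2D]:
    """Use BA-based tracking; fall back to detection-based relocalization when it fails."""
    pose, inliers = track(frame)
    if pose is None or inliers < min_inliers:
        return relocalize(frame)
    return pose


def control_command(pose: Pose2D, goal: Pose2D, k_lin: float = 0.5, k_ang: float = 1.0) -> Tuple[float, float]:
    """Proportional controller mapping the estimated pose to (linear, angular) velocity."""
    dx, dy = goal[0] - pose[0], goal[1] - pose[1]
    heading = math.atan2(dy, dx)
    # Wrap the heading error into (-pi, pi].
    err = math.atan2(math.sin(heading - pose[2]), math.cos(heading - pose[2]))
    return (k_lin * math.hypot(dx, dy), k_ang * err)
```

In this sketch, tracking failure is judged by a low inlier count after optimisation; the actual failure criterion used by the invention is not stated in this section.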

[0042] The technical solutions of the present invention will be described in detail below in conjunction with the accompanying drawings and specific embodiments.

[00...
