Micro-expression recognition method based on adaptive motion amplification and convolutional neural network

This method belongs to the field of image processing and combines a convolutional neural network with motion amplification technology. It addresses problems in amplification results, namely neglect, excessive blurring, and noise. It is reported to achieve good amplification, good recognition performance, and reliable recognition accuracy.


AI Technical Summary

Problems solved by technology

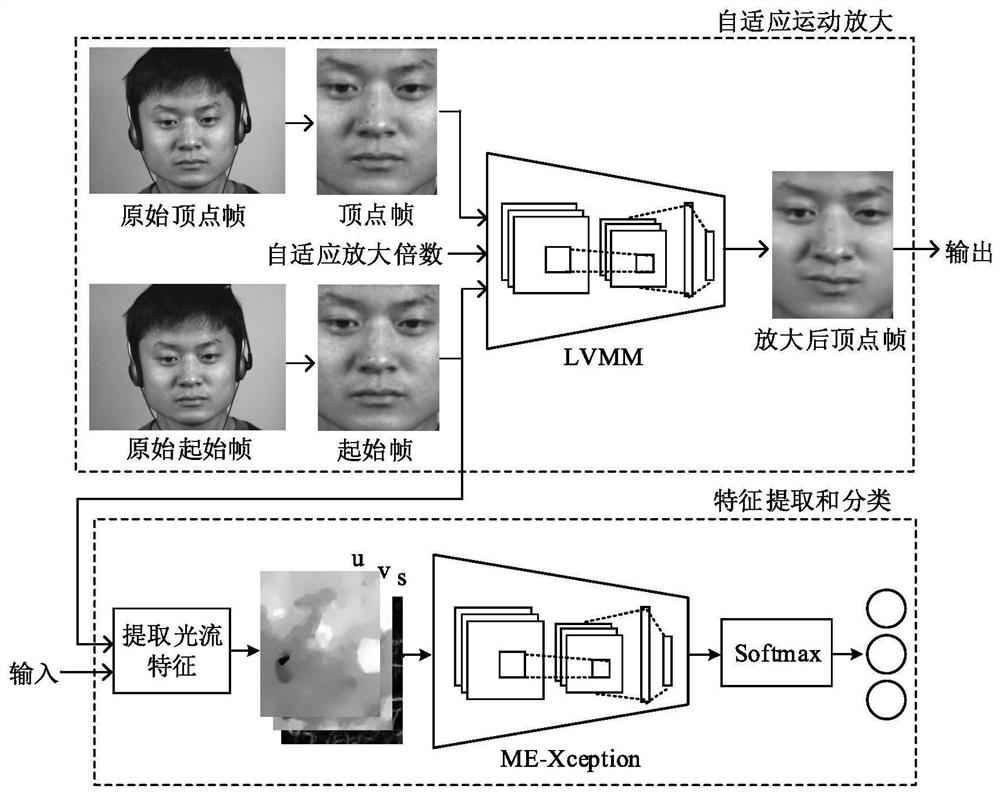

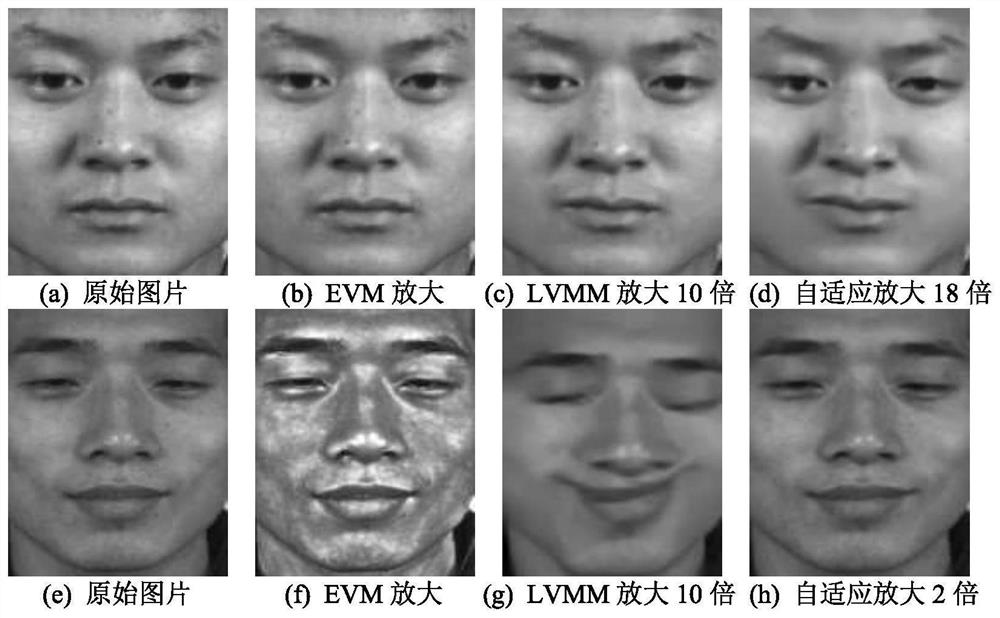

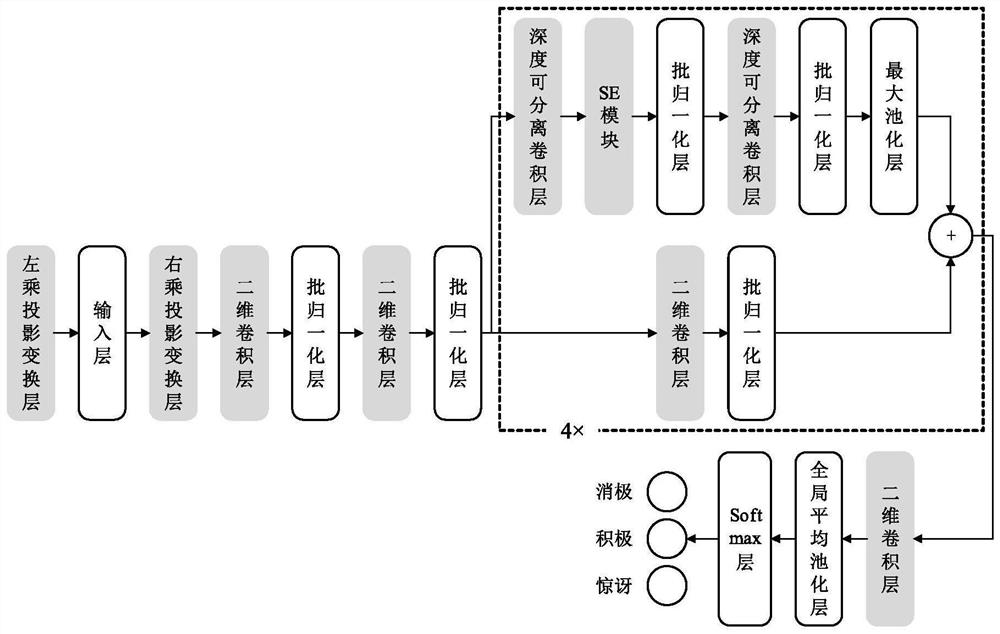


Examples

Embodiment 1

[0045] The micro-expression recognition method based on adaptive motion amplification and convolutional neural network according to this embodiment specifically includes the following steps:

[0046] Step 1: Convert the micro-expression video into an image sequence sample and perform face cropping and alignment. In this embodiment, the Dlib face detector is used together with OpenCV to detect faces in the image sequence. Facial key points are detected only on the first frame of the sequence, and these key points are then used to crop and enlarge the face region in all frames of the image sequence;
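The key idea of Step 1 is that one crop box, computed from the first frame's key points, is applied unchanged to every frame, so the face stays registered across the sequence. A minimal sketch, assuming the landmarks have already been obtained on the first frame (for example with `dlib.get_frontal_face_detector()` and a `dlib.shape_predictor` model) and are passed in as an `(N, 2)` array of `(x, y)` points. The function name, the `margin` padding, and the nearest-neighbour enlargement to `out_size` are hypothetical choices for illustration, not the patent's exact procedure.

```python
import numpy as np


def crop_sequence_from_first_frame(frames, landmarks, margin=0.1, out_size=None):
    """Crop all frames with one box derived from first-frame landmarks.

    frames:    array of shape (T, H, W) or (T, H, W, C)
    landmarks: (N, 2) array of (x, y) key points detected on frames[0]
    margin:    hypothetical fractional padding around the landmark box
    out_size:  optional (height, width) to enlarge the crop to (nearest neighbour)
    """
    frames = np.asarray(frames)
    pts = np.asarray(landmarks, dtype=float)
    x0, y0 = pts.min(axis=0)
    x1, y1 = pts.max(axis=0)
    pad_x = margin * (x1 - x0)
    pad_y = margin * (y1 - y0)
    h, w = frames.shape[1:3]

    # The same box is used for every frame, clipped to the image bounds.
    left = max(int(np.floor(x0 - pad_x)), 0)
    top = max(int(np.floor(y0 - pad_y)), 0)
    right = min(int(np.ceil(x1 + pad_x)) + 1, w)
    bottom = min(int(np.ceil(y1 + pad_y)) + 1, h)
    crop = frames[:, top:bottom, left:right]

    if out_size is not None:
        # Simple nearest-neighbour enlargement; cv2.resize would be the usual choice.
        oh, ow = out_size
        rows = (np.arange(oh) * crop.shape[1] / oh).astype(int)
        cols = (np.arange(ow) * crop.shape[2] / ow).astype(int)
        crop = crop[:, rows][:, :, cols]
    return crop
```

Detecting landmarks once rather than per frame avoids crop-box jitter between frames, which would otherwise be indistinguishable from the very small facial motions that micro-expression analysis depends on.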

[0047] Step 2: Read the start (onset) frame of the micro-expression image sequence and use the apex frame spotting algorithm to compute the apex frame;

[0048] In this embodiment, the start (onset) frame is the first image frame of the micro-expression image sequence, and the apex frame is the frame with the highest expression intensity in the sequence. The present invention only use...
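The source does not specify its apex frame spotting algorithm. As a point of reference, the sketch below uses a common simple baseline, stated here as an assumption: score each frame by its mean absolute intensity difference from the onset frame and take the highest-scoring frame as the apex. The function name `locate_apex_frame` is hypothetical.

```python
import numpy as np


def locate_apex_frame(frames, onset_index=0):
    """Baseline apex spotting (assumed, not the patent's algorithm).

    Returns the index of the frame that differs most from the onset frame,
    together with the per-frame difference scores.
    """
    frames = np.asarray(frames, dtype=np.float64)
    onset = frames[onset_index]
    # Mean absolute difference of every frame against the onset frame.
    scores = np.abs(frames - onset).reshape(len(frames), -1).mean(axis=1)
    return int(np.argmax(scores)), scores
```

Raw pixel differences are sensitive to lighting changes and residual head motion, so practical spotting methods typically compare optical-flow or texture features instead; this sketch only illustrates the onset-versus-apex relationship defined above.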

Embodiment 2

[0089] To verify the effectiveness of the micro-expression recognition method provided by the present invention, this embodiment pre-trains the ME-Xception network model on the CK+ macro-expression dataset and then performs Leave-One-Subject-Out (LOSO) experiments on the CASME II, SAMM, and SMIC datasets respectively. In LOSO, each subject's samples are held out in turn as the test set while the model is trained on the samples of all remaining subjects. CASME II is a spontaneous micro-expression dataset proposed in 2014 by Fu Xiaolan's team at the Institute of Psychology, Chinese Academy of Sciences. The SMIC spontaneous micro-expression dataset was designed and collected in 2012 by Zhao Guoying's team at the University of Oulu, Finland. The SAMM spontaneous micro-expression dataset was proposed in 2018 by Moi Hoon Yap's research team at Manchester Metropolitan University.
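The LOSO protocol can be expressed as a generator of train/test index splits, one fold per subject, so that no subject ever appears in both the training and test sets of the same fold. A minimal sketch, assuming each sample is tagged with a subject identifier; the function name `loso_splits` is hypothetical. scikit-learn's `LeaveOneGroupOut` in `sklearn.model_selection` provides equivalent splitting when subject IDs are passed as `groups`.

```python
from collections import defaultdict
from typing import Dict, Hashable, Iterator, List, Sequence, Tuple


def loso_splits(
    subject_ids: Sequence[Hashable],
) -> Iterator[Tuple[Hashable, List[int], List[int]]]:
    """Yield (held_out_subject, train_indices, test_indices) for each subject."""
    groups: Dict[Hashable, List[int]] = defaultdict(list)
    for idx, sid in enumerate(subject_ids):
        groups[sid].append(idx)
    # Deterministic fold order, independent of sample order.
    for held_out in sorted(groups, key=str):
        test_idx = groups[held_out]
        train_idx = [i for i, sid in enumerate(subject_ids) if sid != held_out]
        yield held_out, train_idx, test_idx
```

For example, with subject IDs `["s1", "s2", "s1", "s3"]` the first fold holds out `s1`, training on samples 1 and 3 and testing on samples 0 and 2.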

[0090] In this embodiment, the micro-expression video samples are divided into three categories: negative, positive, and surprise. Of these, the negative micro-expression la...
