Systematic target identification and tracking method based on multi-modal data

A target recognition and tracking method using multi-modal technology, applicable to image data processing, instruments, computing and related fields. It addresses problems such as missing laser points, increasingly sparse point clouds, redundant recognition systems, increased computing load and the many unknown interferences in the environment, with the effect of overcoming the large computational load and enhancing adaptability.

Embodiment Construction

[0038] The present invention will be described in detail below with reference to the accompanying drawings and examples.

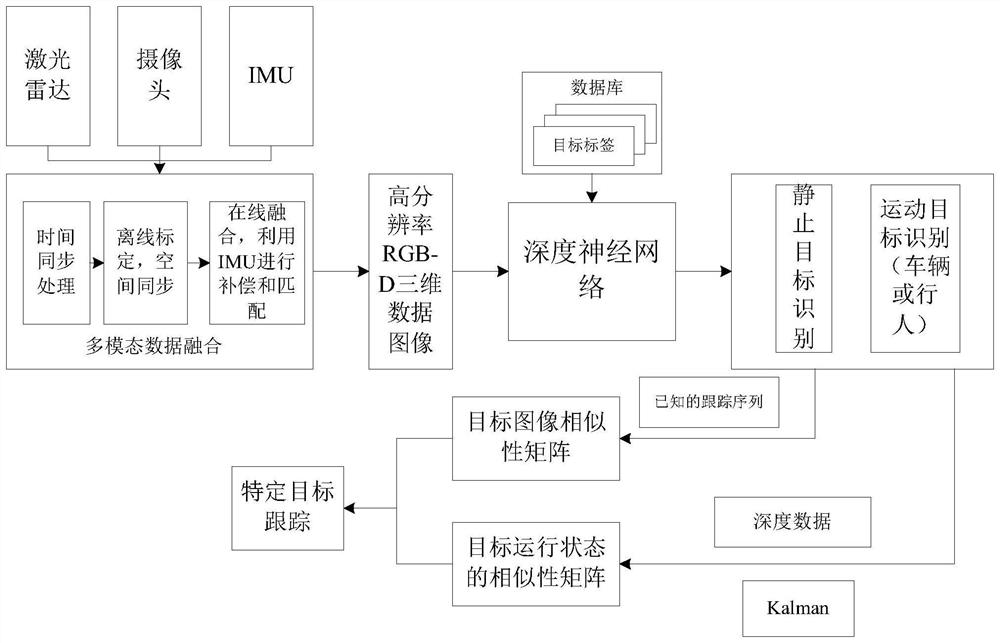

[0039] This embodiment provides a systematic target recognition and tracking method based on multi-modal data (see attached Figure 1). The specific steps are as follows:

[0040] Step 1: The ground unmanned platform carries a lidar, a camera and an inertial measurement unit (IMU); the IMU may or may not be equipped with GPS. Laser-ranging point cloud data of the environment are collected, image data of the environment around the ground unmanned platform are collected through the camera, and pose data of the ground unmanned platform are collected through the IMU. The point cloud data, image data and pose data together constitute the multi-modal data;

[0041] Step 2: Time synchronization is performed on the collected multi-modal data to ensure that the data from all modalities are consistent in time;

[0042] Step 3: Use the offline...
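The text does not state how the time synchronization in Step 2 is carried out. As an illustration only, one common approach is nearest-timestamp matching: each lidar sweep is paired with the camera frame and IMU sample closest to it in time, and sweeps with no partner within a tolerance are dropped. The sketch below assumes that approach; the function names `nearest_index` and `synchronize`, the `max_offset` tolerance and the choice of the lidar as the reference sensor are all hypothetical and are not taken from the patent.

```python
from bisect import bisect_left
from typing import List, Tuple


def nearest_index(timestamps: List[float], t: float) -> int:
    """Return the index of the entry in `timestamps` closest to `t`.

    `timestamps` must be non-empty and sorted in ascending order.
    """
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    # Choose whichever neighbour is closer to t.
    return i if timestamps[i] - t < t - timestamps[i - 1] else i - 1


def synchronize(
    lidar_ts: List[float],
    camera_ts: List[float],
    imu_ts: List[float],
    max_offset: float = 0.05,  # assumed tolerance in seconds
) -> List[Tuple[int, int, int]]:
    """Pair each lidar sweep with the nearest camera frame and IMU sample.

    Returns (lidar_index, camera_index, imu_index) triples. A lidar sweep
    is dropped if either partner is further away than `max_offset`.
    """
    if not camera_ts or not imu_ts:
        return []
    matches = []
    for li, t in enumerate(lidar_ts):
        ci = nearest_index(camera_ts, t)
        ii = nearest_index(imu_ts, t)
        if abs(camera_ts[ci] - t) <= max_offset and abs(imu_ts[ii] - t) <= max_offset:
            matches.append((li, ci, ii))
    return matches


# Example: the last lidar sweep has no camera frame nearby, so it is dropped.
lidar = [0.0, 0.1, 0.2, 1.0]
camera = [0.01, 0.11, 0.19]
imu = [0.0, 0.05, 0.1, 0.15, 0.2]
triples = synchronize(lidar, camera, imu, max_offset=0.03)
```

Each returned triple indexes one time-consistent set of point cloud, image and pose data. Because the IMU usually samples much faster than the lidar or camera, interpolating the pose to the lidar timestamp would be a natural refinement over picking the nearest sample.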
