FPGA (field programmable gate array)-based universal fixed-point-number neural network convolution accelerator hardware structure

A neural network hardware structure technology, applied in the fields of electronic information and deep learning. It addresses the problems of complex read/write control logic, difficult FPGA design, and non-reusable logic, and achieves accurate data precision, high computing speed, and portability, with high flexibility and low design complexity.


Embodiment Construction

[0023] Embodiments of the present invention will be further described below in conjunction with the accompanying drawings. Examples of the embodiments are shown in the accompanying drawings, wherein the same or similar reference numerals represent the same or similar elements or elements with similar functions. The embodiments described below by referring to the figures are exemplary and are intended to explain the present invention and should not be construed as limiting the present invention.

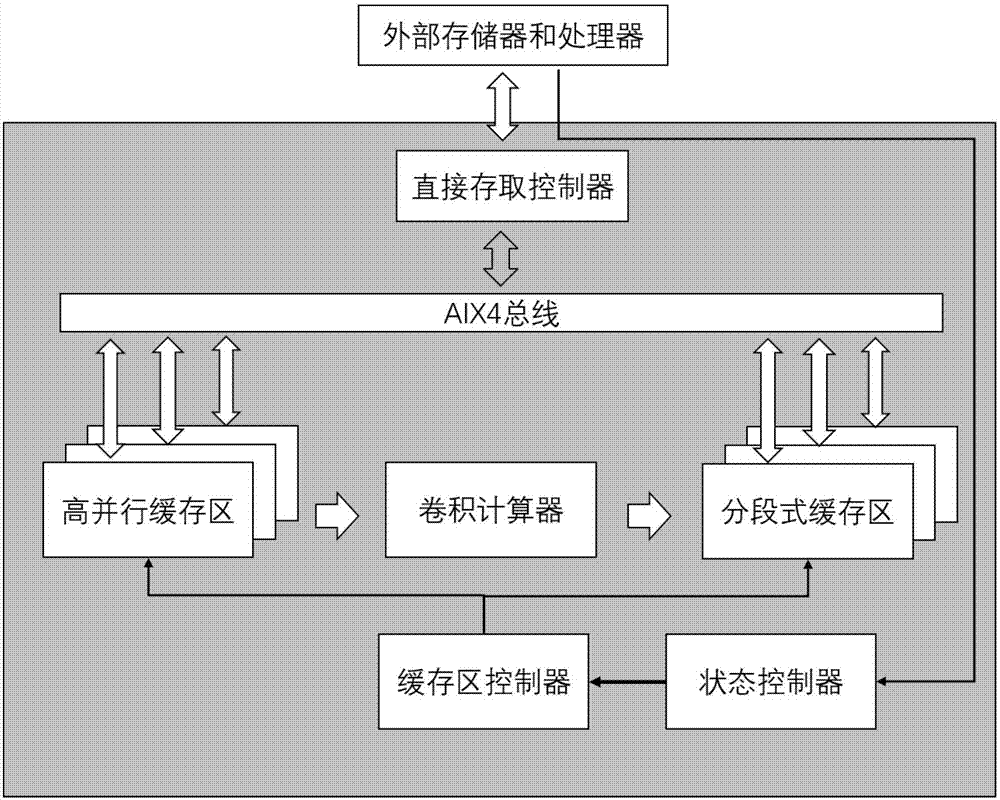

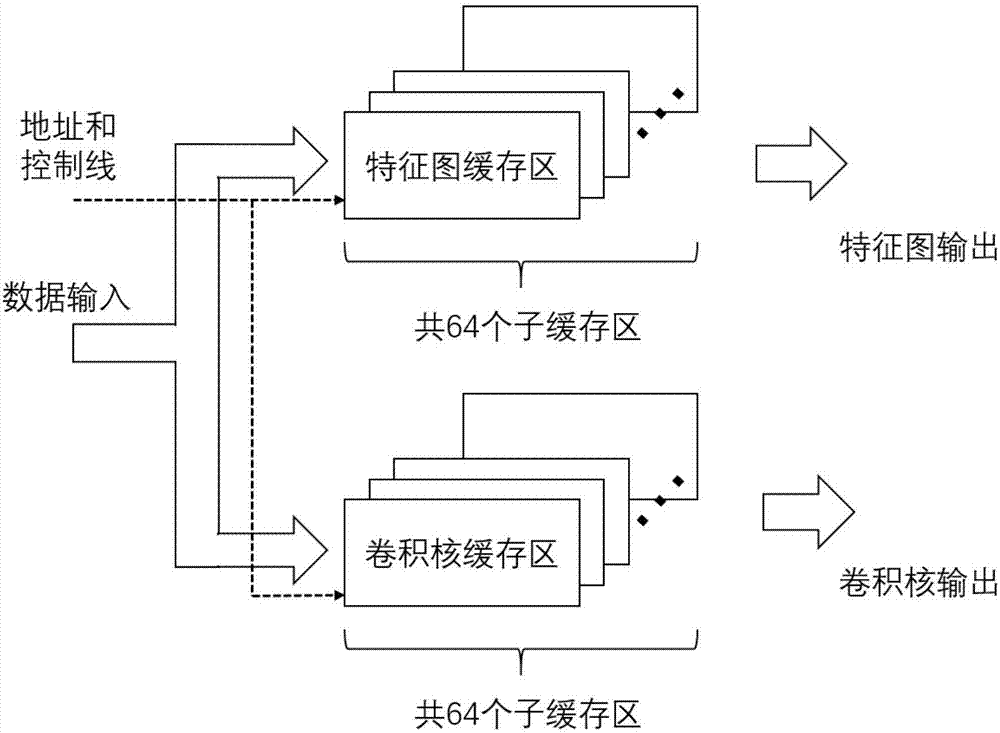

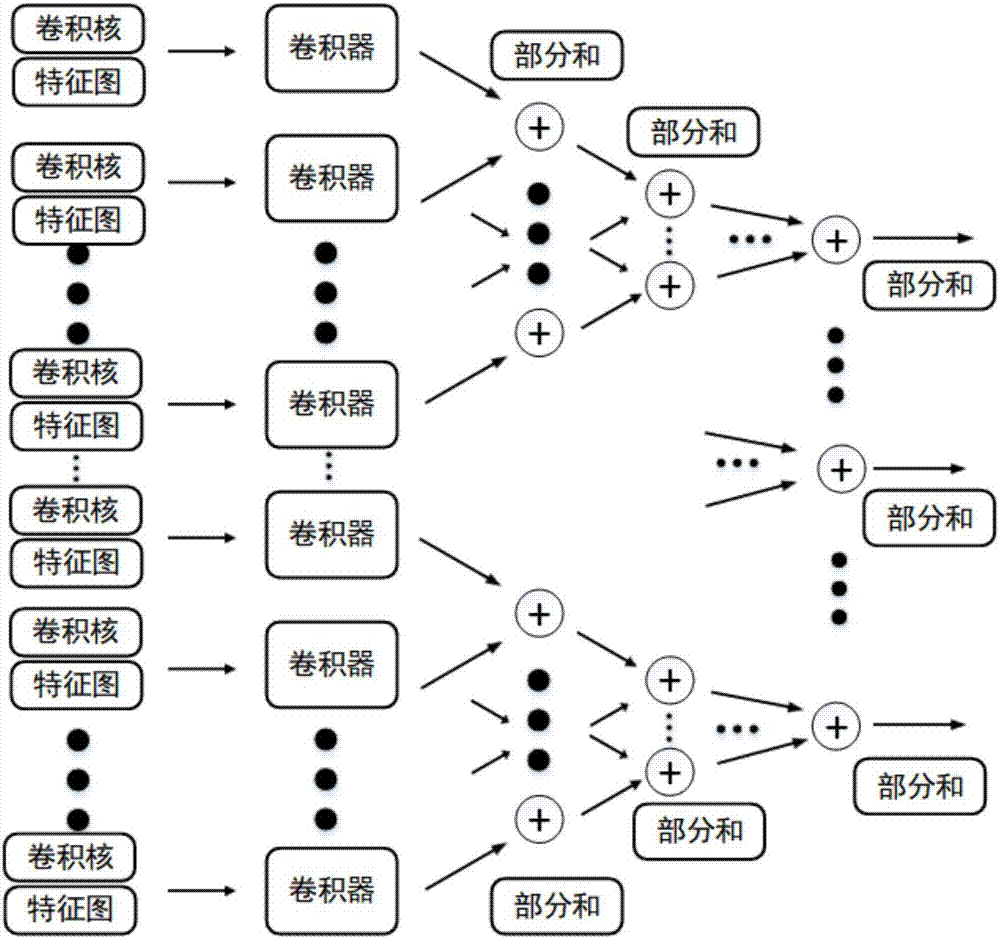

[0024] The hardware structure of the FPGA-based general-purpose fixed-point neural network convolution accelerator proposed according to an embodiment of the present invention is described below with reference to the accompanying drawings. As shown in Figure 1, the FPGA-based general-purpose fixed-point neural network convolution accelerator hardware structure includes: a direct access controller, an AXI4 bus interface protocol, a high parallel buffer area (highly parall...
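The following is a minimal software reference model of a fixed-point 2-D convolution. It illustrates the arithmetic that a fixed-point convolution accelerator of this kind performs, and models like it are commonly used to check FPGA output bit for bit. It is a sketch under stated assumptions, not the patented design:

- the Q8.8 format (16-bit, 8 fractional bits), the round-half-up requantization, and saturation to int16 are all assumptions;
- the function names (`to_fixed`, `to_float`, `requantize`, `conv2d_fixed`) are hypothetical and do not come from the patent text;
- as in most CNN frameworks, the "convolution" is computed as a cross-correlation (no kernel flip).

```python
# Hypothetical bit-accurate reference model for a fixed-point 2-D convolution.
# ASSUMPTIONS (not from the patent): signed 16-bit Q8.8 operands, a wide
# accumulator, round-half-up requantization, and saturation to int16.

FRAC_BITS = 8                                 # assumed fractional bits (Q8.8)
INT16_MIN, INT16_MAX = -(1 << 15), (1 << 15) - 1


def to_fixed(x: float) -> int:
    """Quantize a real value to Q8.8 (Python round(): ties to even), saturating."""
    q = int(round(x * (1 << FRAC_BITS)))
    return max(INT16_MIN, min(INT16_MAX, q))


def to_float(q: int) -> float:
    """Convert a Q8.8 integer back to a real value."""
    return q / (1 << FRAC_BITS)


def requantize(acc: int) -> int:
    """Map a Q16.16 sum of products back to Q8.8: round half up, shift, saturate."""
    acc += 1 << (FRAC_BITS - 1)   # add half an LSB before the arithmetic shift
    acc >>= FRAC_BITS             # Python >> on negatives is an arithmetic (floor) shift
    return max(INT16_MIN, min(INT16_MAX, acc))


def conv2d_fixed(image, kernel):
    """Valid (no padding), stride-1 2-D cross-correlation on lists of Q8.8 ints.

    Each output pixel is one multiply-accumulate (MAC) loop, the operation an
    accelerator would unroll across parallel processing elements.
    """
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for r in range(ih - kh + 1):
        row = []
        for c in range(iw - kw + 1):
            acc = 0  # unbounded here; hardware must size this register explicitly
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(requantize(acc))
        out.append(row)
    return out


if __name__ == "__main__":
    img = [[to_fixed(v) for v in row] for row in [[1, 2, 3], [4, 5, 6], [7, 8, 9]]]
    ker = [[to_fixed(v) for v in row] for row in [[1, 0], [0, -1]]]
    print([[to_float(v) for v in row] for row in conv2d_fixed(img, ker)])
    # -> [[-4.0, -4.0], [-4.0, -4.0]]
```

Keeping the product sum at full Q16.16 precision and rounding only once per output pixel limits quantization error. That is one common way a fixed-point datapath keeps data precision close to a floating-point result. Whether the patented accelerator uses this exact scheme is not stated in the available text.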
